Geometry

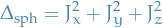

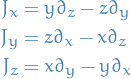

Table of Contents

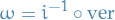

- Notation

- Stuff

- Definitions

- Words

- Regular curves

- Level set

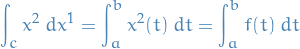

- Arc-length

- Curvature

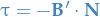

- Torsion

- Isometry

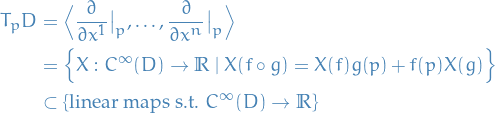

- Tangent spaces

- Tangent bundle

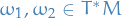

- Cotangent bundle

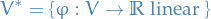

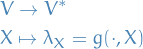

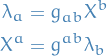

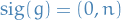

- Dual space

- 1-forms

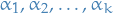

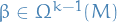

- k-form

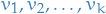

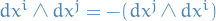

- Wedge product

- Multi-index

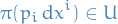

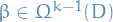

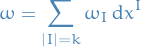

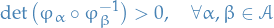

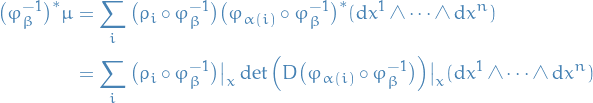

- Differential k-form

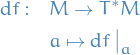

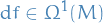

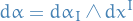

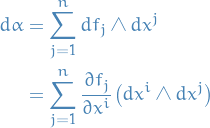

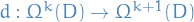

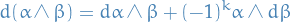

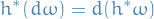

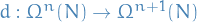

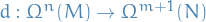

- Exterior derivative

- Integration in Rn

- Topological space

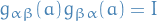

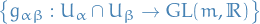

- Atlases & coordinate charts

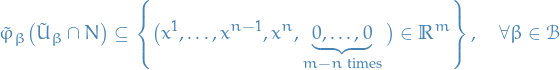

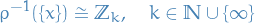

- Manifolds

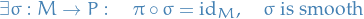

- Diffeomorphism

- Isometric

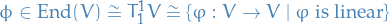

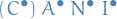

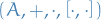

- Algebra

- Equations / Theorems

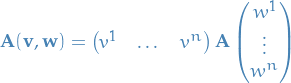

- Change of basis

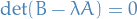

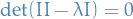

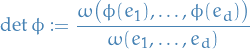

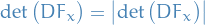

- Determinants

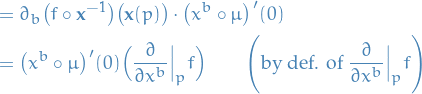

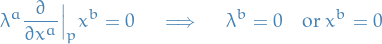

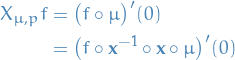

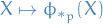

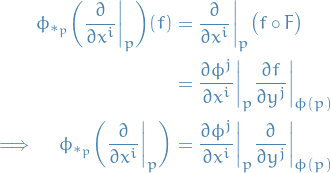

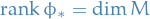

- Tangent space and manifolds

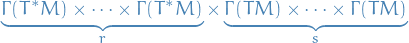

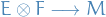

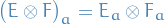

- Tensor Fields and Modules

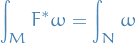

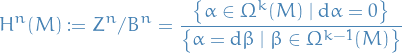

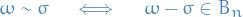

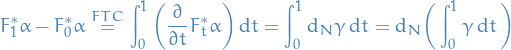

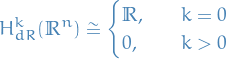

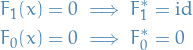

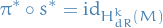

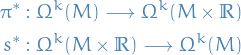

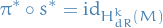

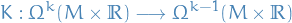

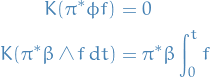

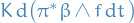

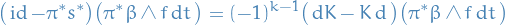

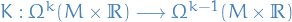

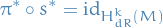

- Grassmann algebra and de Rham cohomology

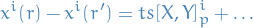

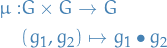

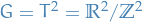

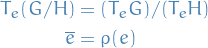

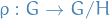

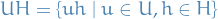

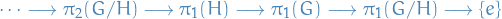

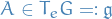

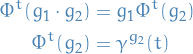

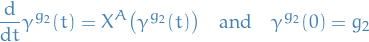

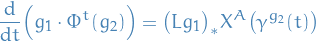

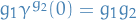

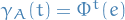

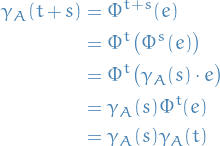

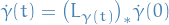

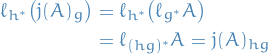

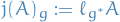

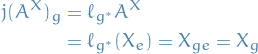

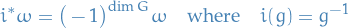

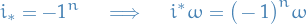

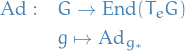

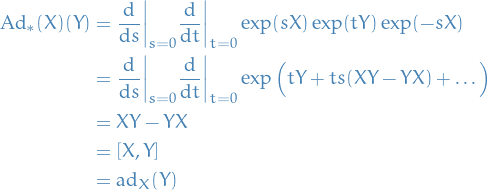

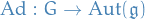

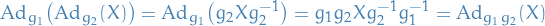

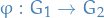

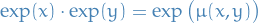

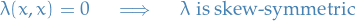

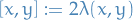

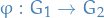

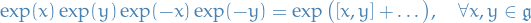

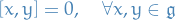

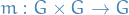

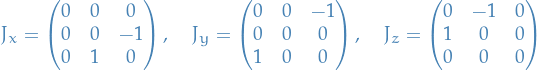

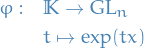

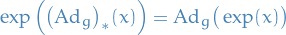

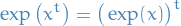

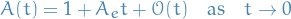

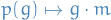

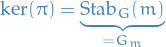

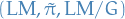

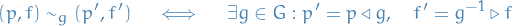

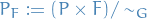

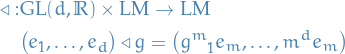

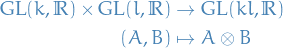

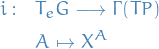

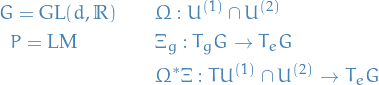

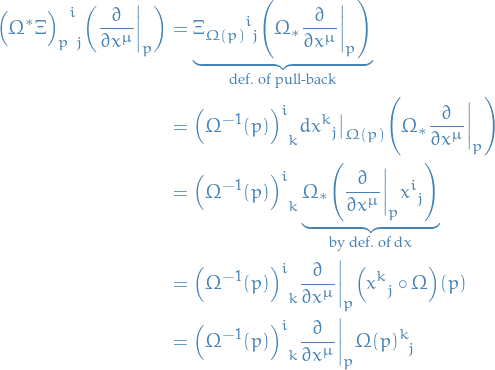

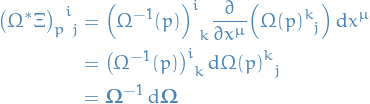

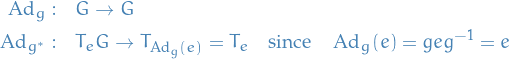

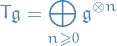

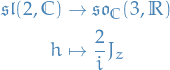

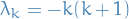

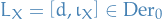

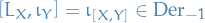

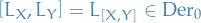

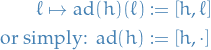

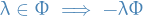

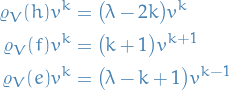

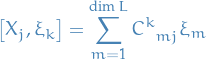

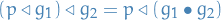

- Lie Theory

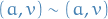

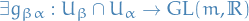

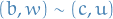

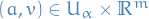

- Notation

- Stuff

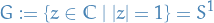

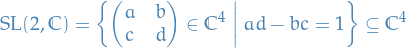

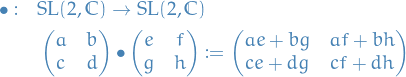

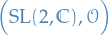

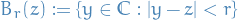

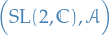

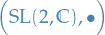

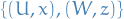

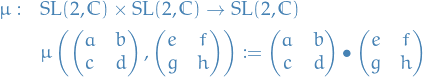

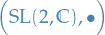

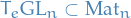

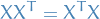

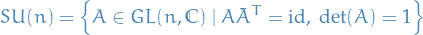

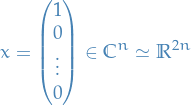

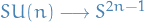

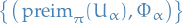

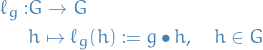

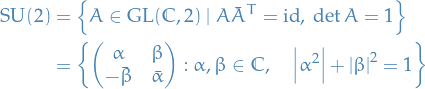

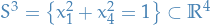

- Examples of Lie groups

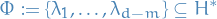

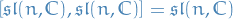

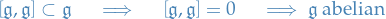

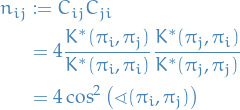

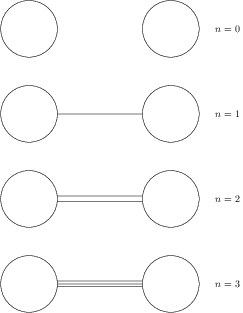

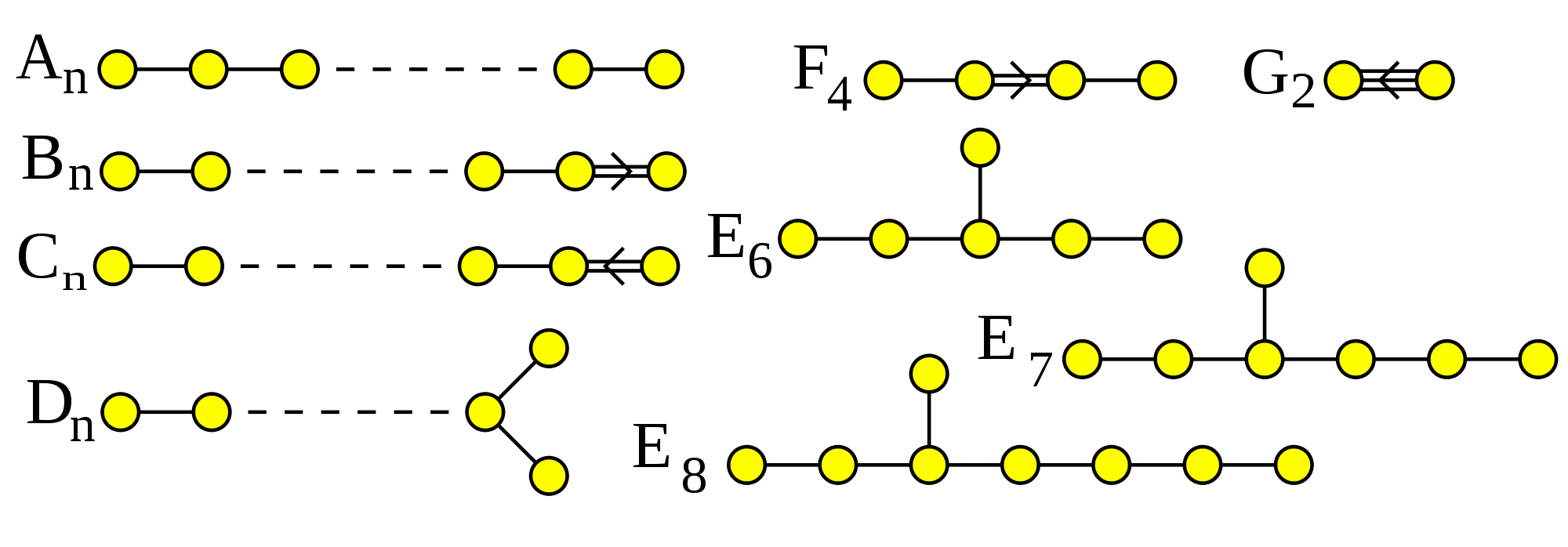

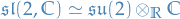

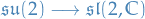

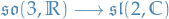

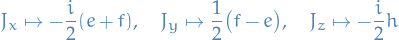

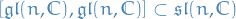

- Classification of Lie Algebras

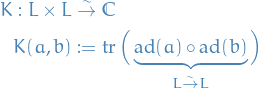

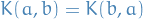

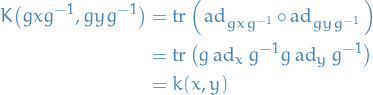

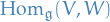

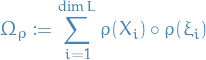

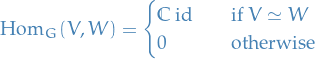

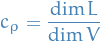

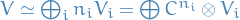

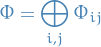

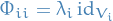

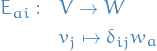

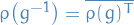

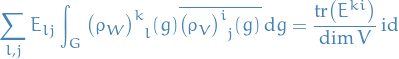

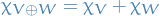

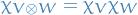

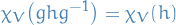

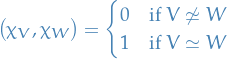

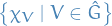

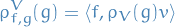

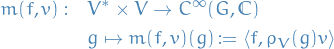

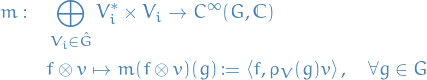

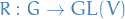

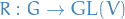

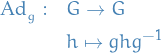

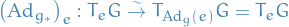

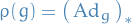

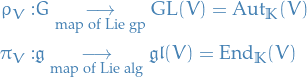

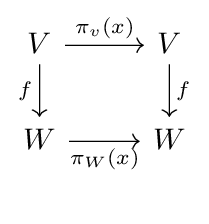

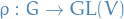

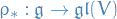

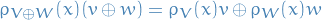

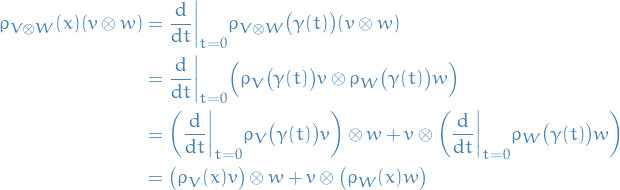

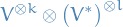

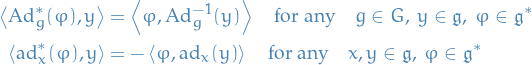

- Representation Theory of Lie groups and Lie algebras

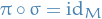

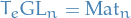

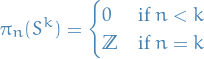

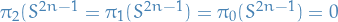

- Reconstruction of Lie group from its Lie algebra

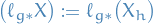

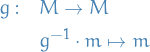

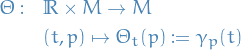

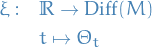

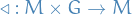

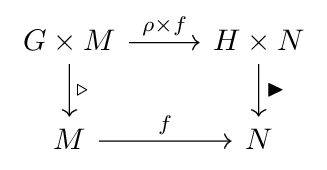

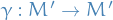

- Lie group action on a manifold

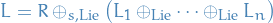

- Structure theory of Lie algebras

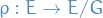

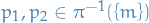

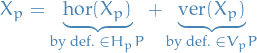

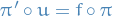

- Parallel Transport

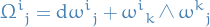

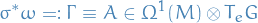

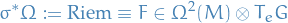

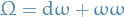

- Curvature and torsion (on principal G-bundles)

- TODO Covariant derivatives

- Spinors on curved spaces

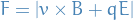

- Relating to Quantum Mechanics

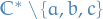

- Complex dynamics

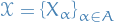

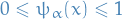

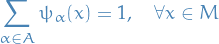

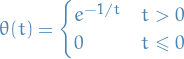

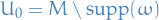

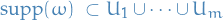

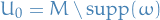

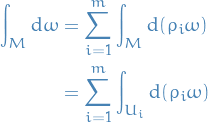

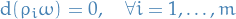

- Partitions of Unity

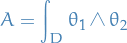

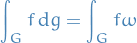

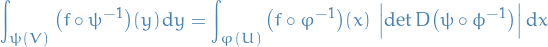

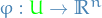

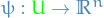

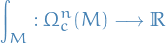

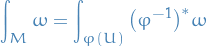

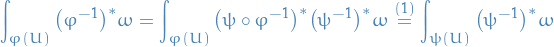

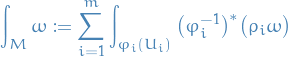

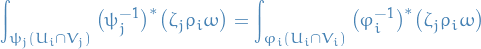

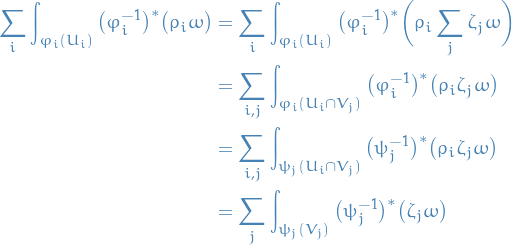

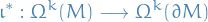

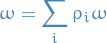

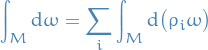

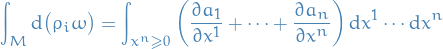

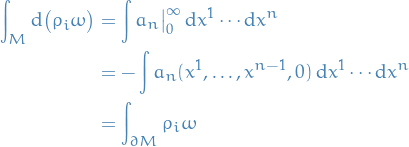

- Integration

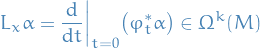

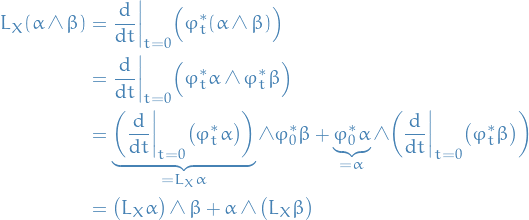

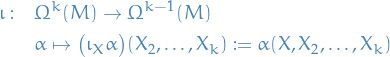

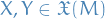

- Cartan calculus

- Temp

- Q & A

- Bibliography

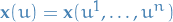

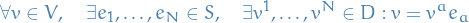

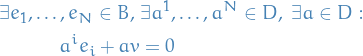

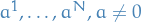

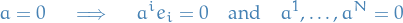

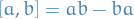

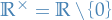

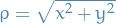

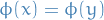

Notation

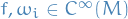

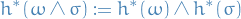

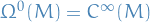

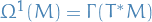

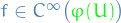

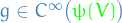

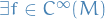

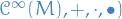

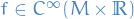

denotes the space of all functions which have continuous derivatives to the k-th order

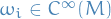

denotes the space of all functions which have continuous derivatives to the k-th order- smooth means

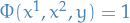

, i.e. infitively differentiable, more specificely,

, i.e. infitively differentiable, more specificely,  means all infitively differentiable functions with domain

means all infitively differentiable functions with domain

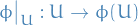

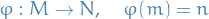

- Maps are assumed to be smooth unless stated otherwise, i.e. partial derivatives of every order exist and are continuous on their domain

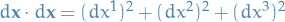

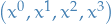

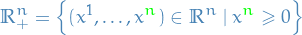

- Euclidean space $\mathbb{E}^n$: the set $\mathbb{R}^n$ together with its natural vector space operations and the standard inner product
- sub-scripts (e.g. basis vectors $\mathbf{e}_i$ for $V$ and coeffs. $w_i$ for $V^*$) are co-variant
- super-scripts (e.g. basis vectors $\mathbf{e}^i$ for $V^*$ and coeffs. $v^i$ for $V$) are contra-variant

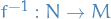

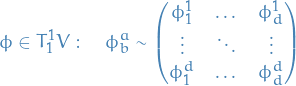

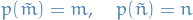

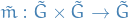

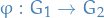

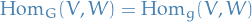

) are contra-variant  where

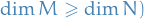

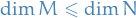

where  are manifolds, uses the

are manifolds, uses the  to refer to a linear map from

to refer to a linear map from  to

to

Stuff

Curves

Examples

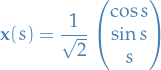

Helix

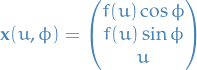

The helix in $\mathbb{E}^3$ is defined by

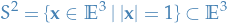

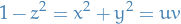
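As a numerical sanity check, here is a minimal Python sketch. It assumes the common parametrisation $\boldsymbol{\gamma}(t) = (a \cos t, a \sin t, b t)$ with $a > 0$ (an assumption, since the defining equation is not reproduced in the text) and evaluates the standard formulas $\kappa = |\boldsymbol{\gamma}' \times \boldsymbol{\gamma}''| / |\boldsymbol{\gamma}'|^3$ and $\tau = (\boldsymbol{\gamma}' \times \boldsymbol{\gamma}'') \cdot \boldsymbol{\gamma}''' / |\boldsymbol{\gamma}' \times \boldsymbol{\gamma}''|^2$. Both should be constant along the helix, equal to $a/(a^2 + b^2)$ and $b/(a^2 + b^2)$. The helper names are made up for this example.

```python
import math

def cross(p, q):
    """Cross product of two 3-vectors given as tuples."""
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])

def dot(p, q):
    """Euclidean inner product of two 3-vectors."""
    return sum(pi * qi for pi, qi in zip(p, q))

def helix_derivatives(a, b, t):
    """Exact first, second and third derivatives of (a cos t, a sin t, b t)."""
    d1 = (-a * math.sin(t), a * math.cos(t), b)
    d2 = (-a * math.cos(t), -a * math.sin(t), 0.0)
    d3 = (a * math.sin(t), -a * math.cos(t), 0.0)
    return d1, d2, d3

def curvature_and_torsion(a, b, t):
    """kappa = |g' x g''| / |g'|^3 and tau = (g' x g'') . g''' / |g' x g''|^2."""
    d1, d2, d3 = helix_derivatives(a, b, t)
    c = cross(d1, d2)
    speed = math.sqrt(dot(d1, d1))
    kappa = math.sqrt(dot(c, c)) / speed ** 3
    tau = dot(c, d3) / dot(c, c)
    return kappa, tau

a, b = 2.0, 1.0
for t in (0.0, 1.0, 2.5):
    print(t, curvature_and_torsion(a, b, t))
# Closed forms for comparison: a / (a^2 + b^2) and b / (a^2 + b^2).
print(a / (a ** 2 + b ** 2), b / (a ** 2 + b ** 2))
```

With $a = 2$, $b = 1$ every $t$ gives $\kappa = 0.4$ and $\tau = 0.2$ up to rounding; a negative $b$ flips the sign of the torsion (the helix turns the other way).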

Constructing a sphere in Euclidean space

I suggest having a look at this page in the notes

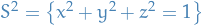

The surface of a sphere in Euclidean space is defined as:

$$S^2 = \{ \mathbf{x} \in \mathbb{E}^3 : |\mathbf{x}| = 1 \}$$

As it turns out, $S^2$ is not a vector space. How do you define vectors on this spherical surface $S^2$?

Defining vectors on spherical surface

At each point on $S^2$, construct a whole plane which is tangent to the sphere, called the tangent plane.

This plane $T_pS^2$ is the two-dimensional vector space of vectors tangent to the sphere at the given point $p$; these are called tangent vectors.

Each point on the sphere $S^2$ defines a different tangent plane. This leads to the notion of a vector field, which is a rule for smoothly assigning a tangent vector to each point on $S^2$.

The above description of the tangent planes of $S^2$ is valid everywhere, and so we refer to it as a global description.

Usually, we don't have this luxury. Then we parametrise a number of "patches" of the surface using coordinates, in such a way that the patches cover the whole surface. We refer to this as a local description.

Motivation

The tangent space $T_pS^2$ at some point $p$ on the 2-sphere is a function of the point $p$.

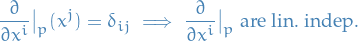

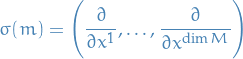

The issue with the 2-sphere is that we cannot obtain a global (smooth) basis of tangent vectors for the surface: by the hairy ball theorem, every smooth vector field on $S^2$ vanishes somewhere. We therefore want to think about the operations which do not depend on having a basis. The derivatives $\partial / \partial x^i$ give a way of doing this, since they are linearly independent.

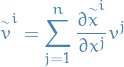

Ricci calculus and Einstein summation

This is the reason why we use superscripts to index our coordinates, $x^i$.

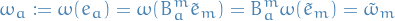

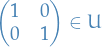

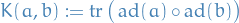

Suppose that I have a vector space  and dual space

and dual space  . A choice of basis

. A choice of basis  for

for  induces a dual basis

induces a dual basis  on the dual vector space

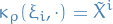

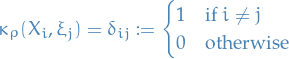

on the dual vector space  , determined by the rule

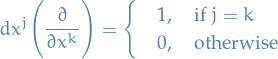

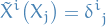

, determined by the rule

where  is the Kronecker delta.

is the Kronecker delta.

Any element  of

of  or

or  of

of  can be written as lin. comb. of these basis vectors:

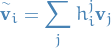

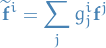

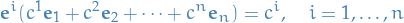

can be written as lin. comb. of these basis vectors:

and we have  .

.

If we do a change of basis of $V$, which induces a change of basis for $V^*$, then the coefficients of a vector in $V$ transform in the same way as the basis vectors of $V^*$ and, vice versa, the coefficients of a vector in $V^*$ transform in the same way as the basis vectors of $V$.

Suppose a new basis for $V$ is given by $\{\tilde{\mathbf{e}}_i\}$, with

$$\tilde{\mathbf{e}}_i = A^j_i\, \mathbf{e}_j,$$

where the $A^j_i$ are the coefficients of the invertible change-of-basis matrix, and $(A^{-1})^i_j$ are the coefficients of its inverse (i.e. $(A^{-1})^i_k A^k_j = \delta^i_j$). If we denote the new induced dual basis for $V^*$ by $\{\tilde{\mathbf{e}}^i\}$, we have

$$\tilde{\mathbf{e}}^i = (A^{-1})^i_j\, \mathbf{e}^j.$$

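Moreover, for any elements $v$ of $V$ and $w$ of $V^*$, which we can write as

$$v = v^i \mathbf{e}_i = \tilde{v}^i \tilde{\mathbf{e}}_i, \qquad w = w_i \mathbf{e}^i = \tilde{w}_i \tilde{\mathbf{e}}^i,$$

we have

$$\tilde{v}^i = (A^{-1})^i_j\, v^j, \qquad \tilde{w}_i = A^j_i\, w_j.$$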

See how the order of the indices is different?

The entities $\mathbf{e}_i$ and $w_i$ are co-variant.

The entities $\mathbf{e}^i$ and $v^i$ are contra-variant.

One-forms are sometimes referred to as co-vectors, because their coefficients transform in a co-variant way.
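The transformation rules can be checked numerically. The sketch below (plain Python, with an arbitrarily chosen invertible matrix $A$ and arbitrary components, all assumptions for illustration) stores $A^j_i$ as `A[j][i]`, transforms $v^i$ with $A^{-1}$ and $w_i$ with $A$, rebuilds $v$ from its new components, and confirms that the pairing $w(v) = w_i v^i$ is the same in both bases.

```python
# Worked check of the change-of-basis rules in V = R^2 (illustrative numbers).
# Convention: A[j][i] stores A^j_i, so the new basis is  e~_i = A^j_i e_j.
A = [[2.0, 1.0],
     [1.0, 1.0]]

def inverse_2x2(m):
    """Inverse of an invertible 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]

Ainv = inverse_2x2(A)  # Ainv[i][j] stores (A^{-1})^i_j

v = [3.0, -1.0]  # contra-variant components v^j of a vector, old basis
w = [0.5, 2.0]   # co-variant components w_j of a one-form, old dual basis

# v~^i = (A^{-1})^i_j v^j : components of v transform with the inverse matrix
v_new = [sum(Ainv[i][j] * v[j] for j in range(2)) for i in range(2)]
# w~_i = A^j_i w_j : components of w transform like the basis vectors e_i
w_new = [sum(A[j][i] * w[j] for j in range(2)) for i in range(2)]

# Rebuild v from the new components: v = v~^i e~_i, where e~_i has old components A^j_i.
v_rebuilt = [sum(A[j][i] * v_new[i] for i in range(2)) for j in range(2)]

# The pairing w(v) = w_i v^i does not depend on the choice of basis.
old_pairing = sum(w[i] * v[i] for i in range(2))
new_pairing = sum(w_new[i] * v_new[i] for i in range(2))
print(v_new, w_new, v_rebuilt)
print(old_pairing, new_pairing)
```

Both pairings come out as -0.5; transforming $v^i$ with $A$ instead of $A^{-1}$ would in general break this invariance.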

The notation then goes:

- sub-scripts (e.g. basis vectors $\mathbf{e}_i$ for $V$ and coeffs. $w_i$ for $V^*$) are co-variant
- super-scripts (e.g. basis vectors $\mathbf{e}^i$ for $V^*$ and coeffs. $v^i$ for $V$) are contra-variant

Very important: super-script indices in the denominator are understood to be lower indices, i.e. a contra-variant (super-script) index in the denominator, as in $\partial / \partial x^i$, counts as co-variant.

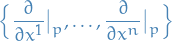

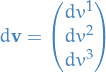

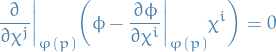

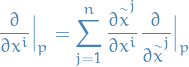

Now, consider this notation for our definition of tangent space and dual space:

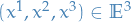

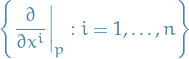

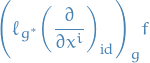

If you choose coordinates $x^1, \dots, x^n$ on an open set $U$ containing a point $p$, then you get a basis for the tangent space $T_pM$ at $p$:

$$\frac{\partial}{\partial x^1}\bigg|_p, \dots, \frac{\partial}{\partial x^n}\bigg|_p,$$

which have super-scripts in the denominator, indicating a co-variant entity (see note).

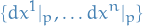

Similarly, we get a basis for the cotangent space $T_p^*M$ at $p$:

$$dx^1\big|_p, \dots, dx^n\big|_p,$$

which have super-script indices, indicating a contra-variant entity.

Why did we decide the first case is the co-variant (co- and contra- are of course relative)?

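Because the naming is relative to the basis: co-variant entities transform in the same way as the basis vectors, while contra-variant entities transform in the inverse way. In differential geometry we choose the coordinate basis vectors $\partial / \partial x^i$ as our "relative thingy", so anything transforming like them is co-variant, while the coordinate differentials $dx^i$ (and vector components $v^i$) are contra-variant.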

Differential forms

Differential forms are an approach to multivariable calculus which is independent of coordinates.

Surfaces

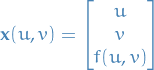

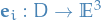

Notation

- $D$ is the domain in the plane whose Cartesian coordinates will be denoted $(u, v)$ unless otherwise stated
- $\mathbf{x}(D)$ denotes the image of the smooth, injective map $\mathbf{x}: D \to \mathbb{E}^3$

Regular surfaces

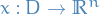

A local surface in $\mathbb{E}^3$ is a smooth, injective map $\mathbf{x}: D \to \mathbb{E}^3$ with a continuous inverse $\mathbf{x}^{-1}: \mathbf{x}(D) \to D$. Sometimes we denote the image $\mathbf{x}(D)$ by $S$.

The assumption that $\mathbf{x}$ is injective means that points in the image $\mathbf{x}(D)$ are uniquely labelled by points in $D$.

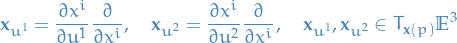

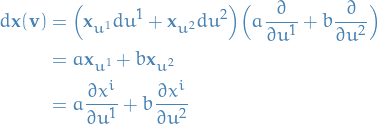

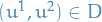

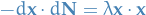

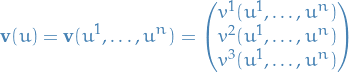

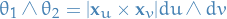

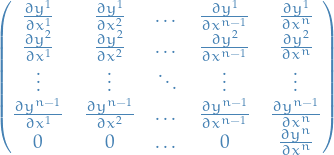

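Given a local surface we define

$$\mathbf{x}_u = \frac{\partial \mathbf{x}}{\partial u}, \qquad \mathbf{x}_v = \frac{\partial \mathbf{x}}{\partial v}.$$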

For every point $(u, v) \in D$, these are vectors in $T_{\mathbf{x}(u,v)}\mathbb{E}^3$, which we will identify with $\mathbb{E}^3$ itself.

We say that a local surface $\mathbf{x}$ is regular at $(u, v)$ if $\mathbf{x}_u$ and $\mathbf{x}_v$ are linearly independent.

A local surface is regular if it is regular at $(u, v)$ for all $(u, v) \in D$.

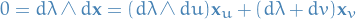

This gives rise to the differential form $d\mathbf{x}$:

$$d\mathbf{x} = \mathbf{x}_u\, du + \mathbf{x}_v\, dv$$

Here is a quick example of evaluating the differential form induced by the definition of a regular local surface:

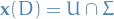

$S \subset \mathbb{E}^3$ is a regular surface if for each $p \in S$ there exists a regular local surface $\mathbf{x}: D \to \mathbb{E}^3$ such that $p \in \mathbf{x}(D)$ and $\mathbf{x}(D) = S \cap W$ for some open set $W \subset \mathbb{E}^3$.

In other words, if for each point $p$ on the surface we can construct a regular local surface covering a neighbourhood of $p$ in $S$, then the entire surface is said to be regular.

A map $\mathbf{x}: D \to \mathbb{E}^3$ which defines a local surface that is part of some surface $S$ is sometimes called a coordinate chart on $S$.

Thus, if the surface $S$ is a regular surface (not just locally regular), we can "define" $S$ from the set of all these coordinate charts.

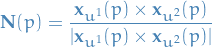

At a regular point on a local surface, the plane spanned by $\mathbf{x}_u$ and $\mathbf{x}_v$ is the tangent plane to the surface at $\mathbf{x}(u, v)$, which we denote by $T_{\mathbf{x}(u,v)}S$. At a regular point, the unit normal to the surface is

$$\mathbf{n} = \frac{\mathbf{x}_u \times \mathbf{x}_v}{|\mathbf{x}_u \times \mathbf{x}_v|}.$$

Clearly, $\mathbf{n}$ is orthogonal to the tangent plane $T_{\mathbf{x}(u,v)}S$.

Given a regular local surface, the map $\mathbf{n}: D \to \mathbb{E}^3$ is a smooth function whose image lies in the unit sphere $S^2$. The map $\mathbf{n}: D \to S^2$ is called the local Gauss map.
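A small numerical sketch of these definitions, assuming central finite differences are accurate enough for illustration: it approximates $\mathbf{x}_u, \mathbf{x}_v$, tests regularity via $|\mathbf{x}_u \times \mathbf{x}_v| > 0$ and returns the unit normal $\mathbf{n}$, i.e. the value of the local Gauss map. The saddle $(u, v, u^2 - v^2)$ and the cone map are hypothetical examples; the cone parametrisation has $\mathbf{x}_v = \mathbf{0}$ at $u = 0$ (the apex) and so is not regular there.

```python
import math

def cross(p, q):
    """Cross product of two 3-vectors."""
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])

def dot(p, q):
    """Euclidean inner product."""
    return sum(pi * qi for pi, qi in zip(p, q))

def partials(x, u, v, h=1e-6):
    """Central-difference approximations of x_u and x_v at (u, v)."""
    xu = tuple((a - b) / (2 * h) for a, b in zip(x(u + h, v), x(u - h, v)))
    xv = tuple((a - b) / (2 * h) for a, b in zip(x(u, v + h), x(u, v - h)))
    return xu, xv

def unit_normal(x, u, v, tol=1e-12):
    """n = (x_u x x_v) / |x_u x x_v|; raises ValueError if x is not regular at (u, v)."""
    xu, xv = partials(x, u, v)
    c = cross(xu, xv)
    norm = math.sqrt(dot(c, c))
    if norm < tol:  # x_u and x_v are (numerically) linearly dependent
        raise ValueError("local surface is not regular at this point")
    return tuple(ci / norm for ci in c)

def saddle(u, v):
    """Example local surface: the graph of f(u, v) = u^2 - v^2."""
    return (u, v, u ** 2 - v ** 2)

def cone(u, v):
    """Example map that fails to be regular at u = 0 (the apex)."""
    return (u * math.cos(v), u * math.sin(v), u)

n = unit_normal(saddle, 0.3, -0.2)
xu, xv = partials(saddle, 0.3, -0.2)
print(n, dot(n, xu), dot(n, xv))  # unit vector orthogonal to x_u and x_v
```

For the saddle, $\mathbf{x}_u \times \mathbf{x}_v = (-f_u, -f_v, 1)$, so at $(0.3, -0.2)$ the normal is proportional to $(-0.6, -0.4, 1)$.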

Standard Surfaces

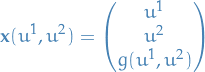

Let $f: D \to \mathbb{R}$ be a smooth function. The graph of $f$ is the local surface defined by

$$\mathbf{x}(u, v) = \big(u, v, f(u, v)\big).$$

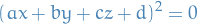

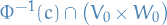

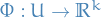

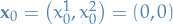

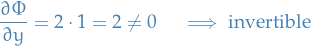

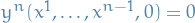

An implicitly defined surface $S$ is the zero set of a smooth function $f: \mathbb{E}^3 \to \mathbb{R}$, i.e.

$$S = \{ (x, y, z) \in \mathbb{E}^3 : f(x, y, z) = 0 \}.$$

Note that $f$ is a mapping $\mathbb{E}^3 \to \mathbb{R}$, and we're saying that the preimage $f^{-1}(0)$ defines a surface ($f$ itself need not be invertible). It's also important to note the smoothness requirement, as this implies that $f$ is differentiable.

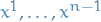

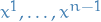

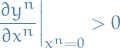

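An implicitly defined surface $S$ such that $\nabla f \neq \mathbf{0}$ everywhere on $S$ is a regular surface.

This follows from the implicit function theorem: if $\nabla f(p) \neq \mathbf{0}$ at $p \in S$, then some partial derivative of $f$, say $\partial f / \partial z$, is non-zero at $p$, so near $p$ the surface can be written as a graph $z = g(x, y)$ of a smooth function $g$. A graph $\mathbf{x}(u, v) = \big(u, v, g(u, v)\big)$ has $\mathbf{x}_u = (1, 0, g_u)$ and $\mathbf{x}_v = (0, 1, g_v)$, which are always linearly independent, so it is a regular local surface. (The converse does not hold: the unit sphere is also the zero set of $(x^2 + y^2 + z^2 - 1)^2$, whose gradient vanishes on the sphere.)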

A surface of revolution with profile curve $\big(f(u), g(u)\big)$ is a local surface of the form

$$\mathbf{x}(u, v) = \big(f(u) \cos v, f(u) \sin v, g(u)\big).$$

A surface of revolution can be constructed by rotating a curve around the $z$-axis in $\mathbb{E}^3$. It thus has cylindrical symmetry.
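For a surface of revolution one finds $|\mathbf{x}_u \times \mathbf{x}_v| = |f(u)| \sqrt{f'(u)^2 + g'(u)^2}$, so it is regular wherever the profile curve is regular and does not meet the $z$-axis. The sketch below checks this numerically with finite differences; the helper `revolution` and the torus profile $(2 + \cos u, \sin u)$ are illustrative choices, not taken from the notes.

```python
import math

def revolution(f, g):
    """Hypothetical helper: the surface of revolution (f(u) cos v, f(u) sin v, g(u))."""
    return lambda u, v: (f(u) * math.cos(v), f(u) * math.sin(v), g(u))

def cross_norm(x, u, v, h=1e-6):
    """|x_u x x_v| via central differences; zero means x is not regular at (u, v)."""
    xu = [(a - b) / (2 * h) for a, b in zip(x(u + h, v), x(u - h, v))]
    xv = [(a - b) / (2 * h) for a, b in zip(x(u, v + h), x(u, v - h))]
    c = (xu[1] * xv[2] - xu[2] * xv[1],
         xu[2] * xv[0] - xu[0] * xv[2],
         xu[0] * xv[1] - xu[1] * xv[0])
    return math.sqrt(sum(ci * ci for ci in c))

# Torus: rotate the circle (2 + cos u, sin u) about the z-axis.
torus = revolution(lambda u: 2.0 + math.cos(u), math.sin)
for u in (0.0, 1.0, 3.0):
    # For this profile f'^2 + g'^2 = 1, so the expected value is |f(u)| = 2 + cos u.
    print(u, cross_norm(torus, u, 0.4), 2.0 + math.cos(u))
```

Taking the profile $(\sin u, \cos u)$ instead gives the unit sphere, and at $u = 0$ (a pole, where the profile meets the axis) the function returns `0.0`.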

A ruled surface is a surface of the form

$$\mathbf{x}(u, v) = \boldsymbol{\gamma}(u) + v\, \boldsymbol{\delta}(u).$$

Notice that curves of constant $u$ are straight lines in $\mathbb{E}^3$ through $\boldsymbol{\gamma}(u)$ in the direction $\boldsymbol{\delta}(u)$.

Examples of surfaces

Quadratic surfaces

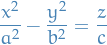

Quadratic surfaces are the graphs of any equation that can be put into the general form:

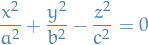

The general equation for a cone

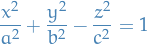

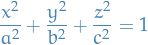

The general equation for a hyperboloid of one sheet

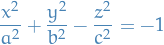

The general equation for a hyperboloid of two sheets

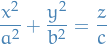

The general equation for an ellipsoid

$$\frac{x^2}{a^2} + \frac{y^2}{b^2} + \frac{z^2}{c^2} = 1,$$

with $a = b = c$ being a sphere.

General equation for an elliptic paraboloid

$$\frac{x^2}{a^2} + \frac{y^2}{b^2} = \frac{z}{c}$$

General equation for a hyperbolic paraboloid

$$\frac{x^2}{a^2} - \frac{y^2}{b^2} = \frac{z}{c}$$

Fundamental forms

Symmetric tensors

Notation

- $x^1, \dots, x^n$ are coordinates

Definitions

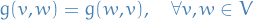

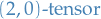

A (Riemannian) metric on $D$ is a symmetric tensor $g$ which is positive definite at each point: $g_p(X, X) \geq 0$ for all $X \in T_pD$, with equality if and only if $X = 0$.

Equivalently, it is a choice for each $p \in D$ of an inner product on $T_pD$.

First fundamental form

Notation

- $u$ and $v$ are our coordinates

Stuff

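The fact that $d\mathbf{x}_p: T_pD \to T_{\mathbf{x}(p)}S$ is a bijection precisely when $\mathbf{x}$ is regular at $p$ can be used to give a coordinate-free definition of regularity of a local surface.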

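Given a regular local surface $\mathbf{x}: D \to \mathbb{E}^3$, the first fundamental form is defined by

$$I = d\mathbf{x} \cdot d\mathbf{x} = E\, du^2 + 2F\, du\, dv + G\, dv^2,$$

where we have introduced the notation $E = \mathbf{x}_u \cdot \mathbf{x}_u$, $F = \mathbf{x}_u \cdot \mathbf{x}_v$ and $G = \mathbf{x}_v \cdot \mathbf{x}_v$.

The first fundamental form is a metric on $D$.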
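As an example, for the unit sphere parametrised as $\mathbf{x}(u, v) = (\sin u \cos v, \sin u \sin v, \cos u)$ (an assumed parametrisation) one gets $E = 1$, $F = 0$, $G = \sin^2 u$. The sketch approximates $\mathbf{x}_u, \mathbf{x}_v$ by central differences and evaluates $I(X, Y) = E X^u Y^u + F (X^u Y^v + X^v Y^u) + G X^v Y^v$; the function names are hypothetical.

```python
import math

def sphere(u, v):
    """Unit sphere, u = polar angle, v = azimuthal angle (example parametrisation)."""
    return (math.sin(u) * math.cos(v), math.sin(u) * math.sin(v), math.cos(u))

def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))

def first_fundamental_form(x, u, v, h=1e-5):
    """(E, F, G) at (u, v), using central differences for x_u and x_v."""
    xu = [(a - b) / (2 * h) for a, b in zip(x(u + h, v), x(u - h, v))]
    xv = [(a - b) / (2 * h) for a, b in zip(x(u, v + h), x(u, v - h))]
    return dot(xu, xu), dot(xu, xv), dot(xv, xv)

def evaluate_first_form(EFG, X, Y):
    """I(X, Y) for tangent vectors X = (X^u, X^v) and Y = (Y^u, Y^v)."""
    E, F, G = EFG
    return E * X[0] * Y[0] + F * (X[0] * Y[1] + X[1] * Y[0]) + G * X[1] * Y[1]

EFG = first_fundamental_form(sphere, 1.0, 0.5)
print(EFG, (1.0, 0.0, math.sin(1.0) ** 2))
print(evaluate_first_form(EFG, (1.0, 2.0), (1.0, 2.0)))  # = E + 4F + 4G > 0
```

Positive definiteness shows up as $E > 0$ and $EG - F^2 > 0$, which holds here whenever $\sin u \neq 0$.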

Second fundamental form

Notation

- $u$ and $v$ are our coordinates
- $\mathbf{n}$ is the unit normal of the surface

Stuff

Given a local surface $\mathbf{x}: D \to \mathbb{E}^3$, the second fundamental form is defined by

$$II = -d\mathbf{n} \cdot d\mathbf{x},$$

with the dot product interpreted as usual.

The $\mathbb{E}^3$-valued 1-form $d\mathbf{n}$ is a linear map which may have a non-trivial kernel. It is convenient to use the isomorphism $d\mathbf{x}: T_pD \to T_{\mathbf{x}(p)}S$ to rewrite the map $d\mathbf{n}$ as a symmetric bilinear form.

Since $\mathbf{n}$ is unit normalised, it follows that $\mathbf{n} \cdot \mathbf{n}_u = \mathbf{n} \cdot \mathbf{n}_v = 0$ (by differentiating $\mathbf{n} \cdot \mathbf{n} = 1$ with respect to $u$ and $v$ respectively).

Hence, $\mathbf{n}_u$ and $\mathbf{n}_v$ must belong to the tangent plane $T_{\mathbf{x}(p)}S$. In other words, $d\mathbf{n}(T_pD) \subseteq T_{\mathbf{x}(p)}S$.

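The second fundamental form is given by

$$II = L\, du^2 + 2M\, du\, dv + N\, dv^2,$$

where $L, M, N$ are continuous functions on $D$ given by

$$L = -\mathbf{n}_u \cdot \mathbf{x}_u, \qquad M = -\mathbf{n}_u \cdot \mathbf{x}_v = -\mathbf{n}_v \cdot \mathbf{x}_u, \qquad N = -\mathbf{n}_v \cdot \mathbf{x}_v,$$

which can also be written as (differentiating $\mathbf{n} \cdot \mathbf{x}_u = \mathbf{n} \cdot \mathbf{x}_v = 0$)

$$L = \mathbf{n} \cdot \mathbf{x}_{uu}, \qquad M = \mathbf{n} \cdot \mathbf{x}_{uv}, \qquad N = \mathbf{n} \cdot \mathbf{x}_{vv}.$$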
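A numerical sketch for the unit sphere, assuming the parametrisation $(\sin u \cos v, \sin u \sin v, \cos u)$ and finite differences: it evaluates $L = \mathbf{n} \cdot \mathbf{x}_{uu}$, $M = \mathbf{n} \cdot \mathbf{x}_{uv}$, $N = \mathbf{n} \cdot \mathbf{x}_{vv}$ with $\mathbf{n} = \mathbf{x}_u \times \mathbf{x}_v / |\mathbf{x}_u \times \mathbf{x}_v|$. For this parametrisation $\mathbf{n}$ points outwards and the expected result is $L = -1$, $M = 0$, $N = -\sin^2 u$, i.e. $II = -I$; choosing the inward normal flips the sign.

```python
import math

def sphere(u, v):
    """Unit sphere, u = polar angle, v = azimuthal angle (example parametrisation)."""
    return (math.sin(u) * math.cos(v), math.sin(u) * math.sin(v), math.cos(u))

def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))

def second_fundamental_form(x, u, v, h=1e-4):
    """(L, M, N) = (n . x_uu, n . x_uv, n . x_vv) with n = x_u x x_v / |x_u x x_v|."""
    def at(i, j):
        # x evaluated at the grid point (u + i h, v + j h)
        return x(u + i * h, v + j * h)

    xu = [(p - q) / (2 * h) for p, q in zip(at(1, 0), at(-1, 0))]
    xv = [(p - q) / (2 * h) for p, q in zip(at(0, 1), at(0, -1))]
    cr = (xu[1] * xv[2] - xu[2] * xv[1],
          xu[2] * xv[0] - xu[0] * xv[2],
          xu[0] * xv[1] - xu[1] * xv[0])
    norm = math.sqrt(dot(cr, cr))
    n = [ci / norm for ci in cr]

    x0 = at(0, 0)
    xuu = [(p - 2 * q + r) / h ** 2 for p, q, r in zip(at(1, 0), x0, at(-1, 0))]
    xvv = [(p - 2 * q + r) / h ** 2 for p, q, r in zip(at(0, 1), x0, at(0, -1))]
    xuv = [(p - q - r + s) / (4 * h ** 2)
           for p, q, r, s in zip(at(1, 1), at(1, -1), at(-1, 1), at(-1, -1))]
    return dot(n, xuu), dot(n, xuv), dot(n, xvv)

L, M, N = second_fundamental_form(sphere, 1.0, 0.5)
print(L, M, N, -math.sin(1.0) ** 2)
```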

Q & A

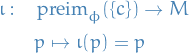

- DONE What do we mean by a 1-form having a "non-trivial kernel"?

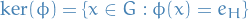

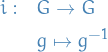

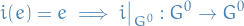

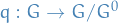

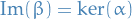

In group theory we have the following definition of a kernel:

$$\ker \varphi = \{ g \in G : \varphi(g) = e_H \},$$

where $\varphi: G \to H$ is a homomorphism.

When we say the mapping $\varphi$ has a non-trivial kernel, we mean that there are more elements in $G$ than just the identity element which are mapped to the identity element in $H$, i.e.

$$\ker \varphi \neq \{ e_G \}.$$

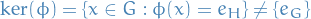

Hence, in the case of some 1-form $\omega: V \to W$, we mean

$$\ker \omega \neq \{ \mathbf{0} \},$$

i.e. non-trivial kernel refers to the 1-form mapping more than just the zero vector to the zero vector in the target vector space.
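A concrete case, assuming the cylinder $\mathbf{x}(u, v) = (\cos u, \sin u, v)$ with outward normal $\mathbf{n} = (\cos u, \sin u, 0)$ as the example: $\mathbf{n}$ does not depend on $v$, so $d\mathbf{n}(\partial / \partial v) = \mathbf{n}_v = \mathbf{0}$ and the 1-form $d\mathbf{n}$ has the non-trivial kernel spanned by $\partial / \partial v$. The sketch evaluates $d\mathbf{n}(a\, \partial_u + b\, \partial_v) = a\, \mathbf{n}_u + b\, \mathbf{n}_v$ with finite differences; the function names are hypothetical.

```python
import math

def cylinder_normal(u, v):
    """Outward unit normal of the cylinder x(u, v) = (cos u, sin u, v); independent of v."""
    return (math.cos(u), math.sin(u), 0.0)

def dn(u, v, a, b, h=1e-6):
    """Apply dn at (u, v) to the tangent vector a d/du + b d/dv, i.e. return a n_u + b n_v."""
    nu = [(p - q) / (2 * h)
          for p, q in zip(cylinder_normal(u + h, v), cylinder_normal(u - h, v))]
    nv = [(p - q) / (2 * h)
          for p, q in zip(cylinder_normal(u, v + h), cylinder_normal(u, v - h))]
    return [a * x + b * y for x, y in zip(nu, nv)]

print(dn(0.7, 1.0, 0.0, 1.0))  # d/dv lies in the kernel: the zero vector
print(dn(0.7, 1.0, 1.0, 0.0))  # approximately (-sin 0.7, cos 0.7, 0): d/du does not
```

A general tangent vector $a\, \partial_u + b\, \partial_v$ is sent to zero exactly when $a = 0$, so here the kernel is one-dimensional.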

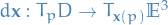

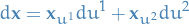

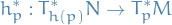

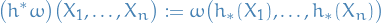

- DONE What do we mean when we write dx from TpD to Tx(p) S?

What do we mean when we write the following:

$$d\mathbf{x}: T_pD \to T_{\mathbf{x}(p)}S$$

where:

- $S$ is some surface in $\mathbb{E}^3$
- $D$ is the domain of our "coordinates"
- $\mathbf{x}: D \to S$ is a smooth map

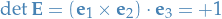

Curvature

Notation

are symmetric bilinear forms on a real vector space

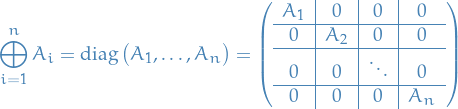

are symmetric bilinear forms on a real vector space  , which we can represent in matrix form as:

, which we can represent in matrix form as:

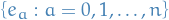

- $\{\mathbf{e}_i\}$ is a basis of $V$
- $\kappa_1, \kappa_2$ represent the principal curvatures

Bilinear algebra

The eigenvalues of $\beta$ wrt. $\alpha$ are the roots of the polynomial

$$\det(B - \lambda A),$$

where $\alpha, \beta$ are represented by the symmetric $n \times n$ matrices $A, B$.

If $\alpha$ is positive definite (i.e. $\alpha$ defines an inner product), there exists a basis $\mathbf{e}_1, \dots, \mathbf{e}_n$ of $V$ such that:

- $\{\mathbf{e}_i\}$ is orthonormal wrt. $\alpha$
- each $\mathbf{e}_i$ is an eigenvector of $\beta$ wrt. $\alpha$ with a real eigenvalue $\lambda_i$, i.e. $\beta(\mathbf{e}_i, Y) = \lambda_i\, \alpha(\mathbf{e}_i, Y)$ for all $Y \in V$
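For $n = 2$ the polynomial is a quadratic in $\lambda$, so the eigenvalues can be written down directly. The following is a minimal sketch (function names are hypothetical) that expands $\det(B - \lambda A)$ for symmetric $2 \times 2$ matrices, solves it, and checks that each root makes the determinant vanish. For larger $n$ one would instead use a library routine for the symmetric-definite generalized eigenproblem, such as `scipy.linalg.eigh(B, A)`.

```python
import math

def generalized_eigenvalues_2x2(A, B):
    """Roots of det(B - lam * A) = 0 for symmetric 2x2 A (positive definite) and B.

    Expanding the determinant gives
        det(A) lam^2 - (a11 b22 + a22 b11 - 2 a12 b12) lam + det(B) = 0.
    """
    (a11, a12), (_, a22) = A
    (b11, b12), (_, b22) = B
    qa = a11 * a22 - a12 ** 2                     # det A > 0 for positive definite A
    qb = -(a11 * b22 + a22 * b11 - 2 * a12 * b12)
    qc = b11 * b22 - b12 ** 2                     # det B
    disc = qb ** 2 - 4 * qa * qc                  # >= 0 in exact arithmetic here
    root = math.sqrt(max(disc, 0.0))              # clamp tiny negative rounding errors
    return ((-qb - root) / (2 * qa), (-qb + root) / (2 * qa))

def char_poly(A, B, lam):
    """det(B - lam * A) for 2x2 matrices, used to check the roots."""
    m = [[B[i][j] - lam * A[i][j] for j in range(2)] for i in range(2)]
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

A = [[2.0, 0.5], [0.5, 1.0]]
B = [[1.0, -1.0], [-1.0, 3.0]]
for lam in generalized_eigenvalues_2x2(A, B):
    print(lam, char_poly(A, B, lam))  # second value should be ~0
```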

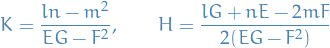

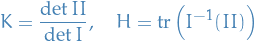

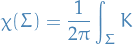

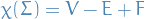

Gauss and mean curvatures

We have two symmetric bilinear forms on $T_pD$, namely $I$ and $II$, and look for the eigenvalues and eigenvectors of $II$ wrt. $I$.

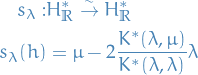

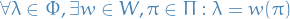

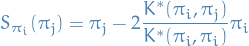

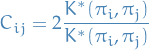

The eigenvalues $\kappa_1, \kappa_2$ of $II$ wrt. $I$ are the principal curvatures of the surface. The corresponding eigenvectors are the principal directions of the surface. Hence the principal curvatures are the roots of the polynomial

$$\det\left( \begin{pmatrix} L & M \\ M & N \end{pmatrix} - \kappa \begin{pmatrix} E & F \\ F & G \end{pmatrix} \right).$$

The principal curvatures may vary with position and so are (continuous) functions on $D$.

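The product of the principal curvatures is the Gauss curvature:

$$K = \kappa_1 \kappa_2.$$

The average of the principal curvatures is the mean curvature:

$$H = \frac{1}{2}(\kappa_1 + \kappa_2).$$

If $\kappa_1 = \kappa_2$, all directions are principal.

In terms of the fundamental forms,

$$K = \frac{LN - M^2}{EG - F^2}, \qquad H = \frac{LG - 2MF + NE}{2(EG - F^2)},$$

where all variables are as given by the first and second fundamental forms.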

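We get the elegant basis-independent expressions

$$K = \det\left(I^{-1} II\right), \qquad H = \frac{1}{2} \operatorname{tr}\left(I^{-1} II\right),$$

where $I$ and $II$ denote the matrices of the fundamental forms. Thus, since $I$ is positive definite, the Gauss curvature is positive if and only if $II$ is definite (either positive or negative definite).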
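These formulas translate directly into code. The sketch below (names are hypothetical) computes $K$ and $H$ from $E, F, G, L, M, N$ and recovers $\kappa_{1,2} = H \mp \sqrt{H^2 - K}$. The example assumes a cylinder of radius 2, $\mathbf{x}(u, v) = (2 \cos u, 2 \sin u, v)$ with outward normal, for which $E = 4$, $F = 0$, $G = 1$, $L = -2$, $M = N = 0$ under the convention $II = -d\mathbf{n} \cdot d\mathbf{x}$.

```python
import math

def gauss_and_mean_curvature(E, F, G, L, M, N):
    """K and H from I = E du^2 + 2F du dv + G dv^2 and II = L du^2 + 2M du dv + N dv^2."""
    det_I = E * G - F ** 2  # > 0 at a regular point
    K = (L * N - M ** 2) / det_I
    H = (L * G - 2 * M * F + N * E) / (2 * det_I)
    return K, H

def principal_curvatures(E, F, G, L, M, N):
    """kappa_{1,2} = H -/+ sqrt(H^2 - K), since kappa_1 + kappa_2 = 2H, kappa_1 kappa_2 = K."""
    K, H = gauss_and_mean_curvature(E, F, G, L, M, N)
    root = math.sqrt(max(H ** 2 - K, 0.0))  # H^2 - K >= 0; clamp rounding noise
    return H - root, H + root

# Cylinder of radius 2 with outward normal.
print(gauss_and_mean_curvature(4.0, 0.0, 1.0, -2.0, 0.0, 0.0))
print(principal_curvatures(4.0, 0.0, 1.0, -2.0, 0.0, 0.0))
```

This gives $K = 0$, $H = -1/4$ and principal curvatures $-1/2$ and $0$. With the inward normal, $H$ and the principal curvatures change sign while $K$ does not. For the unit sphere in the parametrisation $(\sin u \cos v, \sin u \sin v, \cos u)$ with outward normal ($E = 1$, $F = 0$, $G = \sin^2 u$, $L = -1$, $M = 0$, $N = -\sin^2 u$) the same functions give $K = 1$ and $H = -1$.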

Meaning of curvature

Notation

- $D$ is the domain of the plane with coordinates $(u, v)$
- $\mathbf{x}: D \to \mathbb{E}^3$ is a regular local surface

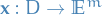

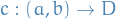

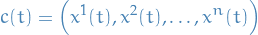

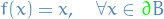

- $c: [a, b] \to D$, given by the map $t \mapsto \big(u(t), v(t)\big)$, is a regular curve in $D$
- $c'(t)$ is the tangent vector of the curve

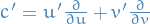

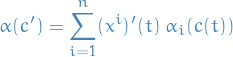

Curves on surfaces

The composition

$$\mathbf{x} \circ c : [a, b] \to \mathbb{E}^3, \quad t \mapsto \mathbf{x}\big(c(t)\big)$$

describes a curve in $\mathbb{E}^3$ lying on the surface.

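and its tangent vector is given by

$$\frac{d}{dt} \mathbf{x}\big(c(t)\big) = d\mathbf{x}\big(c'(t)\big).$$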

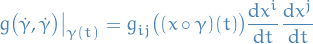

The arclength of the curve $\mathbf{x}\big(c(t)\big)$, $t \in [a, b]$, lying on the surface is

$$\int_a^b \sqrt{I\big(c'(t), c'(t)\big)}\, dt = \int_a^b \sqrt{E\, u'(t)^2 + 2F\, u'(t)\, v'(t) + G\, v'(t)^2}\, dt.$$
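A sketch of this formula in Python: it integrates $\sqrt{E u'^2 + 2F u' v' + G v'^2}$ with composite Simpson's rule. The first fundamental form of the unit sphere, $E = 1$, $F = 0$, $G = \sin^2 u$ for $\mathbf{x} = (\sin u \cos v, \sin u \sin v, \cos u)$, is an assumed example, as are the two test curves: a circle of latitude (length $2\pi \sin u_0$) and half a meridian (length $\pi$).

```python
import math

def sphere_first_form(u, v):
    """(E, F, G) of the unit sphere x(u, v) = (sin u cos v, sin u sin v, cos u)."""
    return 1.0, 0.0, math.sin(u) ** 2

def arclength(first_form, c, dc, a, b, n=1000):
    """Length of x(c(t)), t in [a, b]: integrate sqrt(I(c'(t), c'(t))) by Simpson's rule.

    c(t) returns (u(t), v(t)); dc(t) returns (u'(t), v'(t)); n must be even.
    """
    def speed(t):
        u, v = c(t)
        du, dv = dc(t)
        E, F, G = first_form(u, v)
        return math.sqrt(E * du ** 2 + 2 * F * du * dv + G * dv ** 2)

    h = (b - a) / n
    total = speed(a) + speed(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * speed(a + k * h)
    return total * h / 3

# Circle of latitude u = pi/3, v = t: exact length 2 pi sin(pi/3).
lat = arclength(sphere_first_form, lambda t: (math.pi / 3, t), lambda t: (0.0, 1.0),
                0.0, 2 * math.pi)
# Half a meridian v = 0, u = t from 0 to pi: exact length pi.
mer = arclength(sphere_first_form, lambda t: (t, 0.0), lambda t: (1.0, 0.0), 0.0, math.pi)
print(lat, 2 * math.pi * math.sin(math.pi / 3))
print(mer, math.pi)
```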

Invariance under Euclidean motions

Let $\mathbf{x}$ and $\tilde{\mathbf{x}}$ be two surfaces related by a Euclidean motion, so

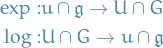

where $A$ is an orthogonal matrix with $\det A = 1$ and $\mathbf{b} \in \mathbb{E}^3$.

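Then,

$$d\tilde{\mathbf{x}} = A\, d\mathbf{x}, \qquad \tilde{\mathbf{n}} = A \mathbf{n},$$

and hence, in particular,

$$\tilde{I} = I, \qquad \tilde{II} = II.$$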

The first fundamental form and second fundamental form determine the surface (up to Euclidean motions).
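A quick numerical illustration of the invariance of $I$, under the assumption that a rotation about the $z$-axis followed by a translation is a representative Euclidean motion; the unit sphere is just an example surface and the helper names are hypothetical. It computes $E, F, G$ by finite differences before and after the motion.

```python
import math

def sphere(u, v):
    """Unit sphere, u = polar angle, v = azimuthal angle (example parametrisation)."""
    return (math.sin(u) * math.cos(v), math.sin(u) * math.sin(v), math.cos(u))

def euclidean_motion(x, A, b):
    """The moved surface x~(u, v) = A x(u, v) + b."""
    def moved(u, v):
        p = x(u, v)
        return tuple(sum(A[i][j] * p[j] for j in range(3)) + b[i] for i in range(3))
    return moved

def dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))

def first_fundamental_form(x, u, v, h=1e-5):
    """(E, F, G) via central differences for x_u and x_v."""
    xu = [(p - q) / (2 * h) for p, q in zip(x(u + h, v), x(u - h, v))]
    xv = [(p - q) / (2 * h) for p, q in zip(x(u, v + h), x(u, v - h))]
    return dot(xu, xu), dot(xu, xv), dot(xv, xv)

theta = 0.8
A = [[math.cos(theta), -math.sin(theta), 0.0],
     [math.sin(theta), math.cos(theta), 0.0],
     [0.0, 0.0, 1.0]]  # rotation about the z-axis, det A = 1
b = (1.0, -2.0, 0.5)
moved_sphere = euclidean_motion(sphere, A, b)
print(first_fundamental_form(sphere, 1.0, 0.5))
print(first_fundamental_form(moved_sphere, 1.0, 0.5))
```

The translation drops out because only differences of points enter $\mathbf{x}_u, \mathbf{x}_v$, and the rotation drops out because it preserves dot products.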

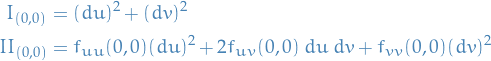

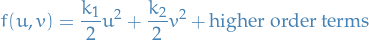

Taylor series

Let $p$ be a point on a regular local surface. By a Euclidean motion, choose $p$ to be at the origin, and the unit normal at that point to be along the positive $z$-axis, so $T_pS$ is the $xy$-plane.

Near $p$ we can parametrise the surface as a graph:

$$\mathbf{x}(u, v) = \big(u, v, f(u, v)\big),$$

where at the origin $f(0, 0) = 0$.

Using the above parametrisation, and observing that $\mathbf{x}_u = (1, 0, f_u)$ and $\mathbf{x}_v = (0, 1, f_v)$ span $T_pS$, which is the plane orthogonal to $\mathbf{n} = (0, 0, 1)$, we see that $f_u(0, 0) = 0$ and $f_v(0, 0) = 0$.

Further, supposing the $x$- and $y$-axes correspond to the principal directions, then the Taylor series of the surface near the origin is

$$f(u, v) = \frac{1}{2}\left( \kappa_1 u^2 + \kappa_2 v^2 \right) + O\big((u^2 + v^2)^{3/2}\big),$$

where $\kappa_1, \kappa_2$ are the principal curvatures at $p$.
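To see the Taylor expansion numerically, one can estimate $f_{uu}, f_{uv}, f_{vv}$ at the origin with second differences. At the origin $E = G = 1$, $F = 0$ and $L = f_{uu}$, $M = f_{uv}$, $N = f_{vv}$, so when $f_{uv}(0, 0) = 0$ the axes are principal with $\kappa_1 = f_{uu}(0, 0)$, $\kappa_2 = f_{vv}(0, 0)$. The example graphs are assumptions: the lower cap of a sphere of radius $r$ centred at $(0, 0, r)$, for which $\kappa_1 = \kappa_2 = 1/r$ with $\mathbf{n}$ pointing towards the centre, and a hypothetical graph $\frac{1}{2}(3u^2 - v^2) + u^3$ with $\kappa_1 = 3$, $\kappa_2 = -1$.

```python
import math

def second_derivatives_at_origin(f, h=1e-3):
    """Second-difference estimates of (f_uu, f_uv, f_vv) at (0, 0)."""
    f0 = f(0.0, 0.0)
    fuu = (f(h, 0.0) - 2 * f0 + f(-h, 0.0)) / h ** 2
    fvv = (f(0.0, h) - 2 * f0 + f(0.0, -h)) / h ** 2
    fuv = (f(h, h) - f(h, -h) - f(-h, h) + f(-h, -h)) / (4 * h ** 2)
    return fuu, fuv, fvv

r = 2.0

def sphere_cap(u, v):
    """Lower cap of the sphere of radius r centred at (0, 0, r), written as a graph."""
    return r - math.sqrt(r ** 2 - u ** 2 - v ** 2)

def example_graph(u, v):
    """Hypothetical graph with principal curvatures 3 and -1 at the origin."""
    return 0.5 * (3 * u ** 2 - v ** 2) + u ** 3

print(second_derivatives_at_origin(sphere_cap))     # approx (1/r, 0, 1/r)
print(second_derivatives_at_origin(example_graph))  # approx (3, 0, -1)
```

The cubic term $u^3$ does not affect the result, as expected from the Taylor series.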

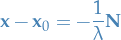

Umbilical points

Let $\mathbf{x}: D \to \mathbb{E}^3$ be a regular local surface.

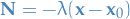

We then say a point $p \in D$ is umbilical if and only if

$$\kappa_1(p) = \kappa_2(p),$$

or equivalently,

$$II_p = \kappa\, I_p \quad \text{for some } \kappa \in \mathbb{R},$$

i.e. all directions are principal directions.

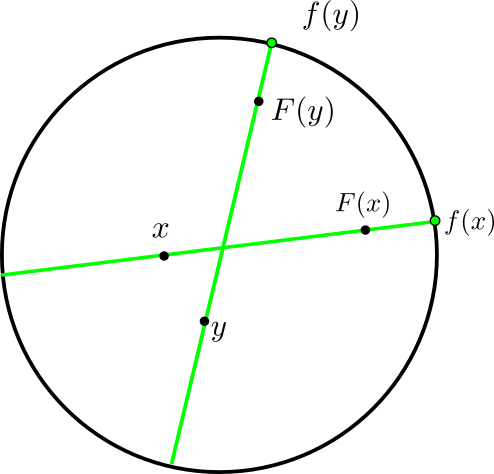

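A regular local surface is (part of) a sphere of radius $r$ if and only if $II = \kappa I$ for a non-zero constant $\kappa$ with $|\kappa| = 1/r$; in particular, every point of a sphere is umbilical.

We can see the "being a part of a sphere" from the fact that a point $\mathbf{x}$ on a sphere with centre $\mathbf{c}$ and radius $r$ can be written as

$$\mathbf{x} = \mathbf{c} - \varepsilon r \mathbf{n},$$

where $\varepsilon = 1$ corresponds to $\mathbf{n}$ pointing inwards, while $\varepsilon = -1$ is $\mathbf{n}$ pointing outwards. In this case, we have

$$d\mathbf{x} = -\varepsilon r\, d\mathbf{n},$$

hence,

$$II = -d\mathbf{n} \cdot d\mathbf{x} = \frac{1}{\varepsilon r}\, d\mathbf{x} \cdot d\mathbf{x} = \frac{\varepsilon}{r}\, I.$$

Conversely, if $II = \kappa I$ for a non-zero constant $\kappa$, then, since $d\mathbf{n}$ takes values in the tangent plane,

$$d\mathbf{n} = -\kappa\, d\mathbf{x},$$

which tells us that

$$d\left( \mathbf{x} + \frac{1}{\kappa} \mathbf{n} \right) = 0.$$

Thus (for connected $D$),

$$\mathbf{x} + \frac{1}{\kappa} \mathbf{n} = \mathbf{c},$$

where $\mathbf{c}$ is just some constant. Then,

$$|\mathbf{x} - \mathbf{c}| = \frac{1}{|\kappa|},$$

so the surface lies on a sphere of radius $1/|\kappa|$ centred at $\mathbf{c}$.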

A regular local surface has $II = 0$ if and only if it is (a piece of) a plane.

The statement that $II = 0$, or equivalently $d\mathbf{n} = 0$, is equivalent to saying that $\mathbf{x}$ is part of a plane: $d\mathbf{n} = 0$ means that $\mathbf{n}$ is constant, and since the tangents $\mathbf{x}_u, \mathbf{x}_v$ of the map $\mathbf{x}$ are perpendicular to this constant normal, $d(\mathbf{x} \cdot \mathbf{n}) = d\mathbf{x} \cdot \mathbf{n} = 0$, so $\mathbf{x} \cdot \mathbf{n}$ is constant.

Every point is umbilical if and only if the surface is (part of) a plane or a sphere (assuming $D$ is connected).

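If $II = \kappa I$ for some smooth function $\kappa: D \to \mathbb{R}$, then $d\mathbf{n} = -\kappa\, d\mathbf{x}$, and so

$$0 = d(d\mathbf{n}) = -d\kappa \wedge d\mathbf{x}$$

(here we have $\kappa$ as a function, thus the exterior derivative of $\kappa$ gives us a 1-form).

And since

$$d\kappa \wedge d\mathbf{x} = (\kappa_u \mathbf{x}_v - \kappa_v \mathbf{x}_u)\, du \wedge dv,$$

we have $\kappa_u \mathbf{x}_v - \kappa_v \mathbf{x}_u = 0$, and hence by regularity of the surface $\kappa_u = \kappa_v = 0$. Thus $\kappa$ is a constant function on $D$ (for connected $D$), which implies that the surface is part of a plane or a sphere.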

This is because we've already stated that if $\kappa = 0$, then $\mathbf{x}$ is part of a plane (thm:second-fundamental-form-zero-everywhere-on-surface), and if $\kappa \neq 0$ and constant, then $\mathbf{x}$ is part of a sphere (thm:all-points-umbilical-surface-is-sphere-or-plane).

Problems

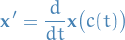

Lemma 9.1 (in the notes)

$$
\begin{aligned}
\frac{d}{dt} \mathbf{x}\big(c(t)\big) &= \mathbf{x}_u\, u'(t) + \mathbf{x}_v\, v'(t) && \text{(by the chain rule)} \\
&= \mathbf{x}_u\, \mathrm{d}u\big(c'(t)\big) + \mathbf{x}_v\, \mathrm{d}v\big(c'(t)\big) && \Big( \mathrm{d}u\big(\tfrac{\partial}{\partial u}\big) = \mathrm{d}v\big(\tfrac{\partial}{\partial v}\big) = 1,\ \mathrm{d}u\big(\tfrac{\partial}{\partial v}\big) = \mathrm{d}v\big(\tfrac{\partial}{\partial u}\big) = 0 \Big) \\
&= \mathrm{d}\mathbf{x} \Big[ u'(t) \frac{\partial}{\partial u} + v'(t) \frac{\partial}{\partial v} \Big] \\
&= \mathrm{d}\mathbf{x}\big(c'(t)\big),
\end{aligned}
$$

where $c(t) = \big(u(t), v(t)\big)$ is a curve in $U$, so that $c'(t) = u'(t) \frac{\partial}{\partial u} + v'(t) \frac{\partial}{\partial v}$, and $\mathrm{d}\mathbf{x} = \mathbf{x}_u\, \mathrm{d}u + \mathbf{x}_v\, \mathrm{d}v$.

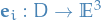

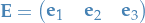

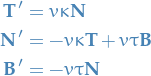

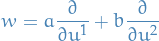
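Lemma 9.1 can be sanity-checked numerically. The sketch below assumes a hypothetical surface (the unit sphere in spherical coordinates) and a hypothetical curve $c(t) = (1 + t/2, t^2)$ in $U$. It uses central differences with a step `H` of our choosing, and compares $\frac{d}{dt}\mathbf{x}(c(t))$ with $\mathbf{x}_u u'(t) + \mathbf{x}_v v'(t)$.

```python
import math

H = 1e-6  # finite-difference step (illustrative choice)


def x(u, v):
    """Hypothetical example surface: the unit sphere in spherical coordinates."""
    return (math.sin(u) * math.cos(v), math.sin(u) * math.sin(v), math.cos(u))


def c(t):
    """Hypothetical curve c(t) = (u(t), v(t)) in the parameter domain U."""
    return (1.0 + 0.5 * t, t * t)


def c_prime(t):
    """Exact derivative (u'(t), v'(t)) of the curve above."""
    return (0.5, 2.0 * t)


def central(f_plus, f_minus):
    """Central-difference quotient of two vector values."""
    return tuple((p - q) / (2 * H) for p, q in zip(f_plus, f_minus))


def lhs(t):
    """d/dt x(c(t)), by differentiating the composite map directly."""
    return central(x(*c(t + H)), x(*c(t - H)))


def rhs(t):
    """dx(c'(t)) = x_u u'(t) + x_v v'(t)."""
    u, v = c(t)
    du, dv = c_prime(t)
    x_u = central(x(u + H, v), x(u - H, v))
    x_v = central(x(u, v + H), x(u, v - H))
    return tuple(du * a + dv * b for a, b in zip(x_u, x_v))
```

For example, `max(abs(p - q) for p, q in zip(lhs(0.3), rhs(0.3)))` should come out at round-off level. Any difference is due to the finite differences, not the lemma.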

Moving frames in Euclidean space

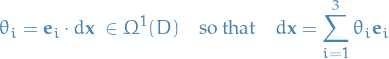

Notation

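- $\mathbf{x}: U \to \mathbb{E}^3$ is a smooth map, with $U \subseteq \mathbb{R}^n$ open
- $(u^1, \dots, u^n)$ denotes the coordinates on $U$
- moving frame denotes a collection of maps $\mathbf{e}_i: U \to \mathbb{E}^3$ for $i = 1, 2, 3$ such that the $\mathbf{e}_i(p)$ form an oriented orthonormal basis of $\mathbb{E}^3$ for every $p \in U$
  - oriented means that $\mathbf{e}_1 \times \mathbf{e}_2 = \mathbf{e}_3$
- $\mathbf{e} = (\mathbf{e}_1\ \mathbf{e}_2\ \mathbf{e}_3)$ denotes the $3 \times 3$ matrix with the $\mathbf{e}_i$ as columns. It is orthogonal and, because the frame is oriented, $\det \mathbf{e} = 1$, i.e. it's a rotation matrix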

Stuff

A moving frame for $\mathbf{x}$ on $U$ is a collection of maps $\mathbf{e}_i: U \to \mathbb{E}^3$ for $i = 1, 2, 3$ such that for all $p \in U$ the $\mathbf{e}_i(p)$ form an oriented orthonormal basis of $\mathbb{E}^3$.

Oriented means that $\mathbf{e}_1 \times \mathbf{e}_2 = \mathbf{e}_3$.

This definition uses the notion of orientedness in three dimensions (via the cross product). For general $n$ there is a different definition of an oriented frame.
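To make the definition concrete, here is a minimal sketch in plain Python. It checks that a frame is orthonormal and oriented ($\mathbf{e}_1 \times \mathbf{e}_2 = \mathbf{e}_3$). The example frame is an assumption chosen for illustration: on the unit sphere, $\mathbf{e}_1 = \mathbf{e}_\theta$, $\mathbf{e}_2 = \mathbf{e}_\phi$, $\mathbf{e}_3 = \mathbf{e}_r$ (the outward normal), with polar angle $\theta$ and azimuth $\phi$.

```python
import math


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def sphere_frame(theta, phi):
    """Hypothetical frame on the sphere: e1 = e_theta, e2 = e_phi, e3 = e_r (outward normal)."""
    e1 = (math.cos(theta) * math.cos(phi), math.cos(theta) * math.sin(phi), -math.sin(theta))
    e2 = (-math.sin(phi), math.cos(phi), 0.0)
    e3 = (math.sin(theta) * math.cos(phi), math.sin(theta) * math.sin(phi), math.cos(theta))
    return e1, e2, e3


def is_oriented_orthonormal(e, tol=1e-12):
    """Check e_i . e_j = delta_ij and e1 x e2 = e3 (equivalently det(e1 e2 e3) = 1)."""
    orthonormal = all(abs(dot(e[i], e[j]) - (1.0 if i == j else 0.0)) < tol
                      for i in range(3) for j in range(3))
    oriented = all(abs(p - q) < tol for p, q in zip(cross(e[0], e[1]), e[2]))
    return orthonormal and oriented
```

Swapping $\mathbf{e}_1$ and $\mathbf{e}_2$ keeps the frame orthonormal but flips its orientation, so `is_oriented_orthonormal` then returns `False`.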

If $\mathbf{x}: U \to \mathbb{E}^3$, given by

$$\mathbf{x} = (x^1, x^2, x^3),$$

we write $\mathrm{d}\mathbf{x}$ for its entry-by-entry exterior derivative:

$$\mathrm{d}\mathbf{x} = (\mathrm{d}x^1, \mathrm{d}x^2, \mathrm{d}x^3).$$

Thus, $\mathrm{d}\mathbf{x}$ takes vector fields in $U$ and spits out vectors in $\mathbb{E}^3$.

Connection forms and the structure equations

Since $\{\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3\}$ is an orthonormal basis for $\mathbb{E}^3$, any vector $\mathbf{v}$ can be expanded as $\mathbf{v} = \sum_i (\mathbf{v} \cdot \mathbf{e}_i)\, \mathbf{e}_i$ in the moving frame. The same applies to a vector-valued 1-form, e.g. $\mathrm{d}\mathbf{x} = \sum_i (\mathrm{d}\mathbf{x} \cdot \mathbf{e}_i)\, \mathbf{e}_i$.

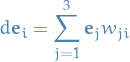

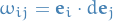

Therefore we define 1-forms  by

by

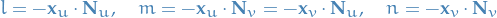

The 1-forms  are called the connection 1-forms and by definition satisfy

are called the connection 1-forms and by definition satisfy

Each  are in this case a 1-form.

are in this case a 1-form.

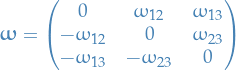
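Antisymmetry can be seen numerically on the sphere. This is a minimal sketch assuming the convention $\omega_{ij} = \mathrm{d}\mathbf{e}_i \cdot \mathbf{e}_j$. It uses the hypothetical frame $\mathbf{e}_1 = \mathbf{e}_\theta$, $\mathbf{e}_2 = \mathbf{e}_\phi$, $\mathbf{e}_3 = \mathbf{e}_r$ on the unit sphere. $\mathrm{d}\mathbf{e}_i(X)$ is approximated by a central difference along $X = X^\theta \partial_\theta + X^\phi \partial_\phi$. With this frame one finds by hand $\omega_{12} = \cos\theta\, \mathrm{d}\phi$, $\omega_{13} = -\mathrm{d}\theta$ and $\omega_{23} = -\sin\theta\, \mathrm{d}\phi$.

```python
import math


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def sphere_frame(theta, phi):
    """Hypothetical frame adapted to the unit sphere: e1 = e_theta, e2 = e_phi, e3 = e_r."""
    e1 = (math.cos(theta) * math.cos(phi), math.cos(theta) * math.sin(phi), -math.sin(theta))
    e2 = (-math.sin(phi), math.cos(phi), 0.0)
    e3 = (math.sin(theta) * math.cos(phi), math.sin(theta) * math.sin(phi), math.cos(theta))
    return e1, e2, e3


def connection_matrix(theta, phi, X, h=1e-6):
    """[omega_ij(X)] with omega_ij = de_i . e_j and X = (X^theta, X^phi).

    de_i(X) is approximated by a central difference of e_i along X.
    """
    e = sphere_frame(theta, phi)
    e_plus = sphere_frame(theta + h * X[0], phi + h * X[1])
    e_minus = sphere_frame(theta - h * X[0], phi - h * X[1])
    de = [[(p - q) / (2 * h) for p, q in zip(e_plus[i], e_minus[i])] for i in range(3)]
    return [[dot(de[i], e[j]) for j in range(3)] for i in range(3)]
```

For instance, `connection_matrix(0.7, 1.9, (0.3, -1.2))` should be antisymmetric up to round-off. Its entry `[0][1]` should be close to $\cos(0.7) \cdot (-1.2)$.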

We can now write the structure equations for a surface using matrix-notation:

We can also write

We will also write

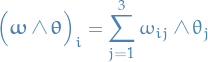

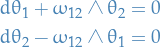

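The first structure equations are

$$\mathrm{d}\theta_i = \sum_j \omega_{ij} \wedge \theta_j, \qquad \text{i.e.} \quad \mathrm{d}\boldsymbol{\theta} = \boldsymbol{\omega} \wedge \boldsymbol{\theta},$$

where the wedge product between the matrix $\boldsymbol{\omega} = (\omega_{ij})$ and the vector $\boldsymbol{\theta} = (\theta_1, \theta_2, \theta_3)^T$ is taken as in matrix multiplication, $(\boldsymbol{\omega} \wedge \boldsymbol{\theta})_i = \sum_j \omega_{ij} \wedge \theta_j$.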

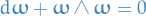

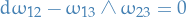

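The second structure equations are

$$\mathrm{d}\omega_{ij} = \sum_k \omega_{ik} \wedge \omega_{kj}, \qquad \text{i.e.} \quad \mathrm{d}\boldsymbol{\omega} = \boldsymbol{\omega} \wedge \boldsymbol{\omega}.$$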

The definition of the connection 1-forms and the second structure equations only require the existence of a moving frame, not a map $\mathbf{x}$.

The structure equations exist in the more general context of Riemannian geometry, where $\Omega_{ij} = \mathrm{d}\omega_{ij} - \sum_k \omega_{ik} \wedge \omega_{kj}$ is the Riemann curvature, which in general is non-vanishing. In our case it's zero because our moving frame is in $\mathbb{E}^3$.

Structure equations for surfaces

Notation

- $\theta_1, \theta_2$ are 1-forms
- $\omega_{ij}$ are "connection" 1-forms

Adapted frames and the structure equations

A moving frame $\mathbf{e}_1, \mathbf{e}_2, \mathbf{e}_3$ for $\mathbf{x}$ on $U$ is said to be adapted to the surface if $\mathbf{e}_3 = \mathbf{n}$.

I.e. it's adapted to the surface if we orient the basis such that $\mathbf{e}_3$ corresponds to the normal of the surface.

Applying the first and second structure equations to a local surface, with respect to an adapted frame, gives the structure equations for a surface:

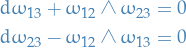

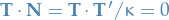

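First structure equations (with $\theta_i = \mathrm{d}\mathbf{x} \cdot \mathbf{e}_i$ and $\omega_{ij} = \mathrm{d}\mathbf{e}_i \cdot \mathbf{e}_j$):

$$\mathrm{d}\theta_1 = \omega_{12} \wedge \theta_2, \qquad \mathrm{d}\theta_2 = -\omega_{12} \wedge \theta_1$$

Symmetry equation:

$$\omega_{13} \wedge \theta_1 + \omega_{23} \wedge \theta_2 = 0$$

Gauss equation:

$$\mathrm{d}\omega_{12} = -\omega_{13} \wedge \omega_{23}$$

Codazzi equations:

$$\mathrm{d}\omega_{13} = \omega_{12} \wedge \omega_{23}, \qquad \mathrm{d}\omega_{23} = -\omega_{12} \wedge \omega_{13}$$

Notice that $\theta_3$ has vanished, compared with the equations for a general moving frame. This comes from the fact that in an adapted moving frame we have $\theta_3 = \mathrm{d}\mathbf{x} \cdot \mathbf{n} = 0$.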

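The Gauss equation above is equivalent to

$$\mathrm{d}\omega_{12} = -K\, \theta_1 \wedge \theta_2,$$

since $\omega_{13} \wedge \omega_{23} = K\, \theta_1 \wedge \theta_2$, where $K$ is the Gauss curvature.

This shows that the Gauss curvature can be computed simply from a knowledge of $\theta_1$ and $\theta_2$ (which determine $\omega_{12}$ via the first structure equations), without reference to the local description of the surface $\mathbf{x}$.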

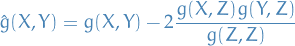
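Here is a minimal sketch of computing $K$ from $\theta_1, \theta_2$ alone. It assumes an orthogonal parametrisation ($F = 0$), so that one may take $\theta_1 = \sqrt{E}\, \mathrm{d}u$ and $\theta_2 = \sqrt{G}\, \mathrm{d}v$. It also assumes the conventions $\theta_i = \mathrm{d}\mathbf{x} \cdot \mathbf{e}_i$ and $\omega_{ij} = \mathrm{d}\mathbf{e}_i \cdot \mathbf{e}_j$.

Solving the first structure equations gives $\omega_{12} = -\frac{(\sqrt{E})_v}{\sqrt{G}}\, \mathrm{d}u + \frac{(\sqrt{G})_u}{\sqrt{E}}\, \mathrm{d}v$. The Gauss equation $\mathrm{d}\omega_{12} = -K\, \theta_1 \wedge \theta_2$ then yields $K$. The function name is hypothetical, and derivatives are central differences.

```python
import math


def gauss_curvature_orthogonal(E, G, u, v, h=1e-4):
    """K at (u, v) from E(u, v), G(u, v) for an orthogonal parametrisation (F = 0).

    theta_1 = sqrt(E) du, theta_2 = sqrt(G) dv, and omega_12 = A du + B dv with
    A = -(sqrt E)_v / sqrt G and B = (sqrt G)_u / sqrt E (first structure equations).
    Then d(omega_12) = (B_u - A_v) du ^ dv = -K theta_1 ^ theta_2 (Gauss equation).
    """
    sE = lambda a, b: math.sqrt(E(a, b))
    sG = lambda a, b: math.sqrt(G(a, b))

    def A(a, b):
        return -(sE(a, b + h) - sE(a, b - h)) / (2 * h) / sG(a, b)

    def B(a, b):
        return (sG(a + h, b) - sG(a - h, b)) / (2 * h) / sE(a, b)

    B_u = (B(u + h, v) - B(u - h, v)) / (2 * h)
    A_v = (A(u, v + h) - A(u, v - h)) / (2 * h)
    return -(B_u - A_v) / (sE(u, v) * sG(u, v))


# Sphere of radius 2 (E = 4, G = 4 sin^2 u) and the hyperbolic plane (E = G = 1 / v^2).
sphere_K = gauss_curvature_orthogonal(lambda u, v: 4.0, lambda u, v: 4.0 * math.sin(u) ** 2, 1.0, 0.3)
hyperbolic_K = gauss_curvature_orthogonal(lambda u, v: 1.0 / v ** 2, lambda u, v: 1.0 / v ** 2, 0.5, 1.3)
```

By hand, these two examples give $K = 1/4$ and $K = -1$. Note that only $E$ and $G$ enter, never the map $\mathbf{x}$ itself.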

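Let $\mathbf{x}: U \to \mathbb{E}^3$ be a local surface with the first fundamental form $\mathrm{I}$, and let $\theta_1, \theta_2$ be the 1-forms on $U$ such that

$$\mathrm{I} = \theta_1^2 + \theta_2^2.$$

Then there exists a unique adapted frame such that $\theta_1 = \mathrm{d}\mathbf{x} \cdot \mathbf{e}_1$ and $\theta_2 = \mathrm{d}\mathbf{x} \cdot \mathbf{e}_2$.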

.

We say a symmetric bilinear form (such as $\mathrm{I}$) is degenerate if, with respect to any basis, the matrix representing it has zero determinant.

Two local surfaces $\mathbf{x}, \mathbf{y}: U \to \mathbb{E}^3$ are isometric if and only if $\mathrm{I}_{\mathbf{x}} = \mathrm{I}_{\mathbf{y}}$.

Isometric surfaces have the same Gauss curvature. More specifically, if $\mathbf{x}, \mathbf{y}: U \to \mathbb{E}^3$ are two isometric surfaces, then

$$K_{\mathbf{x}} = K_{\mathbf{y}}.$$

The Gauss curvature is an intrinsic invariant of a surface!

The first fundamental form $\mathrm{I}$ of a surface then turns out to determine the following properties:

- distance

- angles

- area

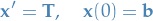

Geodesics

Notation

$\mathbf{x}: [a, b] \to \mathbb{E}^n$, which defines the map $s \mapsto \mathbf{x}(s)$ and has unit speed $|\mathbf{x}'(s)| = 1$, joining two points $\mathbf{p} = \mathbf{x}(a)$ and $\mathbf{q} = \mathbf{x}(b)$

Stuff

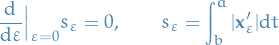

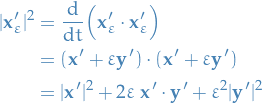

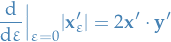

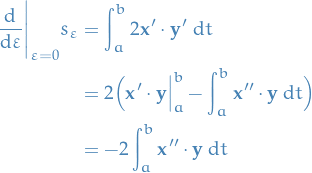

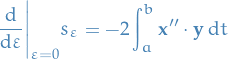

Consider a 1-parameter family of nearby curves

$$\mathbf{x}(s, \epsilon), \qquad s \in [a, b], \quad |\epsilon| < \delta, \qquad \mathbf{x}(s, 0) = \mathbf{x}(s),$$

where $\mathbf{x}(a, \epsilon) = \mathbf{p}$ and $\mathbf{x}(b, \epsilon) = \mathbf{q}$, so that all curves in the family join $\mathbf{p}$ to $\mathbf{q}$. We refer to

$$\mathbf{y}(s) = \frac{\partial \mathbf{x}}{\partial \epsilon}(s, 0)$$

as a connecting vector.

It's very important that $\mathbf{y} \cdot \mathbf{x}' = 0$, because if $\mathbf{y}$ has a component along $\mathbf{x}'$ we could remove the shared component by reparametrising $\mathbf{x}$.

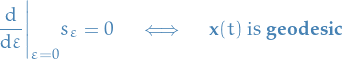

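We say a unit speed curve $\mathbf{x}$ as above has stationary length if the length

$$L(\epsilon) = \int_a^b \left| \frac{\partial \mathbf{x}}{\partial s}(s, \epsilon) \right| \mathrm{d}s$$

of the nearby curves $\mathbf{x}(s, \epsilon)$ satisfies

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = 0$$

for all connecting vectors $\mathbf{y}$.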

A unit speed curve $\mathbf{x}$ in Euclidean space has stationary length if and only if it is the straight line joining the two points.

Let $\mathbf{x}$ be a unit speed curve in Euclidean space. We then have to prove the following:

- If $\mathbf{x}$ is a straight line, then it has stationary length
- If $\mathbf{x}$ has stationary length, then it's a straight line

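Remember, stationary length is equivalent to

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = 0 \quad \text{for all connecting vectors } \mathbf{y}.$$

First, suppose that $\mathbf{x}$ is in fact a straight line. We have

$$\left| \frac{\partial \mathbf{x}}{\partial s} \right|^2 = \frac{\partial \mathbf{x}}{\partial s} \cdot \frac{\partial \mathbf{x}}{\partial s}.$$

Now, taking the square root and the derivative wrt. $\epsilon$ we have

$$\frac{\partial}{\partial \epsilon} \left| \frac{\partial \mathbf{x}}{\partial s} \right| = \frac{1}{\left| \partial \mathbf{x} / \partial s \right|}\, \frac{\partial \mathbf{x}}{\partial s} \cdot \frac{\partial^2 \mathbf{x}}{\partial \epsilon\, \partial s}.$$

Remembering that $\mathbf{x}$ is a unit-speed curve, i.e. $|\mathbf{x}'(s)| = 1$, at $\epsilon = 0$ this becomes

$$\frac{\partial}{\partial \epsilon} \left| \frac{\partial \mathbf{x}}{\partial s} \right| \bigg|_{\epsilon = 0} = \mathbf{x}' \cdot \mathbf{y}'.$$

Now we substitute this into the expression for $L(\epsilon)$; interchanging the integral wrt. $s$ and the derivative wrt. $\epsilon$ is alright to do. Integrating by parts, we get

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = \int_a^b \mathbf{x}' \cdot \mathbf{y}'\, \mathrm{d}s = \Big[ \mathbf{x}' \cdot \mathbf{y} \Big]_a^b - \int_a^b \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s = -\int_a^b \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s,$$

since $\mathbf{y}(a) = \mathbf{y}(b) = 0$ by definition of connecting vectors. The final integral vanishes for all connecting vectors if and only if $\mathbf{x}'' = 0$. This is equivalent to saying that $\mathbf{x}$ is linear in $s$ and thus is a straight line, concluding the first part of our proof.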

Now, for the second part, we suppose that $\mathbf{x}$ has stationary length.

We again perform exactly the same computation and end up with the same integral as we got previously (since we did not use any of our assumptions until the very end), i.e.

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = -\int_a^b \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s.$$

And since $\mathbf{x}$ is assumed to have stationary length,

$$\int_a^b \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s = 0 \quad \text{for all connecting vectors } \mathbf{y},$$

which is true if and only if $\mathbf{x}'' = 0$. Hence, by the same argument as above, $\mathbf{x}$ is the straight line between the two points $\mathbf{p}$ and $\mathbf{q}$.

Notice the "calculus of variations" spirit of the proof! Marvelous, innit?!
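The first variation can also be estimated numerically. This minimal sketch assumes curves on $[0, 1]$ and the simple family $\mathbf{x}(s) + \epsilon\, \mathbf{y}(s)$. It approximates lengths by inscribed polygons and $dL/d\epsilon$ at $\epsilon = 0$ by a central difference.

The two hypothetical test cases are a straight segment and a unit-speed circular arc. Each uses a connecting vector perpendicular to $\mathbf{x}'$ that vanishes at both endpoints. For the arc, $-\int_0^1 \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s = \int_0^1 \sin(\pi s)\, \mathrm{d}s = 2/\pi$.

```python
import math


def length(curve, n=4000):
    """Euclidean length of curve(s), s in [0, 1], approximated by an inscribed polygon."""
    pts = [curve(k / n) for k in range(n + 1)]
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))


def dL_deps(x, y, h=1e-4):
    """Central difference at eps = 0 of L(eps) for the nearby curves x(s) + eps * y(s)."""
    def family(eps):
        return lambda s: tuple(a + eps * b for a, b in zip(x(s), y(s)))
    return (length(family(h)) - length(family(-h))) / (2 * h)


# Straight line, with a connecting vector perpendicular to it and vanishing at the ends.
line = lambda s: (s, 0.0)
bump_normal = lambda s: (0.0, math.sin(math.pi * s))

# Unit-speed circular arc, with a radial connecting vector vanishing at the ends.
arc = lambda s: (math.cos(s), math.sin(s))
bump_radial = lambda s: (math.sin(math.pi * s) * math.cos(s), math.sin(math.pi * s) * math.sin(s))
```

`dL_deps(line, bump_normal)` should be essentially zero. `dL_deps(arc, bump_radial)` should be close to $2/\pi \approx 0.6366$, so the arc does not have stationary length.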

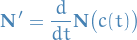

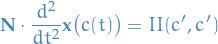

Geodesics on surfaces

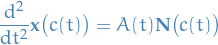

A unit-speed curve $\mathbf{x}(s)$ lying in a surface is a geodesic if its acceleration is everywhere normal to the surface, that is,

$$\mathbf{x}''(s) = \lambda(s)\, \mathbf{n}\big(\mathbf{x}(s)\big),$$

where $\mathbf{n}$ is the unit normal to the surface and $\lambda$ is some function along the curve.

This means that for a geodesic the acceleration in the direction tangent to the surface vanishes thus generalising the concept of a straight line in a plane.

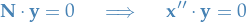

You can see this by looking at the proof that a curve in Euclidean space has stationary length if and only if it is the straight line. In the final integral we have a dot-product between $\mathbf{x}''$ and $\mathbf{y}$,

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = -\int_a^b \mathbf{x}'' \cdot \mathbf{y}\, \mathrm{d}s.$$

But all the nearby curves $\mathbf{x}(s, \epsilon)$ in the definition of a connecting vector also lie on the surface. Hence $\mathbf{y}$ is tangent to the surface and cannot have a component in the direction perpendicular to the surface. The same holds for $\mathbf{x}'$, since the curve itself lies on the surface. Thus only the tangential part $(\mathbf{x}'')^{\top} = \mathbf{x}'' - (\mathbf{x}'' \cdot \mathbf{n})\, \mathbf{n}$ of the acceleration contributes:

$$\frac{dL}{d\epsilon}\bigg|_{\epsilon = 0} = -\int_a^b (\mathbf{x}'')^{\top} \cdot \mathbf{y}\, \mathrm{d}s,$$

finally implying that the length is stationary if and only if $(\mathbf{x}'')^{\top} = 0$, i.e. $\mathbf{x}'' = \lambda\, \mathbf{n}$.

A curve lying in a surface has stationary length (among nearby curves on the surface joining the same endpoints) if and only if it's a geodesic.
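The geodesic condition $(\mathbf{x}'')^{\top} = 0$ is easy to test numerically on the unit sphere, whose outward unit normal at $\mathbf{x}$ is $\mathbf{x}$ itself. The sketch below uses two hypothetical unit-speed curves: a great circle and the circle of latitude at height $z_0$. $\mathbf{x}''$ is approximated by a second central difference. For the latitude circle, the tangential acceleration has length $z_0 / \sqrt{1 - z_0^2}$.

```python
import math


def second_derivative(curve, s, h=1e-3):
    """Central-difference approximation of x''(s)."""
    a, b, c = curve(s + h), curve(s), curve(s - h)
    return tuple((p - 2 * q + r) / (h * h) for p, q, r in zip(a, b, c))


def tangential_acceleration(curve, s):
    """(x'')^T = x'' - (x'' . n) n on the unit sphere, where n = x (outward normal)."""
    n = curve(s)
    acc = second_derivative(curve, s)
    lam = sum(p * q for p, q in zip(acc, n))
    return tuple(p - lam * q for p, q in zip(acc, n))


great_circle = lambda s: (math.cos(s), math.sin(s), 0.0)


def latitude_circle(z0):
    """Unit-speed circle of latitude at height z0 on the unit sphere."""
    r = math.sqrt(1 - z0 * z0)
    return lambda s: (r * math.cos(s / r), r * math.sin(s / r), z0)
```

The great circle gives a tangential acceleration of essentially zero, so it is a geodesic. `latitude_circle(0.5)` gives a vector of length about $1/\sqrt{3}$, so it is not a geodesic.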

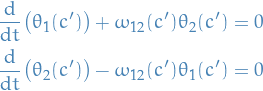

A curve $\mathbf{x}(s)$ lying in a surface is a geodesic if and only if, in an adapted moving frame, it obeys the geodesic equations

and the energy equation

Given a point $\mathbf{p}$ on a surface and a unit tangent vector $\mathbf{v}$ to the surface at $\mathbf{p}$, there exists a unique geodesic $\mathbf{x}(s)$ on the surface, defined for $|s| < \epsilon$ (with $\epsilon > 0$ sufficiently small), such that $\mathbf{x}(0) = \mathbf{p}$ and $\mathbf{x}'(0) = \mathbf{v}$.

The geodesic equations only depend on the first fundamental form of a surface. Hence they are part of the intrinsic geometry of a surface, and isometric surfaces have the same geodesics!

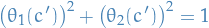

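Two-dimensional hyperbolic space is the upper half plane

$$H = \{ (x, y) \in \mathbb{R}^2 : y > 0 \}$$

equipped with the first fundamental form given by

$$\mathrm{I} = \frac{\mathrm{d}x^2 + \mathrm{d}y^2}{y^2}.$$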
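Since lengths only depend on $\mathrm{I}$, they can be computed directly in the upper half plane. This minimal sketch assumes $\mathrm{I} = (\mathrm{d}x^2 + \mathrm{d}y^2)/y^2$. It computes $\int \sqrt{\mathrm{I}(\gamma', \gamma')}\, \mathrm{d}t$ with the midpoint rule, approximating the velocity by central differences. The paths are hypothetical examples: a vertical segment, and two paths joining $(-1, 1)$ to $(1, 1)$.

```python
import math


def hyperbolic_length(path, t0, t1, n=20000, h=1e-6):
    """Length of path(t) = (x(t), y(t)), t in [t0, t1], w.r.t. I = (dx^2 + dy^2) / y^2."""
    dt = (t1 - t0) / n
    total = 0.0
    for k in range(n):
        t = t0 + (k + 0.5) * dt
        (xa, ya), (xb, yb) = path(t - h), path(t + h)
        euclidean_speed = math.hypot(xb - xa, yb - ya) / (2 * h)
        total += euclidean_speed / path(t)[1] * dt
    return total


vertical = lambda t: (0.0, t)                                             # (0, 1) to (0, 3)
horizontal = lambda t: (t, 1.0)                                           # (-1, 1) to (1, 1)
arc = lambda t: (math.sqrt(2) * math.cos(t), math.sqrt(2) * math.sin(t))  # same endpoints
```

By hand, `hyperbolic_length(vertical, 1, 3)` equals $\ln 3$ and `hyperbolic_length(horizontal, -1, 1)` equals $2$. `hyperbolic_length(arc, math.pi / 4, 3 * math.pi / 4)` equals $2 \ln(1 + \sqrt{2}) \approx 1.763$. So the Euclidean straight segment is not the shortest path in this geometry.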

Integration over surfaces

Notation

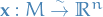

- $x: U \to \mathbb{R}^3$ defines a local map, where we drop the bold-face notation since we no longer use the Euclidean structure
- $x^*\omega$ denotes the pull-back of $\omega$ by the map $x$

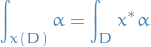

Integration of 2-forms over surfaces

Let $x: U \to \mathbb{R}^3$ define a local surface.

Note we do not write the map defining the surface in bold here, to emphasise we are not going to use the Euclidean structure.

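Let

$$\omega = \sum_{i < j} f_{ij}\, \mathrm{d}x^i \wedge \mathrm{d}x^j$$

be a 2-form on $\mathbb{R}^3$. We define the pull-back $x^*\omega$ of $\omega$ by the map $x$ to be the 2-form on $U$ given by

$$x^*\omega = \sum_{i < j} \big(f_{ij} \circ x\big)\, \mathrm{d}x^i \wedge \mathrm{d}x^j.$$

IMPORTANT: here $\mathrm{d}x^i$ is the exterior derivative of the component function $x^i: U \to \mathbb{R}$ of the map $x$, i.e.

$$\mathrm{d}x^i = \frac{\partial x^i}{\partial u}\, \mathrm{d}u + \frac{\partial x^i}{\partial v}\, \mathrm{d}v.$$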

Let $x: U \to \mathbb{R}^3$ be a local surface and let $\omega$ be a 2-form on $\mathbb{R}^3$. We define the integral of $\omega$ over the local surface to be

$$\int_x \omega = \int_U x^*\omega.$$

So, we're defining the integral of the 2-form $\omega$ over the map $x$ as the integral of the pull-back $x^*\omega$ over the domain $U$.

Why is this useful? It's useful because we can integrate some 2-form in the "target" manifold $\mathbb{R}^3$ over the "input" domain $U$.

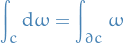

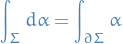

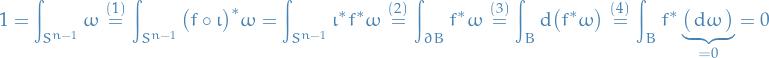
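As a concrete instance of $\int_x \omega = \int_U x^*\omega$, here is a minimal sketch. It assumes the hypothetical 2-form $\omega = x^3\, \mathrm{d}x^1 \wedge \mathrm{d}x^2$ on $\mathbb{R}^3$ and the upper unit hemisphere $x(u, v) = (\sin u \cos v, \sin u \sin v, \cos u)$ with $U = (0, \pi/2) \times (0, 2\pi)$. The pull-back coefficient uses central differences, and the integral over $U$ uses the midpoint rule. By hand, $x^*\omega = \sin u \cos^2 u\, \mathrm{d}u \wedge \mathrm{d}v$, whose integral is $2\pi/3$.

```python
import math


def hemisphere(u, v):
    """Hypothetical local surface x(u, v): upper unit hemisphere."""
    return (math.sin(u) * math.cos(v), math.sin(u) * math.sin(v), math.cos(u))


def pullback_coefficient(x, u, v, h=1e-6):
    """g(u, v) such that x^*(x^3 dx^1 ^ dx^2) = g du ^ dv.

    dx^i pulls back to x^i_u du + x^i_v dv, so dx^1 ^ dx^2 pulls back to
    (x^1_u x^2_v - x^1_v x^2_u) du ^ dv.
    """
    x_u = [(p - q) / (2 * h) for p, q in zip(x(u + h, v), x(u - h, v))]
    x_v = [(p - q) / (2 * h) for p, q in zip(x(u, v + h), x(u, v - h))]
    f = x(u, v)[2]  # coefficient function x^3, evaluated at x(u, v)
    return f * (x_u[0] * x_v[1] - x_v[0] * x_u[1])


def integrate_over_surface(x, coeff, u_range, v_range, n=200):
    """Integral over the rectangle U of x^* omega = coeff du ^ dv (midpoint rule)."""
    (u0, u1), (v0, v1) = u_range, v_range
    du, dv = (u1 - u0) / n, (v1 - v0) / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += coeff(x, u0 + (i + 0.5) * du, v0 + (j + 0.5) * dv)
    return total * du * dv
```

Calling `integrate_over_surface(hemisphere, pullback_coefficient, (0.0, math.pi / 2), (0.0, 2 * math.pi))` should return approximately $2\pi/3 \approx 2.094$.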

Let $M$ be a $k$-dimensional oriented, closed and bounded submanifold in $\mathbb{R}^n$, with boundary $\partial M$ given the induced orientation. Let $\omega$ be a $(k-1)$-form on $\mathbb{R}^n$. Then

$$\int_M \mathrm{d}\omega = \int_{\partial M} \omega.$$

The Stokes' and divergence theorems of vector calculus are the $k = 2$ and $k = 3$ special cases (with $n = 3$), respectively.

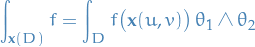
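A quick check of $\int_M \mathrm{d}\omega = \int_{\partial M} \omega$ in the simplest case, $k = n = 2$ (Green's theorem). This sketch assumes the hypothetical 1-form $\omega = x\, \mathrm{d}y$ on the unit disk, so $\mathrm{d}\omega = \mathrm{d}x \wedge \mathrm{d}y$. Both sides are pulled back to parameter domains and computed with the midpoint rule. Both should equal $\pi$.

```python
import math


def boundary_integral(n=10000):
    """Integral of omega = x dy over the unit circle (cos t, sin t), counter-clockwise.

    The pull-back of x dy to [0, 2 pi] is cos(t) * cos(t) dt.
    """
    dt = 2 * math.pi / n
    return sum(math.cos((k + 0.5) * dt) ** 2 for k in range(n)) * dt


def interior_integral(n=10000):
    """Integral of d(omega) = dx ^ dy over the unit disk.

    Pulled back by the polar map (r, t) -> (r cos t, r sin t), dx ^ dy becomes r dr ^ dt.
    """
    dr = 1.0 / n
    return sum((k + 0.5) * dr for k in range(n)) * dr * 2 * math.pi
```

The counter-clockwise parametrisation of the circle is the orientation induced on $\partial M$ by the standard orientation of the disk.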

Integration of functions over surfaces

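For a local surface, we have

$$|\mathbf{x}_u \times \mathbf{x}_v| = \sqrt{EG - F^2}.$$

Hence, we obtain an alternate expression for the area

$$A = \int_U |\mathbf{x}_u \times \mathbf{x}_v|\, \mathrm{d}u\, \mathrm{d}v = \int_U \sqrt{EG - F^2}\, \mathrm{d}u\, \mathrm{d}v.$$

Thus the area depends only on $\mathrm{I}$, hence it's an intrinsic property of the surface.

For a local surface $\mathbf{x}$ with an adapted frame (with $\mathbf{e}_3 = \mathbf{x}_u \times \mathbf{x}_v / |\mathbf{x}_u \times \mathbf{x}_v|$),

$$\theta_1 \wedge \theta_2 = \sqrt{EG - F^2}\, \mathrm{d}u \wedge \mathrm{d}v.$$

Let $\mathbf{x}: U \to \mathbb{E}^3$ be a local surface and $f: U \to \mathbb{R}$ be a function.

Then the integral of $f$ over the surface is given by

$$\int_U f\, \theta_1 \wedge \theta_2.$$

In particular,

$$A = \int_U \theta_1 \wedge \theta_2$$

gives the area of the local surface. The 2-form $\theta_1 \wedge \theta_2$ is called the area form.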
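The intrinsic area formula $A = \int_U \sqrt{EG - F^2}\, \mathrm{d}u\, \mathrm{d}v$ can be evaluated directly. This is a minimal sketch assuming a hypothetical sphere of radius $R = 2$, parametrised by $u \in (0, \pi)$, $v \in (0, 2\pi)$. $E, F, G$ come from central-difference approximations of $\mathbf{x}_u, \mathbf{x}_v$, and the integral uses the midpoint rule. The exact answer is $4\pi R^2 = 16\pi$.

```python
import math


def sphere(u, v, R=2.0):
    """Hypothetical local surface: sphere of radius R, u in (0, pi), v in (0, 2 pi)."""
    return (R * math.sin(u) * math.cos(v), R * math.sin(u) * math.sin(v), R * math.cos(u))


def first_fundamental_form(x, u, v, h=1e-6):
    """(E, F, G) at (u, v) from central-difference approximations of x_u and x_v."""
    x_u = [(p - q) / (2 * h) for p, q in zip(x(u + h, v), x(u - h, v))]
    x_v = [(p - q) / (2 * h) for p, q in zip(x(u, v + h), x(u, v - h))]
    dot = lambda a, b: sum(p * q for p, q in zip(a, b))
    return dot(x_u, x_u), dot(x_u, x_v), dot(x_v, x_v)


def area(x, u_range, v_range, n=200):
    """A = integral over U of sqrt(EG - F^2) du dv (midpoint rule)."""
    (u0, u1), (v0, v1) = u_range, v_range
    du, dv = (u1 - u0) / n, (v1 - v0) / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            E, F, G = first_fundamental_form(x, u0 + (i + 0.5) * du, v0 + (j + 0.5) * dv)
            total += math.sqrt(max(E * G - F * F, 0.0))
    return total * du * dv
```

Only the inner products $E, F, G$ enter `area`, which mirrors the statement that area is intrinsic.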

Definitions

Words

- space-curves
  - curves in $\mathbb{E}^3$
- plane curves
  - curves in $\mathbb{E}^2$
- canonically
  - "independent of the choice"
- rigid motion / euclidean motion
  - motion which does not change the "structure", i.e. translation or rotation

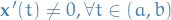

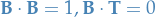

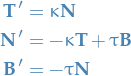

Regular curves

A curve $\mathbf{x}: I \to \mathbb{E}^3$ is regular if its velocity (or tangent) vector satisfies $\mathbf{x}'(t) \neq 0$ for all $t \in I$.

The tangent line to a regular curve $\mathbf{x}$ at $\mathbf{x}(t_0)$ is the line $\{ \mathbf{x}(t_0) + \lambda\, \mathbf{x}'(t_0) : \lambda \in \mathbb{R} \}$.

A unit-speed curve $\mathbf{x}(s)$ is biregular if $\kappa(s) \neq 0$ for all $s$, where $\kappa$ denotes the curvature.

(Note that a unit-speed curve is necessarily regular.)

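The principal normal along a unit-speed biregular curve $\mathbf{x}(s)$ is

$$\mathbf{n}(s) = \frac{\mathbf{t}'(s)}{\kappa(s)} = \frac{\mathbf{x}''(s)}{\kappa(s)}.$$

The binormal vector field along $\mathbf{x}$ is

$$\mathbf{b}(s) = \mathbf{t}(s) \times \mathbf{n}(s).$$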

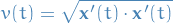

The norm of the velocity

$$v(t) = |\mathbf{x}'(t)|$$

is the speed of the curve at $\mathbf{x}(t)$.

A parametrisation $\mathbf{x}(s)$ of a regular curve s.t. $|\mathbf{x}'(s)| = 1$ for all $s$ is called a unit-speed parametrisation.

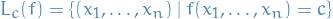

Level set

The level set of a real-valued function $f$ of $n$ variables is a set of the form

$$\{ (x_1, \dots, x_n) \in \mathbb{R}^n : f(x_1, \dots, x_n) = c \},$$

where $c \in \mathbb{R}$ is a constant.

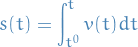

Arc-length

The arc-length of a regular curve $\mathbf{x}$ from $\mathbf{x}(t_0)$ to $\mathbf{x}(t)$ is

$$s(t) = \int_{t_0}^{t} |\mathbf{x}'(u)|\, \mathrm{d}u.$$

For a unit-speed parametrisation we have $s(t) = t - t_0$, hence it is also called an arc-length parametrisation.

As we can see in the notes, there's a theorem which says that every regular curve has a reparametrisation which is unit-speed.

In practice such reparametrisations are usually difficult to compute explicitly, so the theorem is mostly used as a theoretical tool.

Example: Helix

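The helix in $\mathbb{E}^3$ is defined by

$$\mathbf{x}(s) = \left( a \cos\frac{s}{c},\ a \sin\frac{s}{c},\ \frac{b s}{c} \right), \qquad c = \sqrt{a^2 + b^2},$$

which is an arc-length parametrisation.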

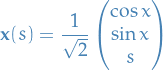

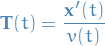

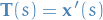
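A quick numerical check that the helix is parametrised by arc-length. This minimal sketch assumes the parameter values $a = 2$, $b = 1$ (hypothetical defaults). It approximates $s(t_1) - s(t_0)$ by the length of an inscribed polygon; for a unit-speed curve this should equal $t_1 - t_0$.

```python
import math


def helix(s, a=2.0, b=1.0):
    """Arc-length helix (a cos(s/c), a sin(s/c), b s / c) with c = sqrt(a^2 + b^2)."""
    c = math.hypot(a, b)
    return (a * math.cos(s / c), a * math.sin(s / c), b * s / c)


def arc_length(curve, t0, t1, n=10000):
    """Approximate the integral of |x'(u)| du from t0 to t1 by an inscribed polygon."""
    pts = [curve(t0 + (t1 - t0) * k / n) for k in range(n + 1)]
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))
```

For example, `arc_length(helix, 0.0, 5.0)` should be $5$ up to a tiny discretisation error, whatever $a$ and $b$ are.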

Curvature

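The unit tangent vector field along a regular curve $\mathbf{x}(t)$ is

$$\mathbf{t}(t) = \frac{\mathbf{x}'(t)}{|\mathbf{x}'(t)|}.$$

Thus, for a unit-speed curve $\mathbf{x}(s)$ it is simply $\mathbf{t}(s) = \mathbf{x}'(s)$.

For a unit-speed curve $\mathbf{x}(s)$ the curvature $\kappa(s)$ is defined by

$$\kappa(s) = |\mathbf{t}'(s)| = |\mathbf{x}''(s)|.$$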

Torsion

The torsion of a biregular unit-speed curve $\mathbf{x}(s)$ is defined by

$$\tau(s) = -\mathbf{b}'(s) \cdot \mathbf{n}(s),$$

or equivalently $\mathbf{b}'(s) = -\tau(s)\, \mathbf{n}(s)$.

The osculating plane at a point on a curve is the plane spanned by $\mathbf{t}$ and $\mathbf{n}$. The torsion measures how fast the curve is twisting out of this plane.
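The definitions of $\kappa$ and $\tau$ can be applied directly with finite differences. This is a minimal sketch assuming the helix with hypothetical parameters $a = 2$, $b = 1$, for which one expects $\kappa = a/(a^2 + b^2) = 0.4$ and $\tau = b/(a^2 + b^2) = 0.2$. It computes $\mathbf{t}$, $\kappa = |\mathbf{x}''|$, $\mathbf{n} = \mathbf{x}''/\kappa$ and $\mathbf{b} = \mathbf{t} \times \mathbf{n}$. It then uses $\tau = -\mathbf{b}' \cdot \mathbf{n}$, with $\mathbf{b}'$ from a central difference.

```python
import math


def helix(s, a=2.0, b=1.0):
    c = math.hypot(a, b)
    return (a * math.cos(s / c), a * math.sin(s / c), b * s / c)


def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0])


def dot(p, q):
    return sum(a * b for a, b in zip(p, q))


def frenet(curve, s, h=1e-3):
    """(t, kappa, n, b) at s for a unit-speed curve, using central differences."""
    xp, x0, xm = curve(s + h), curve(s), curve(s - h)
    t = [(p - m) / (2 * h) for p, m in zip(xp, xm)]
    speed = math.sqrt(dot(t, t))
    t = [comp / speed for comp in t]                                   # guard against tiny speed errors
    acc = [(p - 2 * q + m) / (h * h) for p, q, m in zip(xp, x0, xm)]  # x''(s)
    kappa = math.sqrt(dot(acc, acc))                                   # kappa = |x''|
    n = [comp / kappa for comp in acc]                                 # principal normal x'' / kappa
    return t, kappa, n, cross(t, n)                                    # binormal b = t x n


def curvature_and_torsion(curve, s, h=1e-2):
    """kappa(s) and tau(s) = -b'(s) . n(s), with b' from a central difference of b."""
    _, kappa, n, _ = frenet(curve, s)
    b_plus, b_minus = frenet(curve, s + h)[3], frenet(curve, s - h)[3]
    b_prime = [(p - m) / (2 * h) for p, m in zip(b_plus, b_minus)]
    return kappa, -dot(b_prime, n)
```

`curvature_and_torsion(helix, 1.0)` should be close to `(0.4, 0.2)`. Flipping the handedness with `lambda s: helix(s, 2.0, -1.0)` should flip the sign of the torsion.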

Isometry

An isometry of $\mathbb{E}^3$ is a map $F: \mathbb{E}^3 \to \mathbb{E}^3$ given by

$$F(\mathbf{x}) = A\mathbf{x} + \mathbf{b},$$

where $A$ is an orthogonal matrix and $\mathbf{b}$ is a fixed vector.

If $\det A = 1$, so that $A$ is a rotation matrix, then the isometry is said to be a Euclidean motion or a rigid motion.

If $\det A = -1$, the isometry is orientation-reversing.

In particular, an isometry preserves the Euclidean distance between two points: $|F(\mathbf{p}) - F(\mathbf{q})| = |\mathbf{p} - \mathbf{q}|$.

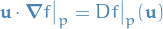

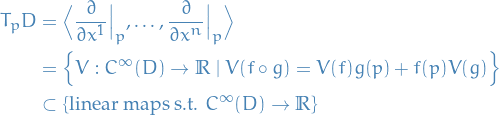
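Here is a minimal sketch of the definition. It applies $F(\mathbf{x}) = A\mathbf{x} + \mathbf{b}$ with a hypothetical rotation about the $z$-axis ($\det A = 1$) and a hypothetical reflection in the $xy$-plane ($\det A = -1$). It checks that distances are preserved in both cases.

```python
import math


def apply_isometry(A, b, p):
    """F(p) = A p + b for a 3x3 matrix A (list of rows) and a translation vector b."""
    return tuple(sum(A[i][j] * p[j] for j in range(3)) + b[i] for i in range(3))


def det3(A):
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


def rotation_z(angle):
    """Rotation about the z-axis: orthogonal with det = 1 (a rigid motion)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


REFLECTION_Z = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, -1.0]]  # orthogonal, det = -1


def distance_preserved(A, b, p, q, tol=1e-12):
    Fp, Fq = apply_isometry(A, b, p), apply_isometry(A, b, q)
    return abs(math.dist(Fp, Fq) - math.dist(p, q)) < tol
```

Both matrices preserve distances. Only `det3` tells the rigid motion apart from the orientation-reversing one.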

Tangent spaces

We define the tangent space $T_p\mathbb{R}^n$ to $\mathbb{R}^n$ at $p$ as the set of all derivative operators at $p$, called tangent vectors at $p$:

$$T_p\mathbb{R}^n = \left\{ \sum_{i=1}^n v^i \frac{\partial}{\partial x^i}\bigg|_p : v^i \in \mathbb{R} \right\},$$

and thus we have

$$v = v^i \frac{\partial}{\partial x^i}\bigg|_p$$

in the notation we love so much.

Vector fields are directional derivatives.

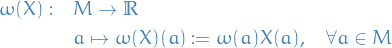

A vector field is defined by the tangent $\gamma_p'(0)$ at each point $p$, for all $p$ in the domain of the vector field, where $\gamma_p$ is a curve with $\gamma_p(0) = p$.

It's important to remember that these $\gamma_p$ are curves which are parametrised arbitrarily. They can therefore describe any potential curve, not just the coordinate curves you are "used" to seeing.

In words

- The tangent space of a manifold facilitates the generalization of vectors from affine spaces to general manifolds

Tangent vector

There are different ways to view a tangent vector:

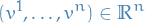

Physicist's view

Basically considers the tangent vector as a directional derivative

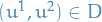

A tangent vector to $M$ at $p$ is determined by an $n$-tuple

$$(v^1, \dots, v^n) \in \mathbb{R}^n$$

for each choice of coordinates $(x^1, \dots, x^n)$ at $p$, such that, if $(\tilde{x}^1, \dots, \tilde{x}^n)$ is another set of coordinates, we have

$$\tilde{v}^j = \frac{\partial \tilde{x}^j}{\partial x^i}(p)\, v^i.$$

In your "normal" vector spaces we're used to thinking about direction and derivatives as two different concepts (which they are) which can exist independently of each other.

Now, in differential geometry, we only consider these concepts together! That is, the direction is defined by the basis which the tangent vectors ("derivative" operators) define.

"Geometric" view

This is a more "intuitive" way of looking at tangent vectors, which directly generalises the concept used in Euclidean space.

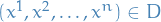

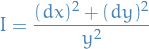

A (regular) curve in $\mathbb{R}^n$ is a (smooth) map $\gamma: (a, b) \to \mathbb{R}^n$, given by

$$\gamma(t) = \big(x^1(t), \dots, x^n(t)\big),$$

where each $x^i$ is a smooth function, such that its velocity

$$\gamma'(t) = \big(\dot{x}^1(t), \dots, \dot{x}^n(t)\big)$$

is non-vanishing, $\gamma'(t) \neq 0$ (as an element of $\mathbb{R}^n$), for all $t \in (a, b)$. We say that a curve $\gamma$ passes through $p$ if, say, $\gamma(0) = p$ (without loss of generality one can always take the parameter value at $p$ to be 0).

$\gamma: (a, b) \to \mathbb{R}^n$ means a map from the open interval $(a, b)$ to $\mathbb{R}^n$, NOT a map which "takes two arguments", duh…

Let $\gamma$ be a curve that passes through $p$. There exists a unique $v \in T_p\mathbb{R}^n$ such that for any smooth function $f$,

$$v(f) = \frac{d}{dt} f\big(\gamma(t)\big)\bigg|_{t=0}.$$

There is a one-to-one correspondence between velocities of curves that pass through $p$ and tangent vectors in $T_p\mathbb{R}^n$. By (standard) abuse of notation, we sometimes denote $v$ by the corresponding velocity $\gamma'(0)$.

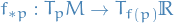
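The tangent vector of a curve can be viewed as an operator on functions, $v(f) = \frac{d}{dt} f(\gamma(t))|_{t=0}$. This minimal sketch assumes a hypothetical curve $\gamma(t) = (\cos t, \sin 2t)$ through $p = (1, 0)$ with $\gamma'(0) = (0, 2)$, and hypothetical test functions $f$ and $g$. It approximates $v(f)$ by a central difference, then compares it with $\gamma'^i(0)\, \partial_i f(p)$ and with the Leibniz rule.

```python
import math


def gamma(t):
    """Hypothetical curve through p = gamma(0) = (1, 0) with velocity gamma'(0) = (0, 2)."""
    return (math.cos(t), math.sin(2 * t))


def v(f, h=1e-6):
    """The tangent vector of gamma at p acting on f: d/dt f(gamma(t)) at t = 0."""
    return (f(*gamma(h)) - f(*gamma(-h))) / (2 * h)


f = lambda x, y: x * x + y               # df/dx = 2x, df/dy = 1
g = lambda x, y: math.exp(x) * y + 1.0   # dg/dx = e^x y, dg/dy = e^x
fg = lambda x, y: f(x, y) * g(x, y)
p = gamma(0.0)
```

Here `v(f)` should be close to $0 \cdot 2 + 2 \cdot 1 = 2$. `v(fg)` should agree with `v(f) * g(*p) + f(*p) * v(g)`, as a derivative operator must.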

Tangent vector of smooth curves

This approach is quite similar to the geometric view of tangent vectors described above, but I prefer this one.

As of right now, you should have a look at the section about Tangent space and manifolds, as I'm not entirely sure whether or not this can be confusing together with the different notation and all. Nonetheless, the other section is more interesting as it's talking about tangent vectors and general manifolds rather than the more "specific" cases we've been looking at above.

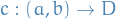

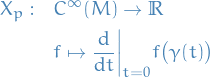

Let $\gamma: \mathbb{R} \to M$ be a smooth curve and $\gamma(0) = p$ (wlog).

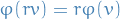

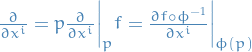

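The tangent vector to the curve $\gamma$ at $p$ is a linear map

$$v_{\gamma, p}: C^\infty(M) \to \mathbb{R}, \qquad f \mapsto (f \circ \gamma)'(0),$$

where, in a chart,

$$(f \circ \gamma)'(0) = \partial_i \big(f \circ x^{-1}\big)\big(x(p)\big)\, \big(x^i \circ \gamma\big)'(0),$$

where $x: U \to \mathbb{R}^n$ is a chart map.

We often denote $v_{\gamma, p}$ by $\dot{\gamma}_p$.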

.

Tangent as the dual-space of the cotangent space

This section introduces the tangent space as the dual of the cotangent space. Furthermore, we construct the cotangent space in quite an "axiomatic" manner: defining the cotangent space as a quotient space of real-valued smooth functions on the manifold $M$. It is almost an exact duplicate of the lecture notes provided by Prof. José Miguel Figueroa-O'Farrill in the course Differentiable Manifolds taught at University of Edinburgh in 2019.

- Notation

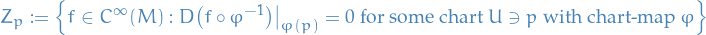

$Z_p$: zero-derivative vector subspace

- Stuff

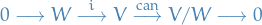

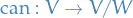

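The cotangent space at some point $p \in M$ is the quotient vector space

$$T_p^*M = C^\infty(M) / Z_p,$$

where

$$Z_p = \left\{ f \in C^\infty(M) : D\big(f \circ \varphi^{-1}\big)\big(\varphi(p)\big) = 0 \text{ for some chart } (U, \varphi) \text{ around } p \right\},$$

i.e. all those functions which have vanishing derivative when composed with the inverse of some chart map $\varphi$.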

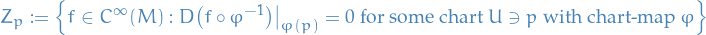

The derivative of $f \in C^\infty(M)$ at $p$ is the image of $f$ under the surjective linear map

$$\mathrm{d}_p: C^\infty(M) \to T_p^*M, \qquad f \mapsto \mathrm{d}f_p = f + Z_p,$$

which is simply the canonical map from the original space $C^\infty(M)$ to the quotient space $T_p^*M$.

Observe that $f \circ \varphi^{-1}$ is a smooth function on the open subset $\varphi(U) \subseteq \mathbb{R}^n$, and so we can indeed take the derivative.

$Z_p$, as defined in the definition of the cotangent space, forms a vector subspace of $C^\infty(M)$.

If $f$ is a smooth function in a neighborhood $V$ of $p$, we can multiply $f$ by some bump function $\chi$ (supported in $V$ and identically $1$ near $p$) to construct $\chi f \in C^\infty(M)$.

For any choice of bump function $\chi$, $\chi f$ agrees with $f$ in some neighborhood of $p$. Therefore its derivative at $p$ is independent of the bump function chosen. Thus we can define the derivative at $p$ of functions which are only defined in a neighborhood of $p$, e.g. the coordinate functions!

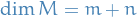

Let $M$ be an $n$-dimensional manifold. Then $T_p^*M$ is an $n$-dimensional vector space for every $p \in M$.

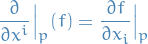

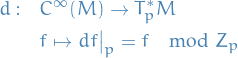

- If $(U, \varphi)$ is a coordinate chart around $p$ with local coordinates $x^1, \dots, x^n$, then $\mathrm{d}x^1_p, \dots, \mathrm{d}x^n_p$ are a basis for $T_p^*M$. If $f \in C^\infty(M)$, then

$$\mathrm{d}f_p = \sum_{i=1}^n \frac{\partial (f \circ \varphi^{-1})}{\partial u^i}\big(\varphi(p)\big)\, \mathrm{d}x^i_p.$$

If $f \in C^\infty(M)$, then letting $c_i = \frac{\partial (f \circ \varphi^{-1})}{\partial u^i}\big(\varphi(p)\big)$ means that

$$g = f - \sum_{i=1}^n c_i\, x^i$$

is a (locally defined) smooth function whose derivative vanishes at $p$. This is seen by considering the composition with $\varphi^{-1}$:

$$g \circ \varphi^{-1} = f \circ \varphi^{-1} - \sum_{i=1}^n c_i\, u^i,$$

where $(u^1, \dots, u^n)$ denotes the Euclidean coordinates, i.e. $x^i = u^i \circ \varphi$. This implies that

$$\frac{\partial (g \circ \varphi^{-1})}{\partial u^j}\big(\varphi(p)\big) = c_j - c_j = 0$$

(since $\partial u^i / \partial u^j = \delta^i_j$).

Therefore, $\mathrm{d}g_p = 0$, i.e.

$$\mathrm{d}f_p = \sum_{i=1}^n c_i\, \mathrm{d}x^i_p,$$

and hence $\mathrm{d}x^1_p, \dots, \mathrm{d}x^n_p$ span $T_p^*M$.

Now we just need to show that $\mathrm{d}x^1_p, \dots, \mathrm{d}x^n_p$ are also linearly independent. Suppose

$$\sum_{i=1}^n a_i\, \mathrm{d}x^i_p = 0.$$

Then the function $h = \sum_i a_i x^i$ has vanishing derivative at $p$, and so $h \circ \varphi^{-1} = \sum_i a_i u^i$ has vanishing derivative at $\varphi(p)$. But $\sum_i a_i u^i$ is a linear function, so its derivative at any point vanishes if and only if it is the zero function. Therefore $a_i = 0$ for all $i$. So $\mathrm{d}x^1_p, \dots, \mathrm{d}x^n_p$ are also linearly independent, and hence form a basis of $T_p^*M$.

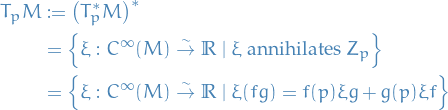

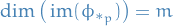

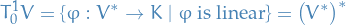

The tangent space $T_pM$ at $p$ is the dual of the cotangent vector space: $T_pM = \big(T_p^*M\big)^*$.

This is reasonable for finite-dimensional spaces, since in these cases

$$(V^*)^* \cong V$$

for a vector space $V$, i.e. the dual of the dual is (canonically isomorphic to) the original vector space.

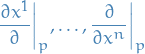

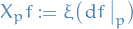

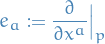

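If $(x^1, \dots, x^n)$ are the local coordinates at $p$ and $\{\mathrm{d}x^1_p, \dots, \mathrm{d}x^n_p\}$ is a basis of $T_p^*M$, the canonical (dual) basis for $T_pM$ is denoted

$$\left\{ \frac{\partial}{\partial x^1}\bigg|_p, \dots, \frac{\partial}{\partial x^n}\bigg|_p \right\}, \qquad \text{with} \quad \mathrm{d}x^i_p\left( \frac{\partial}{\partial x^j}\bigg|_p \right) = \delta^i_j.$$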

To relate the tangent space to a more intuitive notion, we introduce the directional derivative.

A directional derivative at $p$ is a linear map

$$v: C^\infty(M) \to \mathbb{R}$$

s.t.

$$v(fg) = f(p)\, v(g) + g(p)\, v(f) \qquad \text{(Leibniz rule)}.$$

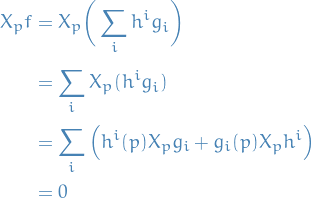

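Observe that if $v \in T_pM$, it defines a linear map

$$C^\infty(M) \to \mathbb{R}, \qquad f \mapsto v(\mathrm{d}f_p),$$

and from the formula $\mathrm{d}(fg)_p = f(p)\, \mathrm{d}g_p + g(p)\, \mathrm{d}f_p$,

$$v\big(\mathrm{d}(fg)_p\big) = f(p)\, v(\mathrm{d}g_p) + g(p)\, v(\mathrm{d}f_p).$$

Therefore $f \mapsto v(\mathrm{d}f_p)$ is a directional derivative at $p$.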

.

All tangent vectors are of this form.

An example of a directional derivative is the following. Let $f \in C^\infty(M)$. Then for any tangent direction $\gamma'(0)$ to $M$ at $p = \gamma(0)$, we can define the derivative of $f$ at $p$ along $\gamma'(0)$ to be the real number

$$\frac{d}{dt} f\big(\gamma(t)\big)\bigg|_{t=0}.$$

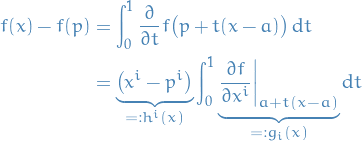

Let $v$ be a directional derivative at $p$ and let $f \in Z_p$. Then

$$v(f) = 0.$$

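Use a coordinate chart $(U, \varphi)$ near $p$ with local coordinates $x^i$ and $\varphi(p) = 0$, shrinking $U$ so that $\varphi(U)$ is a ball. By the FTC,

$$f = f(p) + \sum_{i=1}^n x^i g_i, \qquad g_i = \left( \int_0^1 \frac{\partial (f \circ \varphi^{-1})}{\partial u^i}(t\, \cdot)\, \mathrm{d}t \right) \circ \varphi.$$

Using a bump function we can extend $x^i$ and $g_i$ from a neighborhood of $p$ to $M$.

Notice that

$$g_i(p) = \frac{\partial (f \circ \varphi^{-1})}{\partial u^i}(0),$$

and if $f \in Z_p$ then $g_i(p) = 0$ as well. Therefore

$$v(f) = v\big(f(p)\big) + \sum_{i=1}^n v\big(x^i g_i\big).$$

By the Leibniz rule, $v(1) = v(1 \cdot 1) = 2\, v(1)$, so $v(1) = 0$, and by linearity, $v\big(f(p)\big) = f(p)\, v(1) = 0$. Therefore, using the Leibniz rule once more,

$$v(f) = \sum_{i=1}^n \Big( v(x^i)\, g_i(p) + x^i(p)\, v(g_i) \Big).$$

Therefore, since $g_i(p) = 0$ and $x^i(p) = 0$,

$$v(f) = 0.$$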

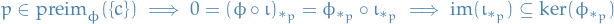

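So $v$ kills $Z_p$ and descends to a linear map $T_p^*M \to \mathbb{R}$, i.e. $v \in \big(T_p^*M\big)^* = T_pM$.

Therefore, as a result of the above lemma, we get a one-to-one correspondence between tangent vectors at $p$ and directional derivatives at $p$, and we can also write $v(f)$ for $v(\mathrm{d}f_p)$.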

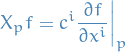

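Relative to local coordinates,

$$v = \sum_{i=1}^n v(x^i)\, \frac{\partial}{\partial x^i}\bigg|_p$$

and

$$\frac{\partial}{\partial x^i}\bigg|_p (f) = \frac{\partial (f \circ \varphi^{-1})}{\partial u^i}\big(\varphi(p)\big).$$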

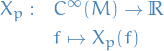

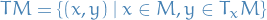

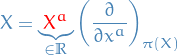

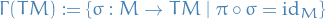

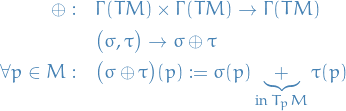

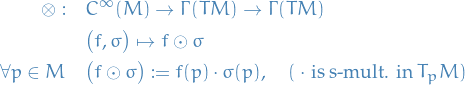

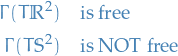

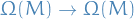

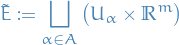

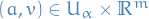

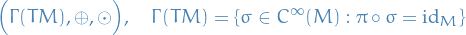

Tangent bundle

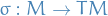

The tangent bundle of a differentiable manifold $M$ is a manifold $TM$, which assembles all the tangent vectors in $M$. As a set it's given by the disjoint union of the tangent spaces of $M$, i.e.

$$TM = \bigsqcup_{p \in M} T_pM = \bigcup_{p \in M} \{p\} \times T_pM.$$

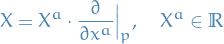

Thus, an element in $TM$ can be thought of as a pair $(p, v)$, where $p$ is a point in the manifold $M$ and $v$ is a tangent vector to $M$ at the point $p$.

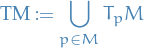

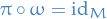

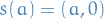

Let $M$ be a smooth manifold. Then the tangent bundle is the set

$$TM = \bigsqcup_{p \in M} T_pM,$$

and further we define the bundle projection:

$$\pi: TM \to M, \qquad X \mapsto p,$$

where $p$ is the point for which $X \in T_pM$. This gives us a set bundle; now we just have to show that the fibres are indeed isomorphic, and thus we've obtained a fibre bundle.

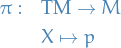

Idea: construct a smooth atlas on $TM$ from a given smooth atlas $\mathcal{A}$ on $M$.

- Take some chart $(U, x) \in \mathcal{A}$

Construct

$$\big(TU, \xi_x\big), \qquad TU = \pi^{-1}(U),$$

where we define

$$\xi_x: TU \to \mathbb{R}^{2n}$$

as

$$\xi_x(X) = \Big( (x^1 \circ \pi)(X), \dots, (x^n \circ \pi)(X),\ (\mathrm{d}x^1)_{\pi(X)}(X), \dots, (\mathrm{d}x^n)_{\pi(X)}(X) \Big),$$

where

- First $n$ coordinates: we project the tangent $X$ at some point $p$ onto the point itself, i.e. $\pi(X) = p$, and take its coordinates $x^i(p)$ (we don't write $p$ in the above because we can do this for any point in the manifold)
- Second $n$ coordinates: these account for the direction and magnitude of the tangent $X$, i.e. we choose the coefficients $X^i$ of $X = X^i \frac{\partial}{\partial x^i}\big|_p$ in the tangent space at that point!

Finally we need to ensure that this map $\xi_x$ is indeed smooth:

We start by considering the set of all smooth sections of $TM$, i.e.

$$\Gamma(TM) = \{ \chi: M \to TM \text{ smooth} : \pi \circ \chi = \mathrm{id}_M \},$$

equipped with the two operations, addition:

$$(\chi \oplus \tilde{\chi})(f) = \chi(f) + \tilde{\chi}(f)$$

and multiplication:

$$(g \odot \chi)(f) = g \cdot \chi(f), \qquad f, g \in C^\infty(M).$$

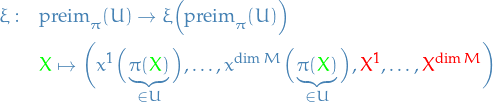

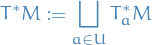

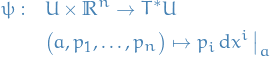

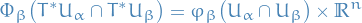

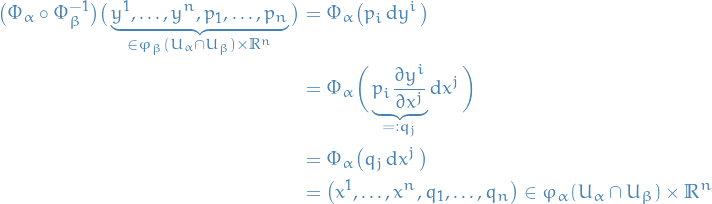

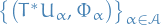

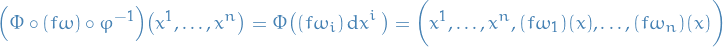

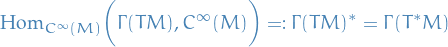

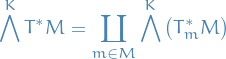

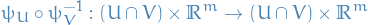

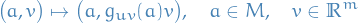

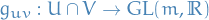

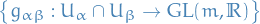

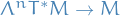

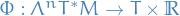

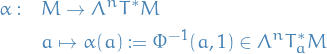

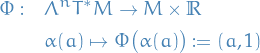

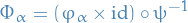

Cotangent bundle

Let $M$ be $n$-dimensional and let

$$T^*M = \bigsqcup_{p \in M} T_p^*M$$

denote the disjoint union of all the cotangent spaces of $M$.

If $(U, \varphi)$ is a chart of $M$ with local coordinates $x^i$, then the map

$$T^*U = \bigsqcup_{p \in U} T_p^*M \to \varphi(U) \times \mathbb{R}^n, \qquad \sum_{i=1}^n \xi_i\, \mathrm{d}x^i_p \mapsto \big(\varphi(p), \xi_1, \dots, \xi_n\big)$$

defines a bijection, and allows us to define

$$\tilde{\varphi}: T^*U \to \varphi(U) \times \mathbb{R}^n \subseteq \mathbb{R}^{2n}.$$

It then follows that $\tilde{\varphi}$ is a bijection from $T^*U$ to an open subset of $\mathbb{R}^{2n}$, hence $(T^*U, \tilde{\varphi})$ defines a chart of $T^*M$. In this way we can bring the charts of $M$ up to $T^*M$, thus $T^*M$ is a manifold.

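Since the charts $(U_\alpha, \phi_\alpha)$ of an atlas of $M$ cover $M$, we have that $\bigcup_\alpha T^*U_\alpha = T^*M$. Therefore we only need to check that the transition maps $\tilde\psi \circ \tilde\phi^{-1}$ are smooth, for any two charts $(U, \phi)$ and $(V, \psi)$ of $M$.

First observe that

$$\tilde\phi\big(T^*U \cap T^*V\big) = \phi(U \cap V) \times \mathbb{R}^n$$

which is an open subset of $\mathbb{R}^{2n}$. So the transition map is defined on an open set. To see smoothness, let $x^i$ be local coordinates of $\phi$ and $y^j$ be local coordinates of $\psi$. Then

$$\alpha = \sum_i \alpha_i \, dx^i = \sum_j \beta_j \, dy^j$$

and

$$dx^i = \sum_j \frac{\partial x^i}{\partial y^j} \, dy^j$$

so

$$\beta_j = \sum_i \alpha_i \frac{\partial x^i}{\partial y^j}.$$

Therefore

$$\tilde\psi \circ \tilde\phi^{-1}(x, \alpha_1, \dots, \alpha_n) = \big(\psi \circ \phi^{-1}(x), \beta_1, \dots, \beta_n\big).$$

Since $\psi \circ \phi^{-1}$ is a diffeomorphism, $\tilde\psi \circ \tilde\phi^{-1}$ is smooth in the first $n$ components. Furthermore, each $\beta_j$ is smooth, because derivatives of smooth functions are smooth and $\beta_j$ depends linearly on $(\alpha_1, \dots, \alpha_n)$.

Hence, $\tilde\psi \circ \tilde\phi^{-1}$ is smooth for all pairs of charts, and so $\{(T^*U_\alpha, \tilde\phi_\alpha)\}$ defines a smooth atlas for $T^*M$.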
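As a sanity check of the rule $\beta_j = \sum_i \alpha_i \, \partial x^i / \partial y^j$, here is a minimal Python sketch. It assumes a concrete example that is not in the notes: Cartesian coordinates $(x^1, x^2)$ and polar coordinates $(y^1, y^2) = (r, \theta)$ on (part of) $\mathbb{R}^2$. The function name `cartesian_to_polar_covector` is hypothetical. For $\alpha = dx^1$ the sketch should reproduce $dx^1 = \cos\theta \, dr - r \sin\theta \, d\theta$.

```python
import math


def cartesian_to_polar_covector(alpha, r, theta):
    """Transform covector components from Cartesian to polar coordinates.

    alpha = (alpha_1, alpha_2) are the components w.r.t. dx^1, dx^2, where
    x^1 = r cos(theta) and x^2 = r sin(theta). Returns (beta_r, beta_theta),
    using beta_j = sum_i alpha_i * dx^i/dy^j with (y^1, y^2) = (r, theta).
    """
    # J[i][j] = dx^i / dy^j (Jacobian of the map (r, theta) -> (x^1, x^2))
    J = [[math.cos(theta), -r * math.sin(theta)],
         [math.sin(theta), r * math.cos(theta)]]
    return tuple(sum(alpha[i] * J[i][j] for i in range(2)) for j in range(2))


# alpha = dx^1 at r = 2, theta = pi/2: expect (cos(pi/2), -2 sin(pi/2)), i.e. about (0, -2)
beta_r, beta_theta = cartesian_to_polar_covector((1.0, 0.0), 2.0, math.pi / 2)
print(beta_r, beta_theta)
```

The new components depend linearly on `alpha` and smoothly on the base point, which is exactly what the smoothness argument above uses.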

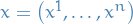

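Let

$$\pi : T^*M \to M, \quad (p, \alpha) \mapsto p,$$

i.e. in local coords we have $\pi(x, \alpha) = x$.

$\pi$ is smooth.

$\pi$ being "smooth" means that

$$\phi \circ \pi \circ \tilde\phi^{-1} : \phi(U) \times \mathbb{R}^n \to \phi(U)$$

is a smooth map.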

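Let $x^1, \dots, x^n$ be local coordinates of $\phi$. Then

$$\phi \circ \pi \circ \tilde\phi^{-1}(x, \alpha) = \phi\Big(\pi\Big(\phi^{-1}(x), \sum_i \alpha_i \, dx^i|_{\phi^{-1}(x)}\Big)\Big) = \phi\big(\phi^{-1}(x)\big)$$

since $\pi(p, \alpha) = p$. More concretely,

$$\phi \circ \pi \circ \tilde\phi^{-1}(x^1, \dots, x^n, \alpha_1, \dots, \alpha_n) = (x^1, \dots, x^n).$$

Hence, $\phi \circ \pi \circ \tilde\phi^{-1}$ is the projection onto the first $n$ coordinates. This is clearly smooth, since the coordinate projections $(x, \alpha) \mapsto x^i$ are all smooth maps.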

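$T^*M$ is Hausdorff and second countable.

Second-countability follows directly from the fact that $M$ is second-countable. $M$ then admits a countable atlas $\{(U_k, \phi_k)\}_{k \in \mathbb{N}}$, and each $T^*U_k$ is homeomorphic to the second-countable space $\phi_k(U_k) \times \mathbb{R}^n$. A countable union of second-countable open subsets is second-countable, hence $T^*M$ is second-countable.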

In what follows we are considering elements $(p, \alpha) \in T^*M$ as points, i.e. $\alpha \in T_p^*M$ for some $p \in M$.

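Let $(p, \alpha), (q, \beta) \in T^*M$ with $(p, \alpha) \neq (q, \beta)$. Then we have the following two cases:

- $p \neq q$: since $M$ is Hausdorff, there exist two open sets $U, V \subseteq M$ such that $p \in U$, $q \in V$ and $U \cap V = \emptyset$. Then $T^*U$ and $T^*V$ are disjoint open neighbourhoods of $(p, \alpha)$ and $(q, \beta)$, and so we're good.

- $p = q$: we have a chart $(U, \phi)$ with $p \in U$, and so $T^*U$ is homeomorphic (via $\tilde\phi$) to some open subset of $\mathbb{R}^{2n}$. $\mathbb{R}^{2n}$ is Hausdorff, therefore there exist disjoint open sets $A, B \subseteq \tilde\phi(T^*U)$ such that $\tilde\phi(p, \alpha) \in A$ and $\tilde\phi(q, \beta) \in B$. Their preimages $\tilde\phi^{-1}(A)$ and $\tilde\phi^{-1}(B)$ then separate the two points.

Hence $T^*M$ is also Hausdorff.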

In the above we are talking about open subsets of $T^*M$. As we have seen before, the chart $(U, \phi)$ induces the chart $(T^*U, \tilde\phi)$, so saying that a set $W \subseteq T^*M$ is open is equivalent to saying that $\tilde\phi(W \cap T^*U)$ is open in $\mathbb{R}^{2n}$ for every chart $(U, \phi)$. This in turn means that there exists some open $A \subseteq \mathbb{R}^{2n}$ such that $W \cap T^*U = \tilde\phi^{-1}(A)$. Therefore we can equivalently consider this open set $A$.

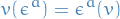

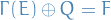

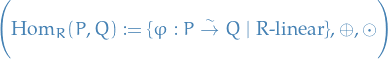

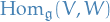

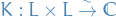

Dual space

Let $V$ be a vector space over a field $\mathbb{F}$. Then the dual space of $V$, denoted as $V^*$, is given by

$$V^* = \{ \varphi : V \to \mathbb{F} \mid \varphi \text{ is linear} \}.$$

Properties

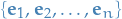

Dual Basis

Honestly, "automatically" is a bit weird. What is actually happening is as follows:

Suppose that we have a basis of $V$ given by the set of vectors $\{e_1, \dots, e_n\}$. Then we can construct a basis of the dual space $V^*$, called the dual basis. This dual basis is the set $\{e^1, \dots, e^n\}$ of linear functions / 1-forms on $V$, defined by the relation

$$e^i(c^1 e_1 + \dots + c^n e_n) = c^i, \quad i = 1, \dots, n,$$

for any choice of coefficients $c^i$ in the field we're working in (which is usually $\mathbb{R}$).

In particular, letting one of these coefficients be equal to 1 and the rest equal to zero, we get the following set of equations

$$e^i(e_j) = \delta^i_j,$$

which defines a basis.
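Concretely, in $\mathbb{R}^2$ (an assumption made to keep things small), put the basis vectors as the columns of a matrix $E$. The dual basis covectors are then the rows of $E^{-1}$, since $(E^{-1}E)_{ij} = e^i(e_j) = \delta^i_j$. Below is a minimal sketch using exact rational arithmetic; `dual_basis_2d` and `evaluate` are hypothetical names.

```python
from fractions import Fraction


def dual_basis_2d(e1, e2):
    """Dual basis {e^1, e^2} of a basis {e1, e2} of R^2 (hypothetical helper).

    With E = [e1 e2] (basis vectors as columns), the dual covectors are the
    rows of E^{-1}, because (E^{-1} E)_{ij} = e^i(e_j) = delta^i_j.
    """
    a, c = e1  # first column of E
    b, d = e2  # second column of E
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("e1 and e2 do not form a basis")
    # Rows of E^{-1} = (1/det) [[d, -b], [-c, a]]
    return (d / det, -b / det), (-c / det, a / det)


def evaluate(covector, vector):
    """Apply a covector (row of components) to a vector (column of components)."""
    return sum(f * v for f, v in zip(covector, vector))


basis = [(1, 1), (0, 2)]
dual = dual_basis_2d(*basis)
# Each row should read [1, 0] or [0, 1]: e^i(e_j) = delta^i_j
for ei in dual:
    print([evaluate(ei, ej) for ej in basis])
```

Applied to $v = 3e_1 + 5e_2$, the covector $e^i$ returns the coefficient $c^i$, exactly as in the defining relation.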

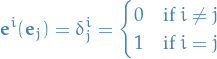

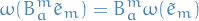

If $\{e_i\}$ is a basis for $V$, we automatically get a dual basis $\{e^i\}$ for $V^*$, defined by

$$e^i(e_j) = \delta^i_j.$$

If $\dim V < \infty$ (is finite), then

$$\dim V^* = \dim V.$$

Dual of the dual

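If $\dim V < \infty$, then $V^{**} \cong V$ canonically, via $v \mapsto \big(\varphi \mapsto \varphi(v)\big)$.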

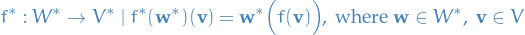

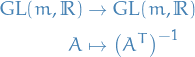

Map between duals

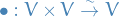

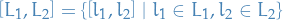

If $f : V \to W$ is a linear map between vector spaces, we canonically get a dual map:

$$f^* : W^* \to V^*, \quad f^*(\varphi) = \varphi \circ f.$$
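In components, suppose $f$ is represented by a matrix $A$ acting on column vectors; here $f : \mathbb{R}^3 \to \mathbb{R}^2$, an assumption for concreteness. Then $f^*$ is represented by the transpose $A^T$ acting on the components of $\varphi$. A small Python sketch with hypothetical helper names, checking $f^*(\varphi)(v) = \varphi(f(v))$:

```python
def apply_linear_map(A, v):
    """f(v) = A v for a matrix A given as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dual_map(A, phi):
    """Components of f^*(phi) = phi o f, where f(v) = A v.

    (f^* phi)_j = sum_i phi_i A[i][j], i.e. the transpose A^T acting on phi.
    """
    return [sum(phi[i] * A[i][j] for i in range(len(A))) for j in range(len(A[0]))]


def evaluate(covector, vector):
    """Apply a covector to a vector by pairing components."""
    return sum(f * x for f, x in zip(covector, vector))


A = [[1, 2, 0],
     [0, 1, 3]]   # f : R^3 -> R^2
phi = [4, -1]     # a covector on R^2
v = [1, 1, 1]
# Both sides equal phi(f(v)); note that f^* goes the "other way", (R^2)* -> (R^3)*
print(evaluate(phi, apply_linear_map(A, v)), evaluate(dual_map(A, phi), v))  # prints 8 8
```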

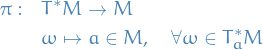

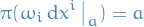

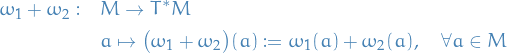

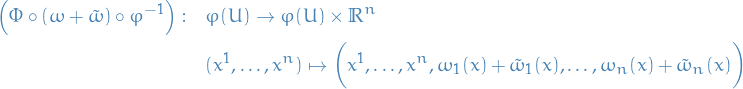

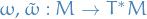

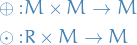

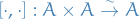

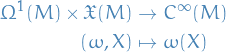

1-forms

Aight, so this is the proper definition of a one-form.

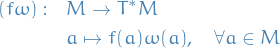

A (differential) one-form is a smooth section $\omega : M \to T^*M$ of the cotangent bundle, i.e. satisfying

$$\pi \circ \omega = \mathrm{id}_M.$$

We denote the space of one-forms as $\Omega^1(M)$.

$\Omega^1(M)$ is a $C^\infty(M)$-module.

Let $(U, \phi)$ be a chart of $M$ with local coordinates $x^1, \dots, x^n$, and let $(T^*U, \tilde\phi)$ be a chart of $T^*M$ as defined in def:cotangent-bundle. Define

$$(\omega + \eta)(p) = \omega(p) + \eta(p), \quad \omega, \eta \in \Omega^1(M).$$

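To see that $\omega + \eta \in \Omega^1(M)$ we need the map

$$\tilde\phi \circ (\omega + \eta) \circ \phi^{-1} : \phi(U) \to \phi(U) \times \mathbb{R}^n$$

to be smooth. Writing the map out explicitly, we have

$$\tilde\phi \circ (\omega + \eta) \circ \phi^{-1}(x) = \big(x, \omega_1(x) + \eta_1(x), \dots, \omega_n(x) + \eta_n(x)\big)$$

where $\omega = \sum_i \omega_i \, dx^i$, $\eta = \sum_i \eta_i \, dx^i$, and we write $\omega_i(x)$ for $\omega_i(\phi^{-1}(x))$. So each component is really just $\omega_i + \eta_i$, which is smooth by smoothness of $\omega_i$ and $\eta_i$.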

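Define

$$(f \omega)(p) = f(p) \, \omega(p), \quad f \in C^\infty(M), \ \omega \in \Omega^1(M).$$

Again we require smoothness of the corresponding composition with the charts:

$$\tilde\phi \circ (f \omega) \circ \phi^{-1}(x) = \big(x, f(x) \, \omega_1(x), \dots, f(x) \, \omega_n(x)\big)$$

which again is smooth since $f$ and $\omega$ are smooth, i.e. $f \in C^\infty(M)$ and $\omega$ is a smooth section.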

Hence, $\Omega^1(M)$ is closed under (scalar) multiplication by functions in $C^\infty(M)$ and under addition, i.e. defines a module.

: follows directly from the fact that both  and  are  , and  .

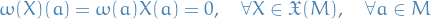

Non-degenerate: suppose  is non-zero and

Then either

or  for all  . The non-degeneracy for  follows by an almost identical argument.

[DEPRECATED] Old definition

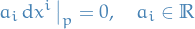

A 1-form at $p \in \mathbb{R}^n$ is a linear map $\alpha : T_p\mathbb{R}^n \to \mathbb{R}$. This means, for all $a, b \in \mathbb{R}$ and $X, Y \in T_p\mathbb{R}^n$,

$$\alpha(aX + bY) = a \, \alpha(X) + b \, \alpha(Y).$$

1-forms at $p$ are thus exactly the linear functionals on $T_p\mathbb{R}^n$.

The set of 1-forms at $p$, denoted by $T_p^*\mathbb{R}^n$, is called the dual vector space of $T_p\mathbb{R}^n$.

We define 1-forms $dx^i|_p$ at each $p$ by their action on the basis $\{\partial / \partial x^j|_p\}$:

$$dx^i|_p\left(\frac{\partial}{\partial x^j}\Big|_p\right) = \delta^i_j.$$

Or equivalently, $dx^i|_p$ are defined by their action on an arbitrary tangent vector $X = \sum_j X^j \, \partial / \partial x^j|_p$:

$$dx^i|_p(X) = X^i.$$

Differential 1-form

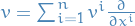

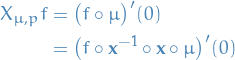

A differential 1-form on $D$ is a smooth map $\alpha$ which assigns to each $p \in D$ a 1-form in $T_p^*\mathbb{R}^n$; it can be written as

$$\alpha = \sum_{i=1}^n \alpha_i \, dx^i$$

where $\alpha_i : D \to \mathbb{R}$ are smooth functions.

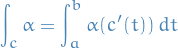

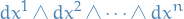

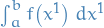

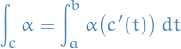

Line integrals

Let ![$c : [a, b] \to D$](../../assets/latex/geometry_5e5e47eb13f6fa87443f659e0904306b92113ff6.png) be a curve (the end points are included to ensure the integrals exist) and let $\alpha$ be a 1-form on $D$. The integral of $\alpha$ over the curve $c$ is

$$\int_c \alpha = \int_a^b \alpha_{c(t)}\big(\dot c(t)\big) \, dt$$

where $\dot c$ is the tangent vector field to the curve.

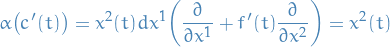

Working in coordinates, the result of applying the 1-form $\alpha = \sum_i \alpha_i \, dx^i$ to $\dot c$ gives the expression

$$\alpha_{c(t)}\big(\dot c(t)\big) = \sum_{i=1}^n \alpha_i\big(c(t)\big) \, \frac{dc^i}{dt}(t),$$

i.e. the derivative of $c^i$ wrt. $t$ times the evaluation of $\alpha_i$ at $c(t)$, where $\alpha_i(c(t))$ denotes the evaluation of $\alpha_i$ along $c$.
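The coordinate formula translates directly into a numerical approximation. Below is a minimal Python sketch. It assumes the 1-form is given by its component functions $\alpha_i$ and the curve by $c$ and $\dot c$; the names `line_integral`, `circle`, `circle_dot` and `alpha` are hypothetical. Simpson's rule is just one convenient quadrature, not part of the definition.

```python
import math


def line_integral(alpha, c, c_dot, a, b, n=1000):
    """Approximate the line integral of a 1-form along a curve.

    Computes  int_a^b sum_i alpha_i(c(t)) * dc^i/dt dt  with the composite
    Simpson rule on n subintervals (n must be even).

    alpha: p -> (alpha_1(p), ..., alpha_n(p)), components w.r.t. dx^i
    c:     t -> c(t), the curve
    c_dot: t -> dc/dt, the tangent vector at t
    """
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of subintervals")

    def integrand(t):
        # alpha_{c(t)}(c_dot(t)) = sum_i alpha_i(c(t)) * dc^i/dt
        return sum(ai * vi for ai, vi in zip(alpha(c(t)), c_dot(t)))

    h = (b - a) / n
    total = integrand(a) + integrand(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * integrand(a + k * h)
    return total * h / 3


def circle(t):
    return (math.cos(t), math.sin(t))


def circle_dot(t):
    return (-math.sin(t), math.cos(t))


def alpha(p):
    # components of alpha = -y dx + x dy
    x, y = p
    return (-y, x)


# Along the unit circle the integrand is identically 1, so the result is about 2*pi
print(line_integral(alpha, circle, circle_dot, 0.0, 2 * math.pi))
```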

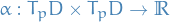

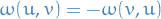

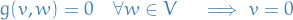

k-form

A 2-form at $p$ is a map $\omega : T_p\mathbb{R}^n \times T_p\mathbb{R}^n \to \mathbb{R}$ which is linear in each argument and alternating

$$\omega(X, Y) = -\omega(Y, X).$$

More generally, a k-form at $p$ is a map of $k$ vectors in $T_p\mathbb{R}^n$ to $\mathbb{R}$ which is multilinear (linear in each argument) and alternating (changes sign under a swap of any two arguments).

And even more general, on the vector space  with

with  , a k-form (

, a k-form ( ) is a

) is a  tensor that is anti-symmetric, e.g. for a 2-form

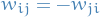

tensor that is anti-symmetric, e.g. for a 2-form

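In the case of a k-form with $k = d$, where $d = \dim V$, the k-forms are called top forms. If $\omega, \omega'$ are top forms, both non-vanishing, then

$$\omega = \lambda \, \omega' \quad \text{for some } \lambda \in \mathbb{R} \setminus \{0\},$$

i.e. any two non-vanishing top-forms are equal up to a constant factor (the space of top forms is one-dimensional).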

Further, the definition of a volume on some d-dimensional vector space depends entirely on your choice of top-form.

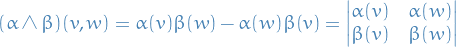

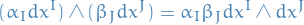

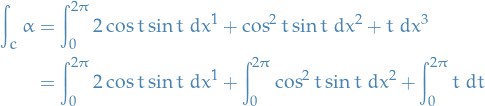

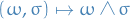

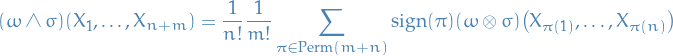

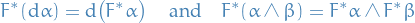

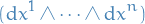

Wedge product

The wedge product or exterior product $\alpha \wedge \beta$ of 1-forms $\alpha$ and $\beta$ is a 2-form defined by the following bilinear (linear in both arguments) and alternating map

$$(\alpha \wedge \beta)(X, Y) = \alpha(X) \, \beta(Y) - \alpha(Y) \, \beta(X).$$

More generally, the wedge product of $k$ 1-forms $\alpha^1, \dots, \alpha^k$ can be defined as a map acting on $k$ vectors $X_1, \dots, X_k$:

$$(\alpha^1 \wedge \dots \wedge \alpha^k)(X_1, \dots, X_k) = \det \begin{pmatrix} \alpha^1(X_1) & \cdots & \alpha^1(X_k) \\ \vdots & \ddots & \vdots \\ \alpha^k(X_1) & \cdots & \alpha^k(X_k) \end{pmatrix}.$$

From the properties of the determinant it follows that the resulting map is linear in each vector separately. It also changes sign if any pair of vectors is exchanged, which corresponds to exchanging two columns in the determinant. Hence it defines a k-form.
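The determinant formula can be evaluated directly. Below is a minimal Python sketch using only the standard library. It represents each 1-form by its constant components in the basis $dx^1, \dots, dx^n$, an assumption that is enough at a single point $p$; `wedge` and `det` are hypothetical helpers. The output shows antisymmetry, and that repeating a vector gives zero.

```python
def det(m):
    """Determinant by Laplace expansion along the first row (fine for small k)."""
    if len(m) == 1:
        return m[0][0]
    return sum(
        (-1) ** j * m[0][j] * det([row[:j] + row[j + 1:] for row in m[1:]])
        for j in range(len(m))
    )


def wedge(*alphas):
    """Return the k-form alpha^1 ^ ... ^ alpha^k as a function of k vectors.

    Each 1-form is given by its components w.r.t. dx^1, ..., dx^n, and
    (alpha^1 ^ ... ^ alpha^k)(X_1, ..., X_k) = det[alpha^i(X_j)].
    """
    def form(*vectors):
        if len(vectors) != len(alphas):
            raise ValueError("a k-form takes exactly k vectors")
        # Row i: alpha^i applied to each vector; column j: the vector X_j
        matrix = [[sum(a * x for a, x in zip(alpha, X)) for X in vectors]
                  for alpha in alphas]
        return det(matrix)
    return form


dx, dy, dz = (1, 0, 0), (0, 1, 0), (0, 0, 1)
dx_dy = wedge(dx, dy)
X, Y = (1, 2, 3), (4, 5, 6)
print(dx_dy(X, Y), dx_dy(Y, X), dx_dy(X, X))  # prints -3 3 0
```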

Wedge product between different forms

We extend $\wedge$ linearly in order to define the wedge product of a $k$-form $\alpha = \sum_I \alpha_I \, dx^I$ and an $l$-form $\beta = \sum_J \beta_J \, dx^J$. Explicitly,

$$\alpha \wedge \beta = \sum_{I, J} \alpha_I \, \beta_J \, dx^I \wedge dx^J.$$

Here the sum is over all multi-indices $I$ and $J$ with $|I| = k$ and $|J| = l$.

Now two things can happen:

- $I$ and $J$ share an index, in which case $dx^I \wedge dx^J = 0$ since there will be a repeated index.

- $I$ and $J$ have no index in common, in which case $dx^I \wedge dx^J = \pm dx^K$ for some multi-index $K$ of length $k + l$. The sign is due to having to reorder the indices to be increasing.

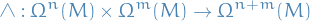

Therefore, the wedge product defines a (bilinear) map taking a $k$-form and an $l$-form to a $(k + l)$-form.
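The case distinction above is mechanical. First check for a shared index; otherwise count how many swaps of adjacent 1-forms are needed to sort the concatenated indices. A minimal Python sketch (the name `wedge_basis` is hypothetical):

```python
def wedge_basis(I, J):
    """Compute dx^I ^ dx^J for increasing multi-indices I and J.

    Returns (sign, K) with dx^I ^ dx^J = sign * dx^K, or (0, None) if
    I and J share an index (the product then vanishes).
    """
    if set(I) & set(J):
        return 0, None
    seq = list(I) + list(J)
    # Each inversion needs one swap of adjacent 1-forms, and each swap flips the sign
    inversions = sum(
        1
        for a in range(len(seq))
        for b in range(a + 1, len(seq))
        if seq[a] > seq[b]
    )
    return (-1) ** inversions, tuple(sorted(seq))


print(wedge_basis((1, 3), (2,)))    # (-1, (1, 2, 3)): dx^1 ^ dx^3 ^ dx^2 = -dx^1 ^ dx^2 ^ dx^3
print(wedge_basis((2, 3), (1,)))    # (1, (1, 2, 3))
print(wedge_basis((1, 2), (2, 3)))  # (0, None)
```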

Multi-index

Useful as more "compact" notation.

By a multi-index  of length

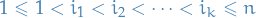

of length  we shall mean an increasing sequence

we shall mean an increasing sequence  of integers

of integers  . We will write

. We will write

The set of k-forms at $p$ is a vector space of dimension $\binom{n}{k}$ for $0 \le k \le n$, with basis $\{ dx^I|_p : |I| = k \}$.

Here $n$ denotes the dimension of the underlying space. So we're just saying that each basis element is the wedge product of $k$ distinct 1-forms among $dx^1, \dots, dx^n$, taken in increasing order of their indices.
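Enumerating the multi-indices makes the dimension count $\binom{n}{k}$ concrete. Below is a minimal Python sketch using `itertools.combinations`; the helper name `multi_indices` is hypothetical. For $k > n$ there are no multi-indices at all, matching the fact that there are no non-zero $k$-forms then.

```python
from itertools import combinations
from math import comb


def multi_indices(k, n):
    """All multi-indices I = (i_1 < ... < i_k) with entries in 1..n."""
    # combinations() yields tuples in increasing order, exactly the multi-indices
    return list(combinations(range(1, n + 1), k))


n = 3
for k in range(n + 2):
    # For k = 0 the single (empty) multi-index corresponds to the constant 1
    basis = [" ^ ".join(f"dx^{i}" for i in I) or "1" for I in multi_indices(k, n)]
    print(k, comb(n, k), basis)
```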

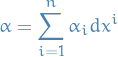

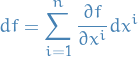

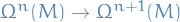

Differential k-form

A differential k-form or a differential form of degree k on $D$ is a smooth map $\omega$ which assigns to each $p \in D$ a k-form at $p$; it can be written as

$$\omega = \sum_I \omega_I \, dx^I$$

where $\omega_I$ are smooth functions, and the sum happens over all multi-indices $I$ with $|I| = k$.

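Given two differential k-forms $\omega, \eta$ and a function $f$, the differential k-forms $\omega + \eta$ and $f\omega$ are

$$\omega + \eta = \sum_I (\omega_I + \eta_I) \, dx^I, \qquad f\omega = \sum_I f \, \omega_I \, dx^I.$$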

The set of k-forms on $D$ is denoted $\Omega^k(D)$.

By convention, a zero-form is a function. If $k > n$ then $\Omega^k(D) = \{0\}$, since every $dx^{i_1} \wedge \dots \wedge dx^{i_k}$ with $k > n$ has a repeated index.

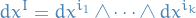

To make the notation used a bit more apparent, we can expand a 2-form $\omega$ on $\mathbb{R}^3$, i.e. $k = 2$ and $n = 3$, as follows:

$$\omega = \sum_{i, j = 1}^{3} \omega_{ij} \, dx^i \wedge dx^j = (\omega_{12} - \omega_{21}) \, dx^1 \wedge dx^2 + (\omega_{13} - \omega_{31}) \, dx^1 \wedge dx^3 + (\omega_{23} - \omega_{32}) \, dx^2 \wedge dx^3$$

where we've used the fact that $dx^j \wedge dx^i = -dx^i \wedge dx^j$ (in particular $dx^i \wedge dx^i = 0$) and just combined the "common" wedge-products.

It's very important to remember that each $\omega_{ij}$ here represents a 0-form / smooth function.

The actual definition of $\omega$ is as a sum over increasing multi-indices only, i.e. over $dx^i \wedge dx^j$ with $i < j$. The expansion above just encodes the fact that $dx^j \wedge dx^i = -dx^i \wedge dx^j$.

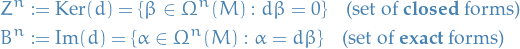

A form $\omega \in \Omega^k(D)$ is said to be closed if $d\omega = 0$.

A form $\omega \in \Omega^k(D)$ is said to be exact if

$$\omega = d\eta$$

for some $\eta \in \Omega^{k-1}(D)$.

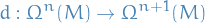

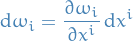

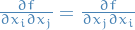

Exterior derivative

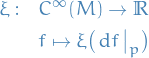

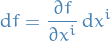

Let $f \in C^\infty(M)$ (i.e. it's a 0-form), and define

$$df : M \to T^*M, \quad p \mapsto df_p, \qquad df_p(X) = X(f) \text{ for } X \in T_pM,$$

where $df$ denotes the exterior derivative (or the "differential") of $f$.

Then $df$ is a one-form, i.e.

$$df \in \Omega^1(M).$$

First recall that for a chart $(U, \phi)$ with local coordinates $x^1, \dots, x^n$,

$$df_p = \sum_{i=1}^n \frac{\partial f}{\partial x^i}(p) \, dx^i|_p.$$

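$df$ is smooth:

Let $(T^*U, \tilde\phi)$ be a chart defined as in def:cotangent-bundle. Then the map is smooth iff

$$\tilde\phi \circ df \circ \phi^{-1} : \phi(U) \to \phi(U) \times \mathbb{R}^n$$

is smooth. Writing out this map we simply have

$$\tilde\phi \circ df \circ \phi^{-1}(x) = \big(x, \partial_1(f \circ \phi^{-1})(x), \dots, \partial_n(f \circ \phi^{-1})(x)\big)$$

where $\partial_i$ denotes the partial derivative wrt. the i-th argument of the map. This is smooth since $f \circ \phi^{-1}$ is smooth.

REMINDER: recall what $\frac{\partial f}{\partial x^i}(p)$ actually means, namely $\partial_i(f \circ \phi^{-1})\big(\phi(p)\big)$.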

$\pi \circ df = \mathrm{id}_M$ is clear, since $df_p \in T_p^*M$ for every $p \in M$.

Given a smooth function $f$ on $D$, its exterior derivative (or differential) is the 1-form $df$ defined by

$$df(X) = X(f)$$

for any vector field $X$. Equivalently

$$df = \sum_{i=1}^n \frac{\partial f}{\partial x^i} \, dx^i.$$

Let $f : M \to \mathbb{R}$ be a smooth function, i.e. $f \in C^\infty(M)$.

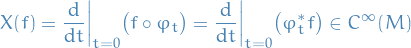

As it turns out, in this particular case, the push-forward of $f$, denoted $f_*$, is equivalent to the exterior derivative: after identifying $T_{f(p)}\mathbb{R} \cong \mathbb{R}$, we have $(f_*)_p = df_p$!

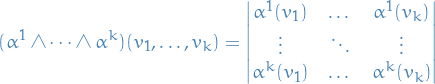

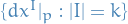

If $\omega = \sum_I \omega_I \, dx^I \in \Omega^k(D)$, then its exterior derivative $d\omega \in \Omega^{k+1}(D)$ is

$$d\omega = \sum_I d\omega_I \wedge dx^I$$

where $d\omega_I$ denotes the exterior derivative of the function $\omega_I$ (which we defined earlier).

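More explicitly, take the example of the exterior derivative of a 1-form, i.e. $\alpha = \sum_i \alpha_i \, dx^i$:

$$d\alpha = \sum_i d\alpha_i \wedge dx^i = \sum_{i, j} \frac{\partial \alpha_i}{\partial x^j} \, dx^j \wedge dx^i$$

This follows from the definition $df = \sum_j \frac{\partial f}{\partial x^j} \, dx^j$ for a function (0-form) $f$, applied with $f = \alpha_i$.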

Theorems

The exterior derivative $d : \Omega^k(D) \to \Omega^{k+1}(D)$ is a linear map satisfying the following properties

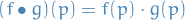

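$d$ obeys the graded derivation property: for any $\omega \in \Omega^k(D)$ and $\eta \in \Omega^l(D)$,

$$d(\omega \wedge \eta) = d\omega \wedge \eta + (-1)^k \, \omega \wedge d\eta$$

$d(d\omega) = 0$ for any $\omega \in \Omega^k(D)$, or more compactly,

$$d^2 = 0.$$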
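For a 1-form on $\mathbb{R}^n$, combine the $(i, j)$ and $(j, i)$ terms via antisymmetry. The formula then reduces to $d\alpha = \sum_{j < i} (\partial_j \alpha_i - \partial_i \alpha_j) \, dx^j \wedge dx^i$. Below is a minimal numerical Python sketch. It assumes the components are given as Python functions and approximates the partial derivatives by central differences, a numerical stand-in for the exact derivatives. It illustrates $d(df) = 0$ for $f = xyz$. The names `d_one_form`, `grad_xyz` and `rotation` are hypothetical.

```python
def d_one_form(alpha, p, h=1e-5):
    """Components of d(alpha) at p for a 1-form alpha on R^n.

    Uses d(alpha) = sum_{j<i} (d_j alpha_i - d_i alpha_j) dx^j ^ dx^i and
    approximates partial derivatives with central differences.
    alpha: point -> list of components (alpha_1, ..., alpha_n); indices are 0-based.
    Returns a dict {(j, i): coefficient of dx^j ^ dx^i} for j < i.
    """
    n = len(p)

    def partial(i, j):
        # approximate d alpha_i / d x^j at p
        plus, minus = list(p), list(p)
        plus[j] += h
        minus[j] -= h
        return (alpha(plus)[i] - alpha(minus)[i]) / (2 * h)

    return {(j, i): partial(i, j) - partial(j, i)
            for j in range(n) for i in range(j + 1, n)}


def grad_xyz(p):
    # alpha = df for f = x y z, i.e. components (yz, xz, xy): exact, hence closed
    x, y, z = p
    return [y * z, x * z, x * y]


def rotation(p):
    # alpha = -y dx + x dy, which is not closed: d(alpha) = 2 dx ^ dy
    x, y, _ = p
    return [-y, x, 0.0]


print(d_one_form(grad_xyz, [1.0, 2.0, 3.0]))  # all coefficients approximately 0
print(d_one_form(rotation, [1.0, 2.0, 3.0]))  # (0, 1) coefficient approximately 2
```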

Example problems

Handin 2

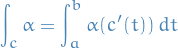

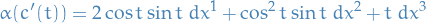

Let ![$c : [0, 2 \pi] \to \mathbb{R}^3$](../../assets/latex/geometry_05ac517ec743fcf34e663993a224a289b0158f45.png) be the helix  and consider the 1-form on $\mathbb{R}^3$

Find the tangent $\dot c$ at each point along the curve. Hence evaluate the line integral of the 1-form  along the curve $c$.

Hence the integral is

The tangent vector at some point along the curve $c$ for a specified $t$ is given by

which in this case is equivalent to

Concluding the first part of the claim.

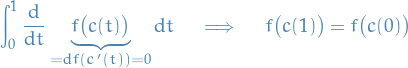

For the integral, we know that

for the boundaries $t = 0$ and $t = 2\pi$.

Computing  we get

- Show that $d\alpha = 0$, where $\alpha$ is the 1-form above. Now find a smooth function $f$ such that $\alpha = df$. Hence evaluate the above line integral without explicit integration.
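Finding $f$ with $\alpha = df$ pays off because $\int_c df = f(c(b)) - f(c(a))$, since $df(\dot c) = \frac{d}{dt}(f \circ c)$ by the chain rule. Below is a minimal Python sketch checking this numerically on a hypothetical example. The helix parametrisation $c(t) = (\cos t, \sin t, t)$ and the potential $f = xz + y$ are assumptions chosen for illustration, not the hand-in's data.

```python
import math


def simpson(g, a, b, n=1000):
    """Composite Simpson rule for int_a^b g(t) dt (n must be even)."""
    h = (b - a) / n
    total = g(a) + g(b) + sum((4 if k % 2 else 2) * g(a + k * h) for k in range(1, n))
    return total * h / 3


# Hypothetical example (not the hand-in's 1-form): f(x, y, z) = x z + y,
# so alpha = df = z dx + dy + x dz.
def f(p):
    x, y, z = p
    return x * z + y


def df(p):
    x, y, z = p
    return (z, 1.0, x)


# Assumed helix parametrisation c(t) = (cos t, sin t, t) on [0, 2 pi]
def c(t):
    return (math.cos(t), math.sin(t), t)


def c_dot(t):
    return (-math.sin(t), math.cos(t), 1.0)


numeric = simpson(lambda t: sum(a * v for a, v in zip(df(c(t)), c_dot(t))), 0.0, 2 * math.pi)
exact = f(c(2 * math.pi)) - f(c(0.0))  # int_c df = f(c(b)) - f(c(a))
print(numeric, exact)                  # both approximately 2*pi
```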

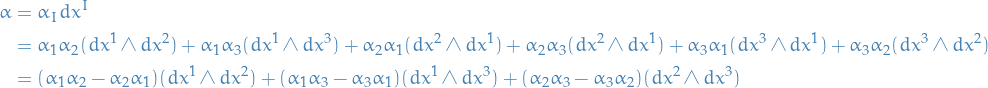

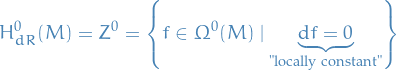

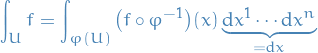

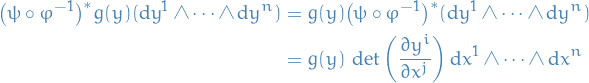

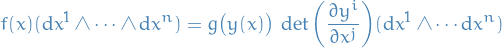

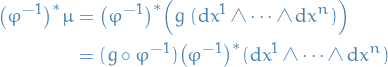

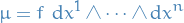

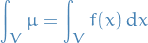

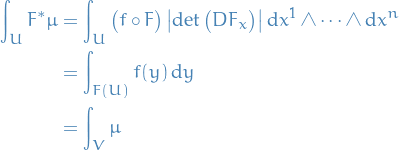

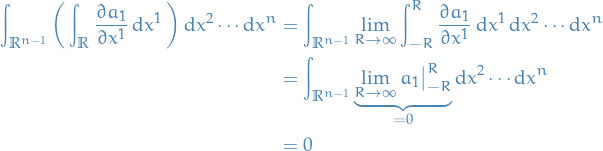

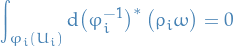

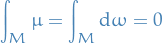

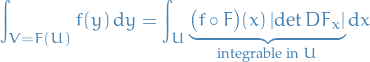

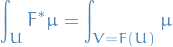

Integration in Rn

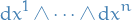

The standard orientation (which we always assume) is defined by $dx^1 \wedge \dots \wedge dx^n$.

Coordinates  (an ordered set) are said to be oriented on

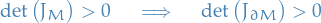

(an ordered set) are said to be oriented on  if and only if

if and only if  is a positive multiple of

is a positive multiple of  for all

for all  .

.

Observe that this induces an orientation on $D$. We simply apply $dy^1 \wedge \dots \wedge dy^n$ to the coordinate vectors $\partial / \partial x^1, \dots, \partial / \partial x^n$, which returns a positive or negative value depending on whether or not the coordinates are oriented.

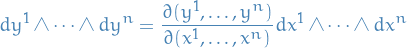

Let $(x^1, \dots, x^n)$ be oriented coordinates for $D$. Let $y^1, \dots, y^n$ be smooth functions on $D$. Then

$$dy^1 \wedge \dots \wedge dy^n = \det\left(\frac{\partial y^i}{\partial x^j}\right) dx^1 \wedge \dots \wedge dx^n$$

where the factor on the RHS is the Jacobian of the coordinate transformation, i.e. the determinant of the matrix whose $(i, j)$ component is $\partial y^i / \partial x^j$.
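For example, take $y^1 = r\cos\theta$, $y^2 = r\sin\theta$ as functions of $(x^1, x^2) = (r, \theta)$; this illustration is not from the original. The formula gives $dy^1 \wedge dy^2 = r \, dr \wedge d\theta$, while ordering the coordinates as $(\theta, r)$ flips the sign, i.e. the orientation. Below is a minimal Python sketch estimating the Jacobian by central differences; the helper names are hypothetical.

```python
import math


def jacobian_det_2d(F, q, h=1e-6):
    """Central-difference approximation of det(dF^i/dq^j) for F: R^2 -> R^2 at q."""
    M = [[0.0, 0.0], [0.0, 0.0]]  # M[i][j] = dF^i/dq^j
    for j in range(2):
        plus, minus = list(q), list(q)
        plus[j] += h
        minus[j] -= h
        Fp, Fm = F(plus), F(minus)
        for i in range(2):
            M[i][j] = (Fp[i] - Fm[i]) / (2 * h)
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def polar(q):
    # (y^1, y^2) as functions of the coordinates q = (r, theta)
    r, theta = q
    return (r * math.cos(theta), r * math.sin(theta))


def polar_swapped(q):
    # same map, but with the coordinates ordered as q = (theta, r)
    theta, r = q
    return (r * math.cos(theta), r * math.sin(theta))


print(jacobian_det_2d(polar, (2.0, 0.7)))          # approximately  2.0 = r
print(jacobian_det_2d(polar_swapped, (0.7, 2.0)))  # approximately -2.0: reversed orientation
```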

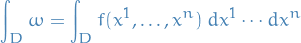

Let $(x^1, \dots, x^n)$ be oriented coordinates on $D$ and write

$$\omega = f \, dx^1 \wedge \dots \wedge dx^n.$$

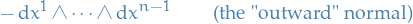

Then the integral of $\omega$ over $D$ is defined by

$$\int_D \omega = \int_D f \, dx^1 \cdots dx^n$$

where the RHS is now the usual multiple integral of several-variable calculus (provided it exists).

Topological space

A topological space may be defined as a set of points, along with a set of neighbourhoods for each point, satisfying a set of axioms relating points and neighbourhoods.

Or more rigorously, let $X$ be a set. A topology on $X$ is a collection $\mathcal{T}$ of subsets of $X$, called open subsets, satisfying:

- $X$ and $\emptyset$ are open

- The union of any family of open subsets is open

- The intersection of any finite family of open subsets is open

A topological space is then a pair $(X, \mathcal{T})$ consisting of a set $X$ together with a topology $\mathcal{T}$ on $X$.

The definition of a topological space relies only upon set theory and is the most general notion of mathematical space that allows for the definition of concepts such as:

- continuity

- connectedness

- convergence

A topology is a way of choosing a collection of subsets of $X$, declared to be open, which satisfy the properties described above.
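For a finite set the axioms can be checked mechanically. Below is a minimal Python sketch, assuming $X$ is finite so that arbitrary unions reduce to finite ones; the function `is_topology` is hypothetical.

```python
from itertools import combinations


def is_topology(X, opens):
    """Check the topology axioms for a finite set X and a collection of subsets.

    For finite X every family of subsets is finite, so closure under pairwise
    unions and intersections (plus containing X and the empty set) suffices.
    """
    X = frozenset(X)
    T = {frozenset(U) for U in opens}
    if X not in T or frozenset() not in T:
        return False
    if any(not U <= X for U in T):
        return False
    return all((U | V) in T and (U & V) in T for U, V in combinations(T, 2))


X = {1, 2, 3}
print(is_topology(X, [set(), {1}, {1, 2}, X]))  # True
print(is_topology(X, [set(), {1}, {2}, X]))     # False: {1} | {2} = {1, 2} is missing
```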

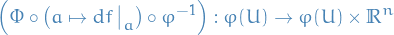

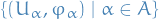

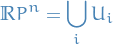

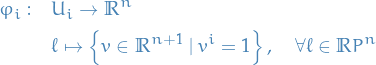

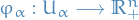

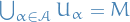

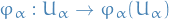

Atlases & coordinate charts

A chart for a topological space $M$ (also called a coordinate chart, coordinate patch, coordinate map, or local frame) is a homeomorphism $\varphi : U \to \varphi(U) \subseteq \mathbb{R}^n$, where $U$ is an open subset of $M$ and $\varphi(U)$ is open in $\mathbb{R}^n$. The chart is traditionally denoted as the ordered pair $(U, \varphi)$.

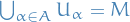

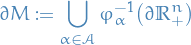

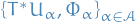

An atlas for a topological space $M$ is a collection $\{(U_\alpha, \varphi_\alpha)\}_{\alpha \in A}$, indexed by the set $A$, of charts on $M$ s.t. $\bigcup_{\alpha \in A} U_\alpha = M$.

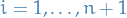

If the codomain of each chart is the n-dimensional Euclidean space, then $M$ is said to be an n-dimensional manifold.

Two atlases $\mathcal{A}$ and $\mathcal{B}$ on $M$ are compatible if their union is also an atlas.

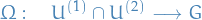

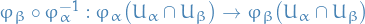

So we need to check the following properties:

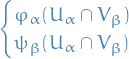

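- The following are open in $\mathbb{R}^n$: $\varphi_\alpha(U_\alpha \cap V_\beta)$ and $\psi_\beta(U_\alpha \cap V_\beta)$, for all charts $(U_\alpha, \varphi_\alpha)$ of the first atlas and all charts $(V_\beta, \psi_\beta)$ of the second atlas.

- The transition maps $\psi_\beta \circ \varphi_\alpha^{-1}$ and $\varphi_\alpha \circ \psi_\beta^{-1}$ are $C^\infty$ for all $\alpha$ and all $\beta$.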

Compatibility of atlases defines an equivalence relation on atlases.

A differentiable structure on $M$ is an equivalence class of compatible atlases.

Often one defines differentiable structure with a "maximal atlas" instead of an equivalence class. The "maximal atlas" is obtained by simply taking the union of all atlases in the equivalence class.

A transition map is a composition of one chart with the inverse of another chart, which defines a homeomorphism of an open subset of $\mathbb{R}^n$ onto another open subset of $\mathbb{R}^n$.

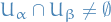

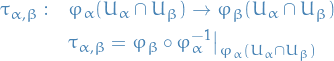

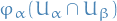

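Suppose we have the following two charts on some manifold $M$

$$(U_\alpha, \varphi_\alpha), \quad (U_\beta, \varphi_\beta)$$

such that

$$U_\alpha \cap U_\beta \neq \emptyset.$$

The transition map is defined as

$$\tau_{\alpha, \beta} = \varphi_\beta \circ \varphi_\alpha^{-1} \big|_{\varphi_\alpha(U_\alpha \cap U_\beta)} : \varphi_\alpha(U_\alpha \cap U_\beta) \to \varphi_\beta(U_\alpha \cap U_\beta)$$

where we've used the notation

$$f\big|_A$$

to denote that the function $f$ is restricted to the domain $A$, i.e. the statement is only true on that domain.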
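As a concrete example (not from the original notes), the circle $S^1 \subset \mathbb{R}^2$ has two stereographic charts. These are $\varphi_N(x, y) = x / (1 - y)$ on $S^1 \setminus \{(0, 1)\}$ and $\varphi_S(x, y) = x / (1 + y)$ on $S^1 \setminus \{(0, -1)\}$. Their transition map is $\tau(u) = 1/u$ on $\mathbb{R} \setminus \{0\}$, which is smooth. Below is a minimal Python sketch that composes the charts numerically; the function names are hypothetical.

```python
def phi_N(p):
    """Stereographic chart from the north pole (0, 1), defined on S^1 minus (0, 1)."""
    x, y = p
    return x / (1 - y)


def phi_N_inv(u):
    """Inverse of phi_N: maps u in R back to a point on the unit circle."""
    return (2 * u / (u * u + 1), (u * u - 1) / (u * u + 1))


def phi_S(p):
    """Stereographic chart from the south pole (0, -1), defined on S^1 minus (0, -1)."""
    x, y = p
    return x / (1 + y)


def transition_N_to_S(u):
    """tau = phi_S o phi_N^{-1}, defined on phi_N(intersection of domains) = R without 0."""
    if u == 0:
        # phi_N_inv(0) = (0, -1) is the south pole, which lies outside the domain of phi_S
        raise ValueError("u = 0 is outside the domain of the transition map")
    return phi_S(phi_N_inv(u))


for u in (0.5, -3.0, 2.0):
    print(u, transition_N_to_S(u), 1 / u)
```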

A differentiable manifold is a topological manifold equipped with an equivalence class of atlases whose transition maps are all differentiable.

More generally, a $C^k$ manifold is a topological manifold for which all the transition maps are $k$-times continuously differentiable.

A smooth manifold or $C^\infty$ manifold is a differentiable manifold for which all the transition maps are smooth.

To prove $M$ is a smooth manifold it suffices to find one smooth atlas, since compatibility of atlases is an equivalence relation.

A complex manifold is a topological space modeled on the Euclidean space over the complex field and for which all the transition maps are holomorphic.

When talking about "some-property-manifold", it's important to remember that the "some-property" part is specifying properties of the atlas which we have equipped the manifold with.

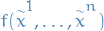

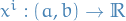

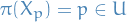

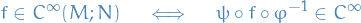

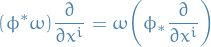

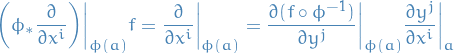

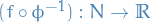

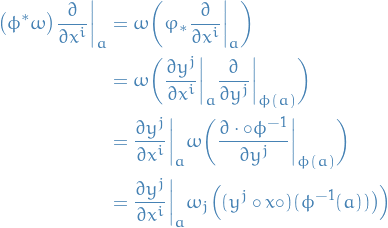

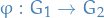

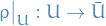

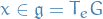

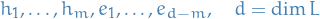

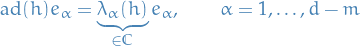

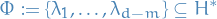

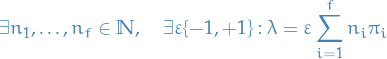

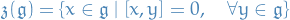

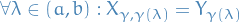

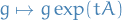

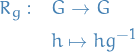

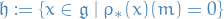

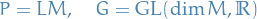

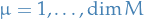

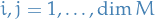

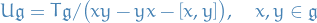

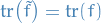

is smooth if for any chart