Probability & Statistics

Table of Contents

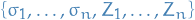

Notation

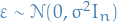

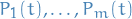

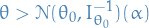

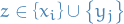

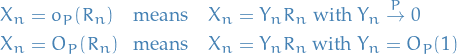

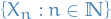

denotes the distribution of some random variable

denotes the distribution of some random variable

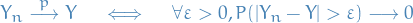

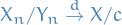

means convergence in probability for random elements

means convergence in probability for random elements  , refers to

, refers to

means convergence in distribution for random elements

means convergence in distribution for random elements  , i.e.

, i.e.  in distribution if

in distribution if  for all continuity points

for all continuity points  of

of  .

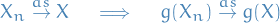

. means almost surely convergence for random elements

means almost surely convergence for random elements

Theorems

Chebyshev Inequality

Let  (integrable) be a random variable with finite expected value

(integrable) be a random variable with finite expected value  and finite non-zero variance

and finite non-zero variance  .

.

Then for any real number  ,

,

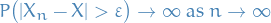

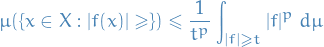

Let  be a measure space, and let

be a measure space, and let  be an extended real-valued measureable-function defined on

be an extended real-valued measureable-function defined on  .

.

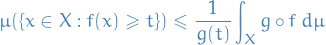

Then for any real number  and

and

Or more generally, if  is an extended real-valued measurable function, nonnegative and nondecreasing on the range of

is an extended real-valued measurable function, nonnegative and nondecreasing on the range of  , then

, then

Hmm, not quite sure how to relate the probabilistic and measure-theoretic definition..

Markov Inequality

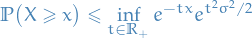

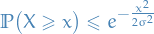

If  is a nonnegative random variable and

is a nonnegative random variable and  , then

, then

![\begin{equation*}

P \big( X \ge a \big) \le \frac{\mathbb{E}[X]}{a}

\end{equation*}](../../assets/latex/probability_and_statistics_019a98278452845f8b8bb6b44608297d85c8e1a4.png)

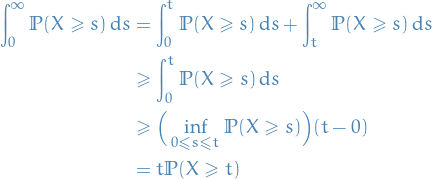

First note that

![\begin{equation*}

\mathbb{E}[X] = \int_{0}^{\infty} \mathbb{P}(X \ge t) \dd{t}

\end{equation*}](../../assets/latex/probability_and_statistics_8c1c901415a27fd332fa45ee2e8acb4dbb3c486c.png)

and

Hence,

![\begin{equation*}

\mathbb{P}(X \ge t) \le \frac{\mathbb{E}[X]}{t}

\end{equation*}](../../assets/latex/probability_and_statistics_fe338901aa40dfd0b5f0c6f1454f4a90f05dc8b9.png)

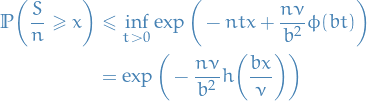

Law of large numbers

There are mainly two laws of large numbers:

Often the assumption of finite variance is made, but this is in fact not necessary (but makes the proof easier). Large or infinite variance will make the convergence slower, but the Law of Large Numbers holds anyway.

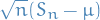

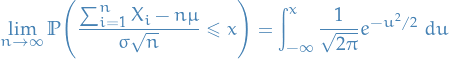

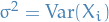

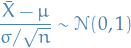

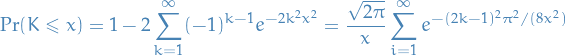

Central Limit Theorem

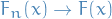

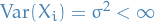

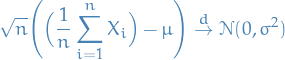

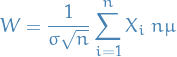

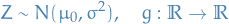

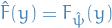

Suppose  is a sequence of i.i.d. random variables with

is a sequence of i.i.d. random variables with ![$\mathbb{E}[X_i] = \mu$](../../assets/latex/probability_and_statistics_49407bea91d8d9a9fb1131a9ad1c70aa646f9c12.png) and

and  . Then,

. Then,

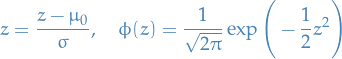

where  means convergence in distribution, i.e. that the cumulative distribution functions of

means convergence in distribution, i.e. that the cumulative distribution functions of  converge pointwise to the cdf of the

converge pointwise to the cdf of the  distribution: for any real number

distribution: for any real number  ,

,

![\begin{equation*}

\underset{n \to \infty}{\lim} \Pr [ \sqrt{n} (S_n - \mu) \le z ] = \Phi \Big( \frac{z}{\sigma} \Big)

\end{equation*}](../../assets/latex/probability_and_statistics_93e25db4d6b2ee1fdff8306810f893dd273d7c21.png)

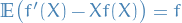

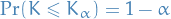

Jensen's Inequality

If

is a convex function.

is a convex function. is a rv.

is a rv.

Then ![$f(E[X]) \leq E[f(x)]$](../../assets/latex/probability_and_statistics_5a704c191584b112ce2eae76d3f11effcc23f4d9.png) .

.

Further, if  (

( is strictly convex), then

is strictly convex), then

![$E[(f(X)] = f(E[X]) \iff X = E[X]$](../../assets/latex/probability_and_statistics_1a19363be0c70d388d505c858c79d669d8e4944c.png) "with probability 1".

"with probability 1".

If we instead have:

is a concave function

is a concave function

Then ![$f(E[X]) \geq E[f(X)]$](../../assets/latex/probability_and_statistics_e3025aae464d32e3c1aa1104a8897f1d7c98b121.png) . This is the one we need for the derivation of EM-algorithm.

. This is the one we need for the derivation of EM-algorithm.

To get a visual intuition, here is a image from Wikipedia:

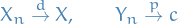

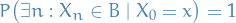

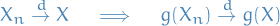

Continuous Mapping Theorem

Let  ,

,  be random elements defined on a metric space

be random elements defined on a metric space  .

.

Suppose a function  (where

(where  is another metric space) has the set of discountinuity points

is another metric space) has the set of discountinuity points  such that

such that  .

.

Then,

We start with convergence in distribution, for which we will need a particular statement from the

Slutsky's Lemma

Let  ,

,  be sequences of scalarvectormatrix random elements.

be sequences of scalarvectormatrix random elements.

If  and

and  , where

, where  is a constant, then

is a constant, then

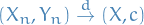

This follows from the fact that if:

then the joint vector  converges in distribution to

converges in distribution to  , i.e.

, i.e.

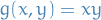

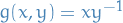

Next we simply apply the Continuous Mapping Theorem, recognizing the functions

as continuous (for the last mapping to be continuous,  has to invertible).

has to invertible).

Definitions

Probability space

A probability space is a measure space  such that the measure of the whole space is equal to one, i.e.

such that the measure of the whole space is equal to one, i.e.

is the sample space (some arbitrary non-empty set)

is the sample space (some arbitrary non-empty set) is the σ-algebra over

is the σ-algebra over  , which is the set of possible events

, which is the set of possible events![$P: \mathcal{A} \to [0, 1]$](../../assets/latex/probability_and_statistics_f7513392d4fb753f764bd899e9b66b18ef63e836.png) which is the probability measure such that

which is the probability measure such that

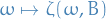

Random measure

Let  be a separable metric space (e.g.

be a separable metric space (e.g.  ) and let

) and let  be its Borel σ-algebra.

be its Borel σ-algebra.

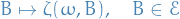

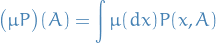

is a transition kernel from a probability space

is a transition kernel from a probability space  to

to  if

if

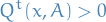

For any fixed

, the mapping

, the mapping

is a measurable function from

to

to

For every fixed

, the mapping

, the mapping

is a (often, probability) measure on

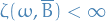

We say a transition kernel is locally finite, if

satisfy  for all bounded measurable sets

for all bounded measurable sets  and for all

and for all  except for some zero-measure set (under

except for some zero-measure set (under  ).

).

Let  be a separable metric space (e.g.

be a separable metric space (e.g.  ) and let

) and let  be its Borel σ-algebra.

be its Borel σ-algebra.

A random measure  is a (a.s.) /locally finite transition kernel from a (abstract) probability space

is a (a.s.) /locally finite transition kernel from a (abstract) probability space  to

to  .

.

Let  be a random measure on the measurable space

be a random measure on the measurable space  and denote the expected value of a random element

and denote the expected value of a random element  with

with ![$\mathbb{E}[Y]$](../../assets/latex/probability_and_statistics_9e0e384984976b658d0d13ce54607cbf71b8971c.png) .

.

The intensity measure  is defined

is defined

![\begin{equation*}

\begin{split}

\mathbb{E}\zeta: \quad & \mathcal{A} \to [0, \infty] \\

& A \mapsto \mathbb{E}\zeta(A) := \mathbb{E} \big[ \zeta(A) \big], \quad \forall A \in \mathcal{A}

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_5e3283180c137582df4df73bf4f0e9d976dd4389.png)

So it's a non-random measure which sends an element  of the sigma-algebra

of the sigma-algebra  to the expected value of the

to the expected value of the  , since

, since  is a measurable function, i.e. a random variable.

is a measurable function, i.e. a random variable.

Poisson process

A Poisson process is a generalized notion of a Poisson random variable.

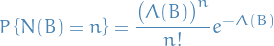

A point process  is a (general) Poisson process with intensity

is a (general) Poisson process with intensity  if it has the two following properties:

if it has the two following properties:

- Number of points in a bounded Borel set

is a Poisson random variable with mean

is a Poisson random variable with mean  .

.

In other words, denote the total number of points located in

by

by  , then the probability of random variable

, then the probability of random variable  being equal to

being equal to  is given by

is given by

- Number of points in

disjoint Borel sets form

disjoint Borel sets form  independent random variables.

independent random variables.

The Radon measure  maintains its previous interpretation of being the expected number of points

maintains its previous interpretation of being the expected number of points  located in the bounded region

located in the bounded region  , namely

, namely

![\begin{equation*}

\Lambda(B) = \mathbb{E} \big[ N(B) \big]

\end{equation*}](../../assets/latex/probability_and_statistics_bf9b7cb287260c314f383ac0bf30d6631f3b9b4b.png)

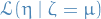

Cox process

Let  be a random measure.

be a random measure.

A random measure  is called a Cox process directed by

is called a Cox process directed by  , if

, if  is a Poisson process with intensity measure

is a Poisson process with intensity measure  .

.

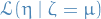

Here  is the conditional distribution of

is the conditional distribution of  , given

, given  .

.

Degeneracy of probability distributions

A degenerate probability distribution is a probability distribution with support only on a lower-dimensional space.

E.g. if the degenerate distribution is univariate (invoving a single random variable), then it's a deterministic distribution, only taking on a single value.

Characteristic function

Let  be a random variable with density

be a random variable with density  and cumulative distribution function

and cumulative distribution function  .

.

Then the characteristic equation is defined as the Fourier transform of  :

:

![\begin{equation*}

\begin{split}

\varphi_X(t) &= \mathbb{E} \big[ e^{i t X} \big] \\

&= \int_{\mathbb{R}}^{} e^{i t x} \dd{F_X(x)} \\

&= \int_{\mathbb{R}}^{} e^{i t x} f(x) \ \dd{x} \\

&= \mathcal{F} \left\{ f(x) \right\}

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_0ae57b2266ee55fcc78f2b1e6a84e6daaceda032.png)

Examples

10 dice: you want at least one 4, one 3, one 2, one 1

Consider the number of misses before a first "hit". When we say "hit", we refer to throwing something in the set  .

.

Then we can do as follows:

is the # of misses before our first "hit"

is the # of misses before our first "hit" is the # of misses between our first "hit" and second "hit"

is the # of misses between our first "hit" and second "hit"- …

We then observe the following:

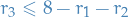

since if we have more than

since if we have more than  misses before our first hit, we'll never be able to get all the events in our target set

misses before our first hit, we'll never be able to get all the events in our target set

We also observe that there are  ,

,  ,

,  and

and  ways of missing for each of the respective target events.

ways of missing for each of the respective target events.

from sympy import * r_1, r_2, r_3, r_4 = symbols("r_1 r_2 r_3 r_4", nonnegative=True, integers=True) s = Sum( Sum( Sum( Sum( (2**(r_1 - 1) * 3**(r_2 - 1) * 4**(r_3 - 1) * 5**(r_4 - 1)) \ / 6**(r_1 + r_2 + r_3 + r_4), (r_4, 1, 10 - r_1 - r_2 - r_3) ), (r_3, 1, 9 - r_1 - r_2) ), (r_2, 1, 8 - r_1) ), (r_1, 1, 7) ) res = factorial(4) * s.doit() print(res.evalf())

Probability "metrics" / divergences

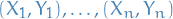

Notation

Overview of the "metrics" / divergences

These definitions are taken from arjovsky17_wasser_gan

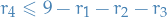

The Total Variation (TV) distance

Or, using slightly different notation, the total variation between two probability measures  and

and  is given by

is given by

These two notions are completely equivalent. One can see this by observing that any discrepancy between  and

and  is "accounted for twice", since

is "accounted for twice", since  , which is why we get the factor of

, which is why we get the factor of  in front. We can then gather all

in front. We can then gather all  where

where  into a subset

into a subset  , making sure to choose

, making sure to choose  or it's complement

or it's complement  such that the difference is positive when

such that the difference is positive when  in the first term. Then we end up with the

in the first term. Then we end up with the  seen above.

seen above.

The Jensen-Shannon (JS) divergence

where  is the mixture

is the mixture  .

.

This divergence is symmetrical and always defiend because we can choose  .

.

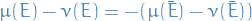

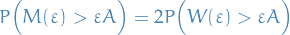

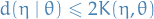

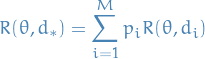

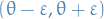

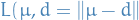

The Earth-Mover (EM) distance or Wasserstein-1

![\begin{equation*}

W(\mathbb{P}_r, \mathbb{P}_g) = \inf_{\gamma \in \Pi(\mathbb{P}_r, \mathbb{P}_g)} \mathbb{E}_{(x, y) \sim \gamma} \big[ \norm{x - y} \big]

\end{equation*}](../../assets/latex/probability_and_statistics_4dc254a423d055e723e06f3b67d8f610b955f76c.png)

where  denotes the set of all joint distributions

denotes the set of all joint distributions  whose marginals are respectively

whose marginals are respectively  and

and  .

.

Intuitively,  indicates how much "mass" must be transported from

indicates how much "mass" must be transported from  to

to  in order to transform the distributions

in order to transform the distributions  into the distribution

into the distribution  . The EM distance then is the "cost" of the optimal transport plan.

. The EM distance then is the "cost" of the optimal transport plan.

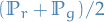

Kullback-Leibler divergence

Definition

The Kullback-Leibler (KL) divergence

where both  and

and  are assumed to be absolutely continuous, and therefore admit densities, wrt. to the same measure

are assumed to be absolutely continuous, and therefore admit densities, wrt. to the same measure  defined on

defined on  .

.

The KL divergence is famously asymmetric and possibly infinite / undefined when there are points s.t.  and

and  .

.

where in the inequality we have use Jensen's inequality together with the fact that  is a convex function.

is a convex function.

Why?

Kullback-Leibner divergence from some probability-distributions  and

and  , denoted

, denoted  ,

is a measure of the information gained when one revises one's beliefs from the prior distribution

,

is a measure of the information gained when one revises one's beliefs from the prior distribution

to the posterior distribution

to the posterior distribution  . In other words, amount of information lost when

. In other words, amount of information lost when  is used to approximate

is used to approximate  .

.

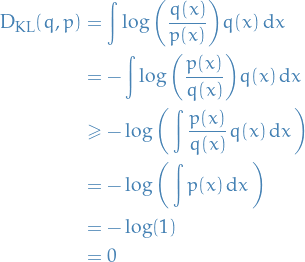

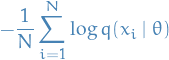

Most importantly, the KL-divergence can be written

![\begin{equation*}

D_{\rm{KL}}(p \ || \ q) = \mathbb{E}_p\Bigg[\log \frac{p(x \mid \theta^*)}{q(x \mid \theta)} \Bigg] = \mathbb{E}_p \big[ \log p(x \mid \theta^*) \big] - \mathbb{E}_p \big[ \log q(x \mid \theta) \big]

\end{equation*}](../../assets/latex/probability_and_statistics_bf4cacc304ab57b5792eb66e803c948e203e94f0.png)

where  is the optimal parameter and

is the optimal parameter and  is the one we vary to approximate

is the one we vary to approximate  . The second term in the equation above is the only one which depend on the "unknown" parameter

. The second term in the equation above is the only one which depend on the "unknown" parameter  (

( is fixed, since this is the parameter we assume

is fixed, since this is the parameter we assume  to take on). Now, suppose we have

to take on). Now, suppose we have  samples

samples  from

from  , then observe that the negative log-likelihood for some parametrizable distribution

, then observe that the negative log-likelihood for some parametrizable distribution  is given by

is given by

By the Law of Large numbers, we have

![\begin{equation*}

\lim_{N \to \infty} - \frac{1}{N} \sum_{i=1}^{N} \log q(x_i \mid \theta) = - \mathbb{E}_p[\log q(x \mid \theta)]

\end{equation*}](../../assets/latex/probability_and_statistics_96e04ce0e8b58d7c798481548bd91823470368e0.png)

where  denotes the expectation over the probability density

denotes the expectation over the probability density  . But this is exactly the second term in the KL-divergence! Hence, minimizing the KL-divergence between

. But this is exactly the second term in the KL-divergence! Hence, minimizing the KL-divergence between  and

and  is equivalent of minimizing the negative log-likeliood, or equivalently, maximizing the likelihood!

is equivalent of minimizing the negative log-likeliood, or equivalently, maximizing the likelihood!

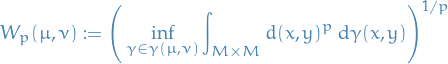

Wasserstein metric

Let  be a metric space for which every probability measure on

be a metric space for which every probability measure on  is a Radon measure.

is a Radon measure.

For  , let

, let  denote the collection of all probability measures

denote the collection of all probability measures  on

on  with finite p-th moment (expectation of rv. to the p-th power) for some

with finite p-th moment (expectation of rv. to the p-th power) for some  ,

,

Then the p-th Wasserstein distance between two probability measures  and

and  in

in  is defined as

is defined as

where  denotes the collection of all measures on

denotes the collection of all measures on  with marginals

with marginals  and

and  on the first and second factors respectively (i.e. all possible "joint distributions").

on the first and second factors respectively (i.e. all possible "joint distributions").

Or equivalently,

![\begin{equation*}

W_p(\mu, \nu)^p = \inf \mathbb{E} \big[ d(X, Y)^p \big]

\end{equation*}](../../assets/latex/probability_and_statistics_eff0640986bca7f4d56bdce5777eadd3e5f24976.png)

Intuitively, if each distribution is viewed as a unit amount of "dirt" piled on the metric space  , the metric minimum "cost" of turning one pile into the other, which is assumed to eb the amount dirt that needs to moved times the distance it has to be moved.

, the metric minimum "cost" of turning one pile into the other, which is assumed to eb the amount dirt that needs to moved times the distance it has to be moved.

Because of this analogy, the metric is sometimes known as the "earth mover's distance".

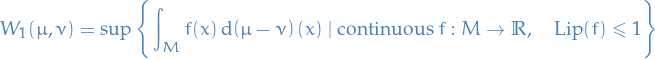

Using the dual representation of  , when

, when  and

and  have bounded support:

have bounded support:

where  denotes the minimal Lipschitz constant for

denotes the minimal Lipschitz constant for  .

.

Compare this with the definition of the Radon metric (metric induced by distance between two measures):

![\begin{equation*}

\rho(\mu, \nu) := \sup \Bigg\{ \int_M f(x) \dd(\mu - \nu)(x) \mid \text{continuous } f: M \to [-1, 1] \Bigg\}

\end{equation*}](../../assets/latex/probability_and_statistics_4496867e36c7eb7a37a1a4f07a2561baefb11961.png)

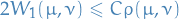

If the metric  is bounded by some constant

is bounded by some constant  , then

, then

and so convergence in the Radon metric implies convergence in the Wasserstein metric, but not vice versa.

Observe that we can write the duality as

![\begin{equation*}

\begin{split}

W_1(\mu, \nu) &= \sup_{\norm{f}_L \le 1} \int_M f(x) \dd (\mu - \nu)(x) \\

&= \sup_{\norm{f}_L \le 1} \int_M f(x) \dd \mu - \int_M f(x) \dd \nu \\

&= \sup_{\norm{f}_L \le 1} \Big( \mathbb{E}_{x \sim \mu(x)} \big[ f(x) \big] - \mathbb{E}_{x \sim \nu(x)} \big[ f(x) \big] \Big)

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_15553e9aefcfe1dedcc8397433ee45e41b303211.png)

where we let  denotes that we're finding the supremum over all functions which are 1-Lipschitz, i.e. Lipschitz continuous with Lipschitz constant 1.

denotes that we're finding the supremum over all functions which are 1-Lipschitz, i.e. Lipschitz continuous with Lipschitz constant 1.

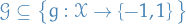

Integral Probability Metric

Let  and

and  be a probablity distributions, and

be a probablity distributions, and  be some space of real-valued functions. Further, let

be some space of real-valued functions. Further, let

![\begin{equation*}

d_{\mathcal{H}}(q, p) = \sup_{h \in \mathcal{H}} \Big[ \mathbb{E}_q [h(X)] - \mathbb{E}_p[h(Z)] \Big]

\end{equation*}](../../assets/latex/probability_and_statistics_0c6c78693beb9e0630c1502d0c10caca561a8c31.png)

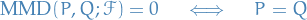

When  is sufficently "large", then

is sufficently "large", then

We then say that  together with

together with  defines an integral probabilty metric (IPM).

defines an integral probabilty metric (IPM).

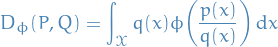

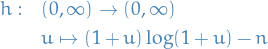

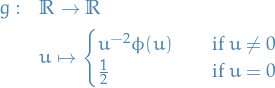

ϕ-divergences

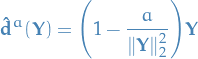

Stein's method

Absolutely fantastic description of it: https://statweb.stanford.edu/~souravc/beam-icm-trans.pdf

Notation

any random variable

any random variable std. Gaussian random variable

std. Gaussian random variable

Overview

- Technique for proving generalized central limit theorems

Ordinary central limit theorem: if

are i.i.d. rvs. then

are i.i.d. rvs. then

where

and

and

- Usual method of proof:

- LHS is computed using Fourier Transform

- Independence implies that FT decomposes as a product

- Analysis

- Stein's motivation: what if

are not exactly independent?!

are not exactly independent?!

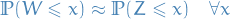

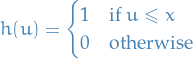

Method

Suppose we want to show that  is "approximately Gaussian", i.e.

is "approximately Gaussian", i.e.

or,

![\begin{equation*}

\mathbb{E}[h(W)] \approx \mathbb{E}[h(Z)]

\end{equation*}](../../assets/latex/probability_and_statistics_06ba62cf66ccd8f2d07b4e7af4c372d3006f9c65.png)

for any well-behaved

It's a generalization because if

then

![\begin{equation*}

\mathbb{P}(W \le x) = \mathbb{E}[h(W)]

\end{equation*}](../../assets/latex/probability_and_statistics_4b3f596ed4f34ba8748fa9f9529443e4336722a6.png)

Suppose

and we want to show that the rv.  is approximately std. Gaussian, i.e.

is approximately std. Gaussian, i.e. ![$\mathbb{E}[h(W)] \approx \mathbb{E}[h(Z)$](../../assets/latex/probability_and_statistics_519176343a58bbe82f34d8418f7478264192526c.png) for all well-behaved

for all well-behaved  .

.

Given

, obtain a function

, obtain a function  by solving the differential equation

by solving the differential equation

![\begin{equation*}

f'(x) - x f(x) = h(x) - \mathbb{E}[h(z)]

\end{equation*}](../../assets/latex/probability_and_statistics_08424e2fe22e1ee741c245c231dd7f9855374942.png)

2.Show that

![\begin{equation*}

\mathbb{E} \big( f'(W) - W f(W) \big) = \mathbb{E}[h(W)] - \mathbb{E}[h(Z)]

\end{equation*}](../../assets/latex/probability_and_statistics_ed612add542dfee721c1920259327eff80b35f05.png)

using the properties of

Since

![\begin{equation*}

\mathbb{E} \big( f'(W) - W f(W) \big) = \mathbb{E}[h(W)] - \mathbb{E}[h(Z)

\end{equation*}](../../assets/latex/probability_and_statistics_352fb025d181c9a4a0af1ba900d2354c4280269b.png)

conclude that

![$\mathbb{E}[h(W)] \approx \mathbb{E}[h(Z)]$](../../assets/latex/probability_and_statistics_d41733f6a99a480b597c447684432a841ad974c5.png) .

.

More generally, two smooth densities  and

and  supported on

supported on  are indentical if and only if

are indentical if and only if

![\begin{equation*}

\mathbb{E}_p [\mathbf{s}_p(x) f(x) + \nabla_x f(x) ] = 0

\end{equation*}](../../assets/latex/probability_and_statistics_21eabfd0a3a9afee8f36fed3fff6a71b11356427.png)

for smooth functions  with proper zero-boundary conditions, where

with proper zero-boundary conditions, where

is called the Stein score function of  .

.

Stein discrepancy measure between two continuous densities  and

and  is defined

is defined

![\begin{equation*}

\mathbb{S}(p, q) = \max_{f \in \mathcal{F}} \big( \mathbb{E}_p [ \mathbf{s}_q(x) f(x) + \nabla_x f(x)] \big)^2

\end{equation*}](../../assets/latex/probability_and_statistics_f88b77c8cb9a1b198313c15cbf27b3e14a1ef50c.png)

where  is the set of functions which satisfies

is the set of functions which satisfies

Two smooth densities  and

and  supported on

supported on  are indentical if and only if

are indentical if and only if

![\begin{equation*}

\mathbb{E}_p [\mathbf{s}_q(x) f(x) + \nabla_x f(x) ] = 0

\end{equation*}](../../assets/latex/probability_and_statistics_bc24722f15cc69291023190e774861f5f083e897.png)

for smooth functions  with proper zero-boundary conditions, where

with proper zero-boundary conditions, where

is called the Stein score function of  .

.

Stein discrepancy measure between two continuous densities  and

and  is defined

is defined

![\begin{equation*}

\mathbb{S}(p, q) = \max_{f \in \mathcal{F}} \big( \mathbb{E}_p [ \mathbf{s}_q(x) f(x) + \nabla_x f(x)] \big)^2

\end{equation*}](../../assets/latex/probability_and_statistics_f88b77c8cb9a1b198313c15cbf27b3e14a1ef50c.png)

where  is the set of functions which previous expectation and is also "rich" enough to ensure that

is the set of functions which previous expectation and is also "rich" enough to ensure that  whenever

whenever  .

.

Why does it work?

- Turns out that if we replace the

![$\mathbb{E}[h(Z)]$](../../assets/latex/probability_and_statistics_94d7d167dd4ca6ec844eb249c4e3e3b5f0be774c.png) in Stein's method, then

in Stein's method, then  is not well-behaved; it blows up at infinity.

is not well-behaved; it blows up at infinity. - A random variable

has the standard Gaussian distribution if and only if

has the standard Gaussian distribution if and only if  for all

for all  !

(you can easilty check this by observing that the solution to this is

!

(you can easilty check this by observing that the solution to this is  ).

). Differential operator

, defined as

, defined as

is called a characterizing operator for the standard Gaussian distribution.

Stochastic processes

Discrete

- Markov chains is an example of a discrete stochastic process

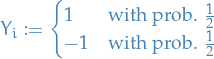

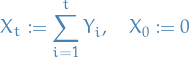

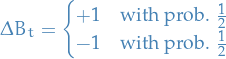

Simple random walk

Let  be a set of i.i.d. random variables such that

be a set of i.i.d. random variables such that

Then, let

We then call the sequence  a simple random walk.

a simple random walk.

For large  , due to CLT we have

, due to CLT we have

i.e.  follows a normal distribution with

follows a normal distribution with ![$\mathbb{E}[X_t] = 0$](../../assets/latex/probability_and_statistics_d3e8c75430646506718908bbc841c0b1bb0b8eef.png) and

and  .

.

We then have the following properties:

![$\mathbb{E}[X] = 0$](../../assets/latex/probability_and_statistics_56be98dff9b0eedfc7b4bba0860fc3638ddd82e1.png)

Independent measurements : if

then

are mutually independent, with

are mutually independent, with  .

.

- Stationary : for all

and

and  , the distribution of

, the distribution of  is the same as the distribution of

is the same as the distribution of  .

.

Martingale

A martingale is a stochastic process which is fair game.

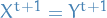

We say a stochastic process  is a martingale if

is a martingale if

![\begin{equation*}

X_t = \mathbb{E}[X_{t + i} \mid \mathcal{F}_t], \quad i \ge 0

\end{equation*}](../../assets/latex/probability_and_statistics_270a8ecf4eafe631538496cac4746d3846577297.png)

where

Examples

- Simple random walk is a martingale

- Balance of a Roulette player is NOT martingale

Optional stopping theorem

A given stochastic process  , a non-negative integer r.v.

, a non-negative integer r.v.  is called a stopping time if

is called a stopping time if

depends only on  (i.e. stopping at some time

(i.e. stopping at some time  only depends on the first

only depends on the first  r.v.s).

r.v.s).

This implies that if we a process can be described as a martingale, then it's a "zero-sum".

Continuous

Lévy process

A stochastic process  is said to be a Lévy process if it satisfies the following properties:

is said to be a Lévy process if it satisfies the following properties:

almost surely.

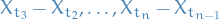

almost surely.- Independence of increments: for any $0 ≤ t1 ≤ t2 ≤ … ≤ tn < ∞, the increments

are independent.

are independent. - Stationary increments: For any

, the rvs.

, the rvs.  is equal in distribution to

is equal in distribution to  .

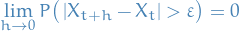

. Continuity in probability: For any

and

and  it holds that

it holds that

If  is a Lévy process then one may construct a version of

is a Lévy process then one may construct a version of  such that

such that  is almost surely right-continuous with left limits.

is almost surely right-continuous with left limits.

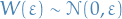

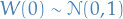

Wiener process

The Wiener process (or Brownian motion) is a continuous-time stochastic process  characterized by the following properties:

characterized by the following properties:

(almost surely)

(almost surely) has independent increments: for

has independent increments: for  , future increments

, future increments  with

with  , are independent of the past values

, are independent of the past values  ,

,

has Gaussian increments:

has Gaussian increments:

has continuous paths: with probability

has continuous paths: with probability  ,

,  is continuous in

is continuous in

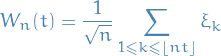

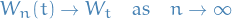

Further, let  be i.i.d. rv. with mean 0 and variance 1. For each

be i.i.d. rv. with mean 0 and variance 1. For each  , define the continuous-time stochastic process

, define the continuous-time stochastic process

Then

i.e. a random walk approaches a Wiener process.

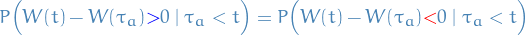

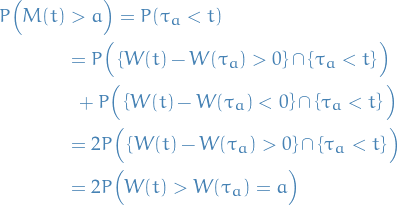

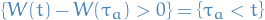

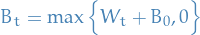

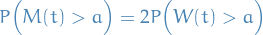

Properties

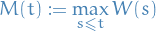

Let  be the first time such that

be the first time such that

Then

Observe that

which is saying that at the point  we have

we have  , and therefore for all time which follows we're equally likely to be above or below

, and therefore for all time which follows we're equally likely to be above or below  .

.

Therefore

where in the last inequality we used the fact that the set of events  .

.

This theorem helps us solve the gambler's ruin problem!

Let  denote your bankroll at time

denote your bankroll at time  , and at each

, and at each  it changes by

it changes by

The continuous approximation of this process is given by a Brownian motion, hence we can write the bankroll as

where  denotes a Brownian motion (with

denotes a Brownian motion (with ![$\mathbb{E}[W_t] = 0$](../../assets/latex/probability_and_statistics_7a7e0761a608ecf7946800a88f5b331d904e6ff3.png) ).

).

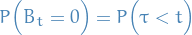

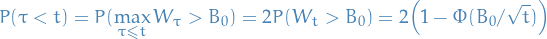

We're interested in how probable it is for us to be ruined before some time  . Letting

. Letting  be the time were we go bankrupt, we have

be the time were we go bankrupt, we have

i.e. that we hit the time were we go bankrupt before time  .

.

Bankrupt corresponds to

where  denotes a Wiener process (hence centered at 0).

denotes a Wiener process (hence centered at 0).

Then we can write

where the last equality is due to the symmetry of a Normal distribution and thus a Brownian motion.

Hence

where  denotes a standard normal distribution.

denotes a standard normal distribution.

![\begin{equation*}

\lim_{n \to \infty} \Big[ B \Big(T (t / n) \Big) - B\Big(T (t - 1) / n \Big) \Big]^2 = T

\end{equation*}](../../assets/latex/probability_and_statistics_d2676857ceb9dd02ba9d8dd6aa20b7abc46bcd3c.png)

This is farily straightforward to prove, just recall the Law of Large numbers and you should be good.

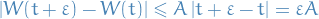

Non-differentiability

is non-differentiable with probability 1.

is non-differentiable with probability 1.

Suppose, for the sake of contradiction, that  is differentiable with probability 1.

Then, by MVP we have

is differentiable with probability 1.

Then, by MVP we have

for some  and all

and all  . This implies that

. This implies that

since  .

.

Hence

As  , since

, since  , then

, then

where

Thus, when  , we have

, we have

since  Hence,

Hence,

contracting our initial statement.

Geometric Brownian motion

A stochast process  is said to follow a Geometric Brownian Motion if it satisfies the following stochastic differential equation (SDE):

is said to follow a Geometric Brownian Motion if it satisfies the following stochastic differential equation (SDE):

where  is the Brownian motion, and

is the Brownian motion, and  (percentage drift) and

(percentage drift) and  (percentage volatility) are constants.

(percentage volatility) are constants.

Brownian bridge

A Brownian bridge is a continuous-time stochastic process  whose probability distribution is the conditional probability distribution of a Brownian motion

whose probability distribution is the conditional probability distribution of a Brownian motion  subject to the condition that

subject to the condition that

so that the process is pinned at the origin at both  and

and  . More precisely,

. More precisely,

![\begin{equation*}

B_t := \big( W_t \mid W_T = 0 \big), \quad t \in [0, T]

\end{equation*}](../../assets/latex/probability_and_statistics_8238711534767675a010b92c07f261a709260728.png)

Then

![\begin{equation*}

\mathbb{E}[B_t] = 0, \quad \text{Var}(B_t) = \frac{t (T - t)}{T}

\end{equation*}](../../assets/latex/probability_and_statistics_400aba49f998227142fefa7ee51e38a6da33a6af.png)

implying that the most uncertainty is in the middle of the bridge, with zero uncertainty at the nodes.

Sometimes the notation  is used for a Wiener process / Brownian motion rather than for a Brownian bridge.

is used for a Wiener process / Brownian motion rather than for a Brownian bridge.

Markov Chains

Notation

denotes the transition matrix (assumed to be ergodic, unless stated otherwise)

denotes the transition matrix (assumed to be ergodic, unless stated otherwise) is the stationary distribution

is the stationary distribution denotes the initial state

denotes the initial state is the state space

is the state space denotes an uncountable state space

denotes an uncountable state space denotes

denotes  which means all points except those with zero-measure, i.e.

which means all points except those with zero-measure, i.e.  such that

such that

Definitions

A Markov chain is said to be irreducible if it's possible to reach any state from any state.

A state  has a period

has a period  if any return to state

if any return to state  must occur in multiples of

must occur in multiples of  time steps.

time steps.

Formally, the period of a state is defined as

provided that the set is non-empty.

A state  is said to be transient if, given that we start in state

is said to be transient if, given that we start in state  ,t there is a non-zero probability that we will never return to

,t there is a non-zero probability that we will never return to  .

.

A state  is said to be recurrent (or persistent) if it is not transient, i.e. gauranteed (with prob. 1) to have a finite hitting time.

is said to be recurrent (or persistent) if it is not transient, i.e. gauranteed (with prob. 1) to have a finite hitting time.

A state  is said to be ergodic if it is aperiodic and positive recurrent, i.e.

is said to be ergodic if it is aperiodic and positive recurrent, i.e.

- aperiodic: period of 1, i.e. can return to current state in a single step

- positive recurrent: has a finite mean recurrence time

If all states in an irreducible Markov chain are ergodic , then the chain is said to be ergodic.

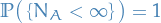

The above definitions can be generalized to continuous state-spaces by considering measurable sets  rather than states

rather than states  . E.g. recurrence now means that for any measurable set

. E.g. recurrence now means that for any measurable set  , we have

, we have

where  denotes the number of steps we need to take until we "hit"

denotes the number of steps we need to take until we "hit"  , i.e. the hitting time.

, i.e. the hitting time.

[This is a personal thought though, so not 100% guaranteed to be correct.]

Coupling

- Useful for bouding the mixing rate of Markov chains, i.e. the number of steps it takes for the Markov chain to converge to the stationary distribution

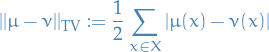

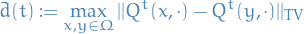

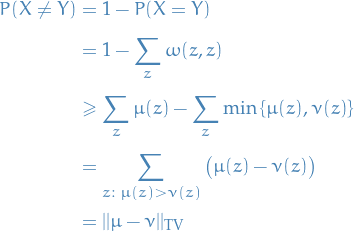

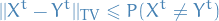

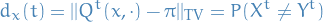

Distance to Stationary Distribution

Use Total Variance as a distance measure, therefore convergence is defined through

denotes the variation distance between two Markov chain random variables

denotes the variation distance between two Markov chain random variables  and

and  , i.e.

, i.e.

One can prove that

which allows us to bound the distance between a chain and the stationary distribution, by considering the difference between two chains, without knowing the stationary distribution!

Coupling

Let  and

and  be random variables with probability distributions

be random variables with probability distributions  and

and  on

on  , respectively.

, respectively.

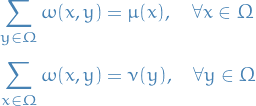

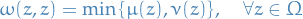

A distribution  on

on  is a coupling if

is a coupling if

Consider a pair of distributions  and

and  on

on  .

.

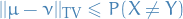

For any coupling

of

of  and

and  , with

, with

There always exists a coupling

s.t.

s.t.

Observe that

Therefore,

Concluding the proof.

"Inspired" by our proof in 1., we fix diagonal entries:

to make one of the inequalities an equality. Now we simply need to construct

such that the above is satisfied, and it's a coupling. One can check that

such that the above is satisfied, and it's a coupling. One can check that

does indeed to the job.

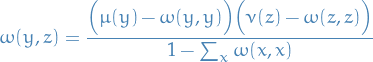

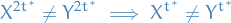

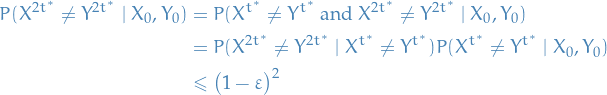

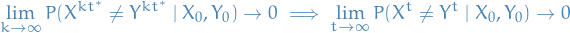

Ergodicity Theorem

If  is irreducible and aperiodic, then there is a unique stationary distribution

is irreducible and aperiodic, then there is a unique stationary distribution  such that

such that

Consider two copies of the Markov chain  and

and  , both following

, both following  . We create the coupling distribution as follows:

. We create the coupling distribution as follows:

- If

, then choose

, then choose  and

and  independently according to

independently according to

- If

, choose

, choose  and set

and set

From the couppling lemma, we know that

Due to ergodicity, there exists  such that

such that  . Therefore, there is some

. Therefore, there is some  such that for all initial states

such that for all initial states  and

and  ,

,

Similarily, due to the Markovian property, we know

Since

we have

Hence, for any positive integer  :

:

Hence,

Coupling lemma then gives

Finally, we observe that, letting  ,

,

which shows that  , i.e. converges to the stationary distribution.

, i.e. converges to the stationary distribution.

Finally, observe that  is unique, since

is unique, since

Mixing time

Let  be some

be some  and let

and let  have the stationary distribution. Fix

have the stationary distribution. Fix  .

By the coupling lemma, there is a coupling and random variables

.

By the coupling lemma, there is a coupling and random variables  and

and  such that

such that

Using this coupling, we define a coupling of the distributions of  as follows:

as follows:

- If

, set

, set

- Else, let

and

and  independently

independently

Then we have,

The first inequality holds due to the coupling lemma, and the second inequality holds by construction of the coupling.

Since  never decreases, we can define the mixing time

never decreases, we can define the mixing time  of a Markov chain as

of a Markov chain as

for some chosen  .

.

General (uncountable) state space

Now suppose we have some uncountable state-space  . Here we have to be a bit more careful when going about constructing Markov chains.

. Here we have to be a bit more careful when going about constructing Markov chains.

- Instead of considering transitions

for some

for some  , we have to consider transitions

, we have to consider transitions  for

for  and

and  .

.

- Transition probability is therefore denote

- Transition probability is therefore denote

- This is due to the fact that typically, the measure of a singleton set

is zero, i.e.

is zero, i.e.  , when we have an uncountable number possible inputs.

, when we have an uncountable number possible inputs. - That is; we have to consider transitions to subsets of the state-space, rather than singletons

- This requires a new definition of irreducibility of a Markov chain

A chain is  if there exists a non-zero sigma-finite measure

if there exists a non-zero sigma-finite measure  on

on  such that for all

such that for all  with

with  (i.e. all non-zero measurable subsets), and for all

(i.e. all non-zero measurable subsets), and for all  , there exists a positive integer

, there exists a positive integer  such that

such that

We also need a slightly altered definition of periodicity of a Markov chain:

A Markov chain with stationary distribution  is periodic if there exist

is periodic if there exist  and disjoint subsets

and disjoint subsets  with

with

and

such that  , and hence

, and hence  for all

for all  . That is, we always transition between subsets, and never within these disjoint subsets.

. That is, we always transition between subsets, and never within these disjoint subsets.

If such does not exist, i.e. the chain is not periodic, then we say the chain is aperiodic.

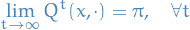

Results such as the coupling lemma also hold for the case of uncountable state-space, simply by replacing the summation with the corresponding integrand over the  . From roberts04_gener_state_space_markov_chain_mcmc_algor, similarily to the countable case, we have the following properties:

. From roberts04_gener_state_space_markov_chain_mcmc_algor, similarily to the countable case, we have the following properties:

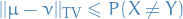

Let  and

and  be probability measures on the space

be probability measures on the space  . Then

. Then

![$||\mu - \nu||_{\rm{TV}} = \sup_{f : \mathcal{X} \to [0, 1]} \left| \int f d \mu - \int f d \nu \right|$](../../assets/latex/probability_and_statistics_381334c21ab2d08946d2920a57405b378136dc70.png)

More generally,

![\begin{equation*}

||\mu - \nu||_{\rm{TV}} = \frac{1}{b - a} \sup_{f: \mathcal{X} \to [a, b]} \left| \int f \ d \mu - \int f \ d \nu \right|

\end{equation*}](../../assets/latex/probability_and_statistics_7f1df4fa4639c3eac471ad5d77867381654a9621.png)

In particular,

![\begin{equation*}

||\mu - \nu||_{\rm{TV}} = \frac{1}{2} \sup_{f: \mathcal{X} \to [-1, 1]} \left| \int f \ d \mu - \int f \ d \nu \right|

\end{equation*}](../../assets/latex/probability_and_statistics_5fc1b80015a31e2ab01be527365a3eb8647b7be4.png)

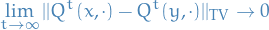

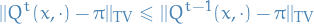

If

is a stationary distribution for a Markov chain with kernel

is a stationary distribution for a Markov chain with kernel  , then

, then  is non-increasing in

is non-increasing in  , i.e.

, i.e.

for

.

.

More generally, letting

we always have

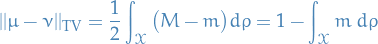

If

and

and  have densities

have densities  and

and  , respectively, wrt. to some sigma-finite measure

, respectively, wrt. to some sigma-finite measure  , then

, then

with

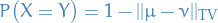

Given probability measures

and

and  , there are jointly defined random variables

, there are jointly defined random variables  and

and  such that

such that

and

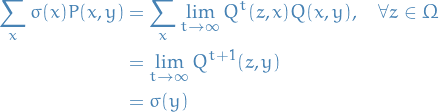

From roberts04_gener_state_space_markov_chain_mcmc_algor we also have the following, important theorem:

If a Markov chain on a state space with countable generated sigma-algebra is  irreducible and aperiodic, and has a stationary distribution

irreducible and aperiodic, and has a stationary distribution  , then for

, then for  almost-every

almost-every  ,

,

In particular,

We also introduce the notion of Harris recurrent:

We say a Markov chain is Harris recurrent if for all  with

with  (i.e. all non-zero-measurable subsets), and all

(i.e. all non-zero-measurable subsets), and all  , the chain will eventually reach

, the chain will eventually reach  from

from  with probability 1, i.e.

with probability 1, i.e.

This notion is stronger than the notion of irreducibility introduced earlier.

Convergence rates

Uniform Ergodicity

From this theorem, we have implied asymptotic convergence to stationarity, but it does not provide us with any information about the rate of convergence. One "qualitative" convergence rate property is

A Markov chain having stationary distribution  is uniformly ergodic if

is uniformly ergodic if

for some  and

and  .

.

For further developments of ensuring uniform ergodicity, we need to define

A subset  is small or

is small or  if there exists a positive integer

if there exists a positive integer  ,

,  , and a probability measure

, and a probability measure  on

on  s.t. the following minorisation condition holds:

s.t. the following minorisation condition holds:

i.e.  for all

for all  and all measurable

and all measurable  .

.

Geometric ergodicity

A Markov chain with stationary distribution  is geometrically ergodic if

is geometrically ergodic if

for some  where

where  for

for

.

.

Difference between uniform ergodicity and geometric ergodicity is the fact that in geometric ergodicity the  can also depend on the initial state

can also depend on the initial state  .

.

In practice

Diagnostics

Effective sample size (ESS)

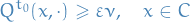

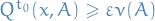

(split)  statistic

statistic

- Consider running

chains, each for

chains, each for  steps, from different initializations

steps, from different initializations  , where

, where  is even

is even - Then consider splitting each chain in half, resulting in two half-chains for each chain, leaving us with a total of

chains

chains

- Reason: If a chain indeed represents independent samples from the stationary distribution, then at the very least the first half and second half of the chain should indeed have the display similar behavior (for sufficiently large

)

)

- Reason: If a chain indeed represents independent samples from the stationary distribution, then at the very least the first half and second half of the chain should indeed have the display similar behavior (for sufficiently large

You might wonder, why not split in 3? Or 4? or, heck,  ?!

?!

Likely answer: "You could, but be sensible!" Very unsatisfying…

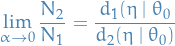

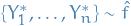

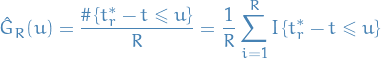

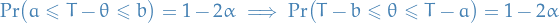

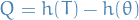

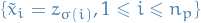

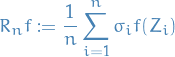

Let  be the scalar estimand, i.e. the variable we're trying to estimate. Let

be the scalar estimand, i.e. the variable we're trying to estimate. Let  be the "for

be the "for  and

and  denote the different samples for the variable of interest

denote the different samples for the variable of interest  obtained by

obtained by  MCMC chains, each with

MCMC chains, each with  samples each, with different initializations.

samples each, with different initializations.

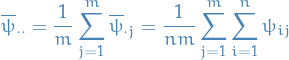

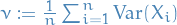

We denote the (B)etween- and (W)ithin-sequence variances

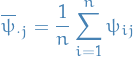

where

empirical mean within a chain

empirical mean across all chains

empirical variance within each chain

Notice that  contains a factor of

contains a factor of  because it is based on the variance of the within-sequence means

because it is based on the variance of the within-sequence means  , each of which is an averaeg of

, each of which is an averaeg of  values

values  .

.

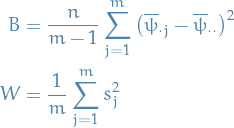

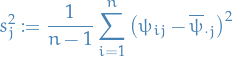

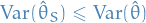

We can then estimate  , the marginal posterior variance of the estimand, by a weighted average of

, the marginal posterior variance of the estimand, by a weighted average of  and

and  , namely

, namely

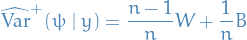

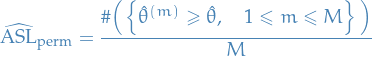

Finally, we can then monitor convergence of the iterative simulation by estimating the factor by which the scale of the current distribution for  might be reduced if the simulations were continued in the limit

might be reduced if the simulations were continued in the limit  . This potential scale reduction is estimated by

. This potential scale reduction is estimated by

which has the property that

(remember that  depend on

depend on  ).

).

If

is large → reason to believe that further simulations may improve our inference about the target distribution of the associated scalar estimand

is large → reason to believe that further simulations may improve our inference about the target distribution of the associated scalar estimand is small → ???

is small → ???

Specific distributions & processes

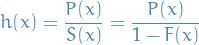

Hazard function

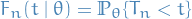

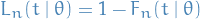

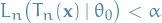

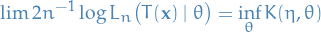

The hazard function / failure rate  is defined

is defined

where  denotes the PDF and

denotes the PDF and  denotes the CDF.

denotes the CDF.

TODO Renewal processes

- Let

with

with  be a sequence of waiting times between

be a sequence of waiting times between  and the

and the  event.

event. Arrival time of the

event is

event is

- Denote

by the total number of events in

by the total number of events in

- If

is fixed, then

is fixed, then  is the count variable we wish to model

is the count variable we wish to model

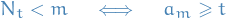

It follows that

i.e. total number of events is less than

if and only if the arrival time of the

if and only if the arrival time of the  event is greater than

event is greater than  , which makes sense

, which makes sense

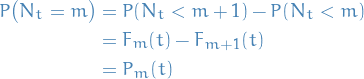

If

is the distribution function of

is the distribution function of  , we have

, we have

Furthermore

- This is the fundamental relationship between the count variable

and the timing process

and the timing process

- This is the fundamental relationship between the count variable

- If

, the process is called a renewal process

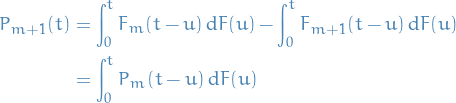

, the process is called a renewal process In this case, we can extend the above equation to the following recursive relationship:

where we have

- Intuitively: probability of exactly

events occuring by time

events occuring by time  is the probability that the first event occurs at time

is the probability that the first event occurs at time  , and that exactly

, and that exactly  events occur in the remaining time interval, integrated over all times

events occur in the remaining time interval, integrated over all times  .

. - Evaluting this integral, we can obtain

from the above recursive relationship.

from the above recursive relationship.

- Intuitively: probability of exactly

Weibull renewal process

Statistics

Notation

denotes

denotes  converges in probability to

converges in probability to

denotes

denotes  convergence in law to

convergence in law to

Definitions

Efficency

The efficency of an unbiased estimator  is the ratio of the minimum possible variance to

is the ratio of the minimum possible variance to  .

.

An unbiased estimator with efficiency equal to 1 is called efficient or a minimum variance unbiased estimator (MVUE).

Statistic

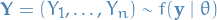

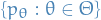

Suppose a random vector  has distribution function in a parametric family

has distribution function in a parametric family  and realized value

and realized value  .

.

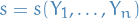

A statistic is r.v.  which is a function of

which is a function of  independent of

independent of  . Its realized value is

. Its realized value is  .

.

A statistic  is said to be sufficient for

is said to be sufficient for  if the distribution of

if the distribution of  given

given  does not depend on

does not depend on  , i.e.

, i.e.

Further, we say  is a minimal sufficient statistic if it's the smallest / least complex "proxy" for

is a minimal sufficient statistic if it's the smallest / least complex "proxy" for  .

.

Observe that,

- if

is sufficient for

is sufficient for  , so is any one-to-one function of

, so is any one-to-one function of

is trivially sufficient

is trivially sufficient

We say a statistic is pivotal if it does not depend on any unknown parameters.

E.g. if we are considering a normal distribution  and

and  is known, then the mean could be a pivotal statistic, since we know all information about the distribution except the statistic itself. But if we didn't know

is known, then the mean could be a pivotal statistic, since we know all information about the distribution except the statistic itself. But if we didn't know  , the mean would not be pivotal.

, the mean would not be pivotal.

Let  .

.

Then statistic  is sufficient for

is sufficient for  if and only if there exists functions

if and only if there exists functions  of

of  and

and  of

of  such that

such that

where  denotes the likelihood.

denotes the likelihood.

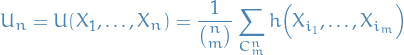

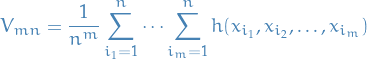

U-statistic

Let  be independent observations on a distribution

be independent observations on a distribution  .

.

Consider a "parametric function"  for which there is an unbiased estimator. That is,

for which there is an unbiased estimator. That is,  may be represented as

may be represented as

![\begin{equation*}

\theta(F) = \mathbb{E}_F \big[ h(X_1, \dots, X_m) \big] = \int \dots \int h(x_1, \dots, x_m) \dd{F(x_1)} \cdots \dd{F(x_m)}

\end{equation*}](../../assets/latex/probability_and_statistics_d65325ac98ad0431810d295a5d19bfb7fe5b58ee.png)

for some function  , called a kernel.

, called a kernel.

Without loss of generality, we may assume  is symmetric, otherwise we could simply

is symmetric, otherwise we could simply

For any kernel  , the corresponding U-statistic for estimation of

, the corresponding U-statistic for estimation of  on the basis of a sample

on the basis of a sample  for size

for size  is obtained by averaging the kernel

is obtained by averaging the kernel  symmetrically over the observations:

symmetrically over the observations:

where  denotes the summation over

denotes the summation over  combinations of

combinations of  distinct elements

distinct elements  from

from  .

.

Clearly,  is then an unbiased estimate of

is then an unbiased estimate of  .

.

To conclude, a U-statistic is then a statistic which has the property of being an unbiased estimator for the corresponding  , where

, where  is such that it can be written stated above.

is such that it can be written stated above.

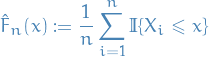

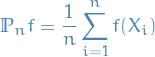

V-statistic

Statistics that can be represented as functionals  of the empirical distribution function,

of the empirical distribution function,  , are called statistical functionals.

, are called statistical functionals.

A V-statistic is a statistical function (of a sample) defined by a particular statistical functional of a probability distribution.

Suppose  is a sample. In typical applications the statistical function has a representation as the V-statistic

is a sample. In typical applications the statistical function has a representation as the V-statistic

where  is a symmetric kernel function.

is a symmetric kernel function.

is called a V-statistic of degree

is called a V-statistic of degree  .

.

Seems very much like a form of boostrap-estimate, does it not?

Informally, the type of asymptotic distribution of a statistical function depends on the order of "degeneracy," which is determined by which term is the first non-vanishing term in the Taylor expansion of the functional  .

.

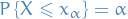

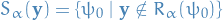

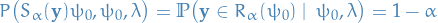

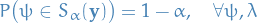

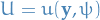

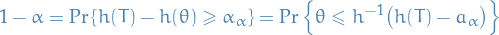

Quantiles

Let  be a random variable with cumulative density function (CDF)

be a random variable with cumulative density function (CDF)  , i.e.

, i.e.

Let  be such that

be such that

for some ![$\alpha \in [0, 1]$](../../assets/latex/probability_and_statistics_df706ca088cfab3f5c3067f3ca880bfddf0f6fa5.png) .

.

Then we say that  is called the

is called the  quantile of

quantile of  , i.e. the value such that

, i.e. the value such that

We then say:

is the median; half probability mass on each side

is the median; half probability mass on each side is the lower quantile

is the lower quantile is the upper quantile

is the upper quantile

is strictly monotonically increasing so

is strictly monotonically increasing so  exists, hence we can compute the quantiles!

exists, hence we can compute the quantiles!

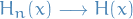

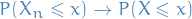

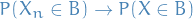

Convergence in law

A sequence of cdfs  is said to converge to

is said to converge to  iff

iff  on all continuity points of

on all continuity points of  .

.

We say that if a random variable  has cdf

has cdf  and the rv

and the rv  has cdf

has cdf  , then

, then  converges in law to

converges in law to  , and we write

, and we write

This does not mean that  and

and  are arbitrarily close as random variables. Consider the random variables

are arbitrarily close as random variables. Consider the random variables  and

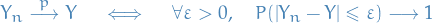

and  .

.

Let  and

and  be random variables. Then

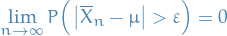

be random variables. Then

where  means converges in probability.

means converges in probability.

Or, equivalently

This is the notion used by WLLN.

If  where

where  is the limit distribution and

is the limit distribution and  then

then

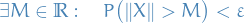

Let  be a sequence of random variables, and

be a sequence of random variables, and  be a random variable.

be a random variable.

Then

This is the notion of convergence used by SLLN.

Kurtosis

The kurtosis of a random variable is the 4th moment, i.e.

![\begin{equation*}

\text{Kurt}[X] = \kappa(X) = \mathbb{E} \Bigg[ \bigg( \frac{X - \mu}{\sigma} \bigg)^4 \Bigg] = \frac{\mu_4}{\sigma^4} = \frac{\mathbb{E}[(X - \mu)^4]}{\big(\mathbb{E}[(X - \mu)^2]\big)^2}

\end{equation*}](../../assets/latex/probability_and_statistics_999127ed41a92556298ca94fe627e7880cb44028.png)

where  denotes the 4th central moment and

denotes the 4th central moment and  is the std. dev.

is the std. dev.

Words

A collection of random variables is homoskedastic if all of these random variables have the same finite variance.

This is also known as homogeneity of variance.

A collection of random variables is heteroskedastic if there are sub-populations that have different variability from others.

Thus heteroskedasticity is the absence of homoskedastic.

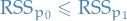

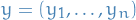

Consistency

Loosely speaking, an estimator  of parameter

of parameter  is said to be consistent, if it converges in probability to the true value of the parameter:

is said to be consistent, if it converges in probability to the true value of the parameter:

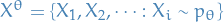

Or more rigorously, suppose  is a family of distributions (the parametric model) and

is a family of distributions (the parametric model) and  is an infinite sample from the distribution

is an infinite sample from the distribution  .

.

Let  be a sequence of estimators for some parameter

be a sequence of estimators for some parameter  . Usually

. Usually  will be based on the first

will be based on the first  observations of a sample. Then this sequence is said to be (weakly) consistent if

observations of a sample. Then this sequence is said to be (weakly) consistent if

Jeffreys Prior

The Jeffreys prior is a non-informative prior distribution for a parameter space, defiend as:

It has the key feature that it is invariant under reparametrization.

Moment generating function

For r.v.  the moment generating function is defined as

the moment generating function is defined as

![\begin{equation*}

M_x(t) = \mathbb{E} [e^{tX}], \quad t \in \mathbb{R}

\end{equation*}](../../assets/latex/probability_and_statistics_478db7622be48bf5634482c422f45780b09638e0.png)

Taking the derivative wrt.  we observe that

we observe that

![\begin{equation*}

\frac{\partial M_x}{\partial t} = \mathbb{E}[X e^{tX}]

\end{equation*}](../../assets/latex/probability_and_statistics_b737676d842bc4dfef42eab4fb0aaf297a3623ba.png)

Letting  , we get

, we get

![\begin{equation*}

\frac{\partial M_x}{\partial t} = \mathbb{E}[X]

\end{equation*}](../../assets/latex/probability_and_statistics_e540fafcfcf7bb424d3e256240d9ee11a3f94635.png)

i.e. the mean. We can take this derivative  times to obtain the expectation of

times to obtain the expectation of  , which is why

, which is why  is useful.

is useful.

Distributions

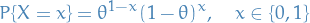

Binomial

or equivalently,

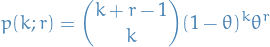

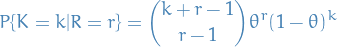

Negative binomial

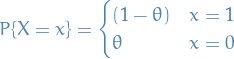

A negative binomial random variable represents the number of success  before obtaining

before obtaining  failures, where

failures, where  is the probability of a failure for each of these binomial rvs.

is the probability of a failure for each of these binomial rvs.

Derivation

Let  denote the rv. for # of successes and

denote the rv. for # of successes and  the number of failures.

the number of failures.

Suppose we have a run of  successes and

successes and  failure, then

failure, then

where the failure is of course the last thing that happens before we terminate the run.

Now, suppose that the run above is followed up by  failures, i.e.

failures, i.e.  but we have a specific ordering. Then, letting

but we have a specific ordering. Then, letting  denote the result of the i-th Binomial trail,

denote the result of the i-th Binomial trail,

But for  of the failures, we don't actually care when they happen, so we don't want any ordering. That is, we have

of the failures, we don't actually care when they happen, so we don't want any ordering. That is, we have  sequences of the form described above which are acceptable.

sequences of the form described above which are acceptable.

Hence we get the pmf

Gamma distribution

Chi-squared distribution

The chi-squared distribution with  degrees of freedom is the distribution of a sum of the squares of

degrees of freedom is the distribution of a sum of the squares of  independent standard normal random variables.

independent standard normal random variables.

It's a special case of the gamma distribution.

T-distribution

The T-distribution arises as a result of the Bessel-corrected sample variance.

Why?

Our population-model is as follows:

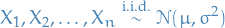

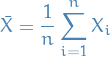

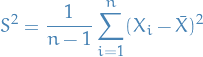

Let

then let

be the Bessel-corrected variance. Then the random variable

and the random variable

(where  has been substituted for

has been substituted for  ) has a Student's t-distribution with

) has a Student's t-distribution with  degrees of freedom.

degrees of freedom.

Note that the numerator and the denominator in the preceding expression are independent random variables, which can be proved by a simple induction.

F-distribution

A random variate of the F-distribution with parameters  and

and  arises as the ratio of the two appropriately scaled chi-quared variates:

arises as the ratio of the two appropriately scaled chi-quared variates:

where

and

and  have a chi-squared distribution with

have a chi-squared distribution with  and

and  degrees of freedom respectively

degrees of freedom respectively and

and  are independent

are independent

Power laws

Notation

such that we have a power law

such that we have a power law  , in such cases we say that the tail of the distribution follows a power law

, in such cases we say that the tail of the distribution follows a power law

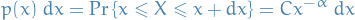

Definition

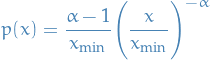

A continuous power-law is one described by probability density  such that

such that

where  is the observed value and

is the observed value and  is the normalization constant. Clearly this diverges as

is the normalization constant. Clearly this diverges as  , hence cannot hold for all

, hence cannot hold for all  ; must be a lower bound to power-law behavior.

; must be a lower bound to power-law behavior.

Provided  , we have

, we have

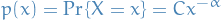

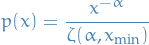

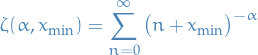

A discrete power-law is defined by

Again, diverges at zero, hence must be some lb  on power-law behaviour:

on power-law behaviour:

where

is the generalized or Hurwitz zeta function.

Important

Sources: clauset07_power_law_distr_empir_data and so_you_think_you_have_a_power_law_shalizi

- Log-log plot being roughly linear is necessary but not sufficient

- Errors are hard to estimate because they are not well-defined by the usual regression formulas, which are based on assumptions that do not apply in this case: noise of logarithm is not Gaussian as assumed by linear regression. In continuous case, this can be made worse by choice of binning scheme used to construct histogram, hence we have an additional free parameter.

- A fit to a power-law distribution can account for a large fraction of the variance even when fitted data do not follow a power law, hence high values of

("explained variance by regression fit") cannot be taken as evidence in favor of the power-law form.

("explained variance by regression fit") cannot be taken as evidence in favor of the power-law form. - Fits extracted by regression methods usually do not satisfy basic requirements on probability distributions, such as normalization and hence cannot be correct.

- Abusing linear regression. Standard methods, e.g. least-squares fitting are known to produce systematically biased estimates of parameters for power-law distributions and should not be used in most circumstances

- Use MLE to estimate scaling exponent

- Use goodness of fit to estimate scaling regions.

- In some cases there are only parts of the data which actually follows a power law.

- Method based on Kolmogorv-Smirnov goodness-of-fit statistic

- Use goodnes-of-fit test to check goodness of fit

- E.g. Kolmogorov-Smirnov test to check data drawn from estimated power law vs. real data

- Use Vuong's test to check for alternative non-power laws (see likelihood_ratio_test_for_model_selection_and_non_nested_hypothesis_vyong89)

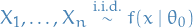

Maximum Likelihood Estimation

Notation

denotes the log-likelihood , i.e.

denotes the log-likelihood , i.e.

is the Fisher's information (expected information)

is the Fisher's information (expected information) is the observed information , i.e. information without taking the expectation

is the observed information , i.e. information without taking the expectation is called the score function

is called the score function denotes that true (and thus unobserved) parameter

denotes that true (and thus unobserved) parameter means the probability density

means the probability density  evaluated at

evaluated at

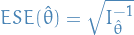

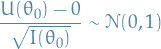

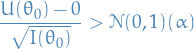

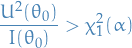

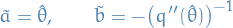

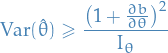

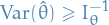

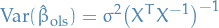

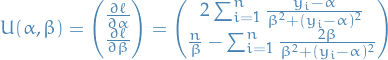

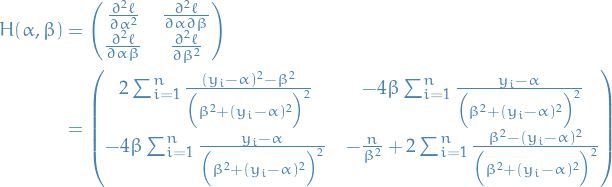

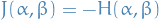

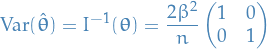

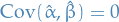

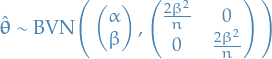

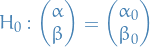

Appoximate (asymptotic) variance of MLE

For large samples (and under certain conditions ) the (asymptotic) variance of the MLE  is given by

is given by

where

![\begin{equation*}

I_\theta = \mathbb{E} \Bigg( \Bigg[ \frac{dl}{d\theta} \Bigg]^2 \Bigg) = - \mathbb{E} \Bigg( \frac{d^2 l}{d\theta^2} \Bigg)

\end{equation*}](../../assets/latex/probability_and_statistics_c6026d15fa470bb7097a7a0dde8138840c1a4282.png)

where  is called the Fisher information .

is called the Fisher information .

Estimated standard error

The estimated standard error of the MLE  of

of  is given by

is given by

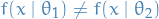

Regularity conditions

The lower bound of the variance of the MLE is true under the following conditions on the probability density function,  , and the estimator

, and the estimator  :

:

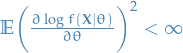

- The Fisher information is always defined; equivalently for all

such that

such that  :

:

exists and is finite.

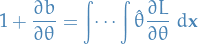

- The operations of integration wrt. to

and differentiation wrt.

and differentiation wrt.  can be interchanged in the expectation of

can be interchanged in the expectation of  ; that is,

; that is,

![\begin{equation*}

\frac{\partial}{\partial \theta} \Bigg[ \int T(x) f(x;\theta) \ dx \Bigg] = \int T(x) \Big[ \frac{\partial}{\partial \theta} f(x; \theta) \Big] \ dx

\end{equation*}](../../assets/latex/probability_and_statistics_6c64d356fe0aa2961efd3466b02125c5a6e1c96a.png)

whenever the RHS is finite. This condition can often be confirmed by using the fact that integration and differentiation can be swapped when either of the following is true:

- The function

has bounded support in

has bounded support in  , and the bounds do not depend on

, and the bounds do not depend on

- The function

has infinite support, is continuously differentiable, and the integral converges uniformly for all

has infinite support, is continuously differentiable, and the integral converges uniformly for all

Proof (single variable)

"Proof" (alternative, also single variable)

- Notation

denotes the expectation over the data

denotes the expectation over the data  , assuming the data arises from the model specified by the parameter

, assuming the data arises from the model specified by the parameter

is the same as above, but assuming

is the same as above, but assuming

denotes the score, where we've made the dependence on the data

denotes the score, where we've made the dependence on the data  explicit by including it as an argument

explicit by including it as an argument

- Stuff

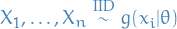

- Consistency of

as an estimator

as an estimator

Suppose the true parameter is

, that is:

, that is:

Then, for any

(not necessarily

(not necessarily  ), the Law of Large Numbersimplies the convergence in probability

), the Law of Large Numbersimplies the convergence in probability

![\begin{equation*}

\frac{1}{n} \sum_{i=1}^{n} \log f(X_i \mid \theta) \to \mathbb{E}_{\theta_0}[\log f(X \mid \theta)]

\end{equation*}](../../assets/latex/probability_and_statistics_38a1afa016cb0802911d69d3fb99b6c48d855b5c.png)

Under suitable regularity conditions, this implies that the value of

maximizing the LHS, which is

maximizing the LHS, which is  , converges in probability to the value of

, converges in probability to the value of  maximizing RHS, which we claim is

maximizing RHS, which we claim is  .

.

Indeed, for any

![\begin{equation*}

\mathbb{E}_{\theta_0}[ \log f(X \mid \theta)] - \mathbb{E}_{\theta_0} [ \log f(X \mid \theta_0)] = \mathbb{E}_{\theta_0} \Bigg[ \log \frac{f(X \mid \theta)}{f(X \mid \theta_0} \Bigg]

\end{equation*}](../../assets/latex/probability_and_statistics_8a065868d54f7d3f27f9eaa55dee2843d2a54aa5.png)

Noting that

is concave, Jensen's Inequality implies

is concave, Jensen's Inequality implies

![\begin{equation*}

\mathbb{E}[\log X] \le \log \mathbb{E}[X]

\end{equation*}](../../assets/latex/probability_and_statistics_c3d09c146a6ce43fab76633e4dfca4ca0d6f58cd.png)

for any positive random variable

, so

, so

![\begin{equation*}

\begin{split}

\mathbb{E}_{\theta_0}\Bigg[ \log \frac{f(X \mid \theta)}{f(X \mid \theta_0)} \Bigg] & \le \log \mathbb{E}_{\theta_0} \Bigg[ \frac{f(X \mid \theta)}{f(X \mid \theta_0)} \Bigg] \\

& = \log \int \frac{f(x \mid \theta)}{f(x \mid \theta_0)} f(x \mid \theta_0) \ dx \\

&= \log \int f(x \mid \theta_0) \ dx \\

&= 0

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_0ed41b771f87ebc4eb100285a0baf81662be5549.png)

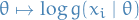

Which establishes "consistency" of

since

since ![$\theta \mapsto \mathbb{E}_{\theta_0}[\log f(X \mid \theta)]$](../../assets/latex/probability_and_statistics_95959728964caee763d8ae36db8189c9c744e019.png) is maximized at

is maximized at  .

.

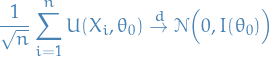

- Expectation and variance of score

For

,

,

![\begin{equation*}

\mathbb{E}_{\theta}[U(X, \theta)] = 0, \qquad \text{Var}_{\theta} \big( U(X, \theta) \big) = - \mathbb{E}[U'(X, \theta)]

\end{equation*}](../../assets/latex/probability_and_statistics_64bc641e48dae229ceb7b967982458f04c4273ae.png)

First, for the expectation we have

![\begin{equation*}

\begin{split}

\mathbb{E}_{\theta}[U] &= \int U(x, \theta) f(x; \theta) \ dx \\

&= \int \Bigg( \frac{\partial \log f(x; \theta)}{\partial \theta} \Bigg) f(x; \theta) \ dx \\

&= \int \Bigg( \frac{1}{f(x; \theta)} \frac{\partial f}{\partial \theta} \Bigg) f(x; \theta) \ dx \\

&= \int \frac{\partial f}{ \partial \theta} \ dx

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_2992b8afaa3afeabbdde632f36d8abfb74e8a772.png)

Assuming we can interchange the order of the derivative and the integral (which we can for analytic functions), we have

![\begin{equation*}

\begin{split}

\mathbb{E}_{\theta}[U(X, \theta)] &= \frac{\partial}{\partial \theta} \int f(x; \theta) \ dx \\

&= \frac{\partial}{\partial \theta} \big( 1 \big) \\

&= 0

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_e875f5d9ee6249a92e736ef5d54ef78f91fa04d6.png)

For the variance, we can differentiate the above identity:

![\begin{equation*}

\begin{split}

0 &= \frac{\partial}{\partial \theta} \mathbb{E}_{\theta}[U] \\

&= \frac{\partial}{\partial \theta} \int U(x, \theta) f(x \mid \theta) \ dx \\

&= \int U'(x, \theta) f(x \mid \theta) + U(x, \theta) \Big( \frac{\partial}{\partial \theta} f(x \mid \theta) \Big) \ dx \\

&= \int U'(x, \theta) f(x \mid \theta) \ dx + \int U(x, \theta) \Big( U(x, \theta) f(x \mid \theta) \Big) \ dx \\

&= \int U'(x, \theta) f(x \mid \theta) \ dx + \int U^2 f(x \mid \theta) \ dx \\

&= \mathbb{E}_{\theta}[U'] + \mathbb{E}_{\theta}[U^2] \\

&= \mathbb{E}_{\theta}[U'] + \text{Var}_{\theta}(U^2)

\end{split}

\end{equation*}](../../assets/latex/probability_and_statistics_0c91422235ea46c6b9b99425dbd18573eb2ecebd.png)

where we've used the fact that

and

and ![$\mathbb{E}_{\theta}[U] = 0$](../../assets/latex/probability_and_statistics_01a6c4156e5615ab2aa85e4d2d4df02bc654b0f5.png) , which implies that

, which implies that ![$\text{Var}_{\theta}(U) = \mathbb{E}_{\theta}[U^2]$](../../assets/latex/probability_and_statistics_a5e3956f996d406f4942a9948347f460d0275fcb.png) .

.

This is equivalent to

![\begin{equation*}

\text{Var}_{\theta}(U^2) = - \mathbb{E}_{\theta}[U']

\end{equation*}](../../assets/latex/probability_and_statistics_dfafe59504c65eb46349a7c5653e73f1b550e663.png)

as wanted.

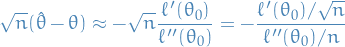

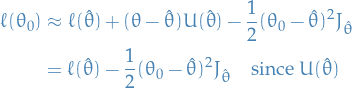

- Asymptotic behavior

Now, since

maximizes

maximizes  , we must have

, we must have  .

.

Consistency of

ensures that

ensures that  is close to

is close to  (for large n, with high probability). Thus, we an apply first-order Taylor expansion to the equation

(for large n, with high probability). Thus, we an apply first-order Taylor expansion to the equation  about the point

about the point  :

:

Thus,

For the denominator, we rewrite as

![\begin{equation*}

\begin{split}