Measure theory


Notation

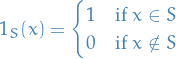

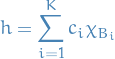

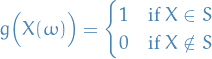

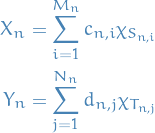

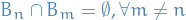

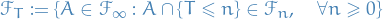

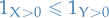

and

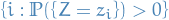

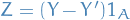

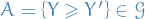

and $\chi_A$ are used to denote the indicator (or characteristic) function of a set $A$, i.e. the function equal to $1$ on $A$ and $0$ elsewhere.

Definition

Motivation

The motivation behind defining such a thing is related to the Banach-Tarski paradox, which says that it is possible to decompose the 3-dimensional solid unit ball into finitely many pieces and, using only rotations and translations, reassemble the pieces into two solid balls each with the same volume as the original. The pieces in the decomposition, constructed using the axiom of choice, are non-measurable sets.

Informally, the axiom of choice says that given a collection of bins, each containing at least one object, it is possible to select exactly one object from each bin.

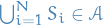

Measure space

If $X$ is a set with the sigma-algebra $\mathcal{A}$ and the measure $\mu$, then we have a measure space $(X, \mathcal{A}, \mu)$.

Product measure

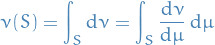

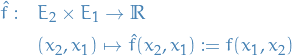

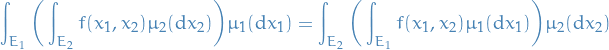

Given two measurable spaces and measures on them, one can obtain a product measurable space and a product measure on that space.

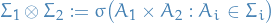

A product measure $\mu_1 \times \mu_2$ is defined to be a measure on the measurable space $(X_1 \times X_2, \Sigma_1 \otimes \Sigma_2)$, where we've let $\Sigma_1 \otimes \Sigma_2$ be the σ-algebra on the Cartesian product $X_1 \times X_2$. This sigma-algebra is called the tensor-product sigma-algebra on the product space, which is defined

$$\Sigma_1 \otimes \Sigma_2 = \sigma\big( \{ B_1 \times B_2 : B_1 \in \Sigma_1, \ B_2 \in \Sigma_2 \} \big)$$

The product measure $\mu_1 \times \mu_2$ is then required to satisfy the property

$$(\mu_1 \times \mu_2)(B_1 \times B_2) = \mu_1(B_1) \, \mu_2(B_2) \quad \text{for all } B_1 \in \Sigma_1, \ B_2 \in \Sigma_2$$
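As a minimal sketch, assuming finite spaces on which every subset is measurable (so the tensor-product σ-algebra is the full power set of $X_1 \times X_2$), a measure can be stored as a dict of point masses. The helper names `product_measure`, `measure` and `subsets` are hypothetical; the check confirms the rectangle property for every pair $B_1, B_2$.

```python
from fractions import Fraction
from itertools import combinations, product


def subsets(points):
    """All subsets of a finite set, as Python sets."""
    points = list(points)
    return [set(c) for r in range(len(points) + 1) for c in combinations(points, r)]


def measure(masses, A):
    """mu(A) for a measure given by point masses on a finite set."""
    return sum((masses[p] for p in A), Fraction(0))


def product_measure(mu1, mu2):
    """Point masses of mu1 x mu2 on X1 x X2: (mu1 x mu2)({(x, y)}) = mu1({x}) * mu2({y})."""
    return {(x, y): mu1[x] * mu2[y] for x, y in product(mu1, mu2)}


mu1 = {"a": Fraction(1, 2), "b": Fraction(1, 2)}
mu2 = {0: Fraction(1, 3), 1: Fraction(2, 3), 2: Fraction(0)}
mu = product_measure(mu1, mu2)

# rectangle property: (mu1 x mu2)(B1 x B2) = mu1(B1) * mu2(B2)
rectangle_property = all(
    measure(mu, set(product(B1, B2))) == measure(mu1, B1) * measure(mu2, B2)
    for B1 in subsets(mu1)
    for B2 in subsets(mu2)
)
print(rectangle_property)  # True
```

On a finite space the product measure is determined by its values on single points, which are themselves rectangles $\{x\} \times \{y\}$; that is why defining it through point masses is enough here.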

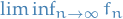

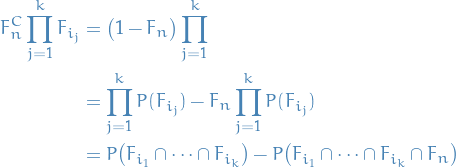

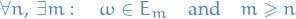

Let $(a_n)_{n \in \mathbb{N}}$ be a sequence of extended real numbers.

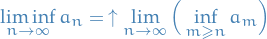

The limit inferior is defined as $\liminf_{n \to \infty} a_n = \sup_{n \ge 1} \inf_{k \ge n} a_k$.

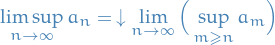

The limit supremum is defined as $\limsup_{n \to \infty} a_n = \inf_{n \ge 1} \sup_{k \ge n} a_k$.
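Numerically one can only look at finitely many terms, so the following is a rough sketch only (the truncation length and the choice to use only tails starting in the first half of the prefix are arbitrary assumptions). It computes tail infima and suprema of $a_n = (-1)^n (1 + 1/n)$, whose limit inferior is $-1$ and limit supremum is $1$.

```python
def liminf_limsup_approx(seq):
    """Approximate (liminf, limsup) of a sequence from a finite prefix.

    suffix_inf[i] = inf_{k >= i} a_k and suffix_sup[i] = sup_{k >= i} a_k,
    both taken over the finite prefix only.
    """
    n = len(seq)
    suffix_inf, suffix_sup = [0.0] * n, [0.0] * n
    lo = hi = seq[-1]
    for i in range(n - 1, -1, -1):
        lo, hi = min(lo, seq[i]), max(hi, seq[i])
        suffix_inf[i], suffix_sup[i] = lo, hi
    # only use tails that still contain many terms, to limit truncation effects
    half = n // 2
    liminf = max(suffix_inf[:half])  # sup_n inf_{k >= n} a_k
    limsup = min(suffix_sup[:half])  # inf_n sup_{k >= n} a_k
    return liminf, limsup


seq = [(-1) ** k * (1 + 1 / k) for k in range(1, 10_001)]
li, ls = liminf_limsup_approx(seq)
print(li, ls)  # close to -1 and 1
```

Computing suffix infima and suprema in one backward pass keeps this linear in the number of terms, rather than recomputing each tail from scratch.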

Premeasure

Given a space $X$, a collection of sets $\mathcal{A} \subseteq \mathcal{P}(X)$ is an algebra of sets on $X$ if

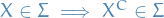

- If $A \in \mathcal{A}$, then $X \setminus A \in \mathcal{A}$

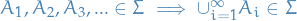

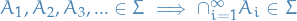

- If $A$ and $B$ are in $\mathcal{A}$, then $A \cup B \in \mathcal{A}$

Thus, an algebra of sets is only required to be closed under finite unions, unlike σ-algebras, which must be closed under countable unions.
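On a finite set the definition can be checked directly. A minimal sketch, assuming the first condition (shown only as an image above) is $\emptyset \in \mathcal{A}$; the function name `is_algebra` is hypothetical. On a finite set every algebra is automatically a σ-algebra, since there are only finitely many distinct unions.

```python
from itertools import combinations


def is_algebra(X, family):
    """Check the algebra-of-sets axioms for a family of subsets of a finite set X."""
    X = frozenset(X)
    A = {frozenset(S) for S in family}
    if frozenset() not in A:
        return False
    # closed under complements
    if any(X - S not in A for S in A):
        return False
    # closed under (finite) unions; pairwise unions suffice by induction
    return all(S | T in A for S, T in combinations(A, 2))


X = {1, 2, 3, 4}
print(is_algebra(X, [set(), {1, 2}, {3, 4}, X]))                  # True
print(is_algebra(X, [set(), {1}, {2}, {2, 3, 4}, {1, 3, 4}, X]))  # False: {1} | {2} missing
```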

Given a space $X$ and an algebra $\mathcal{A}$, a premeasure is a function ![$\lambda: \mathcal{A} \to [0, \infty]$](../../assets/latex/measure_theory_e17a0e8668dbd5fd1ed0e08c218ece154e7c2ed7.png) such that

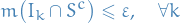

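For every finite or countable collection of disjoint sets $(A_n)_n$ with $A_n \in \mathcal{A}$, if $\bigcup_n A_n \in \mathcal{A}$ then

$$\lambda\Big( \bigcup_n A_n \Big) = \sum_n \lambda(A_n)$$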

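Observe that the last property only applies IF this "possibly large" union is in the algebra; THEN its premeasure equals the sum. (Unlike a σ-algebra, an algebra need not contain countable unions of its members.)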

A premeasure space is a triple $(X, \mathcal{A}, \lambda)$ where $X$ is a space, $\mathcal{A}$ is an algebra, and $\lambda$ a premeasure.

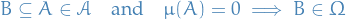

Complete measure

A complete measure (or, more precisely, a complete measure space $(X, \Sigma, \mu)$) is a measure space in which every subset of every null set is measurable (having measure zero).

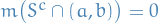

More formally, $(X, \Sigma, \mu)$ is complete if and only if

$$S \subseteq N \in \Sigma \ \text{ and } \ \mu(N) = 0 \implies S \in \Sigma$$
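For a finite measure space, completeness can be tested by brute force over the subsets of each null set. A minimal sketch, with the measure stored as a dict from the `frozenset`s in $\Sigma$ to their measure (a representation chosen here for illustration; `is_complete` is a hypothetical name):

```python
from itertools import combinations


def subsets(S):
    S = list(S)
    return (frozenset(c) for r in range(len(S) + 1) for c in combinations(S, r))


def is_complete(sigma, mu):
    """True iff every subset of every mu-null set in sigma is itself in sigma."""
    sigma = {frozenset(S) for S in sigma}
    for N in sigma:
        if mu[N] == 0 and any(S not in sigma for S in subsets(N)):
            return False
    return True


X = frozenset({1, 2, 3})
sigma = [frozenset(), frozenset({1, 2}), frozenset({3}), X]

# {1, 2} is null but its subset {1} is not measurable -> not complete
mu_a = {frozenset(): 0, frozenset({1, 2}): 0, frozenset({3}): 1, X: 1}
# the only null sets are {} and {3}, whose subsets are all in sigma -> complete
mu_b = {frozenset(): 0, frozenset({1, 2}): 1, frozenset({3}): 0, X: 1}

print(is_complete(sigma, mu_a), is_complete(sigma, mu_b))  # False True
```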

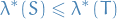

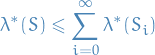

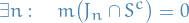

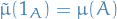

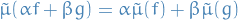

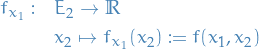

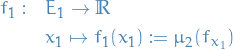

If $(X, \mathcal{A}, \lambda)$ is a premeasure space, then there is a complete measure space $(X, \mathcal{M}, \mu)$ such that

- $\mathcal{A} \subseteq \mathcal{M}$
- for all $A \in \mathcal{A}$ we have $\mu(A) = \lambda(A)$

If $\lambda$ is σ-finite, then $\mu$ is the only measure on $\mathcal{M}$ that is equal to $\lambda$ on $\mathcal{A}$.

Atomic measure

Let $(X, \Sigma, \mu)$ be a measure space.

Then a set $A \in \Sigma$ is called an atom if

$$\mu(A) > 0$$

and for any measurable subset $B \subseteq A$ with $\mu(B) < \mu(A)$, we have $\mu(B) = 0$.

A measure $\mu$ which has no atoms is called non-atomic or diffuse.

In other words, a measure $\mu$ is non-atomic if for any measurable set $A$ with $\mu(A) > 0$, there exists a measurable subset $B \subseteq A$ s.t.

$$\mu(A) > \mu(B) > 0$$

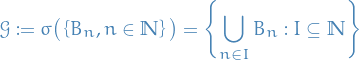

π-system

Let $\Omega$ be any set. A family $\mathcal{P}$ of subsets of $\Omega$ is called a π-system if

If $A, B \in \mathcal{P}$, then $A \cap B \in \mathcal{P}$, i.e. $\mathcal{P}$ is closed under finite intersections.

So this is an even weaker notion than being a (Boolean) algebra. We introduce it because it is sufficient for proving uniqueness of measures:
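A small finite illustration (a sketch; `is_pi_system` is a hypothetical helper, and it also requires the family to be non-empty, a common convention): a chain of nested sets is a π-system but need not be closed under complements, so it is not an algebra. On $\mathbb{R}$ the half-lines $(-\infty, a]$ form a π-system in the same way, since $(-\infty, a] \cap (-\infty, b] = (-\infty, \min(a, b)]$.

```python
from itertools import combinations


def is_pi_system(family):
    """Non-empty and closed under pairwise (hence finite) intersections."""
    P = {frozenset(S) for S in family}
    return len(P) > 0 and all(S & T in P for S, T in combinations(P, 2))


X = frozenset({1, 2, 3})
chain = [{1}, {1, 2}, {1, 2, 3}]
print(is_pi_system(chain))                          # True
print(X - frozenset({1}) in map(frozenset, chain))  # False: not closed under complements
print(is_pi_system([{1, 2}, {2, 3}]))               # False: {2} is missing
```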

Theorems

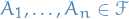

Jensen's inequality

Let

- $(\Omega, \mathcal{F}, \mathbb{P})$ be a probability space
- $X: \Omega \to \mathbb{R}$ be an integrable random variable
- ![$c: \mathbb{R} \to (-\infty, \infty]$](../../assets/latex/measure_theory_0fbf01fc0b204736d63b072e13cefceee1e07280.png) be a convex function

Then

$$c\big( \mathbb{E}[X] \big) \le \mathbb{E}[c(X)]$$

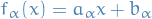

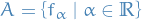

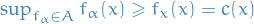

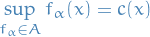

Then $c$ is the supremum of a sequence of affine functions

$$c(x) = \sup_{n \in \mathbb{N}} \big( a_n x + b_n \big)$$

for $x \in \mathbb{R}$, with $a_n, b_n \in \mathbb{R}$.

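Then, since $c(X) \ge a_n X + b_n$ for every $n$ and the right-hand side is integrable, ![$\mathbb{E}[c(X)]$](../../assets/latex/measure_theory_a9eba1896bb741b60c40f7b709c13a7dfdf79cbf.png) is well-defined (its negative part is integrable), and taking expectations gives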

![\begin{equation*}

\mathbb{E}[c(X)] \overset{a.s.}{\ge} a_n \mathbb{E}[X] + b_n

\end{equation*}](../../assets/latex/measure_theory_27722a1c52318e0443a664830effa0bb1a3503a3.png)

Taking the supremum over $n$ in this inequality, we obtain

![\begin{equation*}

\mathbb{E}[c(X)] \ge c \big( \mathbb{E}[X] \big)

\end{equation*}](../../assets/latex/measure_theory_8c3280bc7d5e2fce41ec6d17771166c73ae27475.png)
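A quick sanity check of the inequality on a discrete distribution (an illustrative choice: $X \in \{-1, 0, 2\}$ with probabilities $\tfrac14, \tfrac14, \tfrac12$ and $c(x) = x^2$). For this particular $c$ the gap $\mathbb{E}[c(X)] - c(\mathbb{E}[X])$ is exactly the variance of $X$.

```python
from fractions import Fraction


def expectation(dist, g=lambda x: x):
    """E[g(X)] for a finite distribution {value: probability}."""
    return sum((p * g(x) for x, p in dist.items()), Fraction(0))


dist = {-1: Fraction(1, 4), 0: Fraction(1, 4), 2: Fraction(1, 2)}
assert sum(dist.values()) == 1


def c(x):
    return x * x  # convex


lhs = c(expectation(dist))  # c(E[X])
rhs = expectation(dist, c)  # E[c(X)]
print(lhs, rhs, lhs <= rhs)  # 9/16 9/4 True
```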

Let ![$c: \mathbb{R} \to (-\infty, \infty]$](../../assets/latex/measure_theory_0fbf01fc0b204736d63b072e13cefceee1e07280.png) be a convex function. Then $c$ is the supremum of a sequence of affine functions.

Suppose $c$ is convex, then for each point $\alpha$ there exists an affine function $f_\alpha(x) = a_\alpha x + b_\alpha$ s.t.

- the line $L_\alpha$ corresponding to $f_\alpha$ passes through $\big( \alpha, c(\alpha) \big)$
- the graph of $c$ lies entirely above $L_\alpha$

Let $\mathcal{L} = \{ f_\alpha \}_\alpha$ be the set of all such functions. We have

$$\sup_{f \in \mathcal{L}} f(x) \ge f_x(x) = c(x)$$

because $f_x$ passes through the point $\big( x, c(x) \big)$, and

$$\sup_{f \in \mathcal{L}} f(x) \le c(x)$$

because all $f_\alpha$ lie below $c$. Hence

$$c(x) = \sup_{f \in \mathcal{L}} f(x)$$

(note this is for each $x$, i.e. pointwise).
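For the smooth convex function $c(x) = x^2$ (an illustrative choice), the tangent at $\alpha$ is $f_\alpha(x) = 2\alpha x - \alpha^2$, and $c(x) - f_\alpha(x) = (x - \alpha)^2 \ge 0$. The sketch below takes the supremum over a finite grid of rational $\alpha$. For a convex $c$ that is finite on all of $\mathbb{R}$, restricting $\alpha$ to a countable dense set is what lets the supremum be taken over a sequence, as in the statement above.

```python
def c(x):
    return x * x


def tangent(alpha):
    """Affine function through (alpha, c(alpha)) with slope c'(alpha) = 2 * alpha."""
    return lambda x: c(alpha) + 2 * alpha * (x - alpha)


# finite grid of rational points alpha = k / 100 in [-5, 5]
tangents = [tangent(k / 100) for k in range(-500, 501)]


def sup_of_tangents(x):
    return max(f(x) for f in tangents)


for x in [-2.3, 0.0, 1.234, 4.99]:
    gap = c(x) - sup_of_tangents(x)
    print(x, gap)  # gap is >= 0 (up to rounding) and at most 0.005**2
```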

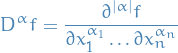

Sobolev space

Notation

- $\Omega$ is an open subset of $\mathbb{R}^n$
- $\varphi \in C_c^\infty(\Omega)$ denotes an infinitely differentiable function $\varphi$ with compact support
- $\alpha$ is a multi-index of order $|\alpha|$, i.e. $|\alpha| = \alpha_1 + \dots + \alpha_n$

Definition

Vector space of functions equipped with a norm that is a combination of $L^p$ norms of the function itself and its derivatives up to a given order.

Intuitively, a Sobolev space is a space of functions with sufficiently many derivatives for some application domain, e.g. PDEs, and equipped with a norm that measures both size and regularity of a function.

The Sobolev spaces $W^{k,p}(\Omega)$ combine the concepts of weak differentiability and Lebesgue norms (i.e. $L^p$ spaces).

For a proper definition for the different cases of the dimension $n$ of the space $\mathbb{R}^n$, have a look at Wikipedia.

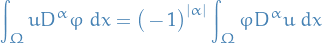

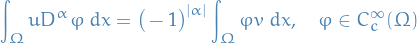

Motivation

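Integration by parts yields that for every $u \in C^k(\Omega)$ where $k \in \mathbb{N}$, and for all infinitely differentiable functions with compact support $\varphi \in C_c^\infty(\Omega)$:

$$\int_\Omega u \, D^\alpha \varphi \, dx = (-1)^{|\alpha|} \int_\Omega \varphi \, D^\alpha u \, dx$$

where $\alpha$ is a multi-index of order $|\alpha| = k$.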

Observe that the LHS only makes sense if we assume $u$ to be locally integrable. If there exists a locally integrable function $v$, such that

$$\int_\Omega u \, D^\alpha \varphi \, dx = (-1)^{|\alpha|} \int_\Omega v \, \varphi \, dx \quad \text{for all } \varphi \in C_c^\infty(\Omega)$$

we call $v$ the weak $\alpha$-th partial derivative of $u$. If this exists, then it is uniquely defined almost everywhere, and thus it is uniquely determined as an element of a Lebesgue space (here $L^1_{\mathrm{loc}}(\Omega)$).

On the other hand, if $u \in C^k(\Omega)$, then the classical and the weak derivative coincide!

Thus, if $v$ is a weak $\alpha$-th partial derivative of $u$, we may denote it by $D^\alpha u := v$.

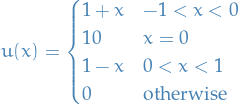

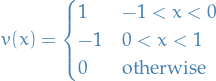

Example

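The function

$$u(x) = \begin{cases} 1 + x & -1 < x < 0 \\ 10 & x = 0 \\ 1 - x & 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$

is not continuous at zero, and not differentiable at −1, 0, or 1. Yet the function

$$v(x) = \begin{cases} 1 & -1 < x < 0 \\ -1 & 0 < x < 1 \\ 0 & \text{otherwise} \end{cases}$$

satisfies the definition of being the weak derivative of $u$, which then qualifies as being in the Sobolev space $W^{1,p}$ (for any allowed $p$).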
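The weak-derivative identity $\int u \, \varphi' \, dx = -\int v \, \varphi \, dx$ can be checked numerically for a single test function. A sketch under stated assumptions: the test function is a standard smooth bump $\varphi(x) = \exp\big(-1/(1 - t^2)\big)$ with $t = (x - 0.2)/0.5$ (our choice, supported on $(-0.3, 0.7)$ so that it straddles the kink at $0$), and the integrals use a plain midpoint rule. This checks one $\varphi$, not the definition for all $\varphi \in C_c^\infty$.

```python
import math


def u(x):
    """The function from the example (its value 10 at x = 0 does not matter a.e.)."""
    if -1 < x < 0:
        return 1 + x
    if x == 0:
        return 10.0
    if 0 < x < 1:
        return 1 - x
    return 0.0


def v(x):
    """The claimed weak derivative of u."""
    if -1 < x < 0:
        return 1.0
    if 0 < x < 1:
        return -1.0
    return 0.0


def bump(x, c=0.2, r=0.5):
    """Smooth test function supported on (c - r, c + r)."""
    t = (x - c) / r
    s = 1.0 - t * t
    return math.exp(-1.0 / s) if s > 0 else 0.0


def bump_prime(x, c=0.2, r=0.5):
    """Derivative of bump, by the chain rule."""
    t = (x - c) / r
    s = 1.0 - t * t
    return bump(x, c, r) * (-2.0 * t / s**2) / r if s > 0 else 0.0


def midpoint(g, a, b, n=100_000):
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


lhs = midpoint(lambda x: u(x) * bump_prime(x), -1.0, 1.0)  # integral of u * phi'
rhs = -midpoint(lambda x: v(x) * bump(x), -1.0, 1.0)       # minus integral of v * phi
print(lhs, rhs)  # the two values agree up to discretisation error
```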

Lebesgue measure

Notation

$\mathcal{M}$ denotes the collection of all (Lebesgue) measurable sets

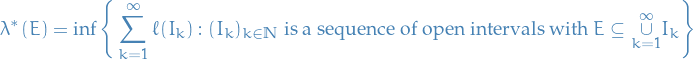

Stuff

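Given a subset $E \subseteq \mathbb{R}$, with the length of a closed interval ![$I = [a,b]$](../../assets/latex/measure_theory_3fdf3f4bf882725c6261a1b413e5bc0b103e1281.png) given by $\ell(I) = b - a$ (and likewise for open and half-open intervals), the Lebesgue outer measure $\lambda^*(E)$ is defined as

$$\lambda^*(E) = \inf \Big\{ \sum_{k=1}^{\infty} \ell(I_k) : (I_k)_{k \in \mathbb{N}} \text{ a sequence of open intervals with } E \subseteq \bigcup_{k=1}^{\infty} I_k \Big\}$$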

Lebesgue outer-measure has the following properties:

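$\lambda^*(\emptyset) = 0$. Idea: cover $\emptyset$ by $I_k = (0, \varepsilon 2^{-k})$ for arbitrary $\varepsilon > 0$, so $\lambda^*(\emptyset) \le \varepsilon$.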

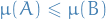

(Monotonicity) If $A \subseteq B$, then $\lambda^*(A) \le \lambda^*(B)$.

Idea: a cover of $B$ is a cover of $A$.

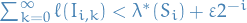

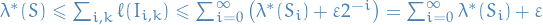

(Countable subadditivity) For every set $A$ and every sequence of sets $(A_n)_{n \in \mathbb{N}}$, if $A \subseteq \bigcup_n A_n$ then

$$\lambda^*(A) \le \sum_n \lambda^*(A_n)$$

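Idea: for a given $\varepsilon > 0$, construct a cover $(I_{n,k})_{k \in \mathbb{N}}$ of each $A_n$ such that $\sum_k \ell(I_{n,k}) \le \lambda^*(A_n) + \varepsilon / 2^n$. Then $(I_{n,k})_{n,k}$ is a countable cover of $A$ with total length at most $\sum_n \lambda^*(A_n) + \varepsilon$:

- Every point in $A$ is in one of the $A_n$
- Every point in $A_n$ is in one of the $I_{n,k}$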

Q: Is it possible for every $E \subseteq \mathbb{R}$ to find a cover $(I_k)_{k \in \mathbb{N}}$ such that $\sum_k \ell(I_k) = \lambda^*(E)$, i.e. is the infimum always attained?

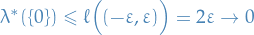

A: No. Consider $E = \{0\}$. Given $\varepsilon > 0$, consider $I_1 = (-\varepsilon, \varepsilon)$.

This is a cover of $\{0\}$ so $\lambda^*(\{0\}) \le 2\varepsilon$, and hence $\lambda^*(\{0\}) = 0$.

If $(I_k)_{k \in \mathbb{N}}$ is a cover by open intervals of $\{0\}$, then there is at least one $k$ such that $0 \in I_k$. Then $I_k$ is a nonempty open interval, so it has a strictly positive length, and

$$\sum_j \ell(I_j) \ge \ell(I_k) > 0 = \lambda^*(\{0\})$$

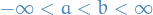

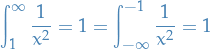

If $a < b$, then

![\begin{equation*}

\lambda^* \big( [a, b] \big) = \lambda^*([a, b)) = \lambda^* \big( (a, b] \big) = \lambda^* \big( (a, b) \big)

\end{equation*}](../../assets/latex/measure_theory_e6c6a70aa01d16ddfce344bbaedabbd1746e51f5.png)

Idea: ![$\big( a ,b \big) \subseteq [a, b]$](../../assets/latex/measure_theory_c7ddd35e2c2b0a06fa21ecb3622aa3c7a90f9cd9.png), so ![$\lambda^* \big( (a, b) \big) \le \lambda^* \big( [a, b] \big)$](../../assets/latex/measure_theory_a246293098d05d0199b421bc104c358809bd3568.png) by monotonicity.

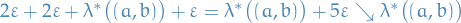

For the reverse, given $\varepsilon > 0$, cover $(a, b)$ by open intervals giving a sum within $\varepsilon$ of $\lambda^*\big( (a, b) \big)$.

Then cover $\{a\}$ and $\{b\}$ by open intervals of length $\varepsilon$.

Put the 2 new sets at the start of the sequence, to get a cover of ![$[a, b]$](../../assets/latex/measure_theory_c9a1e8df376ecb942b106e02d3e6d1b417da2600.png), and the sum of the lengths is at most $\lambda^*\big( (a, b) \big) + 3\varepsilon$. Since $\varepsilon$ is arbitrary, $\lambda^*\big( [a, b] \big) \le \lambda^*\big( (a, b) \big)$, and the half-open intervals lie in between by monotonicity. Hence,

![\begin{equation*}

\lambda^* \big( (a, b) \big) \le \lambda^* \big( [a, b] \big) \text{ and } \lambda^* \big( [a, b] \big) \le \lambda^* \big( (a, b) \big)

\end{equation*}](../../assets/latex/measure_theory_e07a5a74202cf48119137eaee61040e633e6ac87.png)

If $I$ is an open interval, then $\lambda^*(I) = \ell(I)$.

Idea: the upper bound $\lambda^*(I) \le \ell(I)$ holds because $I$ covers itself; the lower bound comes from compactness.


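Only bounded nonempty intervals are interesting.

Take the closure $[a, b]$ to get a compact set. Given a countable cover by open intervals, reduce to a finite subcover.

Then arrange the finite collection of intervals in increasing order of their left endpoints, dropping unnecessary sets.

Call these new intervals $(a_1, b_1), \dots, (a_N, b_N)$, where $N$ is the number of such intervals, such that

$$a_1 < a, \quad b < b_N, \quad a_{k+1} < b_k \ \text{ for } k = 1, \dots, N - 1$$

i.e. the left-most interval covers the starting point, the right-most interval covers the end point, and consecutive intervals overlap. Then

$$\sum_{k=1}^{N} (b_k - a_k) \ge \sum_{k=1}^{N-1} (a_{k+1} - a_k) + (b_N - a_N) = b_N - a_1 > b - a$$

The sum over the original countable cover is at least the sum over this finite subcover, so taking the infimum over all covers, $\lambda^*\big( [a, b] \big) \ge b - a$, and hence $\lambda^*(I) = \lambda^*\big( [a, b] \big) = b - a = \ell(I)$.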
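The "chain of overlapping intervals" argument can be mirrored in code. A minimal sketch (the function names are hypothetical): `covers` walks from $a$ to the right, at each step jumping to the furthest right endpoint among the open intervals containing the current point, in the spirit of the ordered subcover $(a_1, b_1), \dots, (a_N, b_N)$ above; whenever it succeeds, the total length exceeds $b - a$.

```python
def covers(intervals, a, b):
    """Check whether finitely many open intervals (l, r) cover the closed interval [a, b]."""
    p = a  # every point of [a, p) is already known to be covered
    while p <= b:
        # among the intervals containing p, take the one reaching furthest right
        reach = max((r for l, r in intervals if l < p < r), default=None)
        if reach is None:
            return False  # p itself is not covered
        p = reach  # (l, reach) with l < p covers [p, reach); reach itself may not be covered
    return True


def total_length(intervals):
    return sum(r - l for l, r in intervals)


chain = [(-0.1, 0.4), (0.3, 0.8), (0.7, 1.05)]
print(covers(chain, 0, 1), total_length(chain))  # True, about 1.35 (> 1)

gap = [(-0.1, 0.5), (0.5, 1.1)]  # open intervals: the point 0.5 is missed
print(covers(gap, 0, 1))  # False
```

The loop terminates because `p` strictly increases and only ever takes one of the finitely many right endpoints as its value.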

The Lebesgue measure is then defined on the Lebesgue sigma-algebra, which is the collection of all the sets $E \subseteq \mathbb{R}$ which satisfy the condition that, for every $A \subseteq \mathbb{R}$,

$$\lambda^*(A) = \lambda^*(A \cap E) + \lambda^*(A \cap E^c)$$

For any set $E$ in the Lebesgue sigma-algebra, its Lebesgue measure is given by its Lebesgue outer measure: $\lambda(E) = \lambda^*(E)$.
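The measurability condition can be tested by brute force when the underlying set is finite. This sketch uses two toy outer measures on $X = \{1, 2, 3\}$ (illustrative assumptions, not Lebesgue outer measure): $\mu^*(A) = 1$ for every nonempty $A$, for which only $\emptyset$ and $X$ satisfy the condition, and the counting measure, for which every subset does.

```python
from itertools import combinations


def subsets(X):
    X = list(X)
    return [frozenset(c) for r in range(len(X) + 1) for c in combinations(X, r)]


def caratheodory_measurable(E, X, outer):
    """E is measurable iff outer(A) = outer(A & E) + outer(A - E) for every test set A."""
    E = frozenset(E)
    return all(outer(A) == outer(A & E) + outer(A - E) for A in subsets(X))


X = {1, 2, 3}


def one_if_nonempty(A):
    return 1 if A else 0


trivial = [S for S in subsets(X) if caratheodory_measurable(S, X, one_if_nonempty)]
counting = [S for S in subsets(X) if caratheodory_measurable(S, X, len)]
print(trivial)        # [frozenset(), frozenset({1, 2, 3})]
print(len(counting))  # 8
```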

IMPORTANT!!! This is not necessarily related to the Lebesgue integral! It CAN be, but the integral is more general than JUST integrating wrt. Lebesgue measure.

Intuition

- The first part of the definition states that the subset $E$ is reduced to its outer measure by coverage by sets of intervals
- Each set of intervals $(I_k)_k$ covers $E$ in the sense that when the intervals are combined together by union, they contain $E$
- The total length of any covering interval set can easily overestimate the measure of $E$, because $E$ is a subset of the union of the intervals, and so the intervals may include points which are not in $E$

Lebesgue outer measure emerges as the greatest lower bound (infimum) of the lengths from among all possible such sets. Intuitively, it is the total length of those interval sets which fit $E$ most tightly and do not overlap.

In my own words: Lebesgue outer measure is the smallest (more precisely, the infimum, which need not be attained) sum of the lengths of intervals $I_k$ s.t. the union of these intervals $\bigcup_k I_k$ completely "covers" (i.e. contains) $E$.

If you take a real interval ![$I = [a, b]$](../../assets/latex/measure_theory_f5678fa616e44d479ff19a74dbf6956a9abbbf8c.png), then the Lebesgue outer measure is simply $\lambda^*(I) = b - a$.

Properties

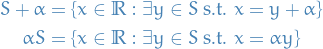

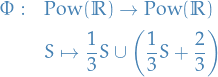

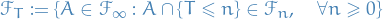

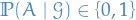

Notation

For $A \subseteq \mathbb{R}$ and $x \in \mathbb{R}$, we let

$$A + x = \{ a + x : a \in A \}$$

Stuff

The collection of Lebesgue measurable sets is a sigma-algebra.

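It is easy to see that $\mathbb{R}$ is in this collection: for every $A \subseteq \mathbb{R}$,

$$\lambda^*(A \cap \mathbb{R}) + \lambda^*(A \cap \emptyset) = \lambda^*(A) + 0 = \lambda^*(A)$$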

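Closed under complements is clear: let $E$ be Lebesgue measurable, then for every $A \subseteq \mathbb{R}$

$$\lambda^*(A) = \lambda^*(A \cap E) + \lambda^*(A \cap E^c)$$

This condition is symmetric in $E$ and $E^c$ (since $(E^c)^c = E$), hence it also holds for $E^c$, and so $E^c$ is Lebesgue measurable.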

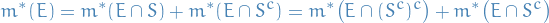

- Closed under countable unions:

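Finite case: $E_1 \cup E_2$.

Consider $E_1, E_2$ both Lebesgue measurable and some set $A \subseteq \mathbb{R}$.

Since $E_1$ is L. measurable:

$$\lambda^*(A) = \lambda^*(A \cap E_1) + \lambda^*(A \cap E_1^c)$$

Since $E_2$ is L. measurable (applied to the test set $A \cap E_1^c$):

$$\lambda^*(A \cap E_1^c) = \lambda^*(A \cap E_1^c \cap E_2) + \lambda^*(A \cap E_1^c \cap E_2^c)$$

which allows us to rewrite the above equation for $\lambda^*(A)$, using $E_1^c \cap E_2^c = (E_1 \cup E_2)^c$:

$$\lambda^*(A) = \lambda^*(A \cap E_1) + \lambda^*(A \cap E_1^c \cap E_2) + \lambda^*\big( A \cap (E_1 \cup E_2)^c \big)$$

Observe that

$$A \cap (E_1 \cup E_2) = (A \cap E_1) \cup (A \cap E_1^c \cap E_2)$$

By subadditivity:

$$\lambda^*\big( A \cap (E_1 \cup E_2) \big) \le \lambda^*(A \cap E_1) + \lambda^*(A \cap E_1^c \cap E_2)$$

Hence,

$$\lambda^*(A) \ge \lambda^*\big( A \cap (E_1 \cup E_2) \big) + \lambda^*\big( A \cap (E_1 \cup E_2)^c \big)$$

and the reverse inequality always holds by subadditivity, so $E_1 \cup E_2$ is L. measurable.

Then this follows for all finite cases by induction.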

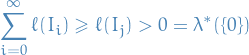

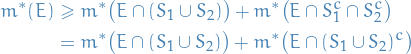

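Countable disjoint case: Let $(E_k)_{k \in \mathbb{N}}$ be disjoint L. measurable sets, $E = \bigcup_{k=1}^{\infty} E_k$, and $A \subseteq \mathbb{R}$. Further, let $F_n = \bigcup_{k=1}^{n} E_k$.

By the finite case, $F_n$ is L. measurable. Thus, since $F_n \subseteq E$ implies $E^c \subseteq F_n^c$, monotonicity gives

$$\lambda^*(A) = \lambda^*(A \cap F_n) + \lambda^*(A \cap F_n^c) \ge \lambda^*(A \cap F_n) + \lambda^*(A \cap E^c)$$

Since the $E_k$ are disjoint, $F_n \cap E_n = E_n$ and $F_n \cap E_n^c = F_{n-1}$, so using measurability of $E_n$ with the test set $A \cap F_n$:

$$\lambda^*(A \cap F_n) = \lambda^*(A \cap F_n \cap E_n) + \lambda^*(A \cap F_n \cap E_n^c) = \lambda^*(A \cap E_n) + \lambda^*(A \cap F_{n-1})$$

Let $F_0 = \emptyset$ and note that $\lambda^*(A \cap F_0) = 0$.

Thus, by induction

$$\lambda^*(A \cap F_n) = \sum_{k=1}^{n} \lambda^*(A \cap E_k)$$

Thus,

$$\lambda^*(A) \ge \sum_{k=1}^{n} \lambda^*(A \cap E_k) + \lambda^*(A \cap E^c)$$

Taking $n \to \infty$ and using countable subadditivity:

$$\lambda^*(A) \ge \sum_{k=1}^{\infty} \lambda^*(A \cap E_k) + \lambda^*(A \cap E^c) \ge \lambda^*(A \cap E) + \lambda^*(A \cap E^c)$$

Thus, $E$ is L. measurable if the $E_k$ are disjoint and L. measurable!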

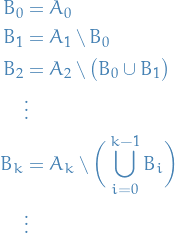

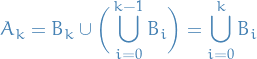

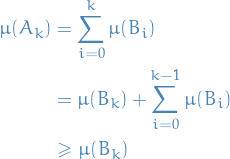

- Countable (not-necessarily-disjoint) case:

If the $E_k$ are not disjoint, let $G_1 = E_1$ and let $G_k = E_k \setminus \bigcup_{j=1}^{k-1} E_j$ for $k \ge 2$, which gives a sequence of disjoint L. measurable sets with $\bigcup_k G_k = \bigcup_k E_k$, hence the above proof applies.

Every open interval is Lebesgue measurable, and the Borel sigma-algebra is a subset of the sigma-algebra of Lebesgue measurable sets.

Want to prove measurability of intervals of the form $(a, \infty)$.

Idea:

- split any set $A$ into the left and right part $A \cap (-\infty, a]$ and $A \cap (a, \infty)$
- split any cover in the same way
- extend covers by $\varepsilon / 2^k$ to make them open

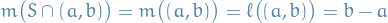

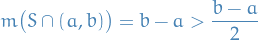

$(\mathbb{R}, \mathcal{M}, \lambda)$ is a measure space, and for all intervals $I$, the measure is the length: $\lambda(I) = \ell(I)$.

Cantor set

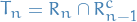

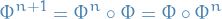

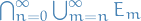

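Define the map $T$ on subsets $A \subseteq \mathbb{R}$ by

$$T(A) = \tfrac{1}{3} A \cup \big( \tfrac{2}{3} + \tfrac{1}{3} A \big)$$

For $n \in \mathbb{N}$, let $T^n$ denote the $n$-fold composition, with $T^0$ being the identity, and $T^n = T \circ T^{n-1}$.

Let ![$E_0 = [0, 1]$](../../assets/latex/measure_theory_2841c9b582f2d0607515b04b91c33a1a58903197.png) and $E_n = T^n(E_0)$. Then the Cantor set is defined

$$C = \bigcap_{n=0}^{\infty} E_n$$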

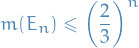

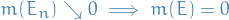

The Cantor set has Lebesgue measure zero.

We make the following observations:

- Scaled and shifted closed sets are closed
- $E_n$ is a finite union of closed intervals and so is in the Borel sigma-algebra
- σ-algebras are closed under countable intersections, hence the Cantor set is in the Borel σ-algebra
- Finally, the Borel σ-algebra is a subset of the Lebesgue measurable sets, hence the Cantor set is Lebesgue measurable!

Lebesgue measure satisfies $\lambda(cE + x) = c \, \lambda(E)$ for any Lebesgue measurable set $E$ with finite measure and any $c, x \in \mathbb{R}$ with $c > 0$. Since Lebesgue measure is also subadditive, we have for any measurable $A$ with finite measure

$$\lambda\big( T(A) \big) \le \tfrac{1}{3} \lambda(A) + \tfrac{1}{3} \lambda(A) = \tfrac{2}{3} \lambda(A)$$

Since $\lambda(E_0) = 1$, by induction, it follows that

$$\lambda(E_n) \le \left( \tfrac{2}{3} \right)^n$$

Since $C \subseteq E_n$ for every $n$, taking the infimum over $n$, we have that the Cantor set has measure zero:

$$\lambda(C) \le \inf_{n} \left( \tfrac{2}{3} \right)^n = 0$$
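The construction is easy to carry out exactly with rational arithmetic. A sketch in which the helper names `T` and `E` mirror the notation above: since the two scaled copies in $T(A)$ are disjoint here, the bound $\lambda(T(A)) \le \tfrac23 \lambda(A)$ holds with equality, and $E_n$ consists of $2^n$ intervals of total length $(2/3)^n$.

```python
from fractions import Fraction

THIRD = Fraction(1, 3)


def T(intervals):
    """Apply T(A) = A/3 ∪ (2/3 + A/3) to a list of closed intervals (a, b)."""
    left = [(a * THIRD, b * THIRD) for a, b in intervals]
    right = [(2 * THIRD + a * THIRD, 2 * THIRD + b * THIRD) for a, b in intervals]
    return left + right


def E(n):
    """E_n = T^n([0, 1]) as a list of disjoint closed intervals."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(n):
        intervals = T(intervals)
    return intervals


for n in range(5):
    length = sum(b - a for a, b in E(n))
    print(n, len(E(n)), length)  # 2**n intervals, total length (2/3)**n
```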

Cardinality of the Cantor set

Let ![$x \in [0, 1]$](../../assets/latex/measure_theory_1abcf6a6ba0996351c652ec55b2a137f25774cfc.png).

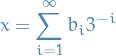

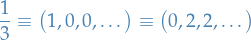

The ternary expansion of $x$ is a sequence $(a_k)_{k \in \mathbb{N}}$ with $a_k \in \{0, 1, 2\}$ such that

$$x = \sum_{k=1}^{\infty} a_k 3^{-k}$$

The Cantor set $C$ is uncountable.

We observe that if the first $n$ elements of the expansion for $x$ are in $\{0, 2\}$, then $x \in E_n$. But importantly, observe that some numbers have more than one ternary expansion, e.g.

$$\tfrac{1}{3} = 0.1000\ldots_3 = 0.0222\ldots_3$$

so a number may have a 1 digit in one ternary expansion but not in another. One can show that a number $x \in C$ if and only if $x$ has a ternary expansion with no 1 digits. The map $(a_k)_k \mapsto \sum_k a_k 3^{-k}$ is injective on sequences with $a_k \in \{0, 2\}$, and there are uncountably many such sequences. Hence, the Cantor set $C$ is uncountable!

One can see that $x \notin C$ if and only if every ternary expansion of $x$ contains a 1 digit, since such an $x$ would land in one of the "gaps" (the removed open middle thirds) created by the construction of the Cantor set.
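Both observations can be checked with exact fractions. In this sketch (hypothetical helpers), `ternary_digits` produces the greedy expansion of $x \in [0, 1)$, and `in_E_n` tests $x \in E_n$ by repeatedly zooming into whichever outer third contains $x$: $\tfrac14 = 0.0202\ldots_3$ stays in the Cantor set, $\tfrac12 = 0.111\ldots_3$ falls into the first gap, and $\tfrac13$ has a greedy expansion with a 1 digit yet lies in $C$ because of its other expansion $0.0222\ldots_3$.

```python
import math
from fractions import Fraction

ONE_THIRD, TWO_THIRDS = Fraction(1, 3), Fraction(2, 3)


def ternary_digits(x, n):
    """First n digits of the greedy ternary expansion of x in [0, 1)."""
    digits = []
    for _ in range(n):
        x *= 3
        d = math.floor(x)
        digits.append(d)
        x -= d
    return digits


def in_E_n(x, n):
    """True iff x in [0, 1] lies in E_n, the n-th stage of the Cantor construction."""
    for _ in range(n):
        if x <= ONE_THIRD:
            x = 3 * x      # zoom into the left third
        elif x >= TWO_THIRDS:
            x = 3 * x - 2  # zoom into the right third
        else:
            return False   # x lies in a removed open middle third
    return True


print(ternary_digits(Fraction(1, 4), 6))  # [0, 2, 0, 2, 0, 2]
print(ternary_digits(Fraction(1, 2), 4))  # [1, 1, 1, 1]
print(ternary_digits(Fraction(1, 3), 4))  # [1, 0, 0, 0]
print(in_E_n(Fraction(1, 4), 40), in_E_n(Fraction(1, 3), 40), in_E_n(Fraction(1, 2), 1))
# True True False
```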

Uncountable Lebesgue measurable set

There exist uncountable Lebesgue measurable sets, e.g. the Cantor set.

Menger sponge

- Generalization of the Cantor set to $\mathbb{R}^3$

Vitali sets

Let $x \sim y$ if and only if $x - y \in \mathbb{Q}$.

- There are uncountably many equivalence classes, with each equivalence class being countable (as a set).

- By axiom of choice, we can pick one element from each equivalence class.

- Can assume each representative picked is in ![$[0, 1]$](../../assets/latex/measure_theory_68c8fa38d960e53d4308cbf1e65d04c66a554817.png), and this set we denote $R$

Suppose, for the sake of contradiction, that $R$ is measurable.

Observe if ![$x \in [0, 1]$](../../assets/latex/measure_theory_1abcf6a6ba0996351c652ec55b2a137f25774cfc.png), then there is an $r \in R$ and a $q \in \mathbb{Q}$ s.t. $x = r + q$, and since $r \in [0, 1]$ we have ![$q \in [-1, 1]$](../../assets/latex/measure_theory_e2a141e95f622cba27e76a7586a7befe39020eda.png), i.e.

![\begin{equation*}

[0, 1] \subseteq \bigcup_{q \in [-1, 1] \cap \mathbb{Q}}^{} \Big( R + q \Big) \subseteq [-1, 2]

\end{equation*}](../../assets/latex/measure_theory_f79853c87366563f923b51372436ae537e0d78ed.png)

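The translates $R + q$ for distinct $q \in \mathbb{Q}$ are pairwise disjoint (since $R$ contains exactly one representative of each equivalence class), so by monotonicity, countable additivity and translation invariance of Lebesgue measure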

![\begin{equation*}

\begin{split}

m([0, 1]) &\le m \bigg( \bigcup_{q \in [-1, 1] \cap \mathbb{Q}}^{} R + q \bigg) \le m \big( [-1, 2] \big) = 3 \\

m([0, 1]) &\le \sum_{q \in [-1, 1] \cap \mathbb{Q}}^{} m (R + q) \le 3 \\

m([0, 1]) &\le \sum_{q \in [-1, 1] \cap \mathbb{Q}}^{} m (R) \le 3

\end{split}

\end{equation*}](../../assets/latex/measure_theory_10cc4bf4c4028ae61e6bec3e9a6df729d34e627e.png)

where we've used

![\begin{equation*}

m \bigg( \bigcup_{q \in [-1, 1] \cap \mathbb{Q}}^{} R + q \bigg) = \sum_{q \in [-1, 1] \cap \mathbb{Q}}^{} m (R + q) = \sum_{q \in [-1, 1] \cap \mathbb{Q}}^{} m (R)

\end{equation*}](../../assets/latex/measure_theory_4c878026d358487ad76ea895c4e45d05d969e74a.png)

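The last line is impossible: if $m(R) = 0$ the sum is $0 < 1 = m([0, 1])$, and if $m(R) > 0$ the sum is $\infty > 3$. Hence, we have our contradiction and so this set, the Vitali set, is not measurable!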

There exists a subset of $\mathbb{R}$ that is not measurable wrt. Lebesgue measure.

Lebesgue Integral

The Lebesgue integral of a function $f$ over a measure space $(X, \Sigma, \mu)$ is written

$$\int_X f \, d\mu$$

which means we're taking the integral wrt. the measure $\mu$.

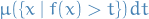

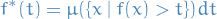

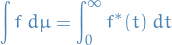

Special case: non-negative real-valued function

Suppose that $f: X \to \mathbb{R}$ is a non-negative real-valued function.

Using the "partitioning of the range of $f$" philosophy, the integral of $f$ should be the sum over $t$ of the elementary area contained in the thin horizontal strip between $y = t$ and $y = t + dt$, which is just

$$\mu\big( \{ x : f(x) > t \} \big) \, dt$$

Letting

$$f^*(t) = \mu\big( \{ x : f(x) > t \} \big)$$

The Lebesgue integral of $f$ is then defined by

$$\int f \, d\mu = \int_0^{\infty} f^*(t) \, dt$$

where the integral on the right is an ordinary improper Riemann integral. For the set of measurable functions, this defines the Lebesgue integral.
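For a measure given by finitely many point masses, both sides of the definition can be computed exactly, because $t \mapsto \mu(\{f > t\})$ is then a step function. A minimal sketch with illustrative data (the names and values are ours, not from the notes):

```python
from fractions import Fraction


def integral_direct(f, mu):
    """Sum of f(x) * mu({x}) over the points x."""
    return sum((f[x] * mu[x] for x in mu), Fraction(0))


def integral_layer_cake(f, mu):
    """Integral over t in [0, inf) of mu({f > t}) for non-negative f, using that it is a step function."""
    levels = sorted({0} | {f[x] for x in mu})
    total = Fraction(0)
    for lo, hi in zip(levels, levels[1:]):
        # for every t in [lo, hi) we have {f > t} = {f >= hi}
        mass = sum((mu[x] for x in mu if f[x] >= hi), Fraction(0))
        total += (hi - lo) * mass
    return total


mu = {"a": Fraction(1, 2), "b": Fraction(1, 4), "c": Fraction(1, 4)}
f = {"a": 3, "b": 1, "c": 0}
print(integral_direct(f, mu), integral_layer_cake(f, mu))  # 7/4 7/4
```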

Radon measure

- Hard to find a good notion of measure on a topological space that is compatible with the topology in some sense

- One way is to define a measure on the Borel sets of the topological space

Let $\mu$ be a measure on the sigma-algebra of Borel sets of a Hausdorff topological space $X$.

$\mu$ is called inner regular or tight if, for any Borel set $B$, $\mu(B)$ is the supremum of $\mu(K)$ over all compact subsets $K$ of $B$, i.e.

$$\mu(B) = \sup \{ \mu(K) : K \subseteq B, \ K \text{ compact} \}$$

$\mu$ is called outer regular if, for any Borel set $B$, $\mu(B)$ is the infimum of $\mu(U)$ over all open sets $U$ containing $B$, i.e.

$$\mu(B) = \inf \{ \mu(U) : U \supseteq B, \ U \text{ open} \}$$

$\mu$ is called locally finite if every point of $X$ has a neighborhood $U$ for which $\mu(U)$ is finite (if $\mu$ is locally finite, then it follows that $\mu$ is finite on compact sets).

The measure $\mu$ is called a Radon measure if it is inner regular and locally finite.

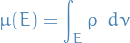

Suppose $\mu$ and $\nu$ are two σ-finite measures on a measurable space $(X, \Sigma)$ and $\nu$ is absolutely continuous wrt. $\mu$.

Then there exists a non-negative, measurable function $f$ on $X$ such that

$$\nu(A) = \int_A f \, d\mu \quad \text{for all } A \in \Sigma$$

The function $f$ is called the density or Radon-Nikodym derivative of $\nu$ wrt. $\mu$.

If a Radon-Nikodym derivative of $\nu$ wrt. $\mu$ exists, then $\frac{d\nu}{d\mu}$ denotes the equivalence class of measurable functions that are Radon-Nikodym derivatives of $\nu$ wrt. $\mu$.

$\frac{d\nu}{d\mu} = f$ is often used to denote $f \in \frac{d\nu}{d\mu}$, i.e. $f$ is just some element of the equivalence class of measurable functions for which this is the case.

This comes from the fact that we have

$$\nu(A) = \int_A d\nu = \int_A \frac{d\nu}{d\mu} \, d\mu$$

Suppose $f$ is a Radon-Nikodym derivative of $\nu$ wrt. $\mu$. Then a measurable function $g$ is also a Radon-Nikodym derivative of $\nu$ wrt. $\mu$ iff $f = g$ $\mu$-almost everywhere.

The δ-measure $\delta_0$ cannot have a Radon-Nikodym derivative wrt. Lebesgue measure $\lambda$: $\delta_0(\{0\}) = 1$, but integrating $\int_{\{0\}} f \, d\lambda$ gives us zero for all measurable functions $f$, since $\lambda(\{0\}) = 0$.

Continuity of measure

Suppose $\mu$ and $\nu$ are two sigma-finite measures on a measurable space $(X, \Sigma)$.

Then we say that $\nu$ is absolutely continuous wrt. $\mu$, written $\nu \ll \mu$, if

$$\mu(A) = 0 \implies \nu(A) = 0 \quad \text{for all } A \in \Sigma$$

We say that $\mu$ and $\nu$ are equivalent if each measure is absolutely continuous wrt. the other.

Density

Suppose $\mu$ and $\nu$ are two sigma-finite measures on a measurable space $(X, \Sigma)$ and that $\nu$ is absolutely continuous wrt. $\mu$. Then there exists a non-negative, measurable function $f$ on $X$ such that

$$\nu(A) = \int_A f \, d\mu \quad \text{for all } A \in \Sigma$$
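On a finite set with all subsets measurable, absolute continuity can be checked over all null sets and the density is just a ratio of point masses. A sketch under that assumption (helper names are hypothetical); the value of $f$ on $\mu$-null points is arbitrary, which is the "unique only $\mu$-almost everywhere" phenomenon in miniature.

```python
from fractions import Fraction
from itertools import combinations


def subsets(points):
    points = list(points)
    return [set(c) for r in range(len(points) + 1) for c in combinations(points, r)]


def measure(masses, A):
    return sum((masses[x] for x in A), Fraction(0))


def absolutely_continuous(nu, mu):
    """nu << mu: every mu-null set is also nu-null."""
    return all(measure(nu, A) == 0 for A in subsets(mu) if measure(mu, A) == 0)


def density(nu, mu):
    """A version of d(nu)/d(mu); its value on mu-null points is arbitrary (here 0)."""
    return {x: (nu[x] / mu[x] if mu[x] != 0 else Fraction(0)) for x in mu}


mu = {"a": Fraction(1), "b": Fraction(2), "c": Fraction(0)}
nu = {"a": Fraction(3), "b": Fraction(1), "c": Fraction(0)}
f = density(nu, mu)

# nu(A) equals the integral of f over A wrt. mu, i.e. the sum of f(x) * mu({x}) over x in A
ok = all(measure(nu, A) == sum((f[x] * mu[x] for x in A), Fraction(0)) for A in subsets(mu))
print(absolutely_continuous(nu, mu), ok)  # True True

nu2 = {"a": Fraction(1), "b": Fraction(1), "c": Fraction(1)}
print(absolutely_continuous(nu2, mu))  # False: {"c"} is mu-null but not nu2-null
```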

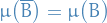

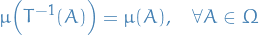

Measure-preserving transformation

$T: X \to X$ is a measure-preserving transformation on the measure space $(X, \Sigma, \mu)$ if $T$ is measurable and

$$\mu\big( T^{-1}(A) \big) = \mu(A) \quad \text{for all } A \in \Sigma$$
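A standard example (not from the original notes) is the doubling map $T(x) = 2x \bmod 1$ on $[0, 1)$ with Lebesgue measure: $T^{-1}([a, b)) = [a/2, b/2) \cup [(a+1)/2, (b+1)/2)$ has the same length as $[a, b)$. The sketch below checks this for a few intervals, and checks the preimage formula itself on a grid of rational points. Checking intervals is enough in principle, because they form a π-system generating the Borel σ-algebra.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def T(x):
    return (2 * x) % 1


def preimage(a, b):
    """T^{-1}([a, b)) for 0 <= a <= b <= 1, as two half-open intervals."""
    return [(a * HALF, b * HALF), ((a + 1) * HALF, (b + 1) * HALF)]


def length(intervals):
    return sum(hi - lo for lo, hi in intervals)


grid = [Fraction(k, 64) for k in range(64)]
for a, b in [(Fraction(0), Fraction(1, 3)), (Fraction(1, 4), Fraction(7, 8))]:
    pre = preimage(a, b)
    # the formula is right: x is in the preimage iff T(x) is in [a, b)
    assert all((a <= T(x) < b) == any(lo <= x < hi for lo, hi in pre) for x in grid)
    print(length(pre) == b - a)  # True
```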

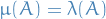

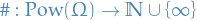

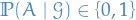

Measure

A measure on a set is a systematic way of assigning a number to each suitable subset of that set, intuitively interpreted as its size.

In this sense, a measure is a generalization of the concepts of length, area, volume, etc.

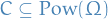

Formally, let $\mathcal{A}$ be a σ-algebra of subsets of $X$.

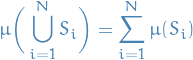

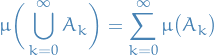

Suppose ![$\mu: \mathcal{A} \to [0, \infty]$](../../assets/latex/measure_theory_beef385f676dedc4e7697f3bc64effffd9d7d3aa.png) is a function. Then $\mu$ is a measure if

Whenever $A_1, A_2, \dots$ are pairwise disjoint subsets of $X$ in $\mathcal{A}$, then

$$\mu\Big( \bigcup_{n=1}^{\infty} A_n \Big) = \sum_{n=1}^{\infty} \mu(A_n)$$

- Called σ-additivity or countable additivity

Properties

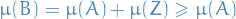

Let $(X, \mathcal{A}, \mu)$ be a measure space, and $A, B \in \mathcal{A}$ such that $A \subseteq B$.

Then $\mu(A) \le \mu(B)$.

Let $C = B \setminus A \in \mathcal{A}$.

Then $B = A \cup C$ with $A \cap C = \emptyset$, and by finite additivity property of a measure:

$$\mu(B) = \mu(A) + \mu(C) \ge \mu(A)$$

since $\mu(C) \ge 0$ by definition of a measure.

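If $A_1, A_2, \dots$ are $\mathcal{A}$-measurable subsets of $X$, then

$$\mu\Big( \bigcup_{n=1}^{\infty} A_n \Big) \le \sum_{n=1}^{\infty} \mu(A_n)$$

We know for a sequence of disjoint sets $(B_n)_{n \in \mathbb{N}}$ we have

$$\mu\Big( \bigcup_{n=1}^{\infty} B_n \Big) = \sum_{n=1}^{\infty} \mu(B_n)$$

So we just let

$$B_1 = A_1, \qquad B_n = A_n \setminus \bigcup_{k=1}^{n-1} A_k \quad (n \ge 2)$$

Then, the $B_n$ are disjoint and measurable, $B_n \subseteq A_n$, and $\bigcup_n B_n = \bigcup_n A_n$.

Thus, by monotonicity,

$$\mu\Big( \bigcup_{n=1}^{\infty} A_n \Big) = \sum_{n=1}^{\infty} \mu(B_n) \le \sum_{n=1}^{\infty} \mu(A_n)$$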

Concluding our proof!
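The disjointification step $B_n = A_n \setminus \bigcup_{k<n} A_k$ used in the proof, sketched with Python sets and the counting measure (an illustrative choice; `disjointify` is a hypothetical name):

```python
def disjointify(sets):
    """B_n = A_n minus (A_1 ∪ ... ∪ A_{n-1})."""
    seen = set()
    out = []
    for A in sets:
        out.append(A - seen)
        seen |= A
    return out


A = [{1, 2, 3}, {2, 3, 4}, {4, 5}, {1, 5, 6}]
B = disjointify(A)
print(B)  # [{1, 2, 3}, {4}, {5}, {6}]

union_A = set().union(*A)
assert set().union(*B) == union_A
assert all(B[i].isdisjoint(B[j]) for i in range(len(B)) for j in range(i + 1, len(B)))
# counting measure: |∪ A_n| = sum |B_n| <= sum |A_n|
print(len(union_A), sum(map(len, B)), sum(map(len, A)))  # 6 6 11
```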

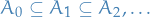

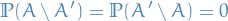

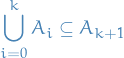

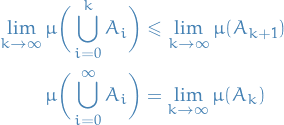

Let $(A_n)_{n \in \mathbb{N}}$ be an increasing sequence of measurable sets, i.e. $A_1 \subseteq A_2 \subseteq \cdots$.

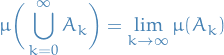

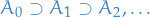

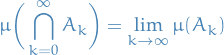

Then

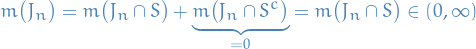

Let  be sets from some

be sets from some

.

.

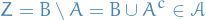

If  , then

, then

Examples of measures

Let $X$ be a space

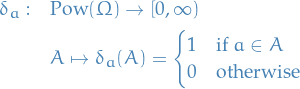

The δ-measure (at $x \in X$) is

$$\delta_x(A) = \begin{cases} 1 & x \in A \\ 0 & x \notin A \end{cases}$$
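Both the δ-measure and the counting measure are additive on disjoint sets, which can be brute-forced on a small finite space. A sketch (helper names are ours); note there are $3^4 = 81$ ordered pairs of disjoint subsets of a 4-element set, since each element goes to $A$, to $B$, or to neither.

```python
from itertools import combinations


def subsets(points):
    points = list(points)
    return [frozenset(c) for r in range(len(points) + 1) for c in combinations(points, r)]


def dirac(x):
    """The δ-measure at x: 1 if x is in A, else 0."""
    return lambda A: 1 if x in A else 0


def counting(A):
    return len(A)  # finite sets only in this sketch


X = {1, 2, 3, 4}
pairs = [(A, B) for A in subsets(X) for B in subsets(X) if A.isdisjoint(B)]
for m in (dirac(3), counting):
    assert m(frozenset()) == 0
    assert all(m(A | B) == m(A) + m(B) for A, B in pairs)
print(len(pairs))  # 81
```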

Sigma-algebra

Definition

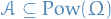

Let $X$ be some set, and let $\mathcal{P}(X)$ be its power set. Then the subset $\Sigma \subseteq \mathcal{P}(X)$ is called a σ-algebra on $X$ if it satisfies the following three properties:

- $X \in \Sigma$
- $\Sigma$ is closed under complement: if $A \in \Sigma$, then $X \setminus A \in \Sigma$
- $\Sigma$ is closed under countable unions: if $A_1, A_2, \dots \in \Sigma$, then $\bigcup_{n=1}^{\infty} A_n \in \Sigma$

These properties also imply the following:

- $\Sigma$ is closed under countable intersections: if $A_1, A_2, \dots \in \Sigma$, then $\bigcap_{n=1}^{\infty} A_n = X \setminus \bigcup_{n=1}^{\infty} (X \setminus A_n) \in \Sigma$

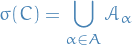

Generated σ-algebras

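Given a space $X$ and a collection of subsets $\mathcal{C} \subseteq \mathcal{P}(X)$, the σ-algebra generated by $\mathcal{C}$, denoted $\sigma(\mathcal{C})$, is defined to be the intersection of all σ-algebras on $X$ that contain $\mathcal{C}$, i.e.

$$\sigma(\mathcal{C}) = \bigcap_{\mathcal{A} \in I} \mathcal{A}$$

where

$$I = \{ \mathcal{A} \subseteq \mathcal{P}(X) : \mathcal{A} \text{ is a σ-algebra on } X \text{ and } \mathcal{C} \subseteq \mathcal{A} \}$$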
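On a finite set the intersection in the definition need not be computed directly: closing $\mathcal{C}$ under complements and pairwise unions until nothing changes yields the same smallest σ-algebra, since countable unions reduce to finite ones. A minimal sketch under that finiteness assumption (`generated_sigma_algebra` is a hypothetical name):

```python
def generated_sigma_algebra(X, C):
    """sigma(C) on a finite set X, by closing under complements and unions."""
    X = frozenset(X)
    sigma = {frozenset(), X} | {frozenset(S) for S in C}
    while True:
        new = {X - S for S in sigma} | {S | T for S in sigma for T in sigma}
        if new <= sigma:
            return sigma
        sigma |= new


X = {1, 2, 3, 4}
print(len(generated_sigma_algebra(X, [{1}])))       # 4: {}, {1}, {2, 3, 4}, X
print(len(generated_sigma_algebra(X, [{1}, {2}])))  # 8: generated by the atoms {1}, {2}, {3, 4}
```

Every set added is forced by the σ-algebra axioms, so the result is contained in every σ-algebra containing $\mathcal{C}$; and once the loop stops, the result is itself a σ-algebra.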

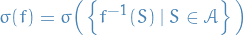

Let $(Y, \mathcal{B})$ be a measurable space and $f$ a function from some space $X$ to $Y$.

The σ-algebra generated by $f$ is

$$\sigma(f) = \{ f^{-1}(B) : B \in \mathcal{B} \}$$

Observe that though this is similar to the σ-algebra generated by a MEASURABLE function, the definition differs in the sense that the preimage does not have to be measurable (there need not be any σ-algebra on $X$). In particular, the σ-algebra generated by a measurable function can be defined as above, where $f^{-1}(B)$ is measurable by definition of $f$ being a measurable function, hence corresponding exactly to the other definition.
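A small finite example of $\sigma(f) = \{ f^{-1}(B) : B \in \mathcal{B} \}$, assuming the power set of $Y$ as its σ-algebra (the map `f` is an illustrative choice): points that $f$ does not distinguish can never be separated by sets in $\sigma(f)$.

```python
from itertools import combinations


def preimage_sigma_algebra(f, X, Y):
    """sigma(f) = {f^{-1}(B) : B subset of Y}, with the power set of Y as its sigma-algebra."""
    Y = list(Y)
    all_B = [set(c) for r in range(len(Y) + 1) for c in combinations(Y, r)]
    return {frozenset(x for x in X if f(x) in B) for B in all_B}


X = {1, 2, 3, 4}
Y = {"a", "b", "c"}  # "c" is never hit, so the preimage of {"c"} is empty
f = {1: "a", 2: "a", 3: "b", 4: "b"}.get

sigma_f = preimage_sigma_algebra(f, X, Y)
print(sorted(map(sorted, sigma_f)))  # [[], [1, 2], [1, 2, 3, 4], [3, 4]]
```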

Let $(X, \mathcal{A})$ and $(Y, \mathcal{B})$ be measurable spaces and $f: X \to Y$ a measurable function.

The σ-algebra generated by $f$ is

$$\sigma(f) = \{ f^{-1}(B) : B \in \mathcal{B} \} \subseteq \mathcal{A}$$

Let $X$ be a space.

If $\{ \mathcal{A}_i \}_{i \in I}$ is a collection of σ-algebras on $X$, then $\bigcap_{i \in I} \mathcal{A}_i$ is also a σ-algebra.

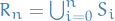

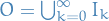

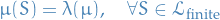

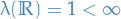

σ-finite

A measure or premeasure space $(X, \mathcal{A}, \mu)$ is finite if $\mu(X) < \infty$.

A measure $\mu$ on a measure space $(X, \mathcal{A}, \mu)$ is said to be sigma-finite if $X$ can be written as a countable union of measurable sets of finite measure.

Example: counting measure on uncountable set is not σ-finite

Let $X$ be a space.

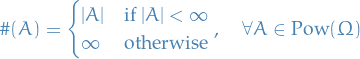

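The counting measure is defined to be $\mu: \mathcal{P}(X) \to [0, \infty]$ such that

$$\mu(A) = \begin{cases} |A| & A \text{ finite} \\ \infty & A \text{ infinite} \end{cases}$$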

On any uncountable set, the counting measure is not σ-finite, since if a set has finite counting measure it has finitely many elements, and a countable union of finite sets is countable.

Properties

Let  be a

be a  of subsets of a set

of subsets of a set  . Then

. Then

If

, then

, then

- If

then

then

Borel sigma-algebra

Any set in a topological space that can be formed from the open sets through the operations of:

- countable union

- countable intersection

- complement

is called a Borel set.

Thus, for some topological space $X$, the collection of all Borel sets on $X$ forms a σ-algebra, called the Borel algebra or Borel σ-algebra.

More compactly, the Borel σ-algebra on $\mathbb{R}$ is

$\mathcal{B} = \sigma(\mathcal{O})$

where $\sigma(\mathcal{O})$ is the σ-algebra generated by the standard topology $\mathcal{O}$ on $\mathbb{R}$.

Borel sets are important in measure theory, since any measure defined on the open sets of a space, or on the closed sets of a space, must also be defined on all Borel sets of that space.

Any measure defined on the Borel sets is called a Borel measure.

Lebesgue sigma-algebra

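The Lebesgue sigma-algebra is the completion of the Borel sigma-algebra with respect to Lebesgue measure: it contains every Borel set together with every subset of a Borel set of measure zero, so Lebesgue measure on it is a complete measure.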

Note to self

Suppose we have a Lebesgue measure on the real line, with measure space $(\mathbb{R}, \mathcal{B}, \lambda)$.

Suppose that $A$ is a non-measurable subset of the real line, such as the Vitali set. Then the $\lambda^2$ measure of $\{0\} \times A$ is not defined, but

$\{0\} \times A \subseteq \{0\} \times \mathbb{R}$

and this larger set ($\{0\} \times \mathbb{R}$) does have $\lambda^2$ measure zero, i.e. it's not complete!

Motivation

Suppose we have constructed Lebesgue measure on the real line: denote this measure space by $(\mathbb{R}, \mathcal{B}, \lambda)$. We now wish to construct some two-dimensional Lebesgue measure $\lambda^2$ on the plane $\mathbb{R}^2$ as a product measure.

Naïvely, we could take the sigma-algebra on $\mathbb{R}^2$ to be $\mathcal{B} \otimes \mathcal{B}$, the smallest sigma-algebra containing all measurable "rectangles" $A_1 \times A_2$ for $A_1, A_2 \in \mathcal{B}$.

While this approach does define a measure space, it has a flaw: since every singleton set has one-dimensional Lebesgue measure zero,

$\lambda^2(\{0\} \times A) = \lambda(\{0\}) \cdot \lambda(A) = 0$ (with the convention $0 \cdot \infty = 0$)

for any measurable subset $A$ of $\mathbb{R}$.

What follows is the important part!

However, suppose that $A$ is a non-measurable subset of the real line, such as the Vitali set. Then the $\lambda^2$ measure of $\{0\} \times A$ is not defined (since we just supposed that $A$ is non-measurable), but

$\{0\} \times A \subseteq \{0\} \times \mathbb{R}$

and this larger set ($\{0\} \times \mathbb{R}$) does have $\lambda^2$ measure zero, i.e. it's not complete!

Construction

Given a (possibly incomplete) measure space $(X, \Sigma, \mu)$, there is an extension $(X, \Sigma_0, \mu_0)$ of this measure space that is complete.

The smallest such extension (i.e. the smallest sigma-algebra $\Sigma_0$) is called the completion of the measure space.

It can be constructed as follows:

- Let $Z$ be the set of all subsets of $\mu$-measure zero subsets of $X$ (intuitively, those elements of $Z$ that are not already in $\Sigma$ are the ones preventing completeness from holding true)
- Let $\Sigma_0$ be the sigma-algebra generated by $\Sigma$ and $Z$ (i.e. the smallest sigma-algebra that contains every element of $\Sigma$ and of $Z$)
- $\mu$ has an extension to $\Sigma_0$ (which is unique if $\mu$ is sigma-finite), called the outer measure of $\mu$, given by the infimum

$\mu_0(C) = \inf \{ \mu(D) : C \subseteq D \in \Sigma \}$

Then $(X, \Sigma_0, \mu_0)$ is a complete measure space, and is the completion of $(X, \Sigma, \mu)$.

What we're saying here is:

- For the "multi-dimensional" case we need to take into account the measure-zero sets in the resulting sigma-algebra that arise from the product between a 1D measure-zero set and some set NOT in our original sigma-algebra
- The above point means that we do NOT necessarily get completeness, even though each factor sigma-algebra is complete before we take the Cartesian product
- To "fix" this, we construct an outer measure $\mu_0$ on the sigma-algebra $\Sigma_0$, where we have included all those measure-zero sets which are "missed" by the naïve approach.

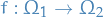

Measurable functions

Let  and

and  be measurable spaces.

be measurable spaces.

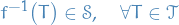

A function  is a measurable function if

is a measurable function if

where  denotes the preimage of the

denotes the preimage of the  for the measurable set

for the measurable set  .

.
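To make the definition concrete, here is a minimal sketch on finite spaces (an assumption: finite sets, σ-algebras given as explicit lists of subsets). It checks measurability by computing preimages, and also computes the σ-algebra generated by a function, $\sigma(f) = \{ f^{-1}(A) : A \in \mathcal{A}_2 \}$. The helper names `preimage`, `is_measurable` and `generated_sigma_algebra` are hypothetical, chosen for this illustration.

```python
def preimage(f, X1, B):
    """f^{-1}(B) = {x in X1 : f(x) in B}."""
    return frozenset(x for x in X1 if f(x) in B)

def is_measurable(f, X1, A1, A2):
    """f : (X1, A1) -> (X2, A2) is measurable iff f^{-1}(B) is in A1 for every B in A2."""
    A1 = {frozenset(A) for A in A1}
    return all(preimage(f, X1, B) in A1 for B in A2)

def generated_sigma_algebra(f, X1, A2):
    """σ(f) = {f^{-1}(B) : B in A2}, the smallest σ-algebra on X1 making f measurable."""
    return {preimage(f, X1, B) for B in A2}

X1 = {1, 2, 3, 4}
A1 = [set(), {1, 2}, {3, 4}, X1]
A2 = [frozenset(), frozenset("a"), frozenset("b"), frozenset("ab")]  # power set of {"a", "b"}

f = {1: "a", 2: "a", 3: "b", 4: "b"}.get  # constant on the atoms {1, 2} and {3, 4}
g = {1: "a", 2: "b", 3: "b", 4: "b"}.get  # separates 1 from 2

print(is_measurable(f, X1, A1, A2))  # True
print(is_measurable(g, X1, A1, A2))  # False: g^{-1}({"a"}) = {1} is not in A1
```

Note that $\sigma(g) = \{ \emptyset, \{1\}, \{2, 3, 4\}, X_1 \}$ here: $g$ is measurable with respect to its own generated σ-algebra, just not with respect to $\mathcal{A}_1$.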

Let  .

.

We define the indicator function of  to be the function

to be the function  given by

given by

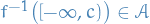

Let  . Then

. Then  is measurable if and only if

is measurable if and only if  .

.

Let $(X, \mathcal{A}, \mu)$ be a measure space or a probability space.

Let $\{ f_n \}$ be a sequence of measurable functions.

- For each $m \in \mathbb{N}$, the function $\inf_{n \ge m} f_n$ is measurable
- The function $\liminf_{n \to \infty} f_n$ is measurable
- Thus, if the $f_n$ converge pointwise, $\lim_{n \to \infty} f_n$ is measurable.

Let $(X, \mathcal{A})$ be a measurable space, and let ![$f: X \to \big[ - \infty, \infty \big]$](../../assets/latex/measure_theory_66895ba3da05558127228a3c438c5e8a19adce3c.png).

The following statements are equivalent:

- $f$ is measurable.
- $\forall c \in [-\infty, \infty)$ we have ![$f^{-1} \big( (c, \infty] \big) \in \mathcal{A}$](../../assets/latex/measure_theory_a1403a63281df14e6f9406be7d355f985890dd71.png).
- $\forall c \in (-\infty, \infty]$ we have ![$f^{-1} \big( [c, \infty] \big) \in \mathcal{A}$](../../assets/latex/measure_theory_7f928ebba157d8b0e32bf8ad73e9fa2873ab57d7.png).
- ![$\forall c \in (- \infty, \infty]$](../../assets/latex/measure_theory_16961a674cf394a5edb55231552df5ef2387dc61.png) we have $f^{-1} \big( [-\infty, c) \big) \in \mathcal{A}$.
- $\forall c \in [-\infty, \infty)$ we have ![$f^{-1} \big( [-\infty, c] \big) \in \mathcal{A}$](../../assets/latex/measure_theory_279d8a31d560b94f35569ec9df587984d4a28e58.png).

A function $f: X \to [-\infty, \infty]$ is measurable if

![\begin{equation*}

\forall c \in [- \infty, \infty) : f^{-1} \Big( (c, \infty] \Big) \in \mathcal{A}

\end{equation*}](../../assets/latex/measure_theory_cf13df725bd4ef04ae4396b0f5bcc9e8fdddb4bf.png)

We also observe that, by the proposition on equivalent statements to being a measurable function, it's sufficient to prove

![\begin{equation*}

\forall c \in (-\infty, \infty) : f^{-1} \big( [c, \infty] \big) \in \mathcal{A}

\end{equation*}](../../assets/latex/measure_theory_8e5e1bcca38b5b97bece913a91079e5c8dbc7a7d.png)

so that's what we set out to do.

For $m \in \mathbb{N}$ and $c \in \mathbb{R}$, consider the following equivalent statements:

![\begin{equation*}

\begin{align*}

& & x &\in \bigg( \inf_{n \ge m} f_n \bigg)^{- 1} \Big( [c, \infty] \Big) \\

& & \inf_{n \ge m} f_n(x) &\in [c, \infty] \\

& & \inf_{n \ge m} f_n(x) &\ge c \\

&\forall n \ge m : & f_n(x) &\ge c \\

& \forall n \ge m : & x & \in f_n^{-1} \big( [c, \infty] \big) \\

& & x & \in \bigcap_{n \ge m}^{\infty} f_n^{-1} \big( [c, \infty] \big)

\end{align*}

\end{equation*}](../../assets/latex/measure_theory_94af009dc1af4db3187dfbf9a593271d7e6f84f7.png)

Thus,

![\begin{equation*}

\Big( \inf_{n \ge m} f_n \Big)^{-1} \big( [c, \infty] \big) = \bigcap_{n \ge m}^{} f_n^{-1} \big( [c, \infty] \big)

\end{equation*}](../../assets/latex/measure_theory_50a948d91ff66fee7b9175dd150be89b0439004f.png)

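Since each $f_n^{-1} \big( [c, \infty] \big) \in \mathcal{A}$ and $\mathcal{A}$ is closed under countable intersections,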

![\begin{equation*}

\big( \inf_{n \ge m} f_n \big)^{-1} \big( [c, \infty] \big) \in \mathcal{A}

\end{equation*}](../../assets/latex/measure_theory_e82c9dc08e0706905a550f6f6dcfba1d845ac5bb.png)

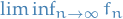

Recall that for each $x \in X$, the sequence $\big\{ \inf_{n \ge m} f_n(x) \big\}_{m \in \mathbb{N}}$ is an increasing sequence in $[-\infty, \infty]$. Therefore, similarly, the following are equivalent:

![\begin{equation*}

\begin{align}

& & x & \in \big( \liminf_{n \to \infty} f_n \big)^{- 1} \big( [c, \infty] \big) \\

& & \liminf_{n \to \infty} f_n(x) & \in [c, \infty] \\

& & \uparrow \lim_{m \to \infty} \inf_{n \ge m} f_n(x) &\ge c \\

& & \sup_m \inf_{n \ge m} f_n(x) &\ge c \\

& \forall N \in \mathbb{N} : \exists m \in \mathbb{N} & \inf_{n \ge m} f_n(x) &\ge c - \frac{1}{N} \\

& \forall N \in \mathbb{N} : & x &\in \bigcup_{m \in \mathbb{N}}^{} \bigg( \inf_{n \ge m} f_n \bigg)^{-1} \bigg( \bigg[ c - \frac{1}{N}, \infty \bigg] \bigg) \\

& & x & \in \bigcap_{N \in \mathbb{N}}^{} \bigcup_{m \in \mathbb{N}}^{} \bigg( \inf_{n \ge m} f_n \bigg)^{-1} \bigg( \bigg[ c - \frac{1}{N}, \infty \bigg] \bigg)

\end{align}

\end{equation*}](../../assets/latex/measure_theory_caecf37c6ca6765e01f08e6648c9e28ae668ffe0.png)

Thus,

![\begin{equation*}

\big( \liminf f_n \big)^{-1} \big( [c, \infty] \big) = \bigcap_{N \in \mathbb{N}}^{} \bigcup_{m \in \mathbb{N}}^{} \bigg( \inf_{n \ge m} f_n \bigg)^{-1} \bigg( \bigg[ c - \frac{1}{N}, \infty \bigg] \bigg)

\end{equation*}](../../assets/latex/measure_theory_c759a8999f8697bf52bd6d4e35f493d7ca24ec11.png)

Hence,

![\begin{equation*}

\big( \liminf f_n \big)^{-1} \big( [c, \infty] \big) \in \mathcal{A}

\end{equation*}](../../assets/latex/measure_theory_e6028aa99aabfafc829083191d1bf45609989c03.png)

concluding our proof!

This says essentially the same as the proposition on limits of measurable functions above, but is a bit more "concrete".

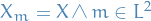

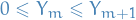

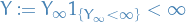

Let $\mathcal{A}$ be a σ-algebra of subsets of a set $X$, and let $f_n: X \to [-\infty, \infty]$ with $n \in \mathbb{N}$ be a sequence of measurable functions.

Furthermore, let

$f(x) = \lim_{n \to \infty} f_n(x) \quad \forall x \in X$

Then $f$ is a measurable function.

Simple functions

Let $\mathcal{A}$ be a σ-algebra of subsets of a set $X$.

A function $s: X \to \mathbb{R}$ is called a simple function if

- it is measurable
- it takes only a finite number of values

Let $\mathcal{A}$ be a σ-algebra of subsets of a set $X$.

Let ![$f : X \to [0, \infty]$](../../assets/latex/measure_theory_79e902dffac5e5c0b65197f8c0b14589c6664f65.png) be a nonnegative measurable function.

Then there exists a sequence $\{ s_n \}$ of simple functions such that

- $0 \le s_1(x) \le s_2(x) \le \dots \le f(x)$ for all $x \in X$
- $s_n$ converges to $f$: $\lim_{n \to \infty} s_n(x) = f(x)$ for all $x \in X$

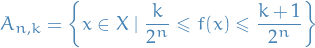

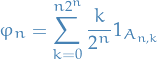

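Define a function $s_n$ as follows. Let

$E_{n, k} = f^{-1} \big( [k 2^{-n}, (k + 1) 2^{-n}) \big), \quad k = 0, 1, \dots, 4^n - 1$

and let

$F_n = f^{-1} \big( [2^n, \infty] \big)$

Then the function

$s_n = 2^n \chi_{F_n} + \sum_{k=0}^{4^n - 1} k 2^{-n} \chi_{E_{n, k}}$

obeys the required properties!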

Almost everywhere and almost surely

Let $(X, \mathcal{A}, \mu)$ be a measure or probability space.

Let $\{ f_n \}$ be a sequence of measurable functions

- For each $m \in \mathbb{N}$, the function $\inf_{n \ge m} f_n$ is measurable
- The function $\liminf_{n \to \infty} f_n$ is measurable
- Thus, if the $f_n$ converge pointwise, then $\lim_{n \to \infty} f_n$ is measurable

Let $(X, \mathcal{A}, \mu)$ be a measure space. Let $C(x)$ be a condition in one variable.

$C$ holds almost everywhere (a.e.) if there exists $N \in \mathcal{A}$ with $\mu(N) = 0$ such that $C(x)$ holds for all $x \in X \setminus N$.

Let $(\Omega, \mathcal{A}, P)$ be a probability space and $C(\omega)$ be a condition in one variable, then $C$ holds almost surely (a.s.) if

$P \big( \{ \omega \in \Omega : C(\omega) \text{ holds} \} \big) = 1$

also denoted $P(C) = 1$.

Let $(X, \mathcal{A}, \mu)$ be a complete measure space.

- If $f$ is measurable and if $g = f$ a.e. then $g$ is measurable.
- Being equal a.e. is an equivalence relation on measurable functions.

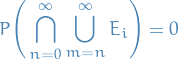

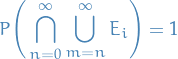

Convergence theorems for nonnegative functions

Problems

Clearly if  with  s.t.  , then

hence

Therefore it's sufficient to prove that if  , then there exists a non-degenerate open interval  s.t.  . (At first I said contained in  , but that is an unnecessarily strong statement; if contained then what we want would hold, but what we want does not imply containment.)

As we know, for every  there exists  such that  and

Which implies

which implies

Letting  , this implies that there exists an open cover  s.t.

and

(That this is true can be seen by considering  for all  and seeing that this would imply  not being a cover of  ; and if  , then since  there exists a "smaller" cover.)

Thus,

Hence, letting  be s.t.

we have

as wanted!

For every $n \in \mathbb{N}$, we have $f_n(x) > 0$ for almost every $x$ if and only if for almost every $x$, $f_n(x) > 0$ for all $n \in \mathbb{N}$.

This is equivalent to saying

![\begin{equation*}

m \Big( f_n^{-1} \big( (-\infty, 0] \big) \Big) = 0, \quad \forall n \in \mathbb{N}

\end{equation*}](../../assets/latex/measure_theory_67584d2b69d979eee0f59c423a7e6cfbc7c4b82a.png)

if and only if

![\begin{equation*}

m \bigg( \bigcup_{n = 0}^{\infty} f_n^{-1} \Big( (-\infty, 0] \Big) \bigg) = 0

\end{equation*}](../../assets/latex/measure_theory_4acf342f12fd15b4c6d3b130fe41fb4825810116.png)

i.e. $\bigcup_{n=0}^{\infty} f_n^{-1} \big( (-\infty, 0] \big)$ is a set of measure zero.

Suppose first that each $f_n^{-1} \big( (-\infty, 0] \big)$ has measure zero. Then, by countable subadditivity,

![\begin{equation*}

m \bigg( \bigcup_{n=0}^{\infty} f_n^{-1} \big( (- \infty, 0] \big) \bigg) \le \sum_{n=0}^{\infty} \underbrace{m \Big( f_n^{-1} \big( (- \infty, 0] \big) \Big)}_{= 0} = 0

\end{equation*}](../../assets/latex/measure_theory_b5c3b4a7db26f3d66f1400bc53d458741769e23b.png)

by the assumption.

Conversely, suppose the union has measure zero. Then, by monotonicity of the measure,

![\begin{equation*}

m \Big( f_n^{-1} \big( (- \infty, 0] \big) \Big) \le \underbrace{m \bigg( \bigcup_{k=0}^{\infty} f_k^{-1} \big( (- \infty, 0] \big) \bigg)}_{= 0} = 0, \quad \forall n \in \mathbb{N}

\end{equation*}](../../assets/latex/measure_theory_6b06dbd7651e8132421b8a66be9d25590a352936.png)

This concludes our proof.

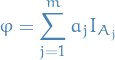

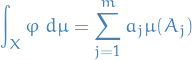

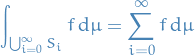

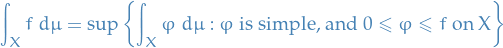

Integration

Notation

We let

where

Stuff

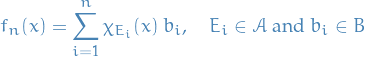

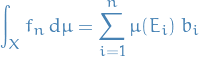

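Let

$s = \sum_{i=1}^{n} a_i \chi_{E_i}$

where $a_1, \dots, a_n$ are a set of positive values and $E_1, \dots, E_n$ are disjoint measurable sets.

Then the integral $\int_E s \dd{\mu}$ of $s$ over $E \in \mathcal{A}$ wrt. $\mu$ is given by

$\int_E s \dd{\mu} = \sum_{i=1}^{n} a_i \, \mu(E_i \cap E)$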
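The formula $\int_E s \dd{\mu} = \sum_i a_i \, \mu(E_i \cap E)$ for a simple function $s = \sum_i a_i \chi_{E_i}$ can be sketched directly when, as an assumption for this illustration, $\mu$ is Lebesgue measure on $\mathbb{R}$ and every $E_i$ and $E$ is an interval, so that $\mu(E_i \cap E)$ is just the length of an overlap. The helpers `interval_length`, `intersect` and `integrate_simple` are hypothetical names.

```python
def interval_length(a, b):
    """Lebesgue measure of an interval with endpoints a <= b (zero if empty)."""
    return max(0.0, b - a)

def intersect(I, J):
    """Intersection of two intervals given as (left, right) pairs."""
    return (max(I[0], J[0]), min(I[1], J[1]))

def integrate_simple(terms, E):
    """Integral over E of s = sum_i a_i * chi_{E_i} w.r.t. Lebesgue measure.

    `terms` is a list of (a_i, E_i) pairs with disjoint intervals E_i.
    """
    return sum(a * interval_length(*intersect(Ei, E)) for a, Ei in terms)

# s = 2 * chi_[0, 1) + 5 * chi_[1, 3)
s = [(2.0, (0.0, 1.0)), (5.0, (1.0, 3.0))]
print(integrate_simple(s, (0.0, 3.0)))  # 2 * 1 + 5 * 2 = 12.0
print(integrate_simple(s, (0.5, 2.0)))  # 2 * 0.5 + 5 * 1 = 6.0
```

Whether the intervals are open, closed or half-open does not matter here, since endpoints have Lebesgue measure zero.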

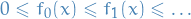

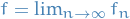

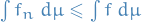

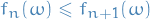

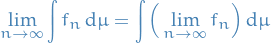

Let  be a sequence of nonnegative measurable functions on

be a sequence of nonnegative measurable functions on  . Assume that

. Assume that

for each

for each

for each

for each  .

.

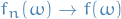

Then, we write  pointwise.

pointwise.

Then  is measurable, and

is measurable, and
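A numerical illustration of the monotone convergence theorem, as a sketch only: the example $f(x) = e^{-x}$ on $[0, \infty)$ with $f_n = f \chi_{[0, n]}$ (so $f_n \uparrow f$ pointwise) is an assumption chosen for this note, and the integrals are approximated with a hypothetical midpoint-rule helper rather than computed exactly. The exact values are $\int f_n \dd{x} = 1 - e^{-n} \uparrow 1 = \int f \dd{x}$.

```python
import math

def midpoint_integral(g, a, b, steps):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

def f(x):
    """The pointwise limit f(x) = e^{-x} on [0, inf)."""
    return math.exp(-x)

def integral_f_n(n, steps_per_unit=1000):
    """Integral of f_n = f * chi_[0, n]; f_n vanishes outside [0, n], so integrate over [0, n]."""
    return midpoint_integral(f, 0.0, float(n), n * steps_per_unit)

values = [integral_f_n(n) for n in range(1, 11)]
print(values)  # increasing towards the integral of f over [0, inf), which is 1
```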

Let $f = \lim_{n \to \infty} f_n$. By the proposition on limits of measurable functions, $f$ is measurable.

Since each $f_n$ satisfies $f_n \le f$, we know $\int f_n \dd{\mu} \le \int f \dd{\mu}$.

- If  , then since  and for all  we have  , and  .

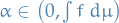

Let  and  .

Step 1: Approximate  by a simple function.

Let  be a simple function such that  and  .

Such an  exists by definition of Lebesgue integral. Thus, there are  such that  , and disjoint measurable sets  such that

If any  , it doesn't contribute to the integral, so we may ignore it and assume that there are no such sets.

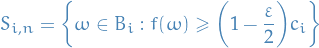

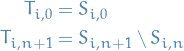

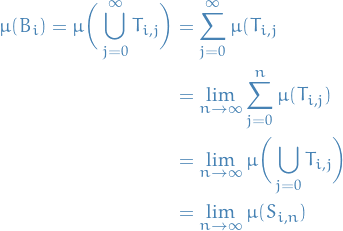

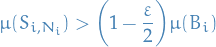

Step 2: Find sets of large measure where the convergence is controlled.

Note that for all  we have

That is, for each  and  ,

For  and  , let

And since it's easier to work with disjoint sets,

Observe that,

Then,

We don't have a "rate of convergence" on  , but on  we know that we are  close, and so we can "control" the convergence.

Step 3: Approximate  from below.

For each  if  , then let  be such that

and otherwise, let  be such that

Let  , and let  .

For each  ,  and  we have

Thus,  , and  ,

If there is a  such that  , then

Otherwise (if the integral is finite), then

For every  and  , there is an  such that

For every  such that

Therefore

Thus,

as wanted.

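Let $\{ f_n \}$ be any sequence of nonnegative measurable functions on $(X, \mathcal{A}, \mu)$.

Then

$\int \liminf_{n \to \infty} f_n \dd{\mu} \le \liminf_{n \to \infty} \int f_n \dd{\mu}$

Let $g_m = \inf_{n \ge m} f_n$ and observe $\{ g_m \}$ are pointwise increasing (to $\liminf_{n \to \infty} f_n$).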
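The inequality in Fatou's lemma can be strict. As an illustrative example (my choice, not from the notes above), take $f_n = n \chi_{(0, 1/n)}$ on $\mathbb{R}$ with Lebesgue measure: every $\int f_n \dd{x} = 1$, but $\liminf_n f_n = 0$ pointwise. The sketch below uses exact rational arithmetic via Python's `fractions` module; the helper names are hypothetical.

```python
from fractions import Fraction

def f_n(n, x):
    """f_n = n * chi_{(0, 1/n)} on the real line (n >= 1)."""
    return n if 0 < x < Fraction(1, n) else 0

def integral_f_n(n):
    """Simple function with the single value n on a set of Lebesgue measure 1/n."""
    return n * Fraction(1, n)

# ∫ f_n = 1 for every n, so liminf_n ∫ f_n = 1 ...
print([integral_f_n(n) for n in (1, 10, 100)])  # all equal to Fraction(1, 1)

# ... but for each fixed x, f_n(x) = 0 once n >= 1/x (x > 0), and always for x <= 0,
# so liminf_n f_n(x) = 0 everywhere and ∫ liminf_n f_n = 0 < 1.
samples = [Fraction(-1, 2), Fraction(0), Fraction(1, 1000), Fraction(1, 3), Fraction(2)]
print(all(f_n(n, x) == 0 for x in samples for n in range(1000, 2000)))  # True
```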

Properties of integrals

Let $(X, \mathcal{A}, \mu)$ be a measure space.

If $f$ is a nonnegative measurable function, then there is an increasing sequence of simple functions $\{ f_n \}$ such that

$f_n \uparrow f$ pointwise.

Given  as above and

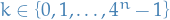

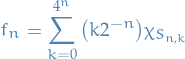

as above and  for

for  , let

, let

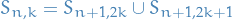

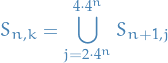

![\begin{equation*}

\begin{split}

S_{n, k} &= f^{-1} \Big( \big[k 2^{-n}, (k + 1) 2^{-n} \big) \Big) \\

S_{n, 4^n} &= f^{-1} \Big( [2^n, \infty] \Big)

\end{split}

\end{equation*}](../../assets/latex/measure_theory_aa6b395505b8bd8701e0668288bf3f46245d7906.png)

and

$f_n = \sum_{k=0}^{4^n} k 2^{-n} \chi_{S_{n, k}}$

Or, a bit more explicitly (and maybe a bit more clearly),

![\begin{equation*}

f_n = \underbrace{4^n 2^{-n}}_{= 2^n} \chi_{f^{-1} \Big( [2^n, \infty] \Big)} + \sum_{k=0}^{4^n - 1} \big( k 2^{-n} \big) \chi_{f^{-1} \Big( \big[\frac{k}{2^n}, \frac{k + 1}{2^n} \big) \Big)}

\end{equation*}](../../assets/latex/measure_theory_bc744558768b4a547f739ded454eaedaafbc4971.png)
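The dyadic construction above can be evaluated pointwise: on $S_{n, k}$ the value is $k 2^{-n} = \lfloor 2^n f(x) \rfloor / 2^n$, and on $S_{n, 4^n}$ it is capped at $2^n$. The sketch below (the name `dyadic_approx` is hypothetical) takes the value $f(x) \in [0, \infty]$ directly, so it shows the behaviour at a single point rather than building $f_n$ as a function object.

```python
import math

def dyadic_approx(fx, n):
    """Value f_n(x) of the n-th simple function, given fx = f(x) in [0, inf].

    f_n = 2^n on S_{n,4^n} = f^{-1}([2^n, inf]) and k 2^{-n} on
    S_{n,k} = f^{-1}([k 2^{-n}, (k+1) 2^{-n})) for k = 0, ..., 4^n - 1.
    """
    if fx < 0:
        raise ValueError("f must be nonnegative")
    cap = 2.0 ** n
    if fx >= cap:              # x lies in S_{n, 4^n} (this also covers fx = inf)
        return cap
    k = math.floor(fx * cap)   # x lies in S_{n, k}: k 2^{-n} <= fx < (k + 1) 2^{-n}
    return k / cap

for fx in (0.3, math.pi, math.inf):
    print(fx, [dyadic_approx(fx, n) for n in range(6)])
```

For example `dyadic_approx(math.pi, 1)` is `2.0` (capped) and `dyadic_approx(math.pi, 2)` is `3.0`; the values never decrease in $n$, never exceed $f(x)$, and are within $2^{-n}$ of $f(x)$ once $2^n > f(x)$.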

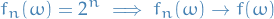

For each $n$, $\{ S_{n, k} \}_{k=0}^{4^n}$ is a cover of $X$. On each $S_{n, k}$ we have $f_n = k 2^{-n} \le f$, hence $f_n \le f$ on the entirety of $X$.

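Consider $x \in X$. If $f(x) < \infty$, then for $n$ large enough that $2^n > f(x)$, we have $x \in S_{n, k}$ for some $k < 4^n$, which in turn implies

$0 \le f(x) - f_n(x) < 2^{-n}$

Hence $\lim_{n \to \infty} f_n(x) = f(x)$.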

Finally, if $f(x) = \infty$, then $x \in S_{n, 4^n}$ for all $n$, and so the $f_n(x)$ take on values $2^n \to \infty = f(x)$.

Hence, $\lim_{n \to \infty} f_n(x) = f(x)$ in all cases.

Furthermore, for any $n$ and $k < 4^n$, there is the nesting property

$S_{n, k} = S_{n + 1, 2k} \cup S_{n + 1, 2k + 1}$

so on $S_{n, k}$ we have $f_n \le f_{n + 1}$.

(This can be seen by observing that what we're really doing here is dividing the values $f$ takes on into a grid, and observing that if we're in $S_{n, k}$ then we're either in $S_{n + 1, 2k}$ or $S_{n + 1, 2k + 1}$.)

For $x \in S_{n, 4^n}$, we have $f(x) \ge 2^n$, and then also $f_{n + 1}(x) \ge 2^n = f_n(x)$,

so again $f_n \le f_{n + 1}$ and $\{ f_n \}$ is pointwise increasing.

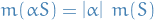

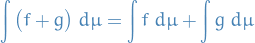

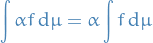

Let  be a measure space.

be a measure space.

Let

be nonnegative, measurable functions

be nonnegative, measurable functions![$\alpha \in [0, \infty]$](../../assets/latex/measure_theory_37d5fe22c2c90662a9b0b8b33308006a3475d65c.png) s.t.

s.t.

is defined

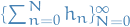

be a sequence of nonnegative measurable functions.

be a sequence of nonnegative measurable functions.

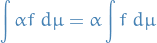

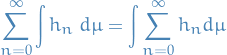

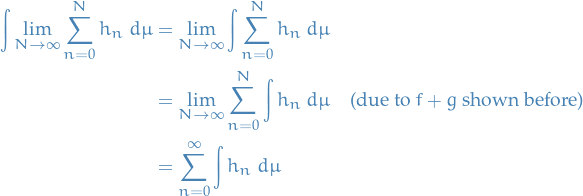

Then

Finite sum

Scalar multiplication

Infinte sums

Let $\{ s_n \}$ and $\{ t_n \}$ be increasing sequences of simple functions converging to $f$, $g$, respectively.

Note $\{ s_n + t_n \}$ is also increasing to $f + g$.

By monotone convergence theorem

$\int (f + g) \dd{\mu} = \lim_{n \to \infty} \int (s_n + t_n) \dd{\mu} = \lim_{n \to \infty} \Big( \int s_n \dd{\mu} + \int t_n \dd{\mu} \Big) = \int f \dd{\mu} + \int g \dd{\mu}$

The argument is similar for scalar multiplication.

Finally, $\big\{ \sum_{n=1}^{N} f_n \big\}_{N \in \mathbb{N}}$ is an increasing sequence of nonnegative measurable functions, since sums of measurable functions are measurable.

Thus, by monotone convergence and the result for finite sums

$\int \sum_{n=1}^{\infty} f_n \dd{\mu} = \lim_{N \to \infty} \int \sum_{n=1}^{N} f_n \dd{\mu} = \lim_{N \to \infty} \sum_{n=1}^{N} \int f_n \dd{\mu} = \sum_{n=1}^{\infty} \int f_n \dd{\mu}$

Integrals on sets

Let  be a measure or probability space.

be a measure or probability space.

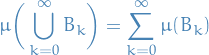

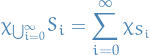

If  is a sequence of disjoint measurable sets then

is a sequence of disjoint measurable sets then

Let $(X, \mathcal{A}, \mu)$ be a measure or probability space.

If $s$ is a simple function and $E$ is a measurable set, then $s \chi_E$ is a simple function.

Let  be a measure or probability space.

be a measure or probability space.

Let  be a nonnegative measurable function and

be a nonnegative measurable function and  .

.

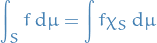

The integral of  on

on  is defined to be

is defined to be

Let  be a measure or probability space.

be a measure or probability space.

Let  be a nonnegative measurable function.

be a nonnegative measurable function.

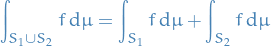

If

and

and  are disjoint measurable sets, then

are disjoint measurable sets, then

If

are disjoint measurable sets, then

are disjoint measurable sets, then

Let  be a measure or probability space.

be a measure or probability space.

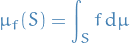

If  is a nonnegative measurable function, then

is a nonnegative measurable function, then ![$\mu_f: \mathcal{A} \to [0, \infty]$](../../assets/latex/measure_theory_5431344eb18be9cd48ff98f38d15812bb8f3f3a3.png) defined by

defined by  :

:

is a measure on  .

.

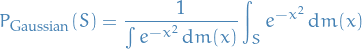

If $\int f \dd{P} = 1$, then ![$P_f: \mathcal{A} \to [0, \infty]$](../../assets/latex/measure_theory_595ab36a9344eed11eb64916b5fa997660643f02.png) defined by

$P_f(E) = \int_E f \dd{P}$

is a probability measure on $\mathcal{A}$.

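The (real) Gaussian measure on $\mathbb{R}^n$ is defined as:

$\gamma^n(A) = \frac{1}{(2 \pi)^{n / 2}} \int_A \exp \Big( - \frac{1}{2} \norm{x}^2 \Big) \dd{\lambda^n}(x), \quad A \in \mathcal{B}(\mathbb{R}^n)$

where $\lambda^n$ denotes the Lebesgue measure.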
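For $n = 1$ the definition can be checked numerically: $\gamma^1([a, b])$ should agree with the closed form $\frac{1}{2} \big( \operatorname{erf}(b / \sqrt{2}) - \operatorname{erf}(a / \sqrt{2}) \big)$, and the total mass should be $1$. This is a sketch under the assumption that a midpoint rule on a bounded interval is an adequate stand-in for the Lebesgue integral of this smooth density; the function names are hypothetical, while `math.erf` is the standard-library error function.

```python
import math

def gaussian_density(x):
    """Density of the standard (n = 1) Gaussian measure w.r.t. Lebesgue measure."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def gamma1(a, b, steps=100_000):
    """gamma^1([a, b]) approximated by the midpoint rule."""
    h = (b - a) / steps
    return h * sum(gaussian_density(a + (i + 0.5) * h) for i in range(steps))

def gamma1_exact(a, b):
    """Closed form of gamma^1([a, b]) via the error function."""
    return 0.5 * (math.erf(b / math.sqrt(2.0)) - math.erf(a / math.sqrt(2.0)))

print(gamma1(-1.0, 1.0), gamma1_exact(-1.0, 1.0))  # both about 0.6827
print(gamma1(-10.0, 10.0))  # about 1, the total mass (the tails beyond ±10 are negligible)
```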

A Gaussian probability measure can also be defined for an arbitrary Banach space $B$ as follows:

We say $\gamma$ is a Gaussian probability measure on $B$ if and only if $\gamma$ is a Borel probability measure, i.e.

$\gamma: \mathcal{B}(B) \to [0, 1]$

such that $L_* \gamma$ is a real Gaussian probability measure on $\mathbb{R}$ for every linear functional $L \in B^*$, i.e. every continuous linear map $L: B \to \mathbb{R}$.

Here we have used the notation $L_* \gamma$, defined

$(L_* \gamma)(A) = \gamma \big( L^{-1}(A) \big), \quad A \in \mathcal{B}(\mathbb{R})$

where $\mathcal{B}(\mathbb{R})$ denotes the Borel σ-algebra on $\mathbb{R}$.

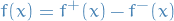

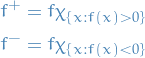

Integrals of general functions

Let $(\Omega, \mathcal{A}, \mu)$ be a measure or probability space.

If ![$f: \Omega \to [- \infty, \infty]$](../../assets/latex/measure_theory_27db8ee19d1a570b0cf117dd687777fac66160ec.png) is a measurable function, then the positive and negative parts are defined by

$f^+ = \max(f, 0), \quad f^- = \max(-f, 0)$

Note: $f^+$ and $f^-$ are nonnegative.

Let $(\Omega, \mathcal{A}, \mu)$ be a measure or probability space.

If ![$f: \Omega \to [- \infty, \infty]$](../../assets/latex/measure_theory_27db8ee19d1a570b0cf117dd687777fac66160ec.png) is a measurable function, then $f^+$ and $f^-$ are measurable functions.

Let $(\Omega, \mathcal{A}, \mu)$ be a measure or probability space.

- A nonnegative function $f$ is defined to be integrable if it is measurable and $\int f \dd{\mu} < \infty$.
- A function $f$ is defined to be integrable if it is measurable and $|f|$ is integrable.

For an integrable function $f$, the integral of $f$ is defined to be

$\int f \dd{\mu} = \int f^+ \dd{\mu} - \int f^- \dd{\mu}$

On a set $E \in \mathcal{A}$, the integral is defined to be

$\int_E f \dd{\mu} = \int_E f^+ \dd{\mu} - \int_E f^- \dd{\mu}$

Note that $|f| = f^+ + f^-$, but in the actual definition of the integral, we use $f = f^+ - f^-$.
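A small sketch of how the positive and negative parts enter the definition, using a hypothetical simple function (an assumption for illustration) that equals $2$ on a set of measure $1$ and $-3$ on a set of measure $2$. Integrability is decided by $\int |f| = \int f^+ + \int f^-$, while the integral itself is $\int f^+ - \int f^-$.

```python
def positive_part(v):
    """f^+ = max(f, 0), evaluated at a single value."""
    return max(v, 0.0)

def negative_part(v):
    """f^- = max(-f, 0), evaluated at a single value."""
    return max(-v, 0.0)

# Hypothetical simple function: value 2 on a set of measure 1, value -3 on a set of measure 2.
pieces = [(2.0, 1.0), (-3.0, 2.0)]  # (value, measure of the set where it is taken)

int_pos = sum(positive_part(a) * m for a, m in pieces)  # ∫ f^+ = 2
int_neg = sum(negative_part(a) * m for a, m in pieces)  # ∫ f^- = 6
integral_abs = int_pos + int_neg  # ∫ |f| = 8 < inf, so f is integrable
integral = int_pos - int_neg      # ∫ f = -4
print(integral_abs, integral)     # 8.0 -4.0
```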

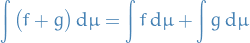

Let  be a measure or probability space.

be a measure or probability space.

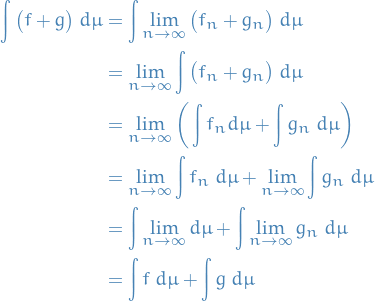

If  and

and  are real-valued integrable functions and

are real-valued integrable functions and  , then

, then

(Scalar multiplication)

(Additive)

Let $(\Omega, \mathcal{A}, \mu)$ be a measure or probability space.

Let $f$ and $g$ be measurable functions s.t.

$|f| \le |g|$

If $g$ is integrable then $f$ is integrable.

Examples

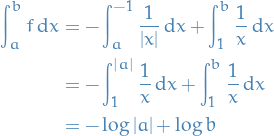

Consider  with Lebesgue measure. Is  integrable?

And

and

therefore

Thus,  is integrable.

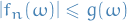

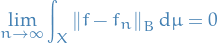

Lebesgue dominated convergence theorem

Let $(X, \mathcal{A}, \mu)$ be a measure or probability space.

Let $g$ be a nonnegative integrable function and let $\{ f_n \}$ be a sequence of (not necessarily nonnegative!) measurable functions.

Assume $g$ and all $f_n$ are real-valued.

If for all $n \in \mathbb{N}$ and $x \in X$ we have

$|f_n(x)| \le g(x)$

and the pointwise limit

$f(x) = \lim_{n \to \infty} f_n(x)$

exists, then

$\lim_{n \to \infty} \int f_n \dd{\mu} = \int f \dd{\mu}$

That is, if there exists a "dominating function" $g$, then we can "move" the limit into the integral.
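A quick numerical illustration of the theorem, with an example chosen for this note (an assumption, not from the text above): on $X = [0, 1]$ with Lebesgue measure, $f_n(x) = x^n$ is dominated by the integrable $g(x) = 1$, and $f_n \to 0$ everywhere except at $x = 1$, i.e. a.e. The theorem predicts $\int f_n \dd{x} \to 0$; the exact values are $\frac{1}{n + 1}$. The midpoint-rule helper is a hypothetical stand-in for the integral.

```python
def midpoint_integral(g, a, b, steps=20_000):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

# X = [0, 1] with Lebesgue measure, f_n(x) = x**n, dominated by g(x) = 1 (integrable on [0, 1]).
# Pointwise limit: 0 for x < 1 and 1 at x = 1, i.e. 0 a.e., so its integral is 0.
ns = (1, 5, 50, 500)
integrals = [midpoint_integral(lambda x, n=n: x ** n, 0.0, 1.0) for n in ns]
print(integrals)  # close to the exact values 1 / (n + 1), which tend to 0
```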

Since $|f_n(x)| \le g(x)$ for all $n$ and $x$, we find that $g + f_n$ and $g - f_n$ are nonnegative.

Consider

From Fatou's lemma, we have

Therefore

Consider  , then

(this looks very much like Fatou's lemma, but it ain't; $f_n$ does not necessarily have to be nonnegative as in Fatou's lemma)

Consider

Therefore,

Which implies

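Since $\limsup_{n \to \infty} \int f_n \dd{\mu} \le \int f \dd{\mu} \le \liminf_{n \to \infty} \int f_n \dd{\mu}$, we then have that $\lim_{n \to \infty} \int f_n \dd{\mu}$ exists and is equal to $\int f \dd{\mu}$.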

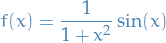

Examples of failure of dominated convergence

Where dominated convergence does not work

On $\mathbb{R}$ with Lebesgue measure, consider

![\begin{equation*}

\begin{split}

f_n &= \chi_{[n, n + \frac{1}{2}]} \\

g_n &= \arctan \big( x - n \big) + \frac{\pi}{2}

\end{split}

\end{equation*}](../../assets/latex/measure_theory_86b9124678b092e327558bdc249245b93ada07c3.png)

such that ![$g_n \in [0, \pi]$](../../assets/latex/measure_theory_1cda4a0be5367504c88a0871f4e46c2cc110f596.png) instead of ![$[- \frac{\pi}{2}, \frac{\pi}{2}]$](../../assets/latex/measure_theory_4c0845ce4ba68814a80ace45d8f5f56fddfec79f.png) as "usual" with $\arctan$.

Both of these are nonnegative sequences that converge to $0$ pointwise.

Notice there is no integrable dominating function for either of these sequences:

- For $\{ f_n \}$: a dominating function $h$ must satisfy $h \ge \chi_{[n, n + \frac{1}{2}]}$ for every $n$, and since these intervals are disjoint, $\int h \dd{x} \ge \sum_{n} \frac{1}{2} = \infty$; therefore no dominating integrable function exists.
- For $\{ g_n \}$: each $g_n(x) \to \pi$ as $x \to \infty$, i.e. on the right, and so a dominating function would have to be above $\frac{\pi}{2}$ on some interval $[n, \infty)$, which would lead to infinite integral.

Thus, Lebesgue dominated convergence does not apply; and indeed $\int f_n \dd{x} = \frac{1}{2}$ for every $n$, while the pointwise limit $0$ has integral $0$.
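The first counterexample, $f_n = \chi_{[n, n + \frac{1}{2}]}$, can be explored numerically. The sketch below (helper names are hypothetical, and a midpoint rule stands in for the Lebesgue integral) checks that each $f_n$ has integral $\frac{1}{2}$, that $f_n(x) = 0$ once $n > x$, and that the smallest candidate dominating function $\sup_{n \ge 0} f_n$ has integral roughly $K / 2$ over $[0, K]$, which grows without bound.

```python
import math

def f_n(n, x):
    """f_n = indicator of [n, n + 1/2] on the real line."""
    return 1.0 if n <= x <= n + 0.5 else 0.0

def envelope(x):
    """sup_{n >= 0} f_n(x): the smallest function dominating every f_n."""
    return 1.0 if x >= 0 and x - math.floor(x) <= 0.5 else 0.0

def midpoint_integral(g, a, b, steps):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

# Each f_n has integral 1/2 ...
print(midpoint_integral(lambda x: f_n(5, x), 0.0, 10.0, 10_000))  # about 0.5
# ... yet f_n(x) = 0 as soon as n > x, so the pointwise limit is 0 ...
print([f_n(n, 3.2) for n in range(2, 7)])  # [0.0, 1.0, 0.0, 0.0, 0.0]
# ... and the envelope has integral about K / 2 over [0, K], so it is not integrable.
for K in (10, 50, 200):
    print(K, midpoint_integral(envelope, 0.0, float(K), 1000 * K))
```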

Noncommutative limits: simple case

Noncommutative limits: another one

Consider $\mathbb{R}$ with Lebesgue measure and

![\begin{equation*}

f(x) = \frac{1}{x} \chi_{[1, \infty)} - \frac{1}{\left| x \right|} \chi_{(-\infty, -1]}

\end{equation*}](../../assets/latex/measure_theory_14635fa0f57a58ce700b3856836b8bcda95d51e0.png)

Consider $a < -1$ and $b > 1$ and

$\int \chi_{[a, b]} f \dd{x} = \log b - \log |a|$

Note that $\int |f| \dd{x} = \infty$, so $f$ is not integrable.

Consider

![\begin{equation*}

\begin{split}

\lim_{N \to \infty} \int \chi_{[-N, N]} f \dd{x} &= \lim_{N \to \infty} \Big( - \log \left| - N \right| + \log N \Big) = \lim_{N \to \infty} 0 = 0 \\

\lim_{N \to \infty} \int \chi_{[-N, 2N]} f \dd{x} &= \lim_{N \to \infty} \Big( - \log N + \log 2N \Big) = \lim_{N \to \infty} \log 2 = \log 2

\end{split}

\end{equation*}](../../assets/latex/measure_theory_7503b43d535ab9bfd060eaa19b94e17bd2de244e.png)
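The two truncation schemes can be compared numerically for this $f$ (which equals $\frac{1}{x}$ on $[1, \infty)$, $-\frac{1}{|x|}$ on $(-\infty, -1]$ and $0$ in between). This is a sketch: the midpoint rule is an assumed stand-in for the Lebesgue integral, and the integral is split at $\pm 1$ so that $f$ is smooth on each piece. The symmetric truncations give about $0$, the asymmetric ones about $\log 2$.

```python
import math

def f(x):
    """f(x) = 1/x on [1, inf), -1/|x| on (-inf, -1], and 0 in between."""
    if x >= 1:
        return 1.0 / x
    if x <= -1:
        return -1.0 / abs(x)
    return 0.0

def midpoint_integral(g, a, b, steps):
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

def truncated_integral(a, b, steps_per_unit=200):
    """Integral of chi_[a, b] f for a <= -1 and b >= 1, split at -1 and 1."""
    left = midpoint_integral(f, a, -1.0, int((-1.0 - a) * steps_per_unit))
    right = midpoint_integral(f, 1.0, b, int((b - 1.0) * steps_per_unit))
    return left + right

for N in (10, 100, 500):
    print(N, truncated_integral(-N, N), truncated_integral(-N, 2 * N))  # about 0 and log 2
```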

Commutative limits

Consider

![\begin{equation*}

\lim_{N \to \infty} \lim_{M \to \infty} \int \chi_{[-M, N]} f \dd{x} = \lim_{M \to \infty} \lim_{N \to \infty} \int \chi_{[- M, N]} f \dd{x}

\end{equation*}](../../assets/latex/measure_theory_1591ddcfb29c1faa905731373db01dfdb888bb3e.png)

Here we take $f$ to be integrable (unlike the $f$ in the previous example), and for all $M$ and $N$,

$\big| \chi_{[-M, N]} f \big| \le |f|$

By multiple applications of LDCT

![\begin{equation*}

\begin{split}

\lim_{N \to \infty} \lim_{M \to \infty} \int \chi_{[-M, N]} f \dd{x} &= \lim_{N \to \infty} \int \chi_{(-\infty, N]} f \dd{x} \\

&= \int \chi_{(-\infty, \infty)} f \dd{x} \\

&= \int f \dd{x} \\

&= \int \chi_{(-\infty, \infty)} f \dd{x} \\

&= \lim_{M \to \infty} \int \chi_{[-M, \infty)} f \dd{x} \\

&= \lim_{M \to \infty} \lim_{N \to \infty} \int \chi_{[-M, N]} f \dd{x}

\end{split}

\end{equation*}](../../assets/latex/measure_theory_dbd2a8cecbc43b92c2b5adc3837df5b30a770f80.png)

Showing that in this case the limits do in fact commute.

Riemann integrable functions are measurable

All Riemann integrable functions are measurable.

For any Riemann integrable function, the Riemann integral and the Lebesgue integral are equal.
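A numerical illustration (not a proof) of the two integrals agreeing, for the example $f(x) = x^2$ on $[0, 1]$ chosen for this note. The Riemann lower sum partitions the *domain*; the Lebesgue-style lower sum partitions the *range* into $[y_k, y_{k+1})$ and weights $y_k$ by $\lambda(\{ x \in [0, 1] : y_k \le x^2 < y_{k+1} \}) = \sqrt{y_{k+1}} - \sqrt{y_k}$, which is exactly the integral of a simple function below $f$. Both approach $\frac{1}{3}$; the function names are hypothetical.

```python
import math

def riemann_lower_sum(n):
    """Left Riemann sum of f(x) = x**2 over [0, 1] with n equal pieces of the DOMAIN."""
    return sum((i / n) ** 2 for i in range(n)) / n

def lebesgue_lower_sum(n):
    """Lower sum from partitioning the RANGE [0, 1] of f(x) = x**2 into n pieces.

    The set {x in [0, 1] : y_k <= x**2 < y_{k+1}} is [sqrt(y_k), sqrt(y_{k+1})),
    whose Lebesgue measure is sqrt(y_{k+1}) - sqrt(y_k).
    """
    total = 0.0
    for k in range(n):
        y_k, y_next = k / n, (k + 1) / n
        total += y_k * (math.sqrt(y_next) - math.sqrt(y_k))
    return total

for n in (10, 100, 10_000):
    print(n, riemann_lower_sum(n), lebesgue_lower_sum(n))  # both approach 1/3
```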

Almost everywhere and Lp spaces

If $f$ is a nonnegative, measurable function, and $\int f \dd{\mu} = 0$, then $f = 0$ a.e.

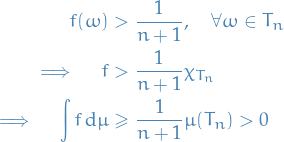

For $f$ as above, let

![\begin{equation*}

\begin{split}

T_0 &= f^{-1} \Big( (1, \infty] \Big) \\

T_n &= f^{-1} \bigg( \bigg( \frac{1}{n + 1}, \frac{1}{n} \bigg] \bigg), \quad n \in \mathbb{Z}^+

\end{split}

\end{equation*}](../../assets/latex/measure_theory_2ceab74592bc736a2823d5c8f0aa92dafd661f57.png)

Observe the $T_n$ are disjoint and

![\begin{equation*}

\bigcup_{i = 0}^{\infty} T_i = f^{-1} \bigg( (0, \infty] \bigg)

\end{equation*}](../../assets/latex/measure_theory_93b55f6349b02c3782503473a25b3f7b47350719.png)

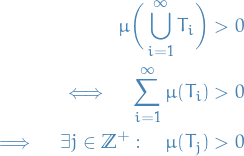

Suppose, for contradiction, that $f = 0$ a.e. does not hold. This implies that $f > 0$ on a set of positive measure, i.e.

![\begin{equation*}

\mu \bigg( f^{-1} \Big( (0, \infty] \Big) \bigg) > 0

\end{equation*}](../../assets/latex/measure_theory_e85507015506242700deeda1834343a94f438944.png)

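but this implies that $\mu(T_n) > 0$ for some $n$, since $\mu \big( f^{-1}((0, \infty]) \big) = \sum_{n=0}^{\infty} \mu(T_n)$ by countable additivity.

Thus,

$\int f \dd{\mu} \ge \int_{T_n} f \dd{\mu} \ge \frac{1}{n + 1} \mu(T_n) > 0$

which is a contradiction, hence $f = 0$ a.e.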

Let  and

and  be integrable.

be integrable.

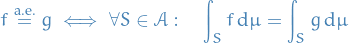

is the set of all equivalence classes of integrable functions wrt. the equivalence relation given by a.e. equality, i.e.

is the set of all equivalence classes of integrable functions wrt. the equivalence relation given by a.e. equality, i.e.

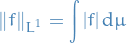

If $f$ is an integrable function, the $L^1$ norm is

$\norm{f}_{L^1} = \int |f| \dd{\mu}$

If ![$[f] \in L^1(\dd{\mu})$](../../assets/latex/measure_theory_febf1d9c9d9fc46e4fc2d9e79dd425642660a25e.png) and ![$g \in [f]$](../../assets/latex/measure_theory_0674799b02ef974d9bce66406bc2481c9c7bb951.png), the integral and norm are defined to be

![\begin{equation*}

\begin{split}

\int [f] \dd{\mu} &= \int g \dd{\mu} \\

\norm{[f]}_{L^1} &= \norm{g}_{L^1}

\end{split}

\end{equation*}](../../assets/latex/measure_theory_6917d223cd066748131b36bb5557b619ee598345.png)

If ![$[f], [g], [h] \in L^1$](../../assets/latex/measure_theory_e32b14a7500cc43f20c1a1657905bfee0ed6d43e.png), then ![$[f + g] \in L^1$](../../assets/latex/measure_theory_9ee9da136403a0f769d8056adb9124c5934f6f3f.png), and

![\begin{equation*}

\norm{[f - g]}_{L^1} \le \norm{[f - h]}_{L^1} + \norm{[h - g]}_{L^1}

\end{equation*}](../../assets/latex/measure_theory_280aa5fd4f0f61951e29cf2fba4c67eb4af32159.png)
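On a finite measure space the statements above reduce to finite sums, which makes them easy to poke at. The Python sketch below is only an illustration under that assumption: the weights, the functions `f`, `g`, `h`, `k` and the helpers `integral`, `l1_norm`, `sub` are hypothetical. Point `3` has weight zero, so changing a function there stays inside its equivalence class, and neither the integral nor the $L^1$ norm notices.

```python
from fractions import Fraction

# A hypothetical finite measure space on the points 0, 1, 2, 3.
# Point 3 has weight 0, so {3} is a null set.
WEIGHTS = [Fraction(1, 2), Fraction(1, 4), Fraction(1, 4), Fraction(0)]


def integral(f):
    """Integral of f (a list of values, one per point) against the weights."""
    return sum(w * fx for w, fx in zip(WEIGHTS, f))


def l1_norm(f):
    """L^1 norm: the integral of |f|."""
    return sum(w * abs(fx) for w, fx in zip(WEIGHTS, f))


def sub(f, g):
    """Pointwise difference f - g."""
    return [a - b for a, b in zip(f, g)]


f = [1, -2, 3, 5]
g = [1, -2, 3, 100]  # agrees with f except on the null set {3}, so g is in [f]
h = [0, 1, 1, 0]
k = [2, 0, -1, 7]

# Representatives of the same class have the same integral and norm,
# and their L^1 distance is zero.
assert integral(f) == integral(g)
assert l1_norm(f) == l1_norm(g)
assert l1_norm(sub(f, g)) == 0
# Triangle inequality ||[f - k]|| <= ||[f - h]|| + ||[h - k]||
assert l1_norm(sub(f, k)) <= l1_norm(sub(f, h)) + l1_norm(sub(h, k))
```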

$L^1(\dd{\mu})$ is a real vector space with addition and scalar multiplication given pointwise almost everywhere.

Functions taking the value $\pm \infty$ on a set of measure zero are fine!

Such a function is still almost everywhere equal to a finite-valued integrable function (and is itself integrable), hence its equivalence class is in $L^1$.

Let ![$\left\{ [f_k] \right\}$](../../assets/latex/measure_theory_adf0c6a74e755c8ef23b722bb511497982e81a93.png) be a Cauchy sequence. Since the

be a Cauchy sequence. Since the  are integrable, we may assume we choose

are integrable, we may assume we choose  valued representatives.

valued representatives.

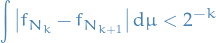

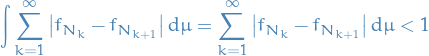

For  , let

, let  be such that for

be such that for  ,

,

and  .

.

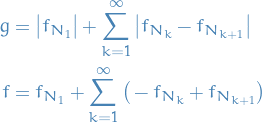

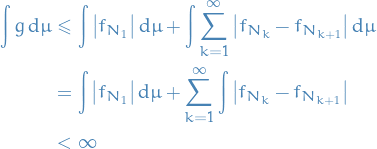

Thus,

and

Thus,  is finite almost everywhere. Thus, this series is infinite on a set of measure zero, so we may assume the representatives

is finite almost everywhere. Thus, this series is infinite on a set of measure zero, so we may assume the representatives  are zero there and the sum is finite at each

are zero there and the sum is finite at each  .

.

Thus,  converges everywhere.

converges everywhere.

Let

(observe that the last part is just rewriting the  ).

).

By monotone convergence theorem

Observe that pointwise

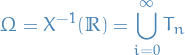
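The only constructive step in the completeness proof is choosing the indices $k_j$. The Python sketch below does this greedily on a finite prefix of a sequence in $L^1$ of a two-point space with weights $\frac{1}{2}$, an assumption made so everything is computable exactly. `tail_diameter` and `fast_subsequence` are hypothetical helpers, list indices are 0-based, and since only a prefix is available, the "tail" is the rest of that prefix, so this illustrates the selection rather than proving anything. The example sequence $f_k(x) = \sum_{i=1}^{k} (-1)^{i+x}/i^2$ converges slowly enough that the chosen indices eventually start skipping.

```python
from fractions import Fraction

# A hypothetical two-point measure space {0, 1}, each point with weight 1/2.
WEIGHTS = [Fraction(1, 2), Fraction(1, 2)]


def l1_dist(f, g):
    """||f - g||_{L^1} for functions given as lists of values."""
    return sum(w * abs(a - b) for w, a, b in zip(WEIGHTS, f, g))


def tail_diameter(fs, k):
    """max ||f_m - f_n|| over k <= n <= m < len(fs): the Cauchy condition seen on a prefix."""
    return max(l1_dist(fs[m], fs[n]) for m in range(k, len(fs)) for n in range(k, m + 1))


def fast_subsequence(fs, J):
    """Greedily pick indices k_1 < ... < k_J with tail_diameter(fs, k_j) < 2^(-j)."""
    ks = []
    for j in range(1, J + 1):
        start = ks[-1] + 1 if ks else 0
        k = next((k for k in range(start, len(fs))
                  if tail_diameter(fs, k) < Fraction(1, 2 ** j)), None)
        if k is None:  # the prefix is too short to go further
            break
        ks.append(k)
    return ks


# fs[k - 1] holds f_k(x) = sum_{i=1}^{k} (-1)^(i + x) / i^2 for x = 0, 1 and k = 1, ..., 40.
fs = [[sum(Fraction((-1) ** (i + x), i * i) for i in range(1, k + 1)) for x in range(2)]
      for k in range(1, 41)]
ks = fast_subsequence(fs, 8)

# Consecutive distances are < 2^(-j), so their sum is < 1, as in the proof.
total = sum(l1_dist(fs[a], fs[b]) for a, b in zip(ks, ks[1:]))
assert total < 1
```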

Applications to Probability

Notation

- $(\Omega, \mathcal{F}, P)$ is a probability space
- Random variable $X: \Omega \to \mathbb{R}$ is a measurable function
- $\mathcal{B}(\mathbb{R})$ denotes the Borel sigma-algebra on $\mathbb{R}$
- $\rho_X$ denotes the probability distribution measure for $X$

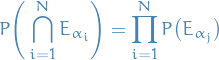

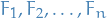

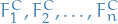

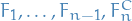

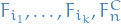

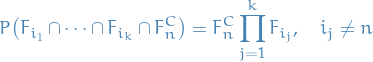

- Let $\{A_n\}_{n=1}^{\infty}$ be a sequence of random events
- Let $\{A_n\}_{n=1}^{N}$ be a sequence of finitely many random events

Probability and cumulative distributions

An elementary event is an element of $\Omega$.

A random event is an element of $\mathcal{F}$.

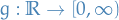

A random variable is a measurable function from $(\Omega, \mathcal{F})$ to ![$\Big( [- \infty, \infty], \mathcal{B} \big( [- \infty, \infty] \big) \Big)$](../../assets/latex/measure_theory_71661c6fca4577bd7a8dc72d729db6c0c7455aef.png).

Let

- $(X, \mathcal{A}, \mu)$ be a measure space
- $(Y, \mathcal{C})$ be a measurable space
- $f: X \to Y$ be a measurable function

Then the push-forward of $\mu$ by $f$ is the measure $f_* \mu$ on $(Y, \mathcal{C})$ defined by $f_* \mu(B) = \mu\big(f^{-1}(B)\big)$ for all $B \in \mathcal{C}$.
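For discrete measures the push-forward is just "move each point mass to its image and add up what lands in the same place". The Python sketch below assumes a measure given as a finite dict of point masses and sets given as predicates; `push_forward` and `measure_of` are hypothetical helpers, not from any library.

```python
from fractions import Fraction


def push_forward(mu, f):
    """Push-forward of a discrete measure mu (dict: point -> mass) along the map f.

    Each point mass is moved to f(x); masses landing on the same y are added,
    so the result assigns mu(f^{-1}({y})) to y.
    """
    nu = {}
    for x, mass in mu.items():
        y = f(x)
        nu[y] = nu.get(y, 0) + mass
    return nu


def measure_of(nu, B):
    """Measure of the set B (given as a predicate) under a discrete measure nu."""
    return sum(mass for point, mass in nu.items() if B(point))


def f(x):
    return x % 3


def in_B(y):
    return y <= 1


# Counting measure on {0, ..., 5}, pushed forward along x -> x mod 3.
mu = {x: Fraction(1) for x in range(6)}
f_mu = push_forward(mu, f)

# (f_* mu)(B) equals mu(f^{-1}(B)), computed directly on the domain.
assert measure_of(f_mu, in_B) == measure_of(mu, lambda x: in_B(f(x)))
```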

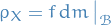

The probability distribution measure of $X$, denoted ![$\rho_X: \mathcal{B}(\mathbb{R}) \to [0, 1]$](../../assets/latex/measure_theory_a5c7220fd4e2a7451ed9120399b51f8339ac82f6.png), is defined by $\rho_X(B) = P\big(X^{-1}(B)\big)$ for all $B \in \mathcal{B}(\mathbb{R})$.

Equivalently, it's the push-forward of $P$ by $X$: $\rho_X = X_* P$.

In certain circles not including measure-theorists (existence of such circles is trivial), you might hear talk about "probability distributions". Usually what is meant by this is $\rho_X$ for some random variable $X$.

That is, a "distribution of $X$" usually means that there is some probability space $(\Omega, \mathcal{F}, P)$ in which $X$ is a random variable, i.e. $X: \Omega \to \mathbb{R}$ is measurable, and the "distribution of $X$" is the corresponding probability distribution measure $\rho_X$!

Confusingly enough, they will often talk about the "$P$ distribution of $X$", in which case $P$ is NOT a probability measure on $\Omega$, but denotes a probability distribution measure of the random variable.

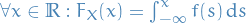

The cumulative distribution function of $X$, denoted ![$F_X: \mathbb{R} \to [0, 1]$](../../assets/latex/measure_theory_857de4732cc5895e72b48743bb1ed0b6c6ef9120.png), is defined by

![\begin{equation*}

\forall x \in \mathbb{R}, \quad F_X(x) = P(X \le x) = \rho_X \big( (- \infty, x] \big)

\end{equation*}](../../assets/latex/measure_theory_1135df16d8d98e78eda428fdded8af11029fc26f.png)

where $\rho_X$ is the probability distribution measure of $X$.
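As a concrete case, here is a Python sketch computing $\rho_X$ and $F_X$ for a fair die. The die ($P$ uniform on $\Omega = \{1, \dots, 6\}$, $X(\omega) = \omega$) is an illustrative assumption, and Borel sets are represented as predicates, which is a simplification. `F_X` is literally $\rho_X\big((-\infty, x]\big)$.

```python
from fractions import Fraction

# Illustrative assumption: a fair die, Omega = {1, ..., 6}, P uniform, X(omega) = omega.
P = {omega: Fraction(1, 6) for omega in range(1, 7)}


def X(omega):
    """The random variable: the number of pips shown."""
    return omega


def rho_X(B):
    """Probability distribution measure rho_X(B) = P(X^{-1}(B)); B is given as a predicate."""
    return sum(p for omega, p in P.items() if B(X(omega)))


def F_X(x):
    """Cumulative distribution function F_X(x) = rho_X((-inf, x])."""
    return rho_X(lambda s: s <= x)


assert F_X(3) == Fraction(1, 2)
assert F_X(3.5) == F_X(3)  # F_X is flat between the atoms
```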

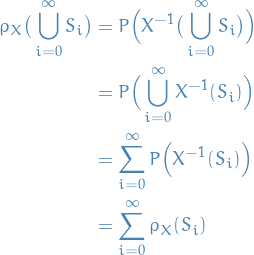

The probability distribution measure $\rho_X$ is a probability measure on the Borel sets $\mathcal{B}(\mathbb{R})$.

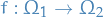

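If $\{B_i\}_{i=1}^{\infty}$ is a disjoint sequence of sets in $\mathcal{B}(\mathbb{R})$, then the preimages $X^{-1}(B_i)$ are disjoint sets in $\mathcal{F}$, and

$\rho_X\big(\bigcup_{i} B_i\big) = P\big(X^{-1}(\bigcup_{i} B_i)\big) = P\big(\bigcup_{i} X^{-1}(B_i)\big) = \sum_{i} P\big(X^{-1}(B_i)\big) = \sum_{i} \rho_X(B_i),$

so $\rho_X$ satisfies countable additivity and is a measure.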

Finally, $\rho_X(\mathbb{R}) = P\big(X^{-1}(\mathbb{R})\big) = P(\Omega) = 1$, so $\rho_X$ is a probability measure.

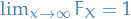

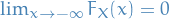

- $F_X$ is increasing
- $\lim_{x \to \infty} F_X(x) = 1$ and $\lim_{x \to -\infty} F_X(x) = 0$
- $F_X$ is right continuous (i.e. continuous from the right)

If $x \le y$, then

![\begin{equation*}

\begin{split}

F_X(x) &= P(X \le x) \\

&= P \Big( X^{-1} \big( (- \infty, x] \big) \Big) \\

& \le P \Big( X^{-1} \big( ( -\infty, x] \big) \Big) + P \Big( X^{-1} \big( (x, y] \big) \Big) \\

&= P \Big( X^{-1} \big( (-\infty, x] \cup (x, y] \big) \Big) \\

&= P \Big( X^{-1} \big( (- \infty, y] \big) \Big) \\

&= F_X(y)

\end{split}

\end{equation*}](../../assets/latex/measure_theory_02e5898fb6b34b5dbd3e516b4c58fb5d27ddfe1b.png)

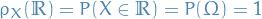

Consider the limit as $x \to \infty$. Let

![\begin{equation*}

\begin{split}

T_0 &= X^{-1} \big( (- \infty, 0] \big) \\

T_n &= X^{-1} \big( (n - 1, n] \big), \quad \forall n \in \mathbb{Z}^+

\end{split}

\end{equation*}](../../assets/latex/measure_theory_822502b5a0806137387a045422e41a8d00ed4b6e.png)

so the $T_n$ are disjoint and $\bigcup_{i=0}^{\infty} T_i = X^{-1}(\mathbb{R}) = \Omega$.

Then,

![\begin{equation*}

\begin{split}

1 &= P (\Omega) = P \bigg( \bigcup_{i=0}^{\infty} T_i \bigg) \\

&= \sum_{i=0}^{\infty} P(T_i) = \lim_{n \to \infty} \sum_{i=0}^{n} P(T_i) \\

&= \lim_{n \to \infty} \bigg[ P \Big( X^{-1} \big( (- \infty, 0] \big) \Big) + \sum_{i=1}^{n} P \bigg( X^{-1} \Big( (i - 1, i] \Big) \bigg) \bigg] \\

&= \lim_{n \to \infty} P \bigg( X^{-1} \Big( (- \infty, n] \Big) \bigg) \\

&= \lim_{n \to \infty} F_X(n)

\end{split}

\end{equation*}](../../assets/latex/measure_theory_c85daac3994fefdf28c3cd1e69dd2a502d02db52.png)

which, since $F_X$ is increasing, implies $\lim_{x \to \infty} F_X(x) = 1$.

Let $x \in \mathbb{R}$ and $n \in \mathbb{Z}^+$. Let

![\begin{equation*}

S_n = \bigg( x, x + \frac{1}{n} \bigg]

\end{equation*}](../../assets/latex/measure_theory_71877cd7b7156217e1f928f060006ff74416c33a.png)

The $S_n$ are nested, and similarly the $X^{-1}(S_n)$ are nested, with $\bigcap_{n=1}^{\infty} S_n = \emptyset$.

![\begin{equation*}

\begin{split}

0 &= P (\emptyset) = P \bigg( X^{-1} \bigg( \bigcap_{i=1}^{\infty} S_i \bigg) \bigg) \\

&= \lim_{n \to \infty} P \Big( X^{-1}(S_n) \Big) \\

&= \lim_{n \to \infty} P \bigg( X^{-1} \bigg( \bigg(x, x + \frac{1}{n} \bigg] \bigg) \bigg) \\

&= \lim_{n \to \infty} \bigg[ P \bigg( X^{-1} \bigg( \bigg( - \infty, x + \frac{1}{n} \bigg] \bigg) \bigg) - P \bigg( X^{-1} \bigg( \bigg(-\infty, x \bigg] \bigg) \bigg) \bigg] \\

&= \lim_{n \to \infty} \bigg[ F_X \bigg( x + \frac{1}{n} \bigg) - F_X(x) \bigg]

\end{split}

\end{equation*}](../../assets/latex/measure_theory_3f72b108040e545c8a2dbe673817e5955c4dd7a7.png)

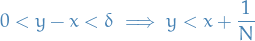

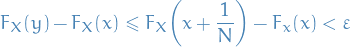

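Thus, given $\varepsilon > 0$, there exists $N \in \mathbb{Z}^+$ such that $F_X\big(x + \frac{1}{N}\big) - F_X(x) < \varepsilon$.

Let $\delta = \frac{1}{N}$, so for every $y$ with $x \le y < x + \delta$, monotonicity of $F_X$ gives $0 \le F_X(y) - F_X(x) \le F_X\big(x + \frac{1}{N}\big) - F_X(x) < \varepsilon$,

so $F_X$ is right continuous at $x$.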
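The right-continuity result is sharp: a CDF can jump, it just cannot jump when approached from the right. The Python sketch below uses the closed form of the fair-die CDF, $F(x) = \#\{k \in \{1, \dots, 6\} : k \le x\} / 6$ (an illustrative example, not from the notes; `F_die` is a hypothetical name), and evaluates it along $x \pm \frac{1}{n}$ at the atom $x = 3$. From the right the values equal $F(3)$; from the left they stay at $F(3) - P(X = 3)$.

```python
from fractions import Fraction
from math import floor


def F_die(x):
    """CDF of a fair die: F(x) = #{k in 1..6 : k <= x} / 6."""
    return Fraction(min(max(floor(x), 0), 6), 6)


x = 3
from_right = [F_die(x + Fraction(1, n)) for n in range(2, 100)]
from_left = [F_die(x - Fraction(1, n)) for n in range(2, 100)]

# F(x + 1/n) = F(x) for n >= 2: right continuous at the atom.
assert all(v == F_die(x) for v in from_right)
# F(x - 1/n) = 1/3 < 1/2 = F(x): the left limit misses the jump P(X = 3) = 1/6.
assert all(v == Fraction(1, 3) for v in from_left)
```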

Radon-Nikodym derivatives and expectations

Let

- $X$ be a rv.
- $\rho_X$ its probability distribution measure
- $F_X$ its cumulative distribution function
- $f: \mathbb{R} \to [0, \infty]$ a Borel measurable function

The following are equivalent:

(1) $F_X(x) = \int_{-\infty}^{x} f(s) \dd{s}$ for all $x \in \mathbb{R}$

(2) $f$ is a Radon-Nikodym derivative for $\rho_X$ wrt. $m$ (the Lebesgue measure but restricted to Borel measurable sets)

(3) $\rho_X(B) = \int_B f \dd{m}$ for all $B \in \mathcal{B}(\mathbb{R})$

(2) and (3) are immediately equivalent by the definition of a Radon-Nikodym derivative. Moreover,

![\begin{equation*}

\rho_X \Big( (- \infty, x] \Big) = F_X(x) = \int_{-\infty}^{x} f(s) \dd{s} = \int_{-\infty}^{x} f \dd{m}

\end{equation*}](../../assets/latex/measure_theory_6826f1b8d841609a361882c3b6b70bc5ea1174ef.png)

holds for all $x \in \mathbb{R}$ iff (2) or (3) holds when considering only sets of the form ![$(- \infty, x]$](../../assets/latex/measure_theory_e122470acfe9dda86dc876d6b068fadc47cc17be.png).

This statement is also equivalent to (1).

Thus (1) is equivalent to (2) or (3) restricted to sets of the form ![$(-\infty, x]$](../../assets/latex/measure_theory_4da7df6ea37290e01a6b13bd339c125dc9d812cd.png).

However, sets of the form ![$(-\infty, x]$](../../assets/latex/measure_theory_4da7df6ea37290e01a6b13bd339c125dc9d812cd.png) generate $\mathcal{B}(\mathbb{R})$, so from the Carathéodory extension theorem this gives the equivalence of (1), (2) and (3).

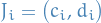

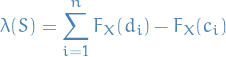

To prove  more rigorously, let

more rigorously, let

for ![$c_i, d_i \in [-\infty, \infty]$](../../assets/latex/measure_theory_879949f8d7167858f9f8cf9fe0fb70cc850702cf.png) s.t.

s.t.  and none of these intervals overlap. That is all finite unions of left-closed, right-open, disjoint intervals.

and none of these intervals overlap. That is all finite unions of left-closed, right-open, disjoint intervals.

Also let

Observe that

![\begin{equation*}

\lambda(S) = \sum_{i=1}^{n} \bigg[ \int_{-\infty}^{d_i} f(x) \dd{x} - \int_{-\infty}^{c_i} f(x) \dd{x} \bigg] = \sum_{i=1}^{n} \int_{c_i}^{d_i} f(x) \dd{x} = \int_S f \dd{m}

\end{equation*}](../../assets/latex/measure_theory_a1afc8f6f2c8e420ab88be7447f2e38b913fb942.png)

and that

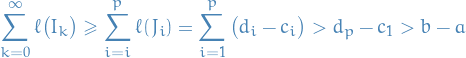

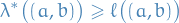

One can show that  is a premeasure space. Therefore, by the Carathéodory extension theorem, there is a measure

is a premeasure space. Therefore, by the Carathéodory extension theorem, there is a measure  on

on  s.t.

s.t.

Furthermore, since  ,

,  is unique! But both the measures

is unique! But both the measures  and

and  satisfy these properties, thus

satisfy these properties, thus

which is the definition of  being a Radon-Nikodym derivative of

being a Radon-Nikodym derivative of  wrt. Lebesgue measure restricted to the Borel σ-algebra, as wanted.

wrt. Lebesgue measure restricted to the Borel σ-algebra, as wanted.

A function $f$ is a probability density function for $X$ if $f$ is a Radon-Nikodym derivative of the probability distribution measure $\rho_X$ wrt. Lebesgue measure restricted to Borel sets, i.e. $\rho_X(B) = \int_B f \dd{m}$ for all $B \in \mathcal{B}(\mathbb{R})$.
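For an absolutely continuous example, take $X$ exponential with rate $1$, so $f(s) = e^{-s}$ for $s \ge 0$ (and $0$ otherwise) and $F_X(x) = 1 - e^{-x}$ for $x \ge 0$; this choice is purely illustrative. The Python sketch below checks numerically, with a plain composite midpoint rule (`integrate` is a hypothetical helper, not a library call), that integrating $f$ reproduces $F_X(x)$ and $\rho_X\big((c, d]\big) = F_X(d) - F_X(c)$, which is what being a density asks for on the generating intervals.

```python
import math


def f(s):
    """Density of an Exponential(1) random variable (illustrative choice)."""
    return math.exp(-s) if s >= 0 else 0.0


def F(x):
    """Its cumulative distribution function F(x) = P(X <= x)."""
    return 1.0 - math.exp(-x) if x >= 0 else 0.0


def integrate(g, a, b, n=100_000):
    """Composite midpoint rule for the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


# f vanishes on (-inf, 0), so the integral over (-inf, x] is the integral over [0, x].
for x in [0.5, 1.0, 2.0, 5.0]:
    assert abs(integrate(f, 0.0, x) - F(x)) < 1e-8

# rho_X((c, d]) = F(d) - F(c) agrees with the integral of f over (c, d].
c, d = 0.3, 1.7
assert abs(integrate(f, c, d) - (F(d) - F(c))) < 1e-8
```

A quadrature rule only approximates the Lebesgue integral here; it works because $f$ is smooth on $[0, \infty)$.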

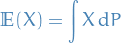

Expectation via distributions

The expectation of a random variable $X$ is $\mathbb{E}[X] = \int_{\Omega} X \dd{P}$.

If $g$ is a nonnegative function that is $\mathcal{B}(\mathbb{R})$-measurable, then

![\begin{equation*}

\mathbb{E} \big[ g(X) \big] = \int g(s) \dd{\rho_X(s)}

\end{equation*}](../../assets/latex/measure_theory_58b872956e73f042002d8b23eb8c33aa0d3cde55.png)
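On a finite probability space both sides of this identity are finite sums, so it can be checked directly. The Python sketch below assumes two fair dice, $X(\omega) = \omega_1 + \omega_2$ and $g(s) = s^2$ (illustrative choices, not from the notes). The left-hand side sums $g(X(\omega)) P(\{\omega\})$ over $\Omega$; the right-hand side first builds $\rho_X$ as point masses on the values of $X$ and then sums $g(s) \rho_X(\{s\})$.

```python
from fractions import Fraction
from itertools import product

# Illustrative assumption: two fair dice, P uniform on Omega = {1..6} x {1..6}.
P = {omega: Fraction(1, 36) for omega in product(range(1, 7), repeat=2)}


def X(omega):
    """The random variable: the sum of the two dice."""
    return omega[0] + omega[1]


def g(s):
    """A nonnegative Borel measurable function."""
    return s * s


# Left-hand side: E[g(X)], integrating g(X) against P over Omega.
lhs = sum(g(X(omega)) * p for omega, p in P.items())

# Right-hand side: build rho_X as point masses, then integrate g against it.
rho_X = {}
for omega, p in P.items():
    rho_X[X(omega)] = rho_X.get(X(omega), 0) + p
rhs = sum(g(s) * mass for s, mass in rho_X.items())

assert lhs == rhs
```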

If $g = \chi_B$ is the characteristic function of $B$, then, if $B \in \mathcal{B}(\mathbb{R})$,

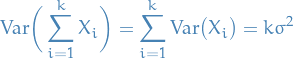

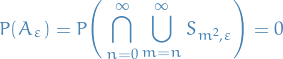

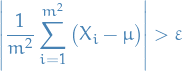

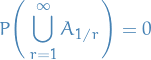

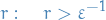

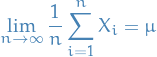

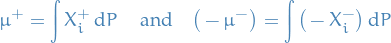

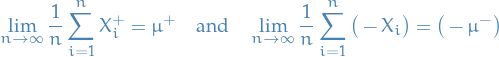

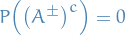

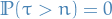

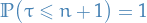

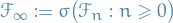

so