Quantum Mechanics

Table of Contents

- Reading

- Progress

- Definitions

- Stuff

- Zero point energy

- Quantum Harmonic Oscillator

- Correspondence principle

- Operators

- Coherence

- Observables

- Momentum

- Angular momentum

- Angular momentum - reloaded

- Central potential

- Hydrogen atom

- Spin - intrinsic angular momentum

- Addition of angular momenta

- Identical Particles

- Fourier Transforms and Uncertainty Relations

- Bra, kets, and matrices

- Time-independent Perturbation Theory

- Time-dependent Perturbation Theory

- Radiation

- Quantum Scattering Theory

- Central Field Approximation

- Variational Method

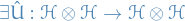

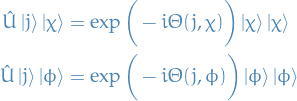

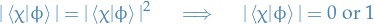

- Hidden Variables, EPR Paradox, Bell's Inequality

- Quantum communication

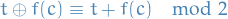

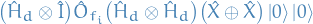

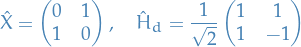

- Quantum computing

- Cohen-Tannoudji - Quantum Mechanics

- Principles of Quantum Mechanics - Dirac

- Quantum Theory for Mathematicians

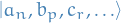

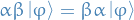

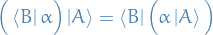

- Notation

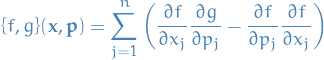

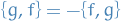

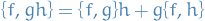

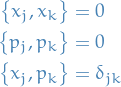

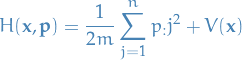

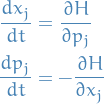

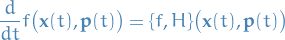

- 2. A First Approach to Classical Mechanics

- 3. A First Approach to Quantum Mechanics

- 4. The Free Schrödinger Equation

- 5. A Particle in a Square Well

- 6. Perspectives on the Spectral Theorem

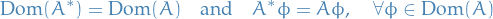

- 7. Spectral Theorem for Bounded Self-Adjoint Operators

- 8. Spectral Theorem for Bounded Self-Adjoint Operators: Proofs

- Practice problems

- Q & A

Reading

- Quantum Physics - Stephen Gasiorowicz

- "Quantum Physics", Messiah, p. 463 Appendix A: Distributions & Fourier Transform

Progress

- Note taken on

p. 115

Definitions

Words

- plane wave

- multi-dimensional wave

- wave packet

- superposition of plane waves

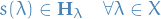

- hilbert space

- Banach space with the addition of an inner product (the norm is the one induced by the inner product)

- banach space

- Normed vector space that is complete with respect to the metric induced by its norm, in the sense that every Cauchy sequence converges to a limit within the space.

- isotropic

- Independent of orientation.

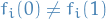

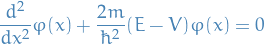

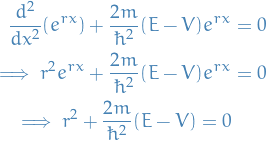

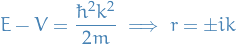

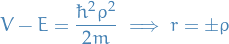

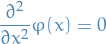

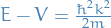

Bound / unbound wave equations

Bound energy

Restricts us to positive energies

Unbound energy

Allows both positive and negative energies

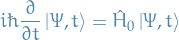

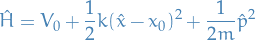

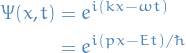

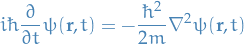

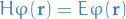

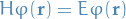

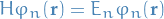

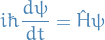

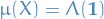

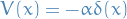

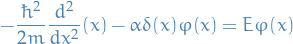

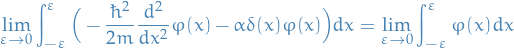

Schrödinger equation

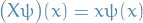

Operators

Position operator

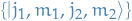

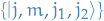

Russell-Saunders notation

States that arise in coupling orbital angular momentum $\ell$ and spin $s$ to give total angular momentum $j$ are denoted:

\begin{equation*}
{}^{2s + 1} L_j
\end{equation*}

where one of the labels is "optional" (not always included).

Further, remember that we often use the following letters to denote the different values of the orbital angular momentum $\ell$:

\begin{equation*}
\ell = 0, 1, 2, 3, \dots \quad \longleftrightarrow \quad S, P, D, F, \dots
\end{equation*}

Stuff

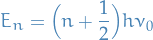

Zero point energy

Heisenberg's uncertainty principle tells us that a particle cannot sit motionless at the bottom of its potential well: a perfectly known position would imply that the uncertainty in momentum $\Delta p$ is infinite. Therefore, the lowest-energy state of the system (called the ground state) must be a distribution for the position and momentum which at all times satisfies the uncertainty principle.

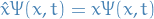

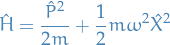

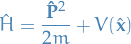

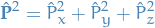

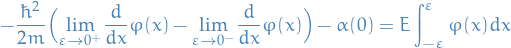

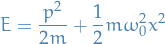

Near the bottom of a potential well, the Hamiltonian of a general system (the quantum-mechanical operator giving a system its energy) can be approximated as a quantum harmonic oscillator:

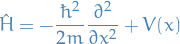

Quantum Harmonic Oscillator

![\begin{equation*}

\hat{H} = \Bigg[ - \frac{\hbar^2}{2m} \frac{\partial^2}{\partial x^2} + \frac{1}{2} m \omega^2 x^2 \Bigg]

\end{equation*}](../../assets/latex/quantum_mechanics_7c9244b8b792fc892f30045f30726f21b7065032.png)

Thus, the eigenvalue equation of the Hamiltonian becomes

![\begin{equation*}

\Bigg[ - \frac{\hbar^2}{2m} \frac{\partial^2}{\partial x^2} + \frac{1}{2} m \omega^2 x^2 \Bigg] \psi = E \psi

\end{equation*}](../../assets/latex/quantum_mechanics_2f748733cb811a4375991e37f90dfb46653a92b6.png)

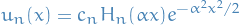

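As it turns out, this differential equation has the eigenfunctions:

\begin{equation*}
\psi_n(x) = \frac{1}{\sqrt{2^n n!}} \Big( \frac{m \omega}{\pi \hbar} \Big)^{1/4} \exp \Big( - \frac{m \omega x^2}{2 \hbar} \Big) H_n \Big( \sqrt{\frac{m \omega}{\hbar}} \ x \Big), \qquad E_n = \hbar \omega \Big( n + \frac{1}{2} \Big)
\end{equation*}

where $H_n$ denotes the n-th Hermite polynomial, with $n = 0, 1, 2, \dots$.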
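As a quick numerical sanity check (a minimal sketch, not part of the original notes), the snippet below works in units where $\hbar = m = \omega = 1$, builds the unnormalised eigenfunctions $H_n(x) e^{-x^2/2}$ from the Hermite recurrence, and evaluates $(\hat{H} \psi_n) / \psi_n$ with a central finite difference. The helper names (`hermite`, `psi`, `local_energy`), the sample point and the step size are all assumptions made for illustration.

```python
import math


def hermite(n, x):
    """Physicists' Hermite polynomial H_n(x) via H_{k+1} = 2x H_k - 2k H_{k-1}."""
    h_prev, h = 1.0, 2.0 * x
    if n == 0:
        return h_prev
    for k in range(1, n):
        h_prev, h = h, 2.0 * x * h - 2.0 * k * h_prev
    return h


def psi(n, x):
    """Unnormalised oscillator eigenfunction in units hbar = m = omega = 1."""
    return hermite(n, x) * math.exp(-0.5 * x * x)


def local_energy(n, x, h=1e-3):
    """(H psi_n)(x) / psi_n(x) with H = -1/2 d^2/dx^2 + 1/2 x^2 (central difference)."""
    d2 = (psi(n, x + h) - 2.0 * psi(n, x) + psi(n, x - h)) / (h * h)
    return (-0.5 * d2 + 0.5 * x * x * psi(n, x)) / psi(n, x)


# The sample point x = 0.3 is arbitrary; it only has to avoid the zeros of psi_n.
energies = [local_energy(n, 0.3) for n in range(5)]
for n, e in enumerate(energies):
    print(f"n = {n}: local energy {e:.6f}, expected {n + 0.5}")
```

Up to finite-difference error, each value should agree with $n + \frac{1}{2}$, i.e. $E_n = \hbar \omega (n + \frac{1}{2})$ in these units.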

Further, using the raising and lowering operators discussed in Angular momentum - reloaded we can rewrite the solutions as

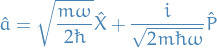

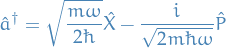

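Annihilation / lowering operator:

\begin{equation*}
\hat{a} = \sqrt{\frac{m \omega}{2 \hbar}} \Big( \hat{X} + \frac{i}{m \omega} \hat{P} \Big)
\end{equation*}

Creation / raising operator:

\begin{equation*}
\hat{a}^\dagger = \sqrt{\frac{m \omega}{2 \hbar}} \Big( \hat{X} - \frac{i}{m \omega} \hat{P} \Big)
\end{equation*}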

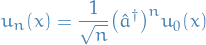

Solution

There are two ways of going about this:

- Solving using the ladder operators, but you somehow need to obtain the ground levels

- Solving using straight up good ole' method of variation of parameters and Sturm-Liouville theory

Solving using ladder-operators

- Notation

$\psi_n$ is the eigenfunction corresponding to the n-th eigenvalue $E_n$

$\hat{\xi} = \sqrt{\frac{m \omega}{2 \hbar}} \ \hat{X}$, which is dimensionless

$\hat{\eta} = \frac{\hat{P}}{\sqrt{2 m \hbar \omega}}$, which is dimensionless

- Stuff

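The Hamiltonian in this case is given by

\begin{equation*}
\hat{H} = \frac{\hat{P}^2}{2m} + \frac{1}{2} m \omega^2 \hat{X}^2
\end{equation*}

with the TISE

\begin{equation*}
\hat{H} \psi_n = E_n \psi_n
\end{equation*}

For convenience we rewrite the Hamiltonian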

![\begin{equation*}

\begin{split}

\hat{H} &= \hbar \omega \Bigg[ \frac{\hat{P}^2}{2 m \hbar \omega} + \frac{m \omega}{2 \hbar} \hat{X}^2 \Bigg] \\

&= \hbar \omega \Big[ \hat{\eta}^2 + \hat{\xi}^2 \Big]

\end{split}

\end{equation*}](../../assets/latex/quantum_mechanics_1b9eb857df709299df3905ea14d5e3c26105ae1c.png)

But $\hat{\xi}$ and $\hat{\eta}$ do not commute:

![\begin{equation*}

[ \hat{\xi}, \hat{\eta} ] = \frac{i}{2}

\end{equation*}](../../assets/latex/quantum_mechanics_bc97facb3df45546b6eb9ea1c63fdc884d279f40.png)

So we instead use the notation of

\begin{equation*}
\hat{a} = \hat{\xi} + i \hat{\eta}, \qquad \hat{a}^\dagger = \hat{\xi} - i \hat{\eta}
\end{equation*}

which have the property

![\begin{equation*}

[ \hat{a}, \hat{a}^\dagger ] = 1

\end{equation*}](../../assets/latex/quantum_mechanics_437cd4aa86dd5274e5e835448fe308d9ced59efa.png)

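Once again rewriting the Hamiltonian

\begin{equation*}
\hat{H} = \hbar \omega \Big[ \hat{a}^\dagger \hat{a} + \frac{1}{2} \Big]
\end{equation*}

Observe that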

![\begin{equation*}

[ \hat{H}, \hat{a} ] = - \hbar \omega \ \hat{a}

\end{equation*}](../../assets/latex/quantum_mechanics_c327ba7d4fd17a0a95440c738d7aa8b70d19d63b.png)
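The two commutators above can be checked on a truncated matrix representation. This is only a sketch: it assumes the number basis in which $\hat{a}$ has matrix elements $\langle n - 1 | \hat{a} | n \rangle = \sqrt{n}$, works in units $\hbar \omega = 1$, and uses a hypothetical truncation size `N`. Because the matrices are cut off at `N` states, $[\hat{a}, \hat{a}^\dagger] = 1$ can only hold on the first `N - 1` diagonal entries.

```python
import math

N = 6  # truncation size (assumption: any N >= 2 works)


def matmul(A, B):
    """Plain-Python product of two square matrices given as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def commute(A, B):
    """Commutator [A, B] = AB - BA."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] - BA[i][j] for j in range(len(A))] for i in range(len(A))]


# Lowering operator: <n-1| a |n> = sqrt(n), so the entries sit just above the diagonal.
a = [[math.sqrt(j) if j == i + 1 else 0.0 for j in range(N)] for i in range(N)]
a_dag = [[a[j][i] for j in range(N)] for i in range(N)]  # real matrix, so dagger = transpose

commutator = commute(a, a_dag)

# H = a_dag a + 1/2 in units hbar * omega = 1 (diagonal in this basis).
number = matmul(a_dag, a)
energies = [number[n][n] + 0.5 for n in range(N)]
H = [[energies[i] if i == j else 0.0 for j in range(N)] for i in range(N)]

h_a_comm = commute(H, a)  # should equal -a

print("diag of [a, a_dag]:", [round(commutator[i][i], 12) for i in range(N)])
print("energies:", [round(e, 12) for e in energies])
```

The last diagonal entry of the commutator is an artefact of the truncation, not a property of the oscillator itself.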

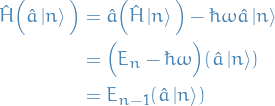

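Consider the following operation:

\begin{equation*}
\begin{split}
\hat{H} \hat{a} \psi_n &= \big( \hat{a} \hat{H} + [ \hat{H}, \hat{a} ] \big) \psi_n \\
&= \big( \hat{a} \hat{H} - \hbar \omega \ \hat{a} \big) \psi_n \\
&= (E_n - \hbar \omega) \ \hat{a} \psi_n
\end{split}
\end{equation*}

where we in the last line used the fact that for a simple harmonic oscillator $\hat{H} \psi_n = E_n \psi_n$.

Thus,

\begin{equation*}
\hat{a} \psi_n \propto \psi_{n - 1}, \qquad E_{n - 1} = E_n - \hbar \omega
\end{equation*}

I.e. applying $\hat{a}$ to an eigenfunction $\psi_n$ gives us the eigenfunction of the $(n - 1)$-th eigenstate.

We call this operator the annihilation or lowering operator.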

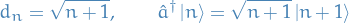

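We can do exactly the same for $\hat{a}^\dagger$ to observe that

\begin{equation*}
\hat{H} \hat{a}^\dagger \psi_n = (E_n + \hbar \omega) \ \hat{a}^\dagger \psi_n
\end{equation*}

which is why we call $\hat{a}^\dagger$ the creation or raising operator.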

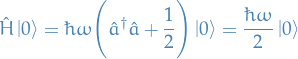

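Buuut there's a problem: we can potentially "annihilate" our way down to a state with energy below zero! To fix this we simply define the ground state such that

\begin{equation*}
\hat{a} \psi_0 = 0
\end{equation*}

Thus,

\begin{equation*}
\hat{H} \psi_0 = \hbar \omega \Big[ \hat{a}^\dagger \hat{a} + \frac{1}{2} \Big] \psi_0 = \frac{1}{2} \hbar \omega \ \psi_0
\end{equation*}

And all other higher energy states can then be constructed from this, by successive application of $\hat{a}^\dagger$.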

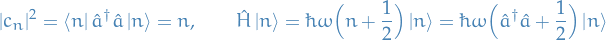

- Normalizing the lowering- and raising-operator

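For a normalized $\psi_n$ we have $\| \hat{a} \psi_n \|^2 = \langle \psi_n, \hat{a}^\dagger \hat{a} \psi_n \rangle = n$, which fixes the proportionality constant up to a phase. Thus,

\begin{equation*}
\hat{a} \psi_n = \sqrt{n} \ \psi_{n - 1}
\end{equation*}

Doing the same for $\hat{a}^\dagger$, we get

\begin{equation*}
\hat{a}^\dagger \psi_n = \sqrt{n + 1} \ \psi_{n + 1}
\end{equation*}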

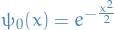

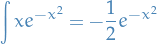

- Solving for the ground-state

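We can find the proper solution for the ground-state by solving the differential equation

\begin{equation*}
\hat{a} \psi_0 = 0
\end{equation*}

And substituting into $\hat{a}$ the position-space forms of both $\hat{X}$ and $\hat{P}$, we get the differential equation

\begin{equation*}
\Big( x + \frac{\hbar}{m \omega} \frac{d}{dx} \Big) \psi_0(x) = 0
\end{equation*}

Solving this, we get

\begin{equation*}
\psi_0(x) = \Big( \frac{m \omega}{\pi \hbar} \Big)^{1/4} \exp \Big( - \frac{m \omega x^2}{2 \hbar} \Big)
\end{equation*}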

- Normalizing the lowering- and raising-operator

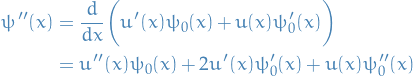

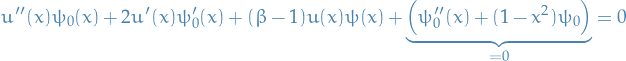

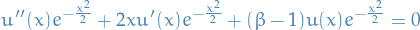

Solving the differential equation

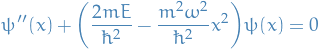

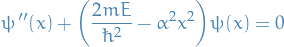

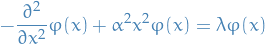

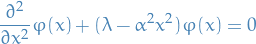

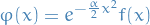

We start off by rewriting the Schrödinger equation for the harmonic oscillator:

Letting  , we have

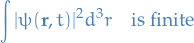

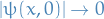

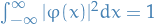

We'll require our solution to be normalizable, i.e. square-integrable, therefore we need the differential equation to be satisfied as  , in which case we can drop the constant term:

Letting  which is just a constant (apparently…), we have

I'm not sure I'm "cool" with this. There's clearly something weird going on here, since  is a parameter of the potential, NOT a variable which we can just set.

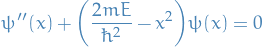

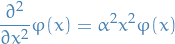

Letting  , we then have

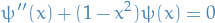

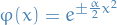

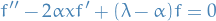

Therefore we instead consider the simpler problem:

where we've dropped the  , which we will include later on through the use of the method of variation of parameters. This clearly has the solution

Now, using method of variation of parameters, we suppose there exists some particular solution of the form:

For which we have

Substituting into the diff. eqn. involving  ,

Since  satisfies the original equation, we have

Seeing this, we substitute in our solution for  ,

Thus,

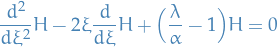

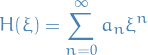

Which, if we let

gives us

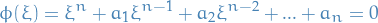

which we recognize as the Hermite differential equation! Hence, we have the solutions

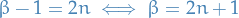

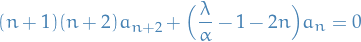

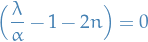

Why the  though? Well, if you attempt to solve the Hermite equation above using a series solution, i.e. assuming

you end up seeing that you'll have a recurrence relation which depends on the integer  , and further that for this to be square-integrable we require the series to terminate for some  , hence you get that dough above.

Also, it shows us that we do indeed have a (countably) infinite number of solutions, as we'd expect from a Sturm-Liouville problem such as the Schrödinger equation :)

Correspondence principle

The correspondence principle states that the behavior of systems described by quantum mechanics reproduces classical physics in the limit of large quantum numbers.

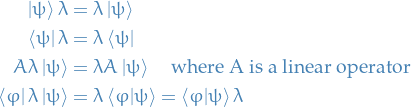

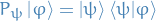

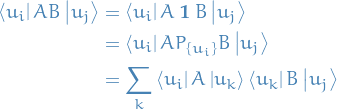

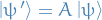

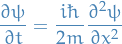

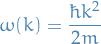

Operators

Momentum

"Derivation"

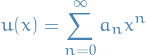

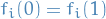

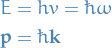

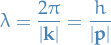

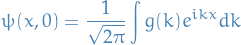

Considering the wave function of a free particle, we have

\begin{equation*}
\Psi(x, t) = A e^{i (p x - E t) / \hbar}
\end{equation*}

Taking the derivative of the above with respect to $x$, and rearranging, we obtain:

![\begin{equation*}

p \big[ \Psi(x, t) \big] = - i \hbar \frac{\partial}{\partial x} \big[ \Psi(x, t) \big]

\end{equation*}](../../assets/latex/quantum_mechanics_e9d4453e606f945090485ae9d0290df6002f09da.png)

and thus we have the momentum operator

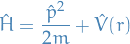

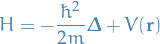
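The momentum "derivation" above can be checked numerically. The sketch below assumes a free-particle plane wave of the form $A e^{i(px - Et)/\hbar}$, natural units $\hbar = 1$, and made-up values for `p`, `energy`, `t` and the evaluation point; the function names are hypothetical. It applies $-i \hbar \, \partial / \partial x$ with a central difference and compares the result with $p \Psi$.

```python
import cmath

HBAR = 1.0  # natural units (assumption)


def plane_wave(x, t, p, energy, amplitude=1.0):
    """Free-particle plane wave A * exp(i (p x - E t) / hbar)."""
    return amplitude * cmath.exp(1j * (p * x - energy * t) / HBAR)


def momentum_operator(psi, x, h=1e-5):
    """Apply -i hbar d/dx to psi at x using a central finite difference."""
    return -1j * HBAR * (psi(x + h) - psi(x - h)) / (2.0 * h)


# Illustrative values only; energy = p^2 / 2 corresponds to unit mass.
p, energy, t, x0 = 2.5, 3.125, 0.7, 0.4


def psi(x):
    return plane_wave(x, t, p, energy)


# If the plane wave is an eigenfunction of the momentum operator, this ratio is p.
ratio = momentum_operator(psi, x0) / psi(x0)
print("(-i hbar d/dx psi) / psi =", ratio)
```

The ratio should come out (numerically) real and equal to `p`, independent of the point `x0` and of the time `t`.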

Hamiltonian

"Derivation"

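Where the Hamiltonian comes from the fact that for TISE we have the following:

\begin{equation*}
- \frac{\hbar^2}{2m} \nabla^2 \varphi(x) + V(x) \varphi(x) = E \varphi(x)
\end{equation*}

which we can then write as: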

![\begin{equation*}

\Big[ - \frac{\hbar^2}{2m} \nabla^2 + V(x) \Big] \varphi(x) = E \varphi(x)

\end{equation*}](../../assets/latex/quantum_mechanics_8bb0ba994b3bb23184cb1145a8f97a41fbf95342.png)

and we have our operator $\hat{H}$!

In the above "derivation" of the Hamiltonian, we assume TISE for convenience. It works equally well with the time-dependence, which is why we can write the time-dependent Schrödinger equation using the Hamiltonian.

In fact, one can deduce it from writing the wave-function as a function of $x$ and $t$, and then note the operator defined for the momentum $\hat{p}$. This operator can then be substituted into the classical formula for the energy to provide us with a definition of the Hamiltonian operator.

Theorems

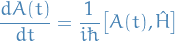

Conserved operators

A time-independent operator is conserved if and only if ![$[\hat{H}, \hat{O}] = 0$](../../assets/latex/quantum_mechanics_0e8e7c400c52fc780f5169d1701b8510b3d8e98a.png) , i.e. it commutes with the Hamiltonian.
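One way to see why the commutator controls conservation is to differentiate the expectation value of a time-independent $\hat{O}$. This is a standard argument, sketched here for a state $\Psi$ evolving under the time-dependent Schrödinger equation $i \hbar \, \partial_t \Psi = \hat{H} \Psi$, with an assumed inner-product notation $\langle \cdot, \cdot \rangle$ that is linear in the second argument:

```latex
\begin{equation*}
\begin{split}
\frac{d}{dt} \langle \hat{O} \rangle
  &= \Big\langle \frac{\partial \Psi}{\partial t}, \hat{O} \Psi \Big\rangle
   + \Big\langle \Psi, \hat{O} \frac{\partial \Psi}{\partial t} \Big\rangle \\
  &= \frac{i}{\hbar} \langle \hat{H} \Psi, \hat{O} \Psi \rangle
   - \frac{i}{\hbar} \langle \Psi, \hat{O} \hat{H} \Psi \rangle \\
  &= \frac{i}{\hbar} \big\langle [ \hat{H}, \hat{O} ] \big\rangle
\end{split}
\end{equation*}
```

The last step uses that $\hat{H}$ is Hermitian. The expectation value is therefore constant in every state exactly when the commutator vanishes.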

Coherence

Stack Exchange answer

This guy provides a very nice explanation of quantum coherence and entanglement.

Basically what he's saying is that coherence refers to the case where the wave-functions which are superimposed to create the wave-function of the particle under consideration have a definite phase difference, i.e. the phase-difference between the "eigenstates" does not change wrt. time and is not random.

Another answer to the same question says:

"It is better to think of there being one and only one wavefunction that describes all the particles in the universe."

Which is trying to make sense of entanglement, saying that we can look at the "joint probability" of e.g. two particles and based on measurement taken from one particle we can make a better judgement of the probability-distribution of the measurement of the other particle. At least this is how I interpret it.

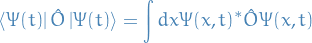

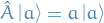

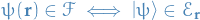

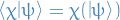

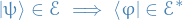

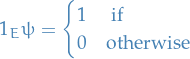

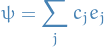

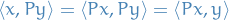

Observables

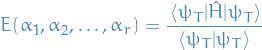

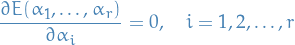

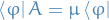

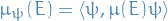

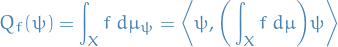

Each dynamic variable is associated with a linear operator, say $\hat{O}$, and its expectation can be computed:

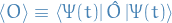

when there is no ambiguity about the state in which the average is computed, we shall write

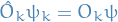

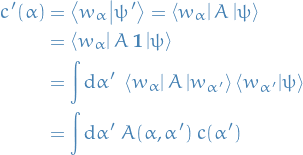

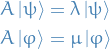

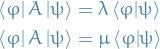

The possible outcomes of a measurement of an observable are given by the eigenvalues of the corresponding operator $\hat{O}$, i.e. the solutions of the eigenvalue equation:

\begin{equation*}
\hat{O} \psi_k = O_k \psi_k
\end{equation*}

where $\psi_k$ is the eigenstate corresponding to the eigenvalue $O_k$.

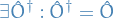

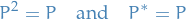

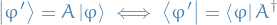

Observables as Hermitian operators

Observables are required to be Hermitian operators, i.e. operators such that for the operator $\hat{O}$

\begin{equation*}
\hat{O}^\dagger = \hat{O}
\end{equation*}

where $\hat{O}^\dagger$ is called the Hermitian conjugate of $\hat{O}$.

We require an operator corresponding to an observable to be Hermitian, as this guarantees that all of its eigenvalues are real, and we want our measurements to be real, of course.
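To see why Hermiticity gives real eigenvalues, here is the short standard argument. It is written with an assumed inner-product notation $\langle \cdot, \cdot \rangle$ that is linear in the second argument, for an eigenstate $\hat{O} \psi = \lambda \psi$ with $\psi \ne 0$:

```latex
\begin{equation*}
\lambda \langle \psi, \psi \rangle
  = \langle \psi, \hat{O} \psi \rangle
  = \langle \hat{O}^\dagger \psi, \psi \rangle
  = \langle \hat{O} \psi, \psi \rangle
  = \lambda^* \langle \psi, \psi \rangle
  \quad \implies \quad \lambda = \lambda^*
\end{equation*}
```

The third equality is exactly where $\hat{O}^\dagger = \hat{O}$ is used, and dividing by $\langle \psi, \psi \rangle > 0$ gives the conclusion.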

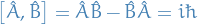

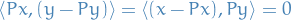

Compatibility Theorem

Given two observables $A$ and $B$, represented by the Hermitian operators $\hat{A}$ and $\hat{B}$, then the following statements are equivalent:

- $A$ and $B$ are compatible

- $\hat{A}$ and $\hat{B}$ have a common eigenbasis

- $\hat{A}$ and $\hat{B}$ commute, i.e.: ![$[\hat{A}, \hat{B}] = 0$](../../assets/latex/quantum_mechanics_81fb46d729367fa031cd2235cf831733ac641fb3.png)

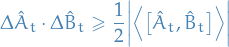

Generalised Uncertainty Relation

If $\Delta A$ and $\Delta B$ denote the uncertainties in observables $A$ and $B$ respectively in the state $\Psi$, then the generalised uncertainty relation states that

\begin{equation*}
\Delta A \ \Delta B \ge \frac{1}{2} \Big| \big\langle [ \hat{A}, \hat{B} ] \big\rangle \Big|
\end{equation*}
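As a concrete instance, taking position and momentum as the two observables recovers Heisenberg's uncertainty principle. This worked sketch assumes the standard form $\Delta A \, \Delta B \ge \frac{1}{2} | \langle [ \hat{A}, \hat{B} ] \rangle |$ of the generalised relation and the canonical commutator $[ \hat{X}, \hat{P} ] = i \hbar$:

```latex
\begin{equation*}
\Delta X \ \Delta P
  \ge \frac{1}{2} \Big| \big\langle [ \hat{X}, \hat{P} ] \big\rangle \Big|
  = \frac{1}{2} \big| i \hbar \big|
  = \frac{\hbar}{2}
\end{equation*}
```

Since the commutator is a constant multiple of the identity, the bound is the same in every normalized state.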

Momentum

Then

And the momentum components commute, which is equivalent to being able to interchange the order of differentiation

![\begin{equation*}

[ \hat{P}_k, \hat{P}_l ] = 0 \quad \iff \quad \frac{\partial^2}{\partial x_k \partial x_l} \psi(\mathbf{x}) = \frac{\partial^2}{\partial x_l \partial x_k} \psi(\mathbf{x})

\end{equation*}](../../assets/latex/quantum_mechanics_7658b0d23bc723beff7c1a5c272a23ed3ccbe578.png)

and

![\begin{equation*}

[\hat{X}_k, \hat{P}_l ] = i \hbar \delta_{k l}

\end{equation*}](../../assets/latex/quantum_mechanics_a027de200f6a6bd03149d7d3e73a77262e3bc648.png)

Hence,

where

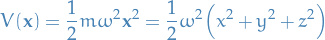

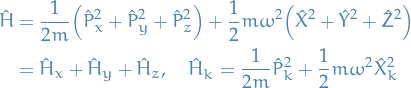

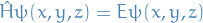

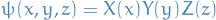

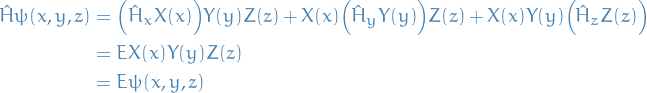

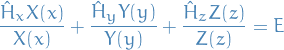

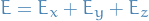

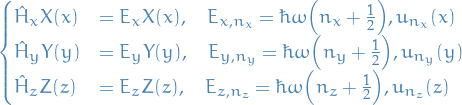

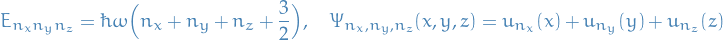

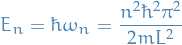

Separation of variables

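Here we consider the TISE for an isotropic harmonic oscillator in 3 dimensions, for which the potential is

\begin{equation*}
V(\mathbf{x}) = \frac{1}{2} m \omega^2 \big( x_1^2 + x_2^2 + x_3^2 \big)
\end{equation*}

We can then split the Hamiltonian into a sum of one-dimensional Hamiltonians in the following way

\begin{equation*}
\hat{H} = \sum_{k=1}^{3} \hat{H}_k, \qquad \hat{H}_k = - \frac{\hbar^2}{2m} \frac{\partial^2}{\partial x_k^2} + \frac{1}{2} m \omega^2 x_k^2
\end{equation*}

Using this in the Schrödinger equation, we get

\begin{equation*}
\sum_{k=1}^{3} \hat{H}_k \psi(\mathbf{x}) = E \psi(\mathbf{x})
\end{equation*}

And we then assume that we can express $\psi$ as

\begin{equation*}
\psi(\mathbf{x}) = \psi_1(x_1) \ \psi_2(x_2) \ \psi_3(x_3)
\end{equation*}

which, after dividing through by $\psi$, gives us

\begin{equation*}
\sum_{k=1}^{3} \frac{\hat{H}_k \psi_k(x_k)}{\psi_k(x_k)} = E
\end{equation*}

And since $E$ is a constant while each term on the left depends on a different variable, each term must itself be constant. We can write $E = E_{n_1} + E_{n_2} + E_{n_3}$, which gives us a system of equations

\begin{equation*}
\hat{H}_k \psi_{n_k}(x_k) = E_{n_k} \psi_{n_k}(x_k), \qquad k = 1, 2, 3
\end{equation*}

where $\psi_{n_k}$ denotes the eigenfunction in the k-th dimension for the $n_k$-th quantised energy-level (remember we're working with a harmonic oscillator).

Which gives

\begin{equation*}
E = \hbar \omega \Big( n_1 + n_2 + n_3 + \frac{3}{2} \Big), \qquad \psi(\mathbf{x}) = \prod_{k=1}^{3} N_{n_k} H_{n_k} \Big( \sqrt{\frac{m \omega}{\hbar}} \ x_k \Big) \exp \Big( - \frac{m \omega x_k^2}{2 \hbar} \Big)
\end{equation*}

where $H_{n_k}$ denotes the Hermite polynomials and $N_{n_k}$ the corresponding normalization factors.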
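One consequence of the separated solution is that the energy depends only on the sum $n = n_1 + n_2 + n_3$, so the levels are degenerate. The sketch below simply counts the triples for each total; it assumes the spectrum $E = \hbar \omega (n + \frac{3}{2})$ of three independent one-dimensional oscillators, and the function name `degeneracies` is hypothetical.

```python
from collections import Counter
from itertools import product


def degeneracies(max_total):
    """Count the states (n1, n2, n3) for each total quantum number n = n1 + n2 + n3."""
    counts = Counter()
    for n1, n2, n3 in product(range(max_total + 1), repeat=3):
        total = n1 + n2 + n3
        if total <= max_total:
            counts[total] += 1
    return counts


levels = degeneracies(4)
for n in sorted(levels):
    # Energy in units of hbar * omega.
    print(f"E = {n + 1.5} hbar*omega, degeneracy {levels[n]}")
```

The counts follow the closed form $(n + 1)(n + 2) / 2$, which is the number of ways to distribute $n$ quanta over three directions.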

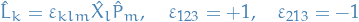

Angular momentum

Commutation

The interesting thing about the angular momentum operators is that, unlike the position and momentum operators, the components along different dimensions do not commute!

![\begin{equation*}

[ \hat{L}_i, \hat{L}_j ] = i \hbar \ \varepsilon_{ijk} \hat{L}_k

\end{equation*}](../../assets/latex/quantum_mechanics_f8bde5f495abd6e1ba2602cd2d88deb66c4bf878.png)

This implies that the components of the angular momentum along different dimensions are not compatible observables, i.e. we cannot observe one without affecting the distribution of measurements for the other!

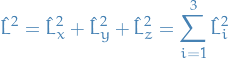

Square of the angular momentum

\begin{equation*}
\hat{L}^2 = \hat{L}_x^2 + \hat{L}_y^2 + \hat{L}_z^2
\end{equation*}

or in spherical coordinates,

![\begin{equation*}

\hat{L}^2 = - \hbar^2 \Bigg[ \frac{1}{\sin \theta} \frac{\partial}{\partial \theta} \Big( \sin \theta \frac{\partial}{\partial \theta} \Big) + \frac{1}{\sin^2 \theta} \frac{\partial^2}{\partial \phi^2} \Bigg]

\end{equation*}](../../assets/latex/quantum_mechanics_e278815117a1879fd90a7bb09ce03062e544f1c5.png)

We then observe that $\hat{L}^2$ is compatible with any of the Cartesian components of the angular momentum:

![\begin{equation*}

[\hat{L}^2, \hat{L}_x] = [\hat{L}^2, \hat{L}_y] = [\hat{L}^2, \hat{L}_z] = 0

\end{equation*}](../../assets/latex/quantum_mechanics_fe55448db26b86f1a2a21359463590e941836788.png)

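which also tells us that $\hat{L}^2$ and any one of the components have a common eigenbasis / simultaneous eigenfunctions (but not all three components at once, since these do not commute with each other).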
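These commutation relations can be verified on explicit matrices. The sketch below assumes the standard $\ell = 1$ representation in the basis $m = +1, 0, -1$ and units $\hbar = 1$; the helper names are hypothetical and everything is done with plain nested lists.

```python
import math

S = 1.0 / math.sqrt(2.0)

# l = 1 matrices in the basis m = +1, 0, -1, with hbar = 1 (assumed representation).
LX = [[0, S, 0], [S, 0, S], [0, S, 0]]
LY = [[0, -1j * S, 0], [1j * S, 0, -1j * S], [0, 1j * S, 0]]
LZ = [[1, 0, 0], [0, 0, 0], [0, 0, -1]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def add(a, b, scale=1.0):
    """Entry-wise a + scale * b."""
    return [[a[i][j] + scale * b[i][j] for j in range(3)] for i in range(3)]


def commutator(a, b):
    return add(matmul(a, b), matmul(b, a), scale=-1.0)


def max_abs(a):
    return max(abs(a[i][j]) for i in range(3) for j in range(3))


L2 = add(add(matmul(LX, LX), matmul(LY, LY)), matmul(LZ, LZ))

# [L_x, L_y] - i L_z should vanish.
residual_xy = max_abs(add(commutator(LX, LY), LZ, scale=-1j))
# [L^2, L_i] should vanish for every component.
residual_l2 = max(max_abs(commutator(L2, comp)) for comp in (LX, LY, LZ))

print("max |[Lx, Ly] - i Lz| =", residual_xy)
print("max |[L^2, L_i]|      =", residual_l2)
print("diag of L^2           =", [L2[i][i] for i in range(3)])
```

In this representation $\hat{L}^2$ comes out proportional to the identity, with diagonal entries $\ell (\ell + 1) = 2$, which is why it trivially commutes with every component.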

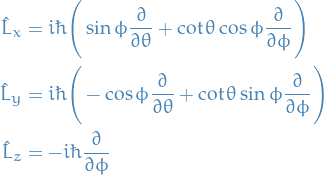

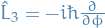

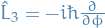

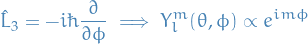

Angular momentum operators in spherical coordinates

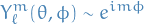

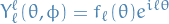

Eigenfunctions

\begin{equation*}
\hat{L}^2 Y_{\ell}^m (\theta, \phi) = \ell (\ell + 1) \hbar^2 \ Y_{\ell}^m (\theta, \phi), \qquad \hat{L}_z Y_{\ell}^m (\theta, \phi) = m \hbar \ Y_{\ell}^m (\theta, \phi)
\end{equation*}

where $\ell = 0, 1, 2, \dots$ and $m = - \ell, - \ell + 1, \dots, \ell$, and

![\begin{equation*}

Y_{\ell}^m (\theta, \phi) = (-1)^m \Bigg[ \frac{2 \ell + 1}{4 \pi} \frac{(\ell - m)!}{(\ell + m)!} \Bigg]^{1/2} P_{\ell}^m ( \cos \theta ) e^{im \phi}

\end{equation*}](../../assets/latex/quantum_mechanics_6b0080c4bd12192170ef19bf5522f169365a378f.png)

with $P_{\ell}^m$ known as the associated Legendre polynomials.

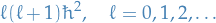

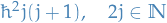

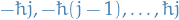

Quantisation of angular momentum

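The eigenvalue equation for the magnitude of the angular momentum tells us that the angular momentum is quantised. The square of the magnitude of the angular momentum can only assume one of the discrete set of values

\begin{equation*}
\ell (\ell + 1) \hbar^2, \qquad \ell = 0, 1, 2, \dots
\end{equation*}

and the z-component of the angular momentum can only assume one of the discrete set of values

\begin{equation*}
m \hbar, \qquad m = - \ell, - \ell + 1, \dots, \ell
\end{equation*}

for a given value of $\ell$.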

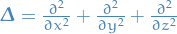

Laplacian operator

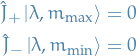

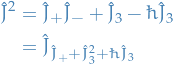

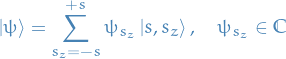

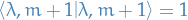

Angular momentum - reloaded

We move away from the notation of using $\hat{L}$ for angular momentum because what follows is true for any operator satisfying these properties.

To generalize the raising and lowering operators we found for the angular momentum we let $\hat{\mathbf{J}}$ denote an (Hermitian) operator which satisfies the following commutation relations:

![\begin{equation*}

[\hat{J}_k, \hat{J}_\ell ] = i \hbar \varepsilon_{k \ell m} \hat{J}_m, \qquad \hat{J}^2 = \sum_{k} \hat{J}_k^2, \qquad [ \hat{J}^2, \hat{J}_k ] = 0

\end{equation*}](../../assets/latex/quantum_mechanics_0d94e73b27f56d9bfa0d4ca74705817023e31037.png)

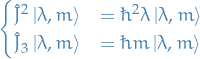

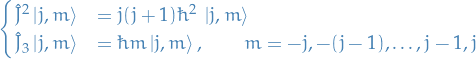

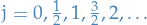

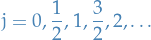

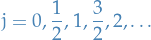

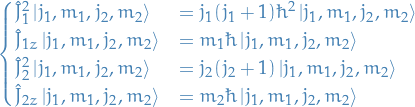

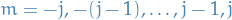

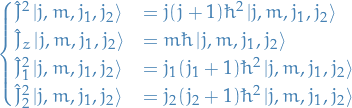

And since $\hat{J}^2$ and $\hat{J}_3$ are compatible, we know that these have a common eigenbasis, which we denote $| \lambda, m \rangle$, and we write the eigenvalues in the following form:

\begin{equation*}
\hat{J}^2 | \lambda, m \rangle = \lambda \hbar^2 | \lambda, m \rangle, \qquad \hat{J}_3 | \lambda, m \rangle = m \hbar | \lambda, m \rangle
\end{equation*}

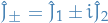

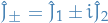

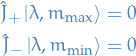

Further, we introduce the raising and lowering operators defined by:

\begin{equation*}
\hat{J}_\pm = \hat{J}_1 \pm i \hat{J}_2
\end{equation*}

for which we can compute the following properties:

![\begin{equation*}

[ \hat{J}^2, \hat{J}_\pm ] = 0

\end{equation*}](../../assets/latex/quantum_mechanics_114b4d8c8dc48f5d8a1bf1fe7d3a0b3e011ede3c.png)

![\begin{equation*}

[ \hat{J}_3, \hat{J}_\pm ] = \pm \hbar \hat{J}_{\pm}

\end{equation*}](../../assets/latex/quantum_mechanics_b83e24ac7ccf18b9fc35a2c0ee7b44e4a132c084.png)

I.e. we're working with raising and lowering operators for $\hat{J}_3$.

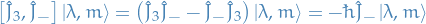

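Considering the action of the commutator $[ \hat{J}_3, \hat{J}_\pm ]$ on an eigenstate $| \lambda, m \rangle$, we obtain the following:

\begin{equation*}
\hat{J}_3 \hat{J}_\pm | \lambda, m \rangle = \big( \hat{J}_\pm \hat{J}_3 \pm \hbar \hat{J}_\pm \big) | \lambda, m \rangle = (m \pm 1) \hbar \ \hat{J}_\pm | \lambda, m \rangle
\end{equation*}

which tells us that both $\hat{J}_\pm | \lambda, m \rangle$ are also eigenstates of $\hat{J}_3$ but with eigenvalues $(m \pm 1) \hbar$, unless $\hat{J}_\pm | \lambda, m \rangle = 0$.

Thus $\hat{J}_+$ and $\hat{J}_-$ act as raising and lowering operators for the z-component of $\hat{\mathbf{J}}$.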

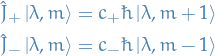

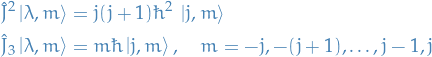

Further, notice that since  we have

we have

Hence, the eigenstates generated by the action of  are still eigenstates of

are still eigenstates of  belonging to the same eigenvalue

belonging to the same eigenvalue  . Thus, we can write

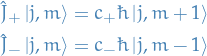

. Thus, we can write

where  are proportionality constants.

are proportionality constants.

The notation used for the eigenstates $| \lambda, m \pm 1 \rangle$ is simply saying that we know that the eigenvalue for $\hat{J}^2$ does not change under the operator $\hat{J}_\pm$, and thus only the eigenvalue corresponding to $\hat{J}_3$ changes with the factor $m \pm 1$ (or rather another term of $\pm \hbar$) and so we denote this new eigenstate as $| \lambda, m \pm 1 \rangle$.

To generalize the raising and lowering operators we found for the angular momentum we let $\hat{\mathbf{J}}$ denote an (Hermitian) operator which satisfies the following commutation relations:

![\begin{equation*}

[\hat{J}_k, \hat{J}_\ell ] = i \hbar \varepsilon_{k \ell m} \hat{J}_m, \qquad \hat{J}^2 = \sum_{k} \hat{J}_k^2, \qquad [ \hat{J}^2, \hat{J}_k ] = 0

\end{equation*}](../../assets/latex/quantum_mechanics_0d94e73b27f56d9bfa0d4ca74705817023e31037.png)

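And since $\hat{J}^2$ and $\hat{J}_3$ are compatible, we know that these have a common eigenbasis, which we denote $| j, m \rangle$, and we write the eigenvalues in the following form:

\begin{equation*}
\hat{J}^2 | j, m \rangle = j (j + 1) \hbar^2 | j, m \rangle, \qquad \hat{J}_3 | j, m \rangle = m \hbar | j, m \rangle
\end{equation*}

with

\begin{equation*}
j \in \Big\{ 0, \frac{1}{2}, 1, \frac{3}{2}, \dots \Big\}, \qquad m \in \{ -j, -j + 1, \dots, j - 1, j \}
\end{equation*}

The set of $(2j + 1)$ states $\{ | j, m \rangle \}$ is called a multiplet.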

We introduce the raising and lowering operators defined by:

\begin{equation*}
\hat{J}_\pm = \hat{J}_1 \pm i \hat{J}_2
\end{equation*}

for which we can compute the following properties:

![\begin{equation*}

[ \hat{J}^2, \hat{J}_\pm ] = 0

\end{equation*}](../../assets/latex/quantum_mechanics_114b4d8c8dc48f5d8a1bf1fe7d3a0b3e011ede3c.png)

![\begin{equation*}

[ \hat{J}_3, \hat{J}_\pm ] = \pm \hbar \hat{J}_{\pm}

\end{equation*}](../../assets/latex/quantum_mechanics_b83e24ac7ccf18b9fc35a2c0ee7b44e4a132c084.png)

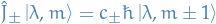

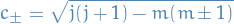

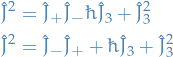

And we have the relations:

with

or, if we're assuming  , we have

There was originally also the relation

which I somehow picked up from the lecture. But I'm not entirely sure what this actually means…

Some proofs

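Lowering operator on an eigenstate is another eigenstate

\begin{equation*}
[ \hat{J}_3, \hat{J}_- ] | \lambda, m \rangle = - \hbar \hat{J}_- | \lambda, m \rangle
\end{equation*}

Where the RHS is due to the commutation relation we deduced earlier. This gives us the equation

\begin{equation*}
\hat{J}_3 \hat{J}_- | \lambda, m \rangle = \hat{J}_- \hat{J}_3 | \lambda, m \rangle - \hbar \hat{J}_- | \lambda, m \rangle = (m - 1) \hbar \ \hat{J}_- | \lambda, m \rangle
\end{equation*}

which tells us that $\hat{J}_- | \lambda, m \rangle$ is also an eigenstate of $\hat{J}_3$ but with the eigenvalue $(m - 1) \hbar$.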

Just to make it completely obvious what we're saying, we can write the relation above as:

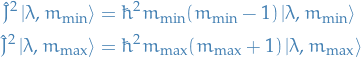

Eigenvalues are bounded

The eigenvalues of  are bounded above and below, or more specifically

are bounded above and below, or more specifically

Further, we have the following properties:

Eigenvalues of

are

are  , where

, where  is one of the allowed values

is one of the allowed values

thus we label the eigenstates of

thus we label the eigenstates of  and

and  by

by  rather than by

rather than by  , so that

, so that

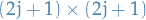

- For an eigenstate

, there are

, there are  possible eigenvalues of

possible eigenvalues of

The set of  states

states  is called a multiplet

is called a multiplet

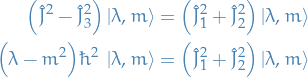

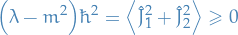

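We start by observing the following:

\begin{equation*}
\hat{J}^2 - \hat{J}_3^2 = \hat{J}_1^2 + \hat{J}_2^2
\end{equation*}

Taking the scalar product with $| \lambda, m \rangle$ yields

\begin{equation*}
\langle \lambda, m | \big( \hat{J}^2 - \hat{J}_3^2 \big) | \lambda, m \rangle = \langle \lambda, m | \big( \hat{J}_1^2 + \hat{J}_2^2 \big) | \lambda, m \rangle \ge 0
\end{equation*}

so that

\begin{equation*}
\lambda - m^2 \ge 0 \quad \implies \quad - \sqrt{\lambda} \le m \le \sqrt{\lambda}
\end{equation*}

Hence the spectrum of $\hat{J}_3$ is bounded above and below, for a given $\lambda$. We can deduce that

\begin{equation*}
\hat{J}_+ | \lambda, m_{\max} \rangle = 0, \qquad \hat{J}_- | \lambda, m_{\min} \rangle = 0
\end{equation*}

Using the following relations:

\begin{equation*}
\hat{J}_- \hat{J}_+ = \hat{J}^2 - \hat{J}_3^2 - \hbar \hat{J}_3, \qquad \hat{J}_+ \hat{J}_- = \hat{J}^2 - \hat{J}_3^2 + \hbar \hat{J}_3
\end{equation*}

and applying these to $| \lambda, m_{\max} \rangle$ and $| \lambda, m_{\min} \rangle$ in turn, we can obtain the following relations:

\begin{equation*}
\lambda - m_{\max}^2 - m_{\max} = 0, \qquad \lambda - m_{\min}^2 + m_{\min} = 0
\end{equation*}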

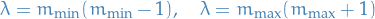

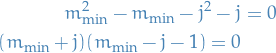

Using the notation $j = m_{\max}$ and using the equality above, we get the equation

\begin{equation*}
m_{\min}^2 - m_{\min} - j (j + 1) = 0
\end{equation*}

and we see that, since $m_{\min} \le j$ by definition, the only acceptable root to the above quadratic is

\begin{equation*}
m_{\min} = - j
\end{equation*}

Now, since $m_{\max}$ and $m_{\min}$ differ by some integer, $k$, we can write

\begin{equation*}
m_{\max} - m_{\min} = 2j = k, \qquad k = 0, 1, 2, \dots
\end{equation*}

Or equivalently,

\begin{equation*}
j = \frac{k}{2}
\end{equation*}

Hence, the allowed values are

\begin{equation*}
j = 0, \frac{1}{2}, 1, \frac{3}{2}, \dots
\end{equation*}

For any given value of $j$, we see that $m$ ranges over the values

\begin{equation*}
m = -j, -j + 1, \dots, j - 1, j
\end{equation*}

which is a total of $(2j + 1)$ values.
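The counting argument can be made concrete by listing the values explicitly. This is a small sketch using exact rational arithmetic so that half-integer $j$ is handled without rounding; it assumes the result that $m$ runs from $-j$ to $j$ in integer steps, and the function name `multiplet` is hypothetical.

```python
from fractions import Fraction


def multiplet(j):
    """Return the allowed m values -j, -j+1, ..., j for an integer or half-integer j."""
    j = Fraction(j)
    if j < 0 or (2 * j).denominator != 1:
        raise ValueError("j must be a non-negative integer or half-integer")
    return [-j + k for k in range(int(2 * j) + 1)]


for j in (Fraction(0), Fraction(1, 2), Fraction(1), Fraction(3, 2)):
    ms = multiplet(j)
    print(f"j = {j}: {len(ms)} states, m in {[str(m) for m in ms]}")
```

Each list has length $2j + 1$, matching the size of the multiplet.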

Concluding the following:

- Eigenvalues of $\hat{J}^2$ are $j (j + 1) \hbar^2$, where $j$ is one of the allowed values

\begin{equation*}
j = 0, \frac{1}{2}, 1, \frac{3}{2}, \dots
\end{equation*}

- Since $\lambda = j (j + 1)$ we can equally well label the simultaneous eigenstates of $\hat{J}^2$ and $\hat{J}_3$ by $j$ rather than by $\lambda$, so that

\begin{equation*}
\hat{J}^2 | j, m \rangle = j (j + 1) \hbar^2 | j, m \rangle, \qquad \hat{J}_3 | j, m \rangle = m \hbar | j, m \rangle
\end{equation*}

- For a given eigenvalue of $\hat{J}^2$, there are $(2j + 1)$ possible eigenvalues of $\hat{J}_3$, denoted by $m \hbar$, where $m$ runs from $j$ to $-j$

- The set of $(2j + 1)$ states $\{ | j, m \rangle \}$ is called a multiplet

- Which step makes it so that the j in the case of "normal" angular momentum only takes on integer values?

In the case where we're working with "normal" angular momentum, the spherical harmonics are also eigenfunctions of $\hat{L}_z$. Since we require that the wave function must be single-valued, the spherical harmonics must be periodic in $\phi$ with period $2 \pi$:

\begin{equation*}
Y_{\ell}^m (\theta, \phi + 2 \pi) = Y_{\ell}^m (\theta, \phi)
\end{equation*}

this implies that $m$ is an integer, hence $\ell$ must also be an integer.

Properties of J

implies

I.e.  , thus  is positive definite

such that

This is :

![\begin{equation*}

\begin{split}

\hat{J}_+ \hat{J}_- &= (\hat{J}_1 + i \hat{J}_2) (\hat{J}_1 - i \hat{J}_2) \\

&= \hat{J}_1^2 + \hat{J}_2^2 + i [\hat{J}_2, \hat{J}_1] \\

&= \hat{J}^2 - \hat{J}_3^2 + \hbar \hat{J}_3

\end{split}

\end{equation*}](../../assets/latex/quantum_mechanics_911ceee78d27d219e0244df53696cbbeaab6bfa0.png)

and

:

,  implies that

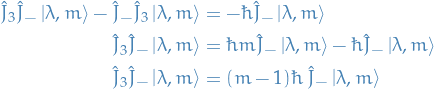

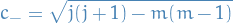

Normalization constant

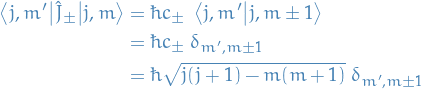

Computing the matrix elements

The matrix is

And for the raising and lowering operators
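As an illustration of what such matrix elements look like, the sketch below builds $\hat{J}_3$ and $\hat{J}_\pm$ for a given $j$ and checks the product identity $\hat{J}_+ \hat{J}_- = \hat{J}^2 - \hat{J}_3^2 + \hbar \hat{J}_3$ derived earlier. It assumes units $\hbar = 1$, the basis ordering $m = j, j - 1, \dots, -j$, and the standard ladder coefficients $\langle j, m \pm 1 | \hat{J}_\pm | j, m \rangle = \sqrt{j (j + 1) - m (m \pm 1)}$; the function names are hypothetical.

```python
import math
from fractions import Fraction


def matmul(a, b):
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def ladder_matrices(j):
    """J_3, J_+, J_- in the basis m = j, j-1, ..., -j, with hbar = 1."""
    j = Fraction(j)
    dim = int(2 * j) + 1
    ms = [j - k for k in range(dim)]
    j3 = [[float(ms[r]) if r == c else 0.0 for c in range(dim)] for r in range(dim)]
    j_plus = [[0.0] * dim for _ in range(dim)]
    for c in range(1, dim):
        m = ms[c]
        # <j, m+1| J_+ |j, m> = sqrt(j(j+1) - m(m+1)); the state m+1 sits at row c-1.
        j_plus[c - 1][c] = math.sqrt(float(j * (j + 1) - m * (m + 1)))
    j_minus = [[j_plus[c][r] for c in range(dim)] for r in range(dim)]  # transpose
    return j3, j_plus, j_minus


def check_identity(j):
    """Largest entry of J_+ J_- - (J^2 - J_3^2 + J_3), plus the diagonal of J^2."""
    j3, jp, jm = ladder_matrices(j)
    dim = len(j3)
    pm, mp, j3sq = matmul(jp, jm), matmul(jm, jp), matmul(j3, j3)
    # J^2 = J_1^2 + J_2^2 + J_3^2 = (J_+ J_- + J_- J_+) / 2 + J_3^2
    j2 = [[0.5 * (pm[r][c] + mp[r][c]) + j3sq[r][c] for c in range(dim)] for r in range(dim)]
    residual = max(
        abs(pm[r][c] - (j2[r][c] - j3sq[r][c] + j3[r][c]))
        for r in range(dim)
        for c in range(dim)
    )
    return residual, [j2[r][r] for r in range(dim)]


residual, casimir = check_identity(Fraction(3, 2))
print("max residual of the identity:", residual)
print("diagonal of J^2:", casimir)
```

For $j = \frac{3}{2}$ the diagonal of $\hat{J}^2$ should be $j (j + 1) = \frac{15}{4}$ in every entry, consistent with $\hat{J}^2$ acting as a multiple of the identity on a multiplet.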

Q & A

DONE Which step makes it so that the j in the case of "normal" angular momentum only takes on integer values?

In the case where we're working with "normal" angular momentum, the spherical harmonics are also eigenfunctions of $\hat{L}_z$. Since we require that the wave function must be single-valued(?), the spherical harmonics must be periodic in $\phi$ with period $2 \pi$:

\begin{equation*}
Y_{\ell}^m (\theta, \phi + 2 \pi) = Y_{\ell}^m (\theta, \phi)
\end{equation*}

this implies that $m$ is an integer, hence $\ell$ must also be an integer.

I'm not sure what they mean by "single-valued". From the notes they do this:

of which I'm not entirely sure what they mean.

From looking at the spherical harmonics (see the notes on spherical harmonics), the eigenvalue $\ell (\ell + 1)$ for some non-negative integer $\ell$ is due to regularity at the poles $\theta = 0, \pi$.

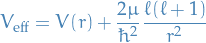

Central potential

Notation

- $\theta$ is the polar angle, measured from the z-axis (the angle "vertical" to the xy-plane)

- $\phi$ is the angle in the xy-plane

- $\mu$ is the mass (avoids confusion with the magnetic quantum number $m$)

- $\mathbf{r}$ is the position vector

- $r = |\mathbf{r}|$ denotes the magnitude of the position vector

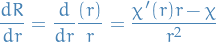

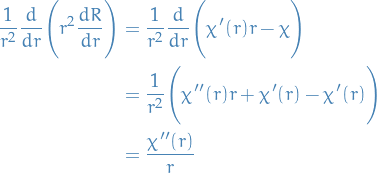

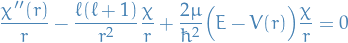

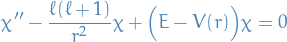

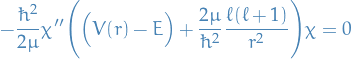

Stuff

![\begin{equation*}

\Bigg[ - \frac{\hbar^2}{2 \mu} \nabla^2 + V(r) \Bigg] u(\mathbf{r}) = E u(\mathbf{r})

\end{equation*}](../../assets/latex/quantum_mechanics_13bbbaed0b51bcbaa3c90d5ffd6342c6a32f24fc.png)

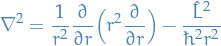

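In spherical coordinates we write the Laplacian as

\begin{equation*}
\nabla^2 = \frac{1}{r^2} \frac{\partial}{\partial r} \Big( r^2 \frac{\partial}{\partial r} \Big) + \frac{1}{r^2 \sin \theta} \frac{\partial}{\partial \theta} \Big( \sin \theta \frac{\partial}{\partial \theta} \Big) + \frac{1}{r^2 \sin^2 \theta} \frac{\partial^2}{\partial \phi^2}
\end{equation*}

we can then rewrite this in terms of the angular momentum operator:

\begin{equation*}
\nabla^2 = \frac{1}{r^2} \frac{\partial}{\partial r} \Big( r^2 \frac{\partial}{\partial r} \Big) - \frac{1}{\hbar^2 r^2} \hat{L}^2
\end{equation*}

and thus the TISE becomes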

![\begin{equation*}

\frac{\hbar^2}{2 \mu} \Big[ - \frac{1}{r^2} \frac{\partial}{\partial r} \Big( r^2 \frac{\partial}{\partial r} \Big) + \frac{1}{\hbar^2 r^2} \hat{L}^2 \Big] u(r, \theta, \phi) = \big(E - V(r) \big) u(r, \theta, \phi)

\end{equation*}](../../assets/latex/quantum_mechanics_11e638756cc41efa66c97d677103f28bd6280626.png)

Since $\hat{L}^2$ and $\hat{L}_z$ act only on the angles and $V$ depends only on $r$, any pair of $\hat{H}, \hat{L}^2, \hat{L}_z$ commutes, so there exists a set of simultaneous eigenstates of all three.

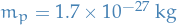

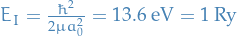

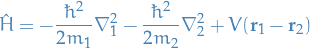

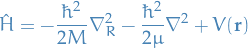

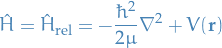

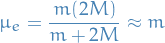

Hydrogen atom

Notation

- $\mu$ effective mass of system

- $a_0$ is the Bohr radius

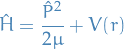

Stuff

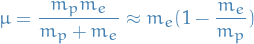

Superposition of eigenstates of proton and electron

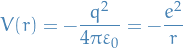

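(Coulomb) potential:

\begin{equation*}
V(r) = - \frac{e^2}{r}
\end{equation*}

where the effective mass is

\begin{equation*}
\mu = \frac{m_e m_p}{m_e + m_p}
\end{equation*}

The TISE is: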

![\begin{equation*}

\Big[ - \frac{\hbar^2}{2 \mu} \nabla^2 + V(r) \Big] \Psi(\mathbf{x}) = E \Psi(\mathbf{x})

\end{equation*}](../../assets/latex/quantum_mechanics_ed915b37098138dd5f7f255de76794f6f6c99b51.png)

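We make the ansatz:

\begin{equation*}
\Psi(\mathbf{x}) = \frac{\chi_{\ell}(r)}{r} Y_{\ell m}(\theta, \phi)
\end{equation*}

Thus,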

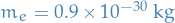

Thus, we end up with

![\begin{equation*}

\Bigg[ - \frac{\hbar^2}{2 \mu}\frac{d^2}{dr^2} - \frac{e^2}{r} + \frac{\hbar^2 \ell (\ell + 1)}{2 \mu r^2} \Bigg] \chi_{\ell}(r) = E \chi_{\ell} (r), \quad \chi_\ell(0) = 0

\end{equation*}](../../assets/latex/quantum_mechanics_5d7a57dcaaf7ea61855fd930d4fbea34b21a8e8c.png)

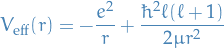

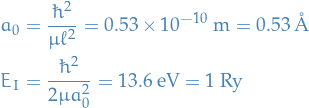

and we let

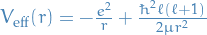

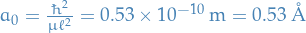

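We now introduce some "scales" for length and energy:

\begin{equation*}
a_0 = \frac{\hbar^2}{\mu e^2}, \quad E_I = \frac{\hbar^2}{2 \mu a_0^2} = \frac{e^2}{2 a_0}
\end{equation*}

where $a_0$ is the Bohr radius. Further, we let

\begin{equation*}
\rho = \frac{r}{a_0}, \quad \lambda = \sqrt{- \frac{E}{E_I}}
\end{equation*}

Substituting these scales into the TISE deduced earlier: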

![\begin{equation*}

\Bigg[ - \frac{\hbar^2}{2 \mu} \frac{1}{a_0^2} \frac{d^2}{d \rho^2} + \frac{\hbar^2 \ell (\ell + 1)}{2\mu a_0^2 \rho^2} - \frac{e^2}{a_0 \rho} - E \Bigg] \chi_{\ell}(a_0 \rho) = 0

\end{equation*}](../../assets/latex/quantum_mechanics_cb7a6f560631a5a4b60a73735b6382ba8d308cb2.png)

![\begin{equation*}

- E_I \Bigg[ \frac{d^2}{d \rho^2} - \frac{\ell (\ell + 1)}{\rho^2} + \frac{2}{\rho} + \frac{E}{E_I} \Bigg] \chi_{\ell} (a_0 \rho) = 0

\end{equation*}](../../assets/latex/quantum_mechanics_eb52366033707e1f92bd3d6ea61d29457dbcfa19.png)

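We then introduce another function of $\rho$ instead of $r$:

\begin{equation*}
u_{\ell}(\rho) = \chi_{\ell}(a_0 \rho)
\end{equation*}

which, with $\lambda^2 = - E / E_I$, gives us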

![\begin{equation*}

\Bigg[ \frac{d^2}{d \rho^2} - \frac{\ell (\ell + 1)}{\rho^2} + \frac{2}{\rho} - \lambda^2 \Bigg] u_\ell(\rho) = 0

\label{tise-wrt-u-rho}

\end{equation*}](../../assets/latex/quantum_mechanics_17c59af110e339bfb23ad04579c83ea8ce5fe806.png)

with boundary conditions: $u_{\ell}(0) = 0$ and $u_{\ell}(\rho)$ bounded as $\rho \to \infty$.

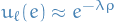

We then consider the boundary condition where $\rho \to \infty$:

![\begin{equation*}

\rho \to \infty : \Bigg[ \frac{d^2}{d \rho^2} - \lambda^2 \Bigg] u_{\ell}(\rho) = 0 \implies u_{\ell}(\rho) = e^{\pm \rho \lambda}

\end{equation*}](../../assets/latex/quantum_mechanics_bc28652f07b4d56b79761bafdb60a859a5c65c49.png)

But since we need it to be bounded:

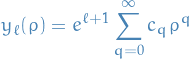

Another ansatz: $u_{\ell}(\rho) = e^{- \lambda \rho} y_{\ell}(\rho)$, which is simply the familiar method of variation of parameters, where we tack on another function of $\rho$, the independent variable, to obtain another solution $u_{\ell}$ which is independent of $e^{- \lambda \rho}$.

If we then plug our $u_{\ell}$ into the TISE for $u_{\ell}(\rho)$ above, we end up with

![\begin{equation*}

y_{\ell}''(\rho) - 2 \lambda y_{\ell}' (\rho) + \Bigg[ - \frac{\ell (\ell + 1)}{\rho^2} + \frac{2}{\rho} \Bigg] y_{\ell}(\rho) = 0

\end{equation*}](../../assets/latex/quantum_mechanics_90918c8acb0edf8b06eb95c61ccc0a0429b23e16.png)

where, due to the behaviour of $u_{\ell}$ at the origin, we have $y_{\ell}(0) = 0$.

Ansatz:

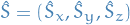

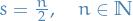

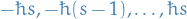

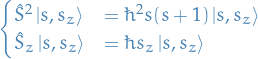

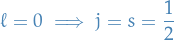

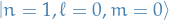
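As a rough numerical cross-check of the dimensionless radial equation $\big[ \frac{d^2}{d \rho^2} - \frac{\ell (\ell + 1)}{\rho^2} + \frac{2}{\rho} - \lambda^2 \big] u_\ell = 0$, one can discretise it on a grid and look at the lowest eigenvalues $-\lambda^2 = E / E_I$. This is only a sketch under stated assumptions: a plain second-order finite-difference scheme, the box size `rho_max` and grid size `n_points` are arbitrary choices, and the function name `radial_levels` is made up for this example.

```python
import numpy as np

def radial_levels(ell, n_levels=2, rho_max=60.0, n_points=1500):
    """Lowest eigenvalues eps = E / E_I of
    -u'' + [ell(ell+1)/rho^2 - 2/rho] u = eps * u, with u(0) = u(rho_max) = 0."""
    h = rho_max / (n_points + 1)
    rho = h * np.arange(1, n_points + 1)        # interior grid points only
    diag = 2.0 / h**2 + ell * (ell + 1) / rho**2 - 2.0 / rho
    off = -np.ones(n_points - 1) / h**2         # second-difference off-diagonals
    H = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.eigvalsh(H)[:n_levels]

eps_s = radial_levels(ell=0)                    # expect values close to -1 and -1/4
eps_p = radial_levels(ell=1, n_levels=1)        # expect a value close to -1/4
```

The eigenvalues should land close to $-1 / n^2$, i.e. $\lambda = 1 / n$, with the $\ell = 1$ ladder starting at $n = 2$. The agreement is limited by the grid spacing, so this illustrates the quantisation rather than replacing the series solution.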

Spin - intrinsic angular momentum

Notation

- $\ket{s, m_s}$ denotes the eigenstate of a system with spin $s$ (the eigenvalue of $\hat{S}^2$ being $s (s + 1) \hbar^2$), with $m_s \hbar$ being the eigenvalue of $\hat{S}_z$, the spin component in the z-direction

- $\ket{\pm \frac{1}{2}}$ is shorthand for $\ket{\frac{1}{2}, \pm \frac{1}{2}}$, since the first number ($s$) is provided in the second anyways

- $c_+$ and $c_-$ etc. we use to represent the coefficients of a state in some basis

- when we say a system has spin $s$, we're talking about a system with eigenvalue of $\hat{S}^2$ to be $s (s + 1) \hbar^2$

Stuff

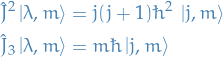

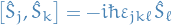

Now that we know

![\begin{equation*}

[ \hat{J}_k , \hat{J}_l ] = i \hbar \varepsilon_{klm} \hat{J}_m

\end{equation*}](../../assets/latex/quantum_mechanics_3b516984539a54c7b52c023f4a3520ef48f77318.png)

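- $\hat{J}^2$ eigenvalues: $j (j + 1) \hbar^2$ with $j = 0, \frac{1}{2}, 1, \frac{3}{2}, \dots$

- $\hat{J}_3$ eigenvalues: $m \hbar$ with $m = -j, -j + 1, \dots, j$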

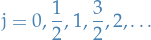

From the orbital angular momentum, we have $\hat{\mathbf{L}} = \hat{\mathbf{r}} \times \hat{\mathbf{p}}$.

In the case of orbital angular momentum, we have the requirement that the wave function is single-valued, which implies $\ell = 0, 1, 2, \dots$, rather than $j = 0, \frac{1}{2}, 1, \frac{3}{2}, \dots$ which we found in Angular momentum - reloaded.

$\hat{L}_1, \hat{L}_2, \hat{L}_3$ are differential operators, which implies $\hat{L}_\pm$ are differential operators.

For a given value of $\ell$, $\hat{L}_+ Y_{\ell \ell} = 0$ since $m = \ell$ is the maximum for a spherical harmonic.

Now using the properties derived for the more general $\hat{J}$ operator we can deduce all the other spherical harmonics by the usage of raising and lowering operators.

And it satisfies the commutation relation

and

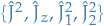

Thus, we can construct a basis using the eigenstates of  , i.e. they form a C.S.O.C..

, i.e. they form a C.S.O.C..

And as we've seen earlier, if an operator satisfies the above properties, we have:

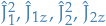

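- eigenvalues of $\hat{S}^2$: $s (s + 1) \hbar^2$, $s = 0, \frac{1}{2}, 1, \frac{3}{2}, \dots$

- eigenvalues of $\hat{S}_z$: $m_s \hbar$

For a given value of $s$, $m_s \in \{ -s, -s + 1, \dots, s \}$ has $2 s + 1$ elements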

And the eigenfunctions have the relations:

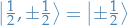

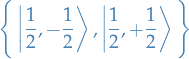

Example: system of spin 1 / 2

Since $s = \frac{1}{2}$, we have:

- eigenvalue of $\hat{S}^2$ is $\frac{3}{4} \hbar^2$

- eigenvalues of $\hat{S}_z$ are $\pm \frac{\hbar}{2}$

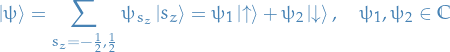

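The basis of the space of the physical states is then

\begin{equation*}
\Big\{ \ket{\tfrac{1}{2}, \tfrac{1}{2}}, \ \ket{\tfrac{1}{2}, - \tfrac{1}{2}} \Big\}
\end{equation*}

where we use the notation $\ket{s, m_s}$.

Thus, any state we can expand in this basis:

\begin{equation*}
\ket{\psi} = c_+ \ket{\tfrac{1}{2}, \tfrac{1}{2}} + c_- \ket{\tfrac{1}{2}, - \tfrac{1}{2}}
\end{equation*}

and then

- $|c_+|^2$ is the prob. of finding the system in $\ket{\frac{1}{2}, \frac{1}{2}}$ when measuring $\hat{S}_z$

- $|c_-|^2$ is the prob. of finding the system in $\ket{\frac{1}{2}, - \frac{1}{2}}$ when measuring $\hat{S}_z$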
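A small numerical illustration of these measurement probabilities, assuming the usual matrix representation $\hat{S}_z = \frac{\hbar}{2} \operatorname{diag}(1, -1)$ in the $\hat{S}_z$ eigenbasis and $\hbar = 1$. The state `psi` is a hypothetical example (the $\hat{S}_x = + \hbar / 2$ eigenstate), and the names `p_up`, `p_down` are chosen here.

```python
import numpy as np

hbar = 1.0
Sz = 0.5 * hbar * np.array([[1, 0], [0, -1]], dtype=complex)
Sx = 0.5 * hbar * np.array([[0, 1], [1, 0]], dtype=complex)

# Hypothetical state: equal superposition of spin-up and spin-down along z
psi = np.array([1, 1], dtype=complex) / np.sqrt(2)

# Squared moduli of the expansion coefficients in the S_z basis
p_up, p_down = np.abs(psi) ** 2

# Expectation value <psi| S_z |psi>
mean_Sz = np.vdot(psi, Sz @ psi).real
```

Both outcomes $\pm \hbar / 2$ of an $\hat{S}_z$ measurement are equally likely for this state, so the expectation value vanishes, even though the state is an eigenstate of $\hat{S}_x$.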

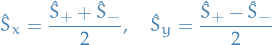

TODO Pauli matrices

Since we can write

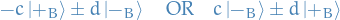

Addition of angular momenta

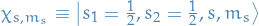

Notation

- $\hat{\mathbf{J}}$, without integer subscripts, refers to the total angular momentum, and thus $\hat{J}_z$ refers to the z-component of this total angular momentum

- $\hat{J}_{1z}$ refers to the z-component of the angular momentum indexed by, well, 1

- $\hat{J}_1^2$ refers to the square of the angular momentum indexed by 1

Stuff

This excerpt from some book might be a really good way of getting a better feel of all of this

In this section we deal with the case of having two angular momenta!

For example, we might wish to consider an electron which has both an intrinsic spin and some orbital angular momentum, as in a real hydrogen atom.

Total angular momentum operator

where we assume  and

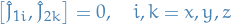

and  are independent angular momenta, meaning each satisfies the usual angular momentum commutation relations

are independent angular momenta, meaning each satisfies the usual angular momentum commutation relations

where

labels the individual angular momenta

labels the individual angular momenta stands for cyclic permutations.

stands for cyclic permutations.

Furthermore, any component of  commutes with any component of

commutes with any component of  :

:

so that the two angular momenta are compatible.

It follows that the four operators  are mutually commuting and thus must posses a common eigenbasis!

are mutually commuting and thus must posses a common eigenbasis!

This common eigenbasis is known as the uncoupled basis and is denoted  .

.

It has the following properties:

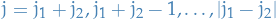

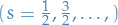

The allowed values of the total angular momentum quantum number $j$, given two angular momenta corresponding to the quantum numbers $j_1$ and $j_2$ are:

\begin{equation*}
j = |j_1 - j_2|, \ |j_1 - j_2| + 1, \ \dots, \ j_1 + j_2
\end{equation*}

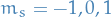

and for each of these values of $j$, we have $m$ take on the $(2 j + 1)$ values $-j, -j + 1, \dots, j$.

The z-component of the total angular momentum operator $\hat{J}_z$ commutes with $\hat{J}_1^2$ and $\hat{J}_2^2$, thus the set of four operators $\hat{J}^2, \hat{J}_z, \hat{J}_1^2, \hat{J}_2^2$ are also a mutually commuting set of operators with a common basis known as the coupled basis, denoted $\{ \ket{j, m, j_1, j_2} \}$ and satisfying

\begin{equation*}
\begin{split}
\hat{J}^2 \ket{j, m, j_1, j_2} &= j (j + 1) \hbar^2 \ket{j, m, j_1, j_2} \\
\hat{J}_z \ket{j, m, j_1, j_2} &= m \hbar \ket{j, m, j_1, j_2}
\end{split}
\end{equation*}

these are states of definite total angular momentum and definite z-component of total angular momentum but not in general states with definite $m_1$ or $m_2$.

In fact, these states are expressible as linear combinations of the states of the uncoupled basis, with coefficients known as Clebsch-Gordan coefficients.

I think what they mean by "states of definite angular momentum…" are the states which are coupled in a way that one eigenvalue decides the other?

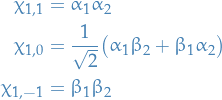

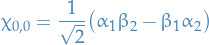

TODO Example: two half-spin particles

Suppose we have two half-spin particles (e.g. electrons) with spin quantum numbers $s_1 = \frac{1}{2}$ and $s_2 = \frac{1}{2}$.

According to the Addition theorem, the total spin quantum number $s$ takes on the values $|s_1 - s_2|, \dots, s_1 + s_2$, i.e. $s \in \{ 0, 1 \}$, and we require $m_s \in \{ -s, \dots, s \}$.

Thus, two electrons can only have a total spin:

- $s = 1$, called the triplet states, for which there are three possible values of the spin magnetic quantum number, $m_s \in \{ -1, 0, 1 \}$

- $s = 0$, called the singlet state, for which there is only a single possible value, $m_s = 0$

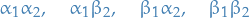

Let's denote the elements of the uncoupled basis as

\begin{equation*}
\{ \alpha_1 \alpha_2, \ \alpha_1 \beta_2, \ \beta_1 \alpha_2, \ \beta_1 \beta_2 \}
\end{equation*}

where the subscripts 1 and 2 refer to electron 1 and 2, respectively, and $\alpha$, $\beta$ denote spin-up and spin-down. The operators $\hat{S}_1^2$ and $\hat{S}_{1z}$ act only on the parts labelled 1, and so on.

If we let $\alpha_1 \alpha_2$ be the state which has $m_{s_1} = \frac{1}{2}$ and $m_{s_2} = \frac{1}{2}$ then it must have $m_s = 1$, i.e. total z-component of spin $\hbar$, and can therefore only be $s = 1$ and not $s = 0$.

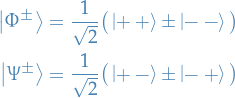

The eigenstates end up being:

![\begin{equation*}

\begin{split}

\ket{s = 1, m_s = 1, s_1 = \frac{1}{2}, s_2 = \frac{1}{2}} &= \alpha_1 \alpha_2 \\

\ket{s = 1, m_s = - 1, s_1 = \frac{1}{2}, s_2 = \frac{1}{2}} &= \beta_1 \beta_2 \\

\ket{s = 1, m_s = 0, s_1 = \frac{1}{2}, s_2 = \frac{1}{2}} &= \frac{1}{\sqrt{2}} [ \alpha_1 \beta_2 + \beta_1 \alpha_2 ] \\

\ket{s = 0, m_s = 0, s_1 = \frac{1}{2}, s_2 = \frac{1}{2}} &= \frac{1}{\sqrt{2}} [ \alpha_1 \beta_2 - \beta_1 \alpha_2 ]

\end{split}

\end{equation*}](../../assets/latex/quantum_mechanics_fa572e88aaa427e9656062220ce71e930a158698.png)
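The triplet / singlet structure can be verified by building the total spin operator on the four-dimensional product space and diagonalising $\hat{S}^2$. The sketch below assumes $\hbar = 1$, the Pauli-matrix representation of spin-1/2, and the basis ordering $\alpha_1 \alpha_2, \alpha_1 \beta_2, \beta_1 \alpha_2, \beta_1 \beta_2$; the variable names are chosen for this example.

```python
import numpy as np

# Single-particle spin-1/2 operators (hbar = 1)
sx = np.array([[0, 1], [1, 0]], dtype=complex) / 2
sy = np.array([[0, -1j], [1j, 0]], dtype=complex) / 2
sz = np.array([[1, 0], [0, -1]], dtype=complex) / 2
I2 = np.eye(2)

# Total spin components S = S_1 + S_2 on the product space
S = [np.kron(s, I2) + np.kron(I2, s) for s in (sx, sy, sz)]
S2 = sum(Si @ Si for Si in S)

# Basis order: a1 a2, a1 b2, b1 a2, b1 b2 (alpha = up, beta = down)
triplet0 = np.array([0, 1, 1, 0]) / np.sqrt(2)   # s = 1, m_s = 0
singlet = np.array([0, 1, -1, 0]) / np.sqrt(2)   # s = 0, m_s = 0

eigs = np.sort(np.linalg.eigvalsh(S2))
```

The eigenvalues of `S2` are $s (s + 1)$: one zero (the singlet) and three equal to 2 (the triplet), and the symmetric and antisymmetric combinations above are the corresponding $m_s = 0$ eigenvectors.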

Identical Particles

Stuff

Systems of identical particles with integer spin ($s = 0, 1, 2, \dots$), known as bosons, have wave functions which are symmetric under interchange of any pair of particle labels (i.e. swapping the states of say two particles).

The wave function is said to obey Bose-Einstein statistics.

Systems of identical particles with half-odd-integer spins ($s = \frac{1}{2}, \frac{3}{2}, \dots$), known as fermions, have wave functions which are antisymmetric under interchange of any pair of particle labels.

The wave function is said to obey Fermi-Dirac statistics.

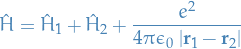

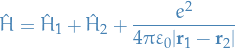

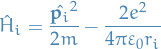

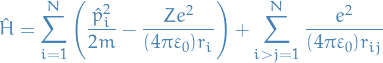

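In the simplest model of the helium atom, the Hamiltonian is

\begin{equation*}
\hat{H} = \hat{H}_1 + \hat{H}_2 + \frac{e^2}{|\mathbf{r}_1 - \mathbf{r}_2|}
\end{equation*}

where

\begin{equation*}
\hat{H}_i = \frac{\hat{\mathbf{p}}_i^2}{2 \mu} - \frac{2 e^2}{r_i}
\end{equation*}

This is symmetric under permutation of the indices 1 and 2 which label the two electrons. This must be the case if the two electrons are identical or indistinguishable: it cannot matter which particle we label 1 and which we label 2.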

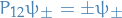

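Due to the symmetry condition, we have

\begin{equation*}
\hat{H}(1, 2) = \hat{H}(2, 1)
\end{equation*}

Suppose that

\begin{equation*}
\hat{H}(1, 2) \psi(1, 2) = E \psi(1, 2)
\end{equation*}

Relabelling $1 \leftrightarrow 2$ and using the symmetry of $\hat{H}$ gives $\hat{H}(1, 2) \psi(2, 1) = E \psi(2, 1)$, so we conclude that $\psi(1, 2)$ and $\psi(2, 1)$ are both eigenfunctions belonging to the same eigenvalue $E$. Further, any linear combination of the two is!

In particular, the normalised symmetric and antisymmetric combinations

\begin{equation*}
\psi_{\pm} = \frac{1}{\sqrt{2}} \big[ \psi(1, 2) \pm \psi(2, 1) \big]
\end{equation*}

are eigenfunctions belonging to the eigenvalue $E$.

If we introduce the particle interchange operator, $\hat{P}_{12}$, with the property that

\begin{equation*}
\hat{P}_{12} \psi(1, 2) = \psi(2, 1)
\end{equation*}

then the symmetric and antisymmetric combinations are eigenfunctions of $\hat{P}_{12}$ with eigenvalues $\pm 1$ respectively:

\begin{equation*}
\hat{P}_{12} \psi_{\pm} = \pm \psi_{\pm}
\end{equation*}

Since $\psi_{\pm}$ are simultaneous eigenfunctions of $\hat{H}$ and $\hat{P}_{12}$ it follows that:

\begin{equation*}
[ \hat{H}, \hat{P}_{12} ] = 0
\end{equation*}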

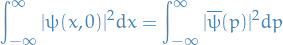

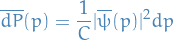

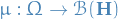

Fourier Transforms and Uncertainty Relations

This is heavily inspired by a post from mathpages.

Notation

- $\ket{a}$ is such that $\hat{A} \ket{a} = a \ket{a}$, i.e. the ket corresponding to the eigenvalue $a$

Stuff

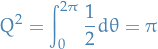

Gaussian integral

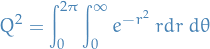

- Basic

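Taking the square of the integral

\begin{equation*}
I = \int_{-\infty}^{\infty} e^{-x^2} \ dx
\end{equation*}

we get

\begin{equation*}
I^2 = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} e^{-(x^2 + y^2)} \ dx \ dy
\end{equation*}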

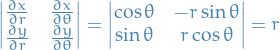

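Reparametrizing in polar coordinates

\begin{equation*}
x = r \cos \theta, \quad y = r \sin \theta
\end{equation*}

which gives us the Jacobian transformation

\begin{equation*}
\frac{\partial (x, y)}{\partial (r, \theta)} = \begin{vmatrix} \cos \theta & - r \sin \theta \\ \sin \theta & r \cos \theta \end{vmatrix} = r
\end{equation*}

so the incremental area element is $dx \ dy = r \ dr \ d \theta$.

Hence the double-integral above becomes

\begin{equation*}
\int_{-\infty}^{\infty} \int_{-\infty}^{\infty} e^{-(x^2 + y^2)} \ dx \ dy = \int_{0}^{2 \pi} \int_{0}^{\infty} e^{-r^2} r \ dr \ d \theta
\end{equation*}

and since

\begin{equation*}
\int_{0}^{\infty} r e^{-r^2} \ dr = \Big[ - \frac{1}{2} e^{-r^2} \Big]_0^{\infty} = \frac{1}{2}
\end{equation*}

we get the value $2 \pi \cdot \frac{1}{2} = \pi$ for the double-integral.

Finally giving us

\begin{equation*}
\int_{-\infty}^{\infty} e^{-x^2} \ dx = \sqrt{\pi}
\end{equation*}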

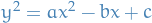

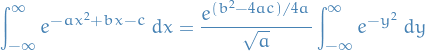

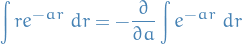

- More general

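If we let $f(x) = a x^2 + b x + c$ with $a > 0$, i.e. we consider some arbitrary quadratic, then completing the square gives

\begin{equation*}
f(x) = a \Big( x + \frac{b}{2a} \Big)^2 - \frac{b^2 - 4 a c}{4 a}
\end{equation*}

in terms of which we can write

\begin{equation*}
\int_{-\infty}^{\infty} e^{- (a x^2 + b x + c)} \ dx = \sqrt{\frac{\pi}{a}} \ e^{(b^2 - 4 a c) / (4 a)}
\end{equation*}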

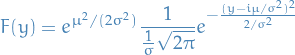
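The general Gaussian integral is easy to sanity-check numerically. The sketch below compares the standard closed form $\sqrt{\pi / a} \, e^{(b^2 - 4 a c) / (4 a)}$ for $\int e^{-(a x^2 + b x + c)} dx$ with a plain Riemann sum; the coefficients `a`, `b`, `c` are arbitrary illustrative values and `gaussian_integral` is a name introduced here.

```python
import numpy as np

def gaussian_integral(a, b, c):
    """Closed form of the integral of exp(-(a x^2 + b x + c)) over the real line, a > 0."""
    return np.sqrt(np.pi / a) * np.exp((b**2 - 4 * a * c) / (4 * a))

a, b, c = 1.5, 0.7, -0.2                        # arbitrary illustrative coefficients
x = np.linspace(-20.0, 20.0, 200_001)
dx = x[1] - x[0]

# Riemann sum; the integrand is negligible at the ends of the interval
numeric = np.sum(np.exp(-(a * x**2 + b * x + c))) * dx
closed_form = gaussian_integral(a, b, c)
```

Setting `a, b, c = 1, 0, 0` recovers the basic result $\sqrt{\pi}$ from the polar-coordinates argument.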

Fourier transform of a normal probability density

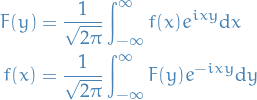

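For any function $f(x)$ we have the relation

\begin{equation*}
F(\omega) = \int_{-\infty}^{\infty} f(x) e^{- i \omega x} \ dx, \quad f(x) = \frac{1}{2 \pi} \int_{-\infty}^{\infty} F(\omega) e^{i \omega x} \ d \omega
\end{equation*}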

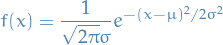

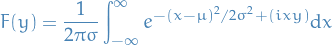

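Now, if $f(x)$ is a normal probability density, we have

\begin{equation*}
f(x) = \frac{1}{\sigma \sqrt{2 \pi}} e^{- (x - \mu)^2 / (2 \sigma^2)}
\end{equation*}

which has the Fourier transform

\begin{equation*}
F(\omega) = \frac{1}{\sigma \sqrt{2 \pi}} \int_{-\infty}^{\infty} e^{- (x - \mu)^2 / (2 \sigma^2) - i \omega x} \ dx
\end{equation*}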

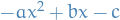

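Where we can write the exponent in the integral in the form $- (a x^2 + b x + c)$ with

\begin{equation*}
a = \frac{1}{2 \sigma^2}, \quad b = i \omega - \frac{\mu}{\sigma^2}, \quad c = \frac{\mu^2}{2 \sigma^2}
\end{equation*}

hence the Fourier transform of the normal density function is

\begin{equation*}
F(\omega) = e^{- i \mu \omega} e^{- \sigma^2 \omega^2 / 2}
\end{equation*}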

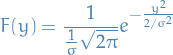

If we then choose our scales so that the mean of $f$ is zero, i.e. so that $\mu = 0$, the expression reduces to

\begin{equation*}
F(\omega) = e^{- \sigma^2 \omega^2 / 2}
\end{equation*}

which means that the Fourier transform of a normal distribution with mean $0$ and variance $\sigma^2$ is (up to normalisation) a normal distribution with mean $0$ and variance $1 / \sigma^2$.

Which tells us that the variances of the distributions $f$ and $F$ satisfy the uncertainty relation:

\begin{equation*}
\sigma_f^2 \sigma_F^2 = 1
\end{equation*}

This is the limiting case of the general inequality on the product of variances of Fourier transform pairs. In general, if $f$ is an arbitrary probability density and $F$ is its Fourier Transform, then

\begin{equation*}
\sigma_f^2 \sigma_F^2 \ge 1
\end{equation*}

Here $f$ and $F$ are two different ways of characterizing the same distribution, one in the amplitude domain and one in the frequency domain. Given either of these, the other is completely determined.

Remember, due to the Central Limit Theorem, the sample mean of i.i.d. random variables with finite variance asymptotically follows a Normal distribution.

Hence, the inequality above is saying that if we have an infinite number of realizations of some random variable with density $f$, then the variance of this will be bounded below by the inverse of the variance of the Fourier Transform.

Hmm, I'm not 100% sure about all this. How can we know for sure that the Fourier Transform of the probability density will not have a variance which decreases quicker than that of the actual density function $f$?

Why can we guarantee that the variance of the Fourier transform does not decrease faster than the variance of the density $f$?

We're saying that a random variable whose sample average converges to $f$ has a variance of $\sigma_f^2$, which is fiiine. Then we're saying that some random variable whose sample average converges to $F$ has a variance of $\sigma_F^2$, which is also fine. Then, we're saying that the variance of this random variable is always going to be greater than the limiting variances for these random variables, whiiiich you really can't say.

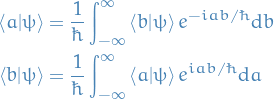

Application to Quantum mechanics

The canonical commutation relation is the fundamental relation between canonical conjugate quantities, i.e. quantities which are related by definition such that one is the Fourier transform of the other. For two operators $\hat{A}$ and $\hat{B}$ which are canonical conjugates, we have

\begin{equation*}
[ \hat{A}, \hat{B} ] = i \hbar
\end{equation*}

Equivalently, if we have the above commutation relation between two Hermitian operators, then they form a Fourier Transform pair.

If we take the commutation relation the other way around, the sign of $i \hbar$ changes, i.e. $[ \hat{B}, \hat{A} ] = - i \hbar$; it's "symmetric" in a sense.

Now, if we then take some state $\ket{\psi}$ and compute the probability amplitudes that the measurements corresponding to the operators $\hat{A}$ and $\hat{B}$ will return the eigenvalues $a$ and $b$ respectively, then we find

\begin{equation*}
\langle b | \psi \rangle = \frac{1}{\sqrt{2 \pi \hbar}} \int_{-\infty}^{\infty} e^{- i a b / \hbar} \langle a | \psi \rangle \ da
\end{equation*}

where $\bra{a}$ is the bra corresponding to the eigenstate $\ket{a}$ with the eigenvalue $a$ of the operator $\hat{A}$.

That is, the probability amplitude distributions of two conjugate variables are simply the (suitably scaled) Fourier transforms of each other.

We saw earlier that the variances of two density distributions that comprise a Fourier transform pair satisfy a variance inequality.

Heisenberg Uncertainty Principle

How is this interesting? Well, as it turns out, the position operator $\hat{x}$ and the momentum operator $\hat{p}$ have the commutation relation

\begin{equation*}
[ \hat{x}, \hat{p} ] = i \hbar
\end{equation*}

which then tells us that they are conjugate variables, i.e. the probability amplitude distributions of the two are scaled Fourier transforms of each other! Which means they satisfy the following inequality

\begin{equation*}
\sigma_x \sigma_p \ge \frac{\hbar}{2}
\end{equation*}

which is just the Heisenberg uncertainty principle!
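To see the bound being saturated, one can take a Gaussian wave packet, Fourier transform it numerically, and compare the spreads of $|\psi(x)|^2$ and $|\phi(k)|^2$. This is a sketch only: it works with the wavenumber $k = p / \hbar$ (so the expected product is $\frac{1}{2}$), uses NumPy's FFT as a stand-in for the continuous transform, and the width `sigma`, the grid, and the helper `spread` are choices made for this example.

```python
import numpy as np

sigma = 0.8                                     # arbitrary illustrative width
x = np.linspace(-40.0, 40.0, 4096, endpoint=False)
dx = x[1] - x[0]

# Gaussian amplitude chosen so that |psi|^2 has standard deviation sigma
psi = (2 * np.pi * sigma**2) ** -0.25 * np.exp(-x**2 / (4 * sigma**2))

def spread(values, weights):
    """Standard deviation of `values` under the (unnormalised) weights."""
    w = weights / weights.sum()
    mean = np.sum(values * w)
    return np.sqrt(np.sum((values - mean) ** 2 * w))

k = 2 * np.pi * np.fft.fftfreq(x.size, d=dx)    # wavenumbers matching the FFT bins
phi = np.fft.fft(psi)                           # amplitude in k-space, up to phase and scale

sigma_x = spread(x, np.abs(psi) ** 2)
sigma_k = spread(k, np.abs(phi) ** 2)
product = sigma_x * sigma_k                     # sigma_x * sigma_p / hbar
```

For the Gaussian the product equals the lower bound $\frac{1}{2}$ to numerical precision; other wave packet shapes plugged into `psi` should give a larger product.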

Bra, kets, and matrices

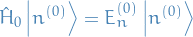

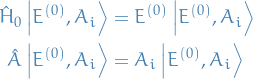

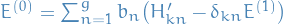

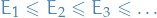

Time-independent Perturbation Theory

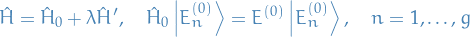

Notation

is the Hamiltonian corresponding to the unperturbed / exactly solvable system

is the Hamiltonian corresponding to the unperturbed / exactly solvable system and

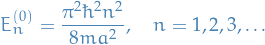

and  represent the n-th eigenvalue and eigenfunction of the unperturbed Hamiltonian

represent the n-th eigenvalue and eigenfunction of the unperturbed Hamiltonian and

and  is the energy and ket of the i-th perturbation term

is the energy and ket of the i-th perturbation term and

and  is the corrected (perturbed with all the terms) eigenvalue and eigenfunction, i.e.

is the corrected (perturbed with all the terms) eigenvalue and eigenfunction, i.e.

denote the total angular momentum (rather than a general ang. mom. as we've used it for earlier)

denote the total angular momentum (rather than a general ang. mom. as we've used it for earlier)

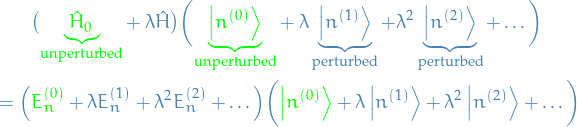

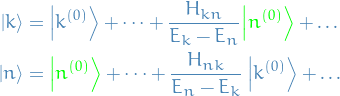

Overview

- Few problems in Quantum Theory can be solved exactly

- Helium atom: inter-electron electrostatic repulsion term in $\hat{H}$ changes the problem into one which cannot be solved analytically

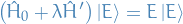

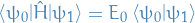

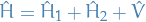

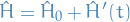

Perturbation Theory provides a method for finding approx. energy eigenvalues and eigenstates for a system whose Hamiltonian is of the form

\begin{equation*}
\hat{H} = \hat{H}_0 + \hat{H}'
\end{equation*}

where $\hat{H}_0$ is the Hamiltonian of an exactly solvable system, for which we know the eigenvalues, $E_n^{(0)}$, and eigenstates, $\ket{n^{(0)}}$, and $\hat{H}'$ is a small, time-independent perturbation.

Does not converge

Perturbation theory does not actually guarantee convergence: successive terms need not get smaller, and the series is often only asymptotic.

In many cases it is, however, Borel summable; Borel summation is a method for assigning a value to divergent series.

Short and sweet

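Let

\begin{equation*}
\hat{H} = \hat{H}_0 + \lambda \hat{H}'
\end{equation*}

where:

- $\lambda$ is a real parameter used for convenience

- $\hat{H}'$ is some small perturbation to the Hamiltonian

Then, to second order in the energy and first order in the state, the corrected eigenvalues and eigenfunctions are going to be given by:

\begin{equation*}
\begin{split}
E_n &\approx E_n^{(0)} + \lambda \bra{n^{(0)}} \hat{H}' \ket{n^{(0)}} + \lambda^2 \sum_{k \ne n} \frac{| \bra{k^{(0)}} \hat{H}' \ket{n^{(0)}} |^2}{E_n^{(0)} - E_k^{(0)}} \\
\ket{n} &\approx \ket{n^{(0)}} + \lambda \sum_{k \ne n} \frac{\bra{k^{(0)}} \hat{H}' \ket{n^{(0)}}}{E_n^{(0)} - E_k^{(0)}} \ket{k^{(0)}}
\end{split}
\end{equation*}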

"My" version (first order mainly)

It is convenient to consider the related problem of a system with Hamiltonian

\begin{equation*}
\hat{H} = \hat{H}_0 + \lambda \hat{H}'
\end{equation*}

where:

- $\lambda$ is a real parameter used for convenience

- $\hat{H}'$ is some small perturbation to the Hamiltonian

The eigenvalue problem we're trying to solve is then:

\begin{equation*}
\hat{H} \ket{n} = E_n \ket{n}
\end{equation*}

Assume $\hat{H}_0$ and $\hat{H}$ possess discrete, non-degenerate eigenvalues only, and we write:

\begin{equation*}
\hat{H}_0 \ket{n^{(0)}} = E_n^{(0)} \ket{n^{(0)}}
\end{equation*}

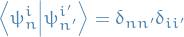

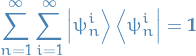

where the $\ket{n^{(0)}}$ are orthonormal.

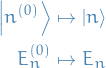

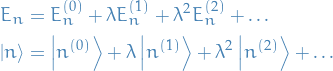

The effect of the perturbation on the eigenstates and eigenvalues is defined by the following maps:

\begin{equation*}
\ket{n^{(0)}} \mapsto \ket{n}, \quad E_n^{(0)} \mapsto E_n
\end{equation*}

where

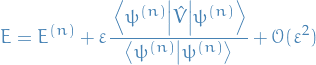

Thus, we solve the full eigenvalue problem by assuming we can expand $E_n$ and $\ket{n}$ in a series as follows:

\begin{equation*}
\begin{split}
E_n &= E_n^{(0)} + \lambda E_n^{(1)} + \lambda^2 E_n^{(2)} + \dots \\
\ket{n} &= \ket{n^{(0)}} + \lambda \ket{n^{(1)}} + \lambda^2 \ket{n^{(2)}} + \dots
\end{split}
\end{equation*}

where the correction terms $E_n^{(i)}$ and $\ket{n^{(i)}}$ are of successively higher order of "smallness": the power of $\lambda$ keeps track of this for us.

The correction terms $\ket{n^{(i)}}$ are not normalized (by default)!

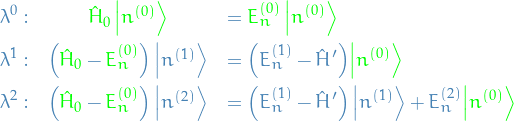

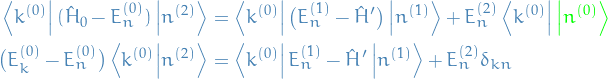

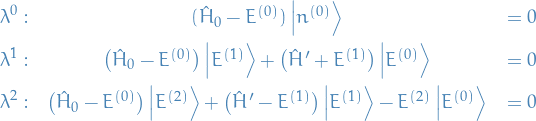

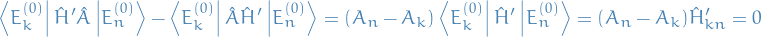

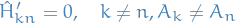

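Substitute these into the eigenvalue equation for the full Hamiltonian (can be seen above):

\begin{equation*}
\big( \hat{H}_0 + \lambda \hat{H}' \big) \big( \ket{n^{(0)}} + \lambda \ket{n^{(1)}} + \dots \big) = \big( E_n^{(0)} + \lambda E_n^{(1)} + \dots \big) \big( \ket{n^{(0)}} + \lambda \ket{n^{(1)}} + \dots \big)
\end{equation*}

and equate the terms of the same degree of $\lambda$, giving: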

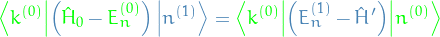

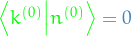

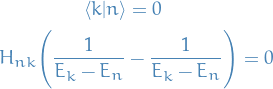

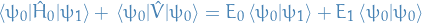

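Now let's, for the heck of it, take the inner product of the coefficients of $\lambda^1$, i.e. $\hat{H}_0 \ket{n^{(1)}} + \hat{H}' \ket{n^{(0)}} = E_n^{(0)} \ket{n^{(1)}} + E_n^{(1)} \ket{n^{(0)}}$, with some arbitrary non-perturbed state $\bra{k^{(0)}}$:

\begin{equation*}
\bra{k^{(0)}} \hat{H}_0 \ket{n^{(1)}} + \bra{k^{(0)}} \hat{H}' \ket{n^{(0)}} = E_n^{(0)} \langle k^{(0)} | n^{(1)} \rangle + E_n^{(1)} \langle k^{(0)} | n^{(0)} \rangle
\end{equation*}

which (due to $\hat{H}_0$ being Hermitian), becomes

\begin{equation*}
\big( E_k^{(0)} - E_n^{(0)} \big) \langle k^{(0)} | n^{(1)} \rangle = E_n^{(1)} \delta_{kn} - \bra{k^{(0)}} \hat{H}' \ket{n^{(0)}}
\end{equation*}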

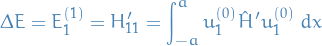

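Let's first consider the correction (of first order) to the energy (then we'll do the eigenstate afterwards) by letting $k = n$ (since $k$ is arbitrary):

\begin{equation*}
\big( E_n^{(0)} - E_n^{(0)} \big) \langle n^{(0)} | n^{(1)} \rangle = E_n^{(1)} - \bra{n^{(0)}} \hat{H}' \ket{n^{(0)}}
\end{equation*}

LHS vanishes, and we're left with

\begin{equation*}
E_n^{(1)} = \bra{n^{(0)}} \hat{H}' \ket{n^{(0)}}
\end{equation*}

which is the expression for the energy correction (of first order)!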

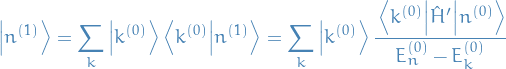

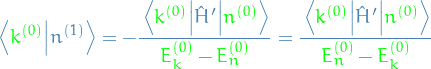

To obtain the correction to the eigenfunction itself, we explore the following:  ,

,

where  when

when  , thus

, thus

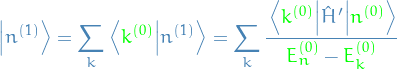

Hence, we can simply expand  in the basis of all

in the basis of all  :

:

Aaaand we have our expression for the eigenstate correction (of first order)!
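The standard first-order formulas, $E_n^{(1)} = \bra{n^{(0)}} \hat{H}' \ket{n^{(0)}}$ and the expansion of $\ket{n^{(1)}}$ with coefficients $\bra{k^{(0)}} \hat{H}' \ket{n^{(0)}} / (E_n^{(0)} - E_k^{(0)})$, can be compared against exact diagonalisation of a small matrix. The levels `E0`, the perturbation `Hp`, and the value of `lam` below are hypothetical numbers picked purely for illustration.

```python
import numpy as np

E0 = np.array([0.0, 1.0, 2.5])                  # hypothetical non-degenerate levels
H0 = np.diag(E0)
Hp = np.array([[0.2, 0.1, 0.0],
               [0.1, -0.1, 0.3],
               [0.0, 0.3, 0.4]])                # hypothetical Hermitian perturbation H'
lam = 1e-3

n = 0                                           # level whose corrections we compute
E1 = Hp[n, n]                                   # first-order energy <n|H'|n>
# First-order state coefficients <k|H'|n> / (E_n - E_k), with zero along |n>
c1 = np.array([0.0 if k == n else Hp[k, n] / (E0[n] - E0[k]) for k in range(3)])

exact_E, exact_V = np.linalg.eigh(H0 + lam * Hp)
v = exact_V[:, n] / exact_V[n, n]               # rescale so the |n> component is 1

energy_error = abs(exact_E[n] - (E0[n] + lam * E1))
state_error = np.max(np.abs(v - (np.eye(3)[n] + lam * c1)))
```

Both errors should scale like $\lambda^2$, which is what one expects from truncating the series after the first-order terms. Note that `v` uses the same "unnormalised" convention as the correction terms above.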

Preservation of orthogonality

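Let's consider the inner product of two corrected states with $n \ne m$, writing $H'_{nm} = \bra{n^{(0)}} \hat{H}' \ket{m^{(0)}}$:

\begin{equation*}
\langle n | m \rangle = \lambda \Big( \langle n^{(0)} | m^{(1)} \rangle + \langle n^{(1)} | m^{(0)} \rangle \Big) + \mathcal{O}(\lambda^2) = \lambda \Bigg( \frac{H'_{nm}}{E_m^{(0)} - E_n^{(0)}} + \frac{H'_{nm}}{E_n^{(0)} - E_m^{(0)}} \Bigg) + \mathcal{O}(\lambda^2)
\end{equation*}

where we've picked out the terms which will be non-zero in $\ket{n^{(1)}}$ and $\ket{m^{(1)}}$. Observe that the non-zero terms are of different sign, thus

\begin{equation*}
\langle n | m \rangle = \mathcal{O}(\lambda^2)
\end{equation*}

Thus orthogonality is preserved (for first order approximation).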

Notes

- The corrected $\ket{n}$ needs to be normalized, thus we better have $\langle n | n \rangle = 1$

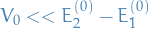

- We require that the level shift be small compared to the level spacing in the unperturbed system:

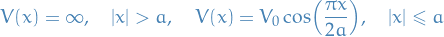

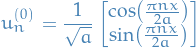

Example: Potential well

with

where  ,

,

Thus, the first order perturbation correction is

And the second order perturbation correction is

Letting  , we have

, we have

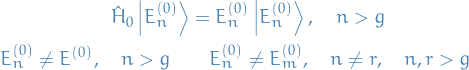

Degeneracy in Perturbation Theory

Notation

- States are ordered s.t. first $g$ states are degenerate

Stuff

Remember, this is the case where $\hat{H}_0$ has degenerate eigenstates, and we're assuming that the "real" Hamiltonian $\hat{H}$ never is degenerate (which is sort of what you'd expect in nature, I guess).

Consider the level $E^{(0)}$ with degeneracy $g$:

\begin{equation*}
\hat{H}_0 \ket{i^{(0)}} = E^{(0)} \ket{i^{(0)}}, \quad i = 1, \dots, g
\end{equation*}

Then

\begin{equation*}
E_1^{(0)} = E_2^{(0)} = \dots = E_g^{(0)} = E^{(0)}
\end{equation*}

We're just saying that we've ordered the states in such a way that the $g$-fold degenerate level occupies the first $g$ indices.

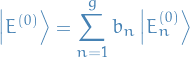

Since we have a $g$-dimensional subspace to work with for the degenerate level, instead of just finding the coefficients as in non-degenerate perturbation theory, we're now looking for the "best" linear combination of the degenerate eigenbasis, i.e. we want to find the "optimal" coefficients / projection.

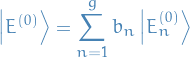

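Proceeding as for the non-degenerate case, we assume that

\begin{equation*}
E_n = E^{(0)} + \lambda E_n^{(1)} + \dots, \quad \ket{n} = \ket{\phi_n} + \lambda \ket{n^{(1)}} + \dots
\end{equation*}

where we assume we can write the following

\begin{equation*}
\ket{\phi_n} = \sum_{i=1}^{g} c_i \ket{i^{(0)}}
\end{equation*}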

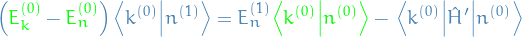

Substituting the expansions into the eigenvalue equation and equating terms of the same degree of $\lambda$, we find:

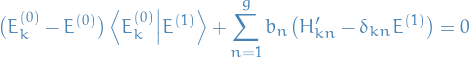

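Once again, taking the scalar product of the first-order equation with some arbitrary k-th unperturbed eigenstate $\bra{k^{(0)}}$, and writing $\ket{\phi_n}$ for the zeroth-order state, we get

\begin{equation*}
\big( E_k^{(0)} - E^{(0)} \big) \langle k^{(0)} | n^{(1)} \rangle + \bra{k^{(0)}} \hat{H}' \ket{\phi_n} = E_n^{(1)} \langle k^{(0)} | \phi_n \rangle
\end{equation*}

substituting in

\begin{equation*}
\ket{\phi_n} = \sum_{i=1}^{g} c_i \ket{i^{(0)}}
\end{equation*}

we get

\begin{equation*}
\big( E_k^{(0)} - E^{(0)} \big) \langle k^{(0)} | n^{(1)} \rangle + \sum_{i=1}^{g} c_i \bra{k^{(0)}} \hat{H}' \ket{i^{(0)}} = E_n^{(1)} \sum_{i=1}^{g} c_i \delta_{ki}
\end{equation*}

Now we consider the different cases of $k$.

- $k \le g$: degenerate states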

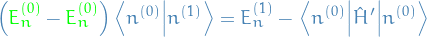

In this case we simply have  and thus the first term vanishes

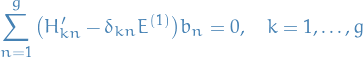

and thus the first term vanishes

which is simply a "linear algebra eigenvalue problem" (when including for all  )!

)!

We'll denote the "linear algebra eigenvalues" as roots, to distinguish from the eigenvalues from the Schrödinger equation.

where

We get the roots  then have the different cases:

then have the different cases:

distinct roots => we've broken the degeneracy of

distinct roots => we've broken the degeneracy of  , yey!

, yey!- one or more repeating roots => there's still some degeneracy left from

, aaargh!

, aaargh! - all equal roots => move on to 2nd order expansion, cuz 1st order didn't help mate!

If we have  distinct roots, we end up with

distinct roots, we end up with

Which is great; it's the same as the non-degenerate case! Therefore, we'd like to arrange for this to always be the case.

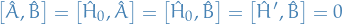

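Suppose we can find some observable represented by an operator $\hat{A}$ such that

\begin{equation*}
[ \hat{H}_0, \hat{A} ] = [ \hat{H}', \hat{A} ] = 0
\end{equation*}

Then there are simultaneous eigenstates of $\hat{H}_0$ and $\hat{A}$:

\begin{equation*}
\hat{H}_0 \ket{i^{(0)}} = E^{(0)} \ket{i^{(0)}}, \quad \hat{A} \ket{i^{(0)}} = a_i \ket{i^{(0)}}, \quad i = 1, \dots, g
\end{equation*}

If and only if the eigenvalues $a_i$ are distinct, then we have a C.S.O.C., and we can write

\begin{equation*}
\ket{i^{(0)}} = \ket{E^{(0)}, a_i}
\end{equation*}

Thus,

\begin{equation*}
\bra{i^{(0)}} [ \hat{H}', \hat{A} ] \ket{j^{(0)}} = 0
\end{equation*}

which is

\begin{equation*}
(a_j - a_i) \bra{i^{(0)}} \hat{H}' \ket{j^{(0)}} = 0
\end{equation*}

Therefore,

\begin{equation*}
\bra{i^{(0)}} \hat{H}' \ket{j^{(0)}} = 0 \quad \text{whenever} \quad a_i \ne a_j
\end{equation*}

Hence, if $a_i \ne a_j$ for all $i \ne j$, then $H'_{ij}$ is diagonal, as wanted.

Now, what if $a_i$ are not distinct?! We then look for another operator $\hat{B}$, such that

\begin{equation*}
[ \hat{H}_0, \hat{B} ] = [ \hat{H}', \hat{B} ] = [ \hat{A}, \hat{B} ] = 0
\end{equation*}

i.e. $\{ \hat{H}_0, \hat{A}, \hat{B} \}$ is a C.S.O.C., and letting the states be labelled by the pairs $(a_i, b_i)$ we can repeat the above argument.

We're saying that if we can find some operator which commutes with both $\hat{H}_0$ and $\hat{H}'$, we can make $\hat{H}'$ diagonal in the degenerate subspace, i.e. making our lives super-easy above.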
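The degenerate recipe (diagonalise $\hat{H}'$ restricted to the degenerate subspace and read off the roots) can be tried on a toy matrix. Everything numeric below is hypothetical: a two-fold degenerate level `E0[0] = E0[1]`, one further level, a made-up Hermitian `Hp`, and a small `lam`.

```python
import numpy as np

# Hypothetical spectrum: the first g = 2 unperturbed states are degenerate
E0 = np.array([1.0, 1.0, 3.0])
H0 = np.diag(E0)
Hp = np.array([[0.0, 0.5, 0.1],
               [0.5, 0.0, 0.2],
               [0.1, 0.2, 0.3]])                # hypothetical Hermitian perturbation H'
lam = 1e-3
g = 2

# First-order shifts are the roots of H' restricted to the degenerate subspace;
# the columns of `coeffs` are the "best" linear combinations of the degenerate states
roots, coeffs = np.linalg.eigh(Hp[:g, :g])
first_order = E0[0] + lam * roots

# Exact lowest g eigenvalues of the full Hamiltonian for comparison
exact = np.linalg.eigvalsh(H0 + lam * Hp)[:g]
```

Here the two roots are distinct ($\pm 0.5$), so the degeneracy is fully lifted at first order, and `first_order` should match `exact` up to terms of order $\lambda^2$.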

- $k > g$: non-degenerate states

We simply take our expansion for $\ket{n^{(1)}}$ and do exactly what we did for the non-degenerate case!

Example

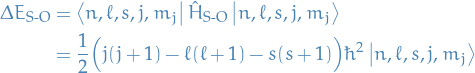

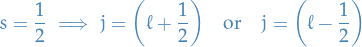

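Central potential, spin-(1/2), spin-orbit interaction:

\begin{equation*}
\hat{H}' = f(r) \ \hat{\mathbf{L}} \cdot \hat{\mathbf{S}}
\end{equation*}

We know that

\begin{equation*}
[ \hat{\mathbf{L}} \cdot \hat{\mathbf{S}}, \hat{J}^2 ] = [ \hat{\mathbf{L}} \cdot \hat{\mathbf{S}}, \hat{J}_z ] = [ \hat{\mathbf{L}} \cdot \hat{\mathbf{S}}, \hat{L}^2 ] = [ \hat{\mathbf{L}} \cdot \hat{\mathbf{S}}, \hat{S}^2 ] = 0
\end{equation*}

so if we use the coupled basis $\ket{j, m_j, \ell, s}$, we can use the non-degenerate theory to compute the 1st order energy shifts!

but

\begin{equation*}
[ \hat{H}', \hat{L}_z ] \ne 0, \quad [ \hat{H}', \hat{S}_z ] \ne 0
\end{equation*}

because of the $\hat{\mathbf{L}} \cdot \hat{\mathbf{S}}$ term in $\hat{H}'$.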

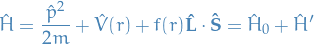

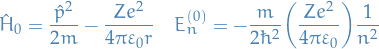

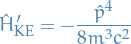

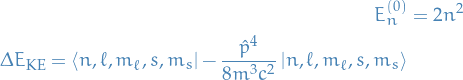

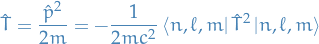

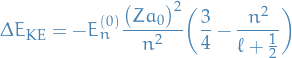

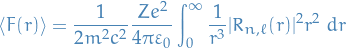

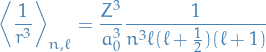

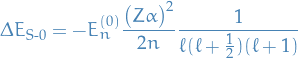

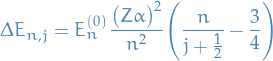

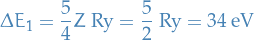

Example: Hydrogen fine structure

Notation

- $\hat{H}_{SO}$ denotes the spin-orbit term, whose physical origin is the interaction between the intrinsic magnetic dipole moment of the electron and the magnetic field due to the electron's orbital motion in the electric field of the nucleus

Stuff

with

We start with the kinetic energy

Where

Therefore

Which apparently gives us

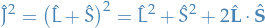

And for the spin-orbit interaction we have

which gives us

Applying this to some ket

Thus,

where

thus, if

Which gives us

implying

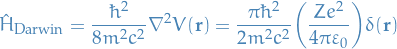

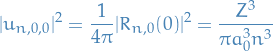

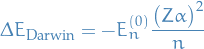

And finally for the Darwin-correction

Observe that $\psi_{nlm}(\mathbf{r}) \to 0$ as $r \to 0$ for $l \neq 0$; then

where

Which gives us the final Darwin correction

Giving the total correction

Example: Helium atom

Notation

- $|\downarrow\rangle$ represents the spin-down state
- $|\uparrow\rangle$ represents the spin-up state
- $|S, M_S\rangle$ are the states for the coupled basis, where $S$ is the total spin quantum number and $M_S$ is the total magnetic quantum number

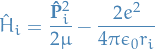

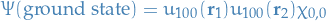

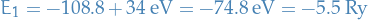

Neglecting mutual Coulomb repulsion between the electrons

Neglecting the mutual Coulomb repulsion between the electrons, we end up with the Hamiltonian

It turns out that this yields a ground-state energy of just $-2 Z^2 \, \mathrm{Ry} \approx -108.8 \ \text{eV}$ (with $Z = 2$),

but experimentally we find about $-79.0 \ \text{eV}$.

For the Helium atom we use the convention of denoting the ground state with  rather than  as for the Hydrogen atom.

Stuff

In the simplest model of the helium atom, the Hamiltonian is

where

The term added to $\hat{H}_1 + \hat{H}_2$ represents the repulsion of the two electrons, and in each $\hat{H}_i$ we have the negative term which represents the attraction to the nucleus.

Also, observe that this is symmetric under permutation of the indices which label the two electrons. Thus, the electrons are indistinguishable, as one would want.

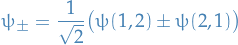

The states of the coupled representation for the two spin-half electrons are the three triplet states:

and the singlet state:

- triplet states are symmetric under permutation

- singlet state is anti-symmetric under permutation

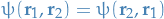

The overall 2-electron wavefunction is a product of a spatial wavefunction and a spin function:

The tensor product between the spatial wavefunction, $\psi(\mathbf{r}_1, \mathbf{r}_2)$, and the spin function, $\chi$, represents some function $\Psi$ such that

where $\chi$ is as defined above.

Thus, the total 2-electron wavefunction has the following symmetries:

| | symmetry of $\chi$ (spin) | symmetry of $\psi$ (spatial) | symmetry of $\Psi$ (total) |
|---|---|---|---|
| $S = 0$ (singlet) | anti | sym | anti |
| $S = 1$ (triplet) | sym | anti | anti |

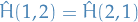

Consider the case of the Helium atom, where (if we're neglecting the inter-electron interactions) we would have the spatial solutions

since we can just use separation of variables and solve the Schrödinger eqn. for each electron separately, both having identical solutions.

Now, suppose further that $n = 1$, and thus $l = 0$ and $m = 0$, then

Now consider the case where they would swap places, i.e. we were to interchange them (we're assuming they sit directly opposite each other, since in a 2-electron orbit they're almost always opposite each other), then

Now, we know that

Therefore, $\psi$, as we have above, is symmetric:

Thus,

BUT we know that two fermions cannot occupy the same state at the same time, hence the above is not sufficient! We need the wave-functions to be different. Further, for the wave-function of the entire Helium atom to stay the same (up to a sign) we require it to be anti-symmetric under interchange of the electrons (for the total wavefunction $\Psi$, that is). And this is why we introduce the spin function $\chi$ described earlier.

Ground state

To compute the first-order correction to the ground-state energy, we compute the expectation value of the perturbation $\hat{H}'$ with respect to the unperturbed ground-state wavefunction:

which gives us a correction of

giving us the first order estimate of the ground-state energy

which is pretty close to the experimentally observed value of about $-79.0 \ \text{eV}$.
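A quick numeric sanity check of the first-order estimate. This is only a sketch: it assumes the standard textbook results that the unperturbed energy is $-2 Z^2 \, \mathrm{Ry}$ and that the expectation value of the electron-electron repulsion in the $1s^2$ state is $\frac{5}{4} Z \, \mathrm{Ry}$. The names `RYDBERG_EV`, `unperturbed_energy` and `first_order_shift` are made up for this illustration.

```python
# First-order perturbation estimate of the helium ground-state energy.
RYDBERG_EV = 13.605693  # hydrogen ground-state binding energy in eV
Z = 2                   # nuclear charge of helium


def unperturbed_energy(z: int) -> float:
    """Two independent hydrogen-like 1s electrons: E = -2 Z^2 Ry."""
    return -2 * z**2 * RYDBERG_EV


def first_order_shift(z: int) -> float:
    """Assumed standard result <H'> = (5/4) Z Ry for the 1s^2 configuration."""
    return 1.25 * z * RYDBERG_EV


e0 = unperturbed_energy(Z)
de = first_order_shift(Z)
print(f"unperturbed: {e0:.1f} eV, shift: {de:.1f} eV, estimate: {e0 + de:.1f} eV")
```

The estimate comes out around $-74.8$ eV, i.e. within roughly 5% of the measured value.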

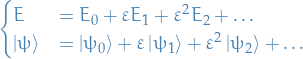

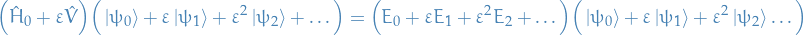

Stuff

Define a new operator

With

Then we want to expand wrt.

Setting up the Schrödinger equation, we have

Now, collecting terms involving the different factors of $\lambda$, we have:

For $\lambda^0$ we find:

and therefore $|\psi^{(0)}\rangle$ has to be one of the unperturbed eigenfunctions $|\psi_n^{(0)}\rangle$ and $E^{(0)} = E_n^{(0)}$, i.e. the corresponding unperturbed eigenvalue.

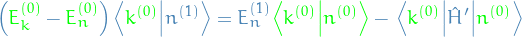

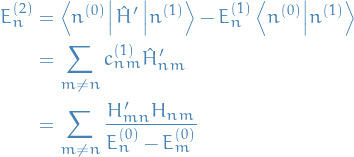

For $\lambda^1$ we have:

Taking the scalar product of this with $\langle \psi_n^{(0)} |$:

Since $\hat{H}_0$ is Hermitian, so that $\langle \psi_n^{(0)} | \hat{H}_0 = E_n^{(0)} \langle \psi_n^{(0)} |$, we have

Hence, the eigenvalue of the Hamiltonian in this case becomes:

This is the correction to the n-th energy level! So to obtain the complete correction due to the perturbation, we have to do this for each of the eigenfunctions which make up the wave-function of the entire system we're looking at.
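To see the first-order formula at work, here is a small sketch on a two-level system. It assumes the standard result $E_n \approx E_n^{(0)} + \lambda \langle \psi_n^{(0)} | \hat{H}' | \psi_n^{(0)} \rangle$ and compares it against the exact eigenvalue of a real symmetric $2 \times 2$ matrix. All numbers and function names are invented for the illustration.

```python
import math


def exact_lower_level(e1, e2, a, b, d, lam):
    """Lower eigenvalue of the real symmetric matrix H0 + lam * H'."""
    h11 = e1 + lam * a
    h22 = e2 + lam * d
    h12 = lam * b
    mean = 0.5 * (h11 + h22)
    half_gap = math.hypot(0.5 * (h11 - h22), h12)
    return mean - half_gap


def first_order_lower_level(e1, a, lam):
    """E_1^(0) + lam * <1|H'|1>, the first-order estimate."""
    return e1 + lam * a


E1, E2 = 1.0, 3.0         # unperturbed levels (illustrative)
A, B, D = 0.5, 0.2, -0.3  # matrix elements of H': diagonal A, D and off-diagonal B


def first_order_error(lam):
    """Absolute difference between the exact and first-order lower level."""
    exact = exact_lower_level(E1, E2, A, B, D, lam)
    return abs(exact - first_order_lower_level(E1, A, lam))


for lam in (0.1, 0.01):
    print(lam, first_order_error(lam))
```

Shrinking $\lambda$ by a factor of 10 shrinks the error by roughly a factor of 100, as expected when the leftover is of second order in $\lambda$.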

He atom

Time-dependent Perturbation Theory

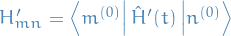

Notation

- $\hat{H}_0$ is the Hamiltonian corresponding to the unperturbed / exactly solvable system
- $E_n^{(0)}$ and $|\psi_n^{(0)}\rangle$ represent the n-th eigenvalue and eigenfunction of the unperturbed Hamiltonian
- $E_n^{(i)}$ and $|\psi_n^{(i)}\rangle$ are the energy and ket of the i-th perturbation term
- $E_n$ and $|\psi_n\rangle$ are the corrected (perturbed with all the terms) eigenvalue and eigenfunction, i.e. the sums of the corrections of all orders
- $\rho$ is the density of final states

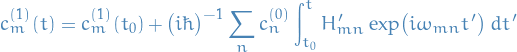

Expression for

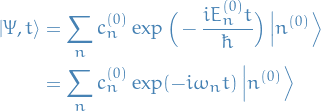

Solution to the TDSE for the unperturbed Hamiltonian can be written

Generalize to the perturbed Hamiltonian

the coefficients $c_n$ become time-dependent:

Observe the lack of $\lambda$ here!!!

We're not yet using the perturbation here, which would be

This comes in the next section.

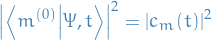

Probability of finding the system in the state $|\psi_n^{(0)}\rangle$ at time $t$ is then

where we have used the orthonormality of the $|\psi_n^{(0)}\rangle$.

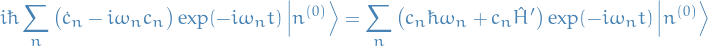

Substituting into TDSE:

which gives:

Taking scalar product with arbitrary unperturbed state $\langle \psi_m^{(0)} |$:

which gives:

Perturbation

Now consider the Hamiltonian related to the perturbed one above:

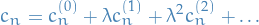

Assume we can expand $c_n(t)$ in power series:

Substitute in the equation for $\dot{c}_m$ derived above (factor of $\lambda$ on RHS is from $\lambda \hat{H}'$ now instead of $\hat{H}'$):

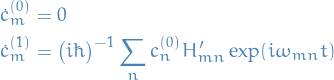

Equate terms of same degree in $\lambda$:

- Zeroth order is time-independent; since to this order the Hamiltonian is time-independent => recover unperturbed result

Integrating first-order correction gives:

Suppose initially the system is in an eigenstate of $\hat{H}_0$, say $|\psi_k^{(0)}\rangle$; then for $t > t_0$, the probability of finding the system in a different state $|\psi_m^{(0)}\rangle$ at time $t$ follows from the first-order coefficient.

Thus, the transition probability of finding the system at a later time, $t$, in the state $|\psi_m^{(0)}\rangle$ where $m \neq k$, is given by
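A numeric sketch of the first-order transition probability. It assumes the standard form $c_m^{(1)}(t) = \frac{1}{i\hbar} \int_0^t H'_{mk}(t') e^{i \omega_{mk} t'} \, dt'$ with the perturbation switched on at $t_0 = 0$, and checks it against the well-known closed form for a constant matrix element. Units with $\hbar = 1$ and the names `v_mk`, `transition_probability` are choices made just for this example.

```python
import cmath
import math

HBAR = 1.0  # natural units, an assumption for this sketch


def transition_probability(v_mk, omega_mk, t, steps=20_000):
    """|c_m^(1)(t)|^2 from a midpoint-rule integral of v_mk(t') * exp(i * omega_mk * t')."""
    dt = t / steps
    integral = 0j
    for j in range(steps):
        tp = (j + 0.5) * dt
        integral += v_mk(tp) * cmath.exp(1j * omega_mk * tp) * dt
    amplitude = integral / (1j * HBAR)
    return abs(amplitude) ** 2


def constant_perturbation_formula(v, omega_mk, t):
    """Closed form 4 |V|^2 sin^2(omega t / 2) / (hbar omega)^2 for a constant matrix element V."""
    return 4 * v**2 * math.sin(0.5 * omega_mk * t) ** 2 / (HBAR * omega_mk) ** 2


v0, omega, t_final = 0.05, 2.0, 3.0  # illustrative values
numeric = transition_probability(lambda tp: v0, omega, t_final)
analytic = constant_perturbation_formula(v0, omega, t_final)
print(numeric, analytic)
```

Passing a different callable for `v_mk` (e.g. a sinusoid) gives the harmonic case without changing anything else.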

TODO Time-independent perturbations for time-dependent wave equation

- Suppose $\hat{H}'$ is actually independent of time

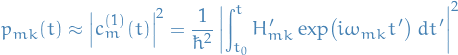

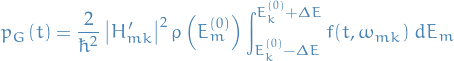

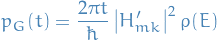

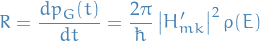

Fermi's Golden Rule

Interested in transitions not to a single state, but to a group of final states, $G$, in some range:

e.g. transitions to continuous part of energy-spectrum.

Total transition probability:

Assume $\Delta E$ to be small, s.t. we can treat $\rho(E_m)$ and $|H'_{mk}|^2$ as constant wrt. $E_m$ on this interval:

Change of variables $x = \omega_{mk} t / 2$, we get

where we've used

Number of transitions per unit time, the transition rate, $R$, is then just
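The key integral in this kind of derivation is usually $\int_{-\infty}^{\infty} \frac{\sin^2 x}{x^2} \, dx = \pi$, which is what turns the $\sin^2$ factor into a rate linear in $t$. Below is a sketch that checks the integral numerically and evaluates the rate, assuming the standard form of the Golden Rule, $R = \frac{2\pi}{\hbar} |H'_{mk}|^2 \rho$. The function names and the cutoff are illustrative choices.

```python
import math


def sinc_squared_integral(cutoff=200.0, steps=400_000):
    """Midpoint-rule estimate of the integral of sin(x)^2 / x^2 over [-cutoff, cutoff]."""
    dx = 2 * cutoff / steps
    total = 0.0
    for j in range(steps):
        x = -cutoff + (j + 0.5) * dx  # midpoints never hit x = 0 for even `steps`
        total += (math.sin(x) / x) ** 2 * dx
    return total


def golden_rule_rate(h_mk, rho, hbar=1.0):
    """Assumed standard form R = (2 pi / hbar) |H'_mk|^2 rho."""
    return 2 * math.pi / hbar * abs(h_mk) ** 2 * rho


integral = sinc_squared_integral()
rate = golden_rule_rate(h_mk=0.05, rho=10.0)
print(integral, math.pi, rate)
```

The finite cutoff leaves a tail of order `1 / cutoff`, so the estimate sits slightly below $\pi$.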

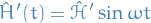

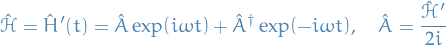

Harmonic Perturbations

Notation

is the time-independent Hermitian operator


Stuff

- The perturbation is a sinusoidal function of time which is turned on at time $t = 0$

Suppose that

where

is the time-independent Hermitian operator, which we write


Radiation

Notation

$\hat{\mathbf{D}}$ is the dipole operator

Electromagnetic radiation

$\hat{\mathbf{D}} = -e \hat{\mathbf{r}}$ is the dipole operator, where $e$ is the unit charge

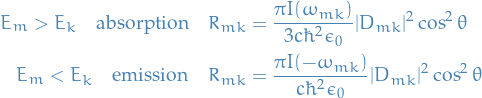

Einstein A-B coefficients

Notation

- $R_{mk}$ is the absorption rate
- $R_{km}$ is the emission rate
- $A_{km}$: Einstein coeff. for spontaneous emission
- $B_{km}$: Einstein coeff. for stimulated emission
- $D_{mk}$: dipole matrix elements

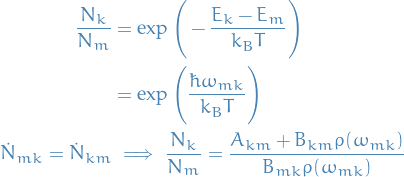

Spontaneous emission (thermodynamic argument)

We have

For absorption we have

![\begin{equation*}
\begin{split}
\dot{N}_{mk} &= N_k \rho(\omega_{mk}) B_{mk} \\
\dot{N}_{mk} &= R_{mk} N_k \\
B_{mk} &= \frac{R_{mk}}{\rho(\omega_{mk})} = c \frac{R_{mk}}{I(\omega_{mk})} \\
\implies B_{mk} &= \frac{\pi}{3 \hbar^2 \epsilon_0} |D_{mk}|^2
\end{split}
\end{equation*}](../../assets/latex/quantum_mechanics_a4141c89b669d5aacc3b6ecd2195364fb6559e66.png)

Now, suppose we have emission, with transition $m \to k$:

Note that this is $B_{km}$, NOT $B_{mk}$ as introduced earlier.

The distribution between the number of particles in the different states is then given by the Boltzmann distribution

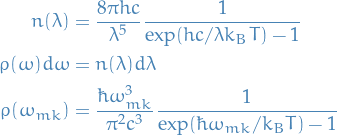

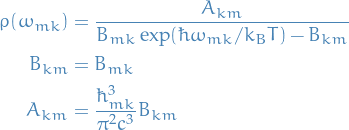

Planck's law tells us that black-body radiation follows

Which we can rewrite as

Substituting back into the expression for spontaneous emission

One obtains the same result in QED.
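The thermodynamic argument can be checked numerically. The sketch below assumes the standard forms: Planck energy density per unit angular frequency $\rho(\omega) = \frac{\hbar \omega^3}{\pi^2 c^3} \frac{1}{e^{\hbar \omega / k_B T} - 1}$, equal stimulated coefficients $B_{mk} = B_{km}$, and $A = \frac{\hbar \omega^3}{\pi^2 c^3} B$. With Boltzmann populations, absorption and total emission should then balance. The numerical values of `omega`, `temperature` and `b` are arbitrary illustrations.

```python
import math

HBAR = 1.054571817e-34  # J s
C = 2.99792458e8        # m / s
K_B = 1.380649e-23      # J / K


def planck_energy_density(omega, temperature):
    """Black-body energy density per unit angular frequency, rho(omega)."""
    x = HBAR * omega / (K_B * temperature)
    return HBAR * omega**3 / (math.pi**2 * C**3) / math.expm1(x)


def spontaneous_coefficient(b, omega):
    """Assumed relation A = hbar omega^3 / (pi^2 c^3) * B, with B_mk = B_km = b."""
    return HBAR * omega**3 / (math.pi**2 * C**3) * b


omega = 3.0e15        # rad / s, roughly an optical transition
temperature = 5000.0  # K
b = 1.0e20            # stimulated coefficient, arbitrary value

n_k = 1.0                                                     # lower-level population
n_m = n_k * math.exp(-HBAR * omega / (K_B * temperature))     # Boltzmann factor

rho = planck_energy_density(omega, temperature)
absorption = n_k * rho * b
emission = n_m * (spontaneous_coefficient(b, omega) + rho * b)
print(absorption, emission)
```

The two printed rates agree to floating-point precision, which is just the statement that this $A/B$ ratio is the one forced by equilibrium.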

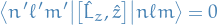

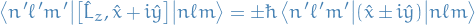

Selection rules

Goal is to evaluate the dipole matrix elements $\mathbf{D}_{mk}$.

Hydrogenic atom

- electric dipole operator is spin-independent

- work in uncoupled basis and ignore spin

Which implies

Which implies either of the following is true

This is called a selection rule.

Doing the same for $\hat{x} \pm i \hat{y}$, we get

Considering the matrix elements, we get

which becomes

so we deduce that

giving the selection rule $\Delta l = \pm 1$.

We then conclude that the electric dipole transitions are only possible if

Which is due to

hence $\mathbf{D}_{mk}$ would be zero unless the selection rules above are satisfied.

Parity Selection Rule

- Under the parity operator $\hat{P}$, the electric dipole operator is odd
- Multi-electron atoms: see notes :)

Quantum Scattering Theory

Notation

- $\theta$ is the scattering angle
- $\phi$ is the incident angle
- $\hbar \mathbf{k}$ is the incident momentum
- $\hbar \mathbf{k}'$ is the scattering momentum (i.e. momentum after being scattered)
- $\mathbf{p} = \hbar \mathbf{k}$, thus when we talk about the direction and so on of $\mathbf{k}$ (wave-vector), it's equivalent to the angle of the momentum
- $d\Omega$ is the solid angle
- $R$: rate of transitions from initial to final plane-wave states
- $\rho(E_{k'})$ is the density of the final states: $\rho(E_{k'}) \, dE_{k'}$ is the number of final states with energy in the range $E_{k'}$ to $E_{k'} + dE_{k'}$

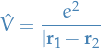

$V(\mathbf{r})$ denotes fixed potential (i.e. we say the "potential source" is fixed at some point and $\mathbf{r}$ is then the position of the incident particle, and we treat the potential from the fixed scattering-particle as a regular "external" field)

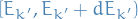

Setup

- Beam of particles

- Each of momentum $\hbar \mathbf{k}$

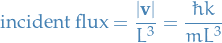

The incident flux is the number of incident particles crossing unit area perpendicular to the beam direction per unit time.

The scattered flux is the number of particles scattered into the element of solid angle $d\Omega$ about the direction $\theta$, $\phi$, per unit time per unit solid angle.

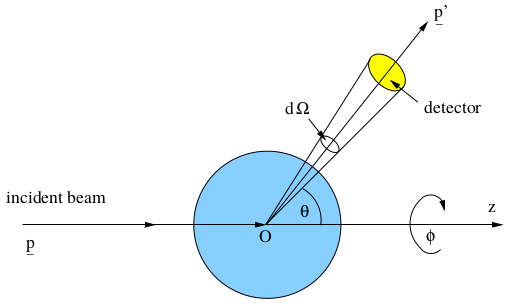

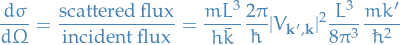

The differential cross-section is usually denoted $\frac{d\sigma}{d\Omega}$ and is defined to be the ratio of the scattered flux to the incident flux:

The differential cross-section thus has dimensions of area: ![$[L]^2$](../../assets/latex/quantum_mechanics_30b4b5cb961eecc96c323ceea58d701ae12b25ef.png)

Total cross-section is then

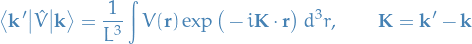

Born approximation

- Can use time-dependent perturbation theory to approximate the cross-section

- Assume interaction between particle and scattering centre is localized to the region around $\mathbf{r} = 0$

Hamiltonian

and treat $V(\mathbf{r})$ as the perturbation

- Wave-functions are non-normalizable, therefore we restrict to "potential-well" scenario, as we can take the width of the well as large as we "want"

- Since we're working in 3D: box-normalization

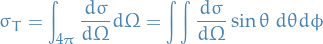

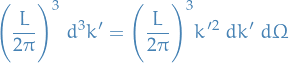

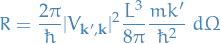

Density of final states

- Final-state vector $\mathbf{k}'$ is a point in k-space
- All $\mathbf{k}'$ form a cubic lattice with lattice spacing $2\pi / L$ (because of the potential well approx. discretizing the energy)
- Volume of k'-space per lattice point is $(2\pi / L)^3$

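# of states in volume element $d^3 k'$ is $\left( \frac{L}{2\pi} \right)^3 d^3 k' = \left( \frac{L}{2\pi} \right)^3 k'^2 \, dk' \, d\Omega$, using spherical coordinates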
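The counting argument can be tested by brute force. This sketch assumes box normalization with allowed wave-vectors $\mathbf{k}' = \frac{2\pi}{L} (n_x, n_y, n_z)$ for integer $n_i$, counts the lattice points in a spherical shell, and compares with $\left( \frac{L}{2\pi} \right)^3 k'^2 \, dk' \, d\Omega$ integrated over the shell and over all solid angles. The shell radii and box length are arbitrary illustrative choices.

```python
import math


def count_states_in_shell(k_min, k_max, box_length):
    """Count allowed k' = (2 pi / L) * (nx, ny, nz) with k_min <= |k'| < k_max."""
    spacing = 2 * math.pi / box_length
    n_max = int(k_max / spacing) + 1
    count = 0
    for nx in range(-n_max, n_max + 1):
        for ny in range(-n_max, n_max + 1):
            for nz in range(-n_max, n_max + 1):
                k = spacing * math.sqrt(nx * nx + ny * ny + nz * nz)
                if k_min <= k < k_max:
                    count += 1
    return count


def continuum_estimate(k_min, k_max, box_length):
    """(L / 2 pi)^3 * integral of k'^2 dk' dOmega over the shell and the full sphere."""
    prefactor = (box_length / (2 * math.pi)) ** 3
    return prefactor * 4 * math.pi / 3 * (k_max**3 - k_min**3)


box_length = 2 * math.pi  # chosen so that the lattice spacing is 1
counted = count_states_in_shell(10.0, 20.0, box_length)
estimate = continuum_estimate(10.0, 20.0, box_length)
print(counted, estimate)
```

The agreement improves as the shell contains more lattice points, which is the sense in which the box size can be taken as large as we "want".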

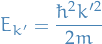

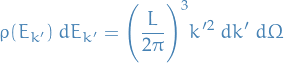

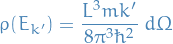

Energy is

thus $\rho(E_{k'})$ is the density of states per unit energy, where $E_{k'}$ is the energy corresponding to wave-vector $\mathbf{k}'$. Therefore, $\rho(E_{k'}) \, dE_{k'}$ is the # of states with energy in the desired interval and with $\mathbf{k}'$ pointing into the solid angle $d\Omega$ about the direction $(\theta, \phi)$.

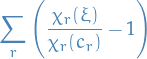

Final result for density of states

Incident flux

Box normalization corresponds to one particle per volume $L^3$

Scattered flux

-

- Is # of particles scattered into $d\Omega$ per unit time
- Scattered flux therefore obtained by dividing by $d\Omega$ to get # per unit time per unit solid angle

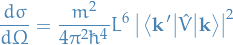

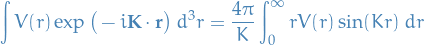

Differential Cross-section for Elastic Scattering

Can compute the cross-section using scattered flux and incident flux found earlier

If potential is real, energy conservation implies elastic scattering, i.e. $|\mathbf{k}'| = |\mathbf{k}|$.

The Born approximation to the differential cross-section then becomes

with

where $\mathbf{q}$ is called the wave-vector transfer.

(this is just the 3-dimensional Fourier transform of the potential energy function.)

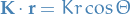

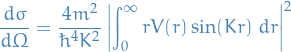

Scattering by Central potentials

- Can simplify further

Work in polar coordinates $(r, \theta', \phi')$, which refer to the wave-vector transfer $\mathbf{q}$ as polar axis, so that

Then

Born approximation then becomes

which is independent of $\phi$ but depends on the scattering angle, $\theta$, through $q$.

Trigonometry gives $q = 2k \sin(\theta / 2)$ for elastic scattering.
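As a worked example of the central-potential case, here is a sketch for a Yukawa potential $V(r) = V_0 e^{-\mu r} / r$. It assumes the standard Born form $\frac{d\sigma}{d\Omega} = \left( \frac{m}{2\pi\hbar^2} \right)^2 |\tilde{V}(q)|^2$ with $\tilde{V}(q) = \frac{4\pi}{q} \int_0^\infty r V(r) \sin(qr) \, dr$, and compares the numerical result with the known closed form $\tilde{V}(q) = \frac{4\pi V_0}{q^2 + \mu^2}$. Units with $m = \hbar = 1$ and all function names are choices made for this illustration.

```python
import math


def central_fourier_transform(potential, q, r_max=60.0, steps=200_000):
    """(4 pi / q) * integral_0^r_max of r V(r) sin(q r) dr, by the midpoint rule."""
    dr = r_max / steps
    total = 0.0
    for j in range(steps):
        r = (j + 0.5) * dr
        total += r * potential(r) * math.sin(q * r) * dr
    return 4 * math.pi / q * total


def wave_vector_transfer(k, theta):
    """q = 2 k sin(theta / 2) for elastic scattering."""
    return 2 * k * math.sin(0.5 * theta)


def born_cross_section(potential, k, theta, mass=1.0, hbar=1.0):
    """Assumed standard Born form (m / (2 pi hbar^2))^2 * |V~(q)|^2."""
    q = wave_vector_transfer(k, theta)
    v_q = central_fourier_transform(potential, q)
    return (mass / (2 * math.pi * hbar**2)) ** 2 * v_q**2


V0, MU = 1.0, 1.0  # illustrative strength and inverse range


def yukawa(r):
    return V0 * math.exp(-MU * r) / r


k, theta = 1.5, math.pi / 3
q = wave_vector_transfer(k, theta)
numeric = born_cross_section(yukawa, k, theta)
analytic = (2 * V0 / (q**2 + MU**2)) ** 2  # closed form with m = hbar = 1
print(numeric, analytic)
```

Taking `MU` towards zero recovers the Coulomb-like $1 / q^4 \propto 1 / \sin^4(\theta / 2)$ angular dependence.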

Quantum Rotator

- Two particles of mass $m_1$ and $m_2$ separated by fixed distance $r$
- Effective description of the rotational degrees of freedom of a diatomic molecule
- Choose centre-of-mass as origin: system is completely specified by angles $\theta$ and $\phi$ (since $r$ is fixed)
- Neglecting vibrational degrees of freedom, both $r_1$ and $r_2$ are constant, and $r = r_1 + r_2$

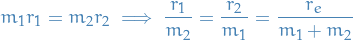

Since origin is frame of CM:

(Classically) Moment of inertia of the system is:

where

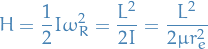

Classical mechanics:

where $\omega$ is the angular velocity of the system. The energy can be expressed as

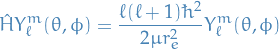

Correspondence principle:

Since we're neglecting vibration, $r$ is fixed, hence the wave function is independent of $r$:

Two-body scattering

- So far assumed beam of particles scattered by fixed scattering centre; interaction described by $V(\mathbf{r})$

- Useful for electron-atom scattering due to regarding the atom as infinitely heavy

- Consider two-particle system; turns out it's the same!

Hamiltonian with relative separation

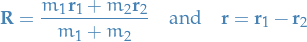

Centre of mass and relative position vectors

Then

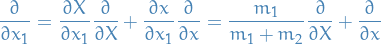

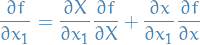

Rewrite gradient operators $\nabla_1$ and $\nabla_2$ by

where

and so on.

To see this just apply the differential operator wrt. $x_1$ to some function $f(\mathbf{R}, \mathbf{r})$:

Then

where

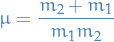

with $\mu = \frac{m_1 m_2}{m_1 + m_2}$ called the reduced mass

Can be viewed as