Averaged Perceptron

Overview

Averaged perceptron is an optimization algorithm with only one hyperparameter: the number of iterations.

It keeps updating its weights toward separating the labels based on the inputs for as many iterations as you specify, so it is prone to overfitting.

Notation

- $x$ - a single input vector, of $D$ dimensions
- $y$ - a single label, $y \in \{-1, +1\}$
- $x_d$ - the $d$th component of some input vector
- $w_d$ - weight for the $d$th component of the inputs
- $b$ - bias
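With this notation, the score that a linear classifier such as the perceptron thresholds can be written compactly. The symbol $a$ (the activation) is an assumption introduced here for illustration, as are the symbol names $x_d$, $w_d$, $b$, $D$ and $y$ for the input components, weights, bias, number of dimensions and label:

$$
a = \sum_{d=1}^{D} w_d x_d + b
$$

Assuming labels in $\{-1, +1\}$, an example is classified correctly when $a$ has the same sign as its label, that is, when $y\,a > 0$.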

Algorithm

Training

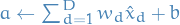

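- for each of the specified iterations do
  - for all training examples (an input vector and its label) do
    - if the current weights and bias misclassify the example then
      - update the weight for every input component, and the bias, toward the example's label
    - end if
  - end for
- end for
- return the averaged weights and bias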
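The training loop above can be sketched in Python. This is a minimal sketch under stated assumptions, not a definitive implementation. It assumes labels in {-1, +1} and uses one common formulation that keeps a second, counter-weighted copy of the updates so the average can be computed at the end. The names `averaged_perceptron_train`, `max_iter`, `u`, `beta` and `c` are introduced here for illustration and are not taken from the notes.

```python
from typing import List, Sequence, Tuple


def averaged_perceptron_train(
    data: Sequence[Tuple[Sequence[float], int]],
    max_iter: int,
) -> Tuple[List[float], float]:
    """Train an averaged perceptron (sketch; labels assumed to be -1 or +1).

    `data` must be non-empty; each item is (input vector, label).
    """
    dims = len(data[0][0])
    w = [0.0] * dims   # current weights
    b = 0.0            # current bias
    u = [0.0] * dims   # cached weights: updates scaled by the counter
    beta = 0.0         # cached bias
    c = 1              # example counter (hypothetical name)

    for _ in range(max_iter):
        for x, y in data:
            activation = sum(w_d * x_d for w_d, x_d in zip(w, x)) + b
            if y * activation <= 0:  # misclassified, or exactly on the boundary
                for d in range(dims):
                    w[d] += y * x[d]
                    u[d] += y * c * x[d]
                b += y
                beta += y * c
            c += 1  # count every example, updated or not

    # Averaged parameters: subtract the cached terms divided by the counter.
    avg_w = [w_d - u_d / c for w_d, u_d in zip(w, u)]
    avg_b = b - beta / c
    return avg_w, avg_b
```

For example, calling it on the two one-dimensional points `([1.0], 1)` and `([-1.0], -1)` with `max_iter=5` returns a weight and bias that put the two points on opposite sides of zero.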

Test

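- return the sign of the weighted sum of the input components plus the bias, using the averaged weights and bias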
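The test step can be sketched the same way. This is a minimal sketch, assuming labels in {-1, +1} and that the weights and bias passed in are the averaged ones produced by training. The function name `averaged_perceptron_predict` is hypothetical, and mapping a score of exactly zero to -1 is a convention chosen here, not something the notes specify.

```python
from typing import Sequence


def averaged_perceptron_predict(
    weights: Sequence[float],
    bias: float,
    x: Sequence[float],
) -> int:
    """Return the predicted label (+1 or -1) for one input vector."""
    # Weighted sum of the input components plus the bias.
    activation = sum(w_d * x_d for w_d, x_d in zip(weights, x)) + bias
    # Assumed convention: a score of exactly zero is labeled -1.
    return 1 if activation > 0 else -1


# Usage with hand-picked (not trained) parameters:
label = averaged_perceptron_predict([2.0, -1.0], 0.5, [1.0, 1.0])
```

Here the score is 2.0 - 1.0 + 0.5 = 1.5, so `label` is 1.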